All:

This week we visited the library together and selected more books to test on. Over the next few weeks we will test our system on these books and decide which ones to use for our final demo.

Celine:

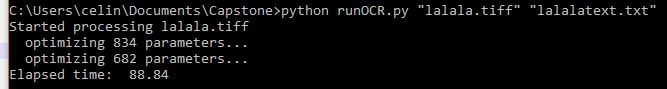

This week I focused on enabling SSH on my Windows computer, where I have been running and testing the runOCR code, and on optimizing that code. From testing with an Ethernet cable connecting my laptop and the Raspberry Pi, I've found that the Ethernet connection eliminates the download/upload time of the images and text files. However, I have only executed these commands from my laptop to the Pi, not the other way around. Over the course of the week I have been looking up how to set up OpenSSH on my laptop and make it available for the Pi to connect to, over Ethernet or the internet. Tomorrow I will go to the lab to test uploading/downloading data from the Raspberry Pi to my laptop.
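For the Pi-to-laptop direction, the Pi acts as the SSH client and the laptop (running the Windows OpenSSH server) as the host. A minimal sketch, assuming `scp` is installed on the Pi and login to the laptop is already set up; the host name, user name and destination folder below are hypothetical placeholders, not our actual configuration:

```python
import shlex
import subprocess


def build_scp_command(local_path, user, host, remote_dir, port=22):
    """Return an argv list that copies local_path to user@host:remote_dir."""
    # scp takes the port with an upper-case -P (ssh uses a lower-case -p)
    return ["scp", "-P", str(port), local_path, f"{user}@{host}:{remote_dir}"]


def push_file(local_path, user, host, remote_dir, port=22):
    """Copy one file from the Pi to the laptop; raises CalledProcessError on failure."""
    subprocess.run(build_scp_command(local_path, user, host, remote_dir, port), check=True)


if __name__ == "__main__":
    # Hypothetical names: replace with the laptop's address on the Ethernet link or WiFi.
    cmd = build_scp_command("page_001.jpg", "celine", "celine-laptop.local", "ocr_inbox/")
    print(shlex.join(cmd))
```

Passing an argument list instead of a shell string avoids quoting problems with file names, and a relative remote path such as `ocr_inbox/` is resolved against the user's home directory on the laptop.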

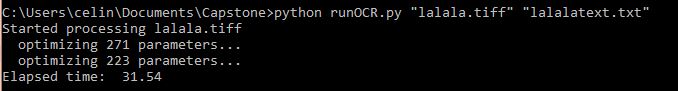

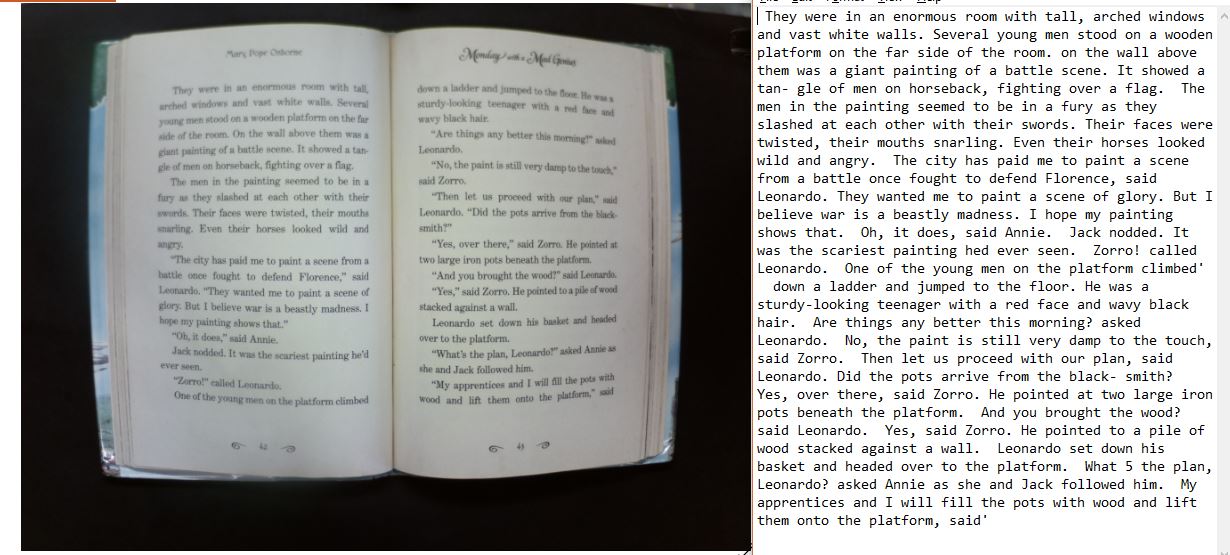

After recalling and re-examining some notes from previous signals classes, I down-sampled the image to reduce the computation time of the dewarping step. At full scale, dewarping an image once took more than 100 seconds to fully process; now it takes a little more than 30 seconds, and that is for a page set full of text.

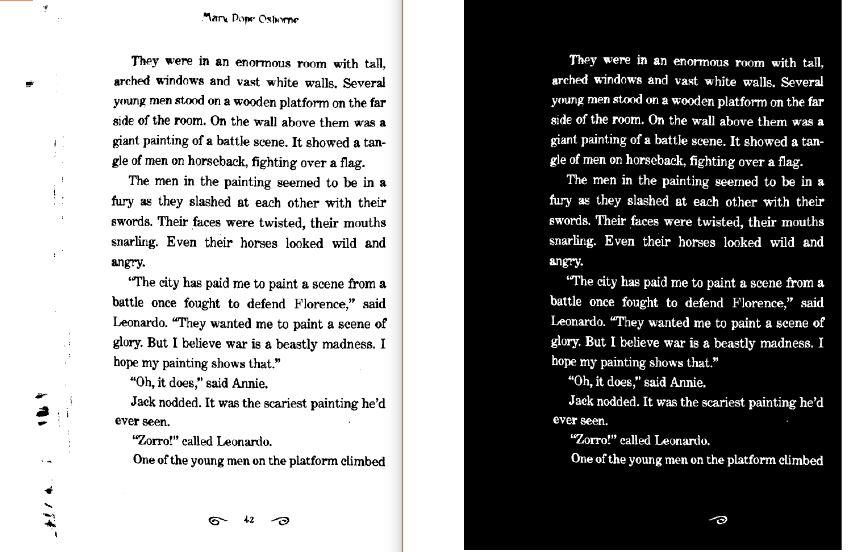

There is no decrease in accuracy, either:

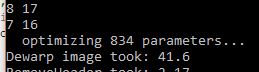

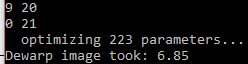

Page sets full of text have more lines, which in turn produce more data points to be analyzed. Down-sampling (decimating) the image leaves fewer data points overall, so fewer parameters need to be processed during parameter optimization, as the snapshots show.

Although this simple fix cut the computation time by around 60%, I will still look through the rest of the code for other places to optimize, mostly in the removal of images and headers. I will also test this optimized code on the Raspberry Pi tomorrow.
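Reducing resolution by a factor of k in each dimension leaves 1/k² as many pixels, which is where the speedup comes from. One common way to decimate is to average non-overlapping k×k blocks: the averaging acts as a simple low-pass (box) filter before samples are discarded, which limits aliasing. A minimal NumPy sketch; `decimate` and the factor are illustrative, and this is not necessarily how runOCR does it (OpenCV's `cv2.resize` with `interpolation=cv2.INTER_AREA` is a ready-made alternative):

```python
import numpy as np


def decimate(image: np.ndarray, factor: int) -> np.ndarray:
    """Down-sample by averaging non-overlapping factor x factor blocks.

    Works for grayscale (H, W) and color (H, W, C) arrays. Edge rows/columns
    that do not fill a whole block are cropped. Returns float64 values.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    h, w = image.shape[:2]
    h_crop, w_crop = h - h % factor, w - w % factor
    cropped = image[:h_crop, :w_crop].astype(np.float64)
    # Split each spatial axis into (block index, offset within block), then average offsets.
    blocks = cropped.reshape(h_crop // factor, factor, w_crop // factor, factor, *image.shape[2:])
    return blocks.mean(axis=(1, 3))


if __name__ == "__main__":
    page = np.zeros((3000, 2000), dtype=np.uint8)  # stand-in for a grayscale page photo
    small = decimate(page, 2)
    print(page.size, "->", small.size)  # 4x fewer pixels
```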

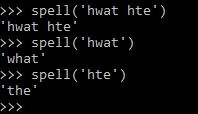

I also looked briefly at the autocorrect package for Python and found that it only works on a word-by-word basis; it does nothing to inputs of more than one word.
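One way around the single-word limitation is to correct each word of an OCR line separately and leave the spacing and punctuation untouched. A minimal sketch: `correct_word` is a placeholder for whatever single-word function the installed autocorrect version provides (not verified here), and the toy dictionary in the example is hypothetical:

```python
import re
from typing import Callable

WORD = re.compile(r"[A-Za-z]+")


def correct_text(text: str, correct_word: Callable[[str], str]) -> str:
    """Apply a single-word corrector to every alphabetic run in text.

    Spaces, punctuation and digits are not matched, so they pass through unchanged.
    """
    def fix(match: re.Match) -> str:
        word = match.group()
        fixed = correct_word(word.lower())
        # keep a leading capital if the OCR output had one
        if word[0].isupper():
            fixed = fixed[:1].upper() + fixed[1:]
        return fixed

    return WORD.sub(fix, text)


if __name__ == "__main__":
    toy = {"teh": "the", "quikc": "quick"}  # hypothetical stand-in for a real spell checker
    print(correct_text("Teh quikc fox, teh end.", lambda w: toy.get(w, w)))
```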

As a group, our main focus this week was how to get from our mid-semester demo to a final, cohesive product in the next three weeks. If the reduced processing time is still not enough to make running on the Pi worthwhile, we will need the Pi to outsource the image-processing computation. First we will get outsourcing working over Ethernet to my laptop, and after that over WiFi. Once that works, since upload/download time should be about the same whichever server we use, I will look at using AWS to run the computations. AWS has two advantages: we won't need a physical machine available whenever we want to run image processing for the Pi, and AWS has its own TTS API, Polly. We could use AWS S3 to store our images and text files, and possibly set up an AWS Lambda function that is triggered when we upload a new image and runs the image-processing code. I just set up an AWS account today, so I can begin looking into how to set that up soon.
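If we go the S3 + Lambda route, the Lambda function receives an event describing each newly uploaded object. A minimal sketch, assuming images are uploaded under a hypothetical `images/` prefix, results are written under a `text/` prefix, and `run_ocr` is a placeholder for our image-processing entry point:

```python
import posixpath
from typing import List, Tuple
from urllib.parse import unquote_plus


def s3_objects_from_event(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 object-created notification."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        # Keys arrive URL-encoded in the event (a space becomes '+'), so decode them.
        objects.append((s3_info["bucket"]["name"], unquote_plus(s3_info["object"]["key"])))
    return objects


def run_ocr(image_path: str) -> str:
    """Hypothetical placeholder for the dewarping + OCR pipeline."""
    raise NotImplementedError("plug in the image-processing code here")


def lambda_handler(event, context):
    import boto3  # included in the AWS Lambda Python runtime; imported lazily here

    s3 = boto3.client("s3")
    for bucket, key in s3_objects_from_event(event):
        name = posixpath.basename(key)
        local_path = posixpath.join("/tmp", name)  # /tmp is Lambda's writable scratch space
        s3.download_file(bucket, key, local_path)
        text = run_ocr(local_path)
        out_key = "text/" + posixpath.splitext(name)[0] + ".txt"
        s3.put_object(Bucket=bucket, Key=out_key, Body=text.encode("utf-8"))
    return {"status": "ok"}
```

Writing results under a different prefix than the one the trigger watches matters: if the trigger fired on every new object, each output file would start another invocation. The OCR dependencies would also have to be packaged for Lambda (for example as a container image), which is part of what is worth scoping out.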

In this next week, I will finish enabling the connection over Ethernet, then move to connecting to my laptop over WiFi, and at least start trying out AWS to scope out whether it is worth picking up at this point. I will also keep fiddling with my OCR code to see if I can cut down computation time further. Additionally, I will document my code and start preparing materials for our final design paper. Finally, I will collect more text outputs from image samples taken from the books to quantify the accuracy of the OCR.
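One standard way to quantify OCR accuracy is the character error rate (CER): the edit (Levenshtein) distance between the OCR output and a hand-typed reference transcription, divided by the length of the reference. The word error rate (WER) is the same computation over words. A minimal pure-Python sketch; the function names are illustrative:

```python
from typing import Sequence


def levenshtein(a: Sequence, b: Sequence) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, item_a in enumerate(a, start=1):
        cur = [i]
        for j, item_b in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                       # delete item_a
                           cur[j - 1] + 1,                    # insert item_b
                           prev[j - 1] + (item_a != item_b)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def character_error_rate(ocr_text: str, reference: str) -> float:
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return levenshtein(ocr_text, reference) / len(reference)


def word_error_rate(ocr_text: str, reference: str) -> float:
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference transcription must not be empty")
    return levenshtein(ocr_text.split(), ref_words) / len(ref_words)


if __name__ == "__main__":
    print(character_error_rate("hella world", "hello world"))
```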

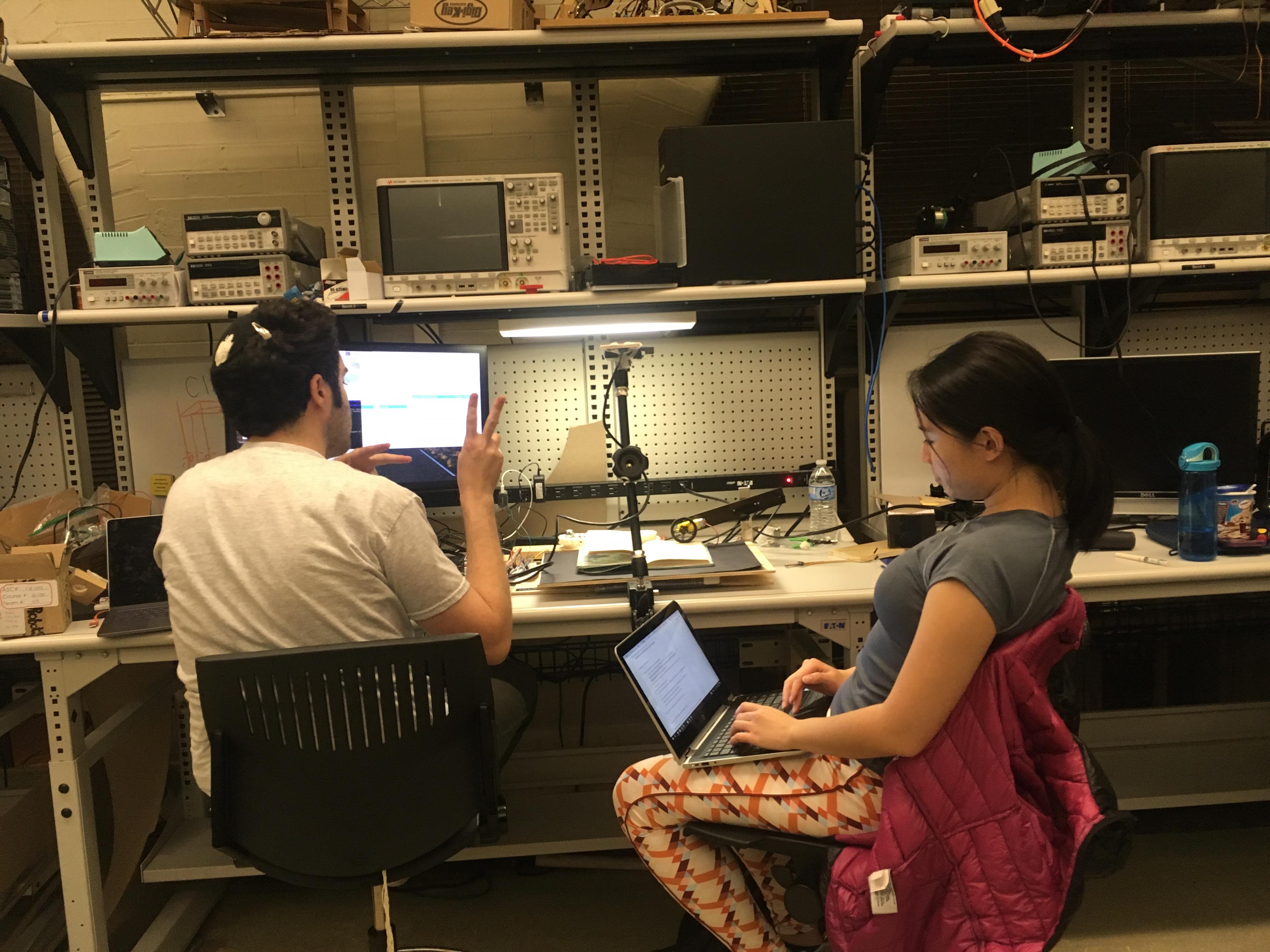

Indu:

This week my team and I planned our next steps and how we will make sure every part of our project meets our constraints as we get closer to the final demo. I spent this week building the stand, including a considerable amount of time trying to drill holes into acrylic to screw the camera in. Drilling by hand was unsuccessful, so I used SolidWorks to make a file that could be laser cut, but that did not work either. Since this is taking too much effort, I will stick with a wooden plank that has screws to hold the camera up.

In the Maker Space, I was able to build the stand, so now it just needs the camera attached, which will be done by this weekend. I also made a second wheel pivot device to flip pages in reverse. Both wheel pivot devices are now done and can be held firmly in place on the board we are using.

I also ordered extra parts to hold the page down after it has been turned: we have noticed that even when we manage to separate and turn a page, it sometimes stays standing in the air and needs something to bring it fully down.

In the coming week I will look into how to connect the microphone we ordered to the Pi and use it to start up our device, as mentioned in our stretch goals. I also plan to help Effie with text-to-speech issues as they arise.

Effie:

This week our class time was spent mostly on the ABET surveys and questions. That said, I spent considerable time outside of class soldering together the new servo hat for the Raspberry Pi and getting it working with our servos, which weren't ready for our demo last week because the hat hadn't arrived. I simplified my code considerably and got the servos turning in series, timed with the rotation of the wheel motors, so the page-flipping operation is pretty much there. Now we can hopefully just try out a range of speeds/pulse widths on the servos/motors until we find the settings that make page turning work consistently.
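For the pulse-width sweep, it helps to know how a pulse width maps onto the PWM hardware. Assuming the hat is built on the PCA9685 chip (as Adafruit's servo hat is; we have not confirmed this for ours), each PWM period is divided into 4096 ticks, so at the usual 50 Hz servo frequency (a 20 ms period) a 1500 µs pulse is 1500 / 20000 × 4096 ≈ 307 ticks. A small sketch for generating a table of candidate settings; the range and step are hypothetical:

```python
def pulse_us_to_ticks(pulse_us: float, freq_hz: float = 50.0, resolution: int = 4096) -> int:
    """Convert a pulse width in microseconds to a PWM tick count (12-bit by default)."""
    period_us = 1_000_000 / freq_hz
    if not 0 <= pulse_us <= period_us:
        raise ValueError("pulse width must fit inside one PWM period")
    return round(pulse_us * freq_hz * resolution / 1_000_000)


def sweep(start_us: int, stop_us: int, step_us: int, freq_hz: float = 50.0):
    """List (pulse width, ticks) pairs to try, from start_us to stop_us inclusive."""
    return [(p, pulse_us_to_ticks(p, freq_hz)) for p in range(start_us, stop_us + 1, step_us)]


if __name__ == "__main__":
    for pulse, ticks in sweep(1000, 2000, 100):
        print(f"{pulse} us -> {ticks} ticks")
```

Logging the tick count alongside the pulse width makes it easier to reproduce whichever setting ends up turning pages reliably.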

I rewrote the basic threading implementation so that audio can play at the same time as page flipping and text detection for the next page, but I still have more to do on that next week. I also experimented with setting up my Mac as an SSH server so that I can scp files from the Pi to my Mac (until now we could only copy in the other direction). Next week I aim to try out the text-detection code Celine wrote on my Mac (I'll have to install the dependencies), since running it on a MacBook Pro might be the fastest and easiest option: we've had trouble connecting through Windows, and the code runs too slowly on the Pi itself.
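The overlap described above is a producer/consumer pattern: the main loop flips pages and runs text detection, while a background thread speaks whatever text is ready. A minimal sketch with a bounded queue; `flip_page`, `detect_text` and `play_audio` are hypothetical stand-ins for the motor, OCR and TTS code, not our actual function names:

```python
import queue
import threading


def run_pipeline(num_pages, flip_page, detect_text, play_audio):
    """Speak each page on a background thread while the next page is flipped and detected."""
    texts = queue.Queue(maxsize=1)  # at most one finished page waits for the speaker

    def speaker():
        while True:
            text = texts.get()
            if text is None:  # sentinel: no more pages
                return
            play_audio(text)

    worker = threading.Thread(target=speaker, daemon=True)
    worker.start()
    try:
        for page in range(num_pages):
            texts.put(detect_text(page))  # blocks while the queue is full
            if page + 1 < num_pages:
                flip_page()  # may overlap with this page's audio
    finally:
        texts.put(None)  # always stop the speaker thread
        worker.join()
```

The bounded queue keeps detection from running arbitrarily far ahead of the audio, and the `finally` block makes sure the speaker thread exits even if detection fails.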

I also looked further into espeak and experimented with some other voices, which do sound better, and tried to get Google's text-to-speech library working on the Pi, without success. This coming week I aim to get Google text-to-speech working on my Mac as well. That may make more sense anyway, since we would effectively offload the intensive computation (both text detection and audio generation) onto the Mac, which can do it much faster than the Pi, and leave the Pi to handle the mechatronics of the project.
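Comparing voices is easier if the espeak call is scripted. A minimal sketch, assuming the `espeak` command-line program is installed: `-v` selects the voice (a variant such as `en+f3` is appended with `+`), `-s` sets the speed in words per minute, `-w` writes a WAV file instead of playing the audio, and `--stdin` reads the text from standard input so OCR text starting with `-` cannot be mistaken for an option. The helper names and default values are hypothetical:

```python
import shutil
import subprocess


def espeak_command(out_wav, voice="en-us", speed_wpm=175):
    """Build an espeak argv list that reads text from stdin and writes a WAV file."""
    return ["espeak", "-v", voice, "-s", str(speed_wpm), "-w", out_wav, "--stdin"]


def synthesize(text, out_wav, voice="en-us", speed_wpm=175):
    """Render text to out_wav with espeak; raises if espeak is missing or fails."""
    if shutil.which("espeak") is None:
        raise RuntimeError("espeak not found on PATH")
    subprocess.run(espeak_command(out_wav, voice, speed_wpm), input=text, text=True, check=True)
```

Calling `synthesize` with the same sentence and a few voices (for example `en-us`, `en+f3`, `en+m3`) produces WAV files that can be compared side by side.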

Lastly, as a group, we spent time mapping out our progress and clarified responsibilities going forward.