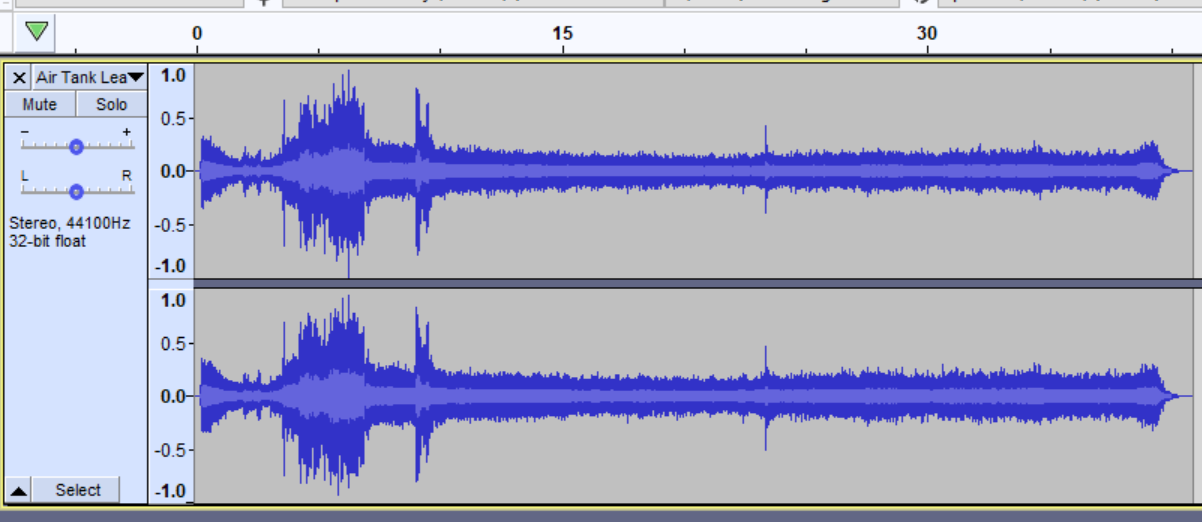

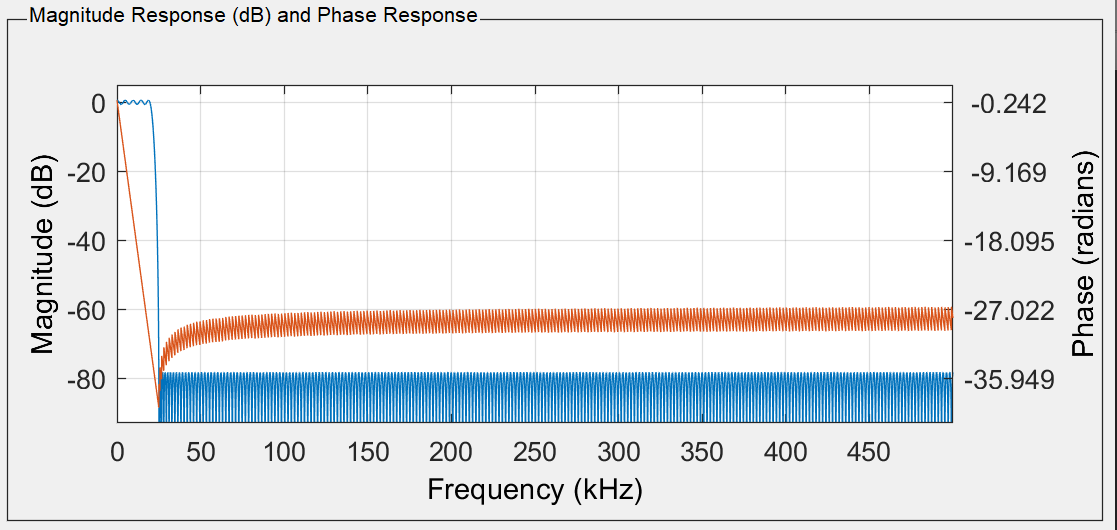

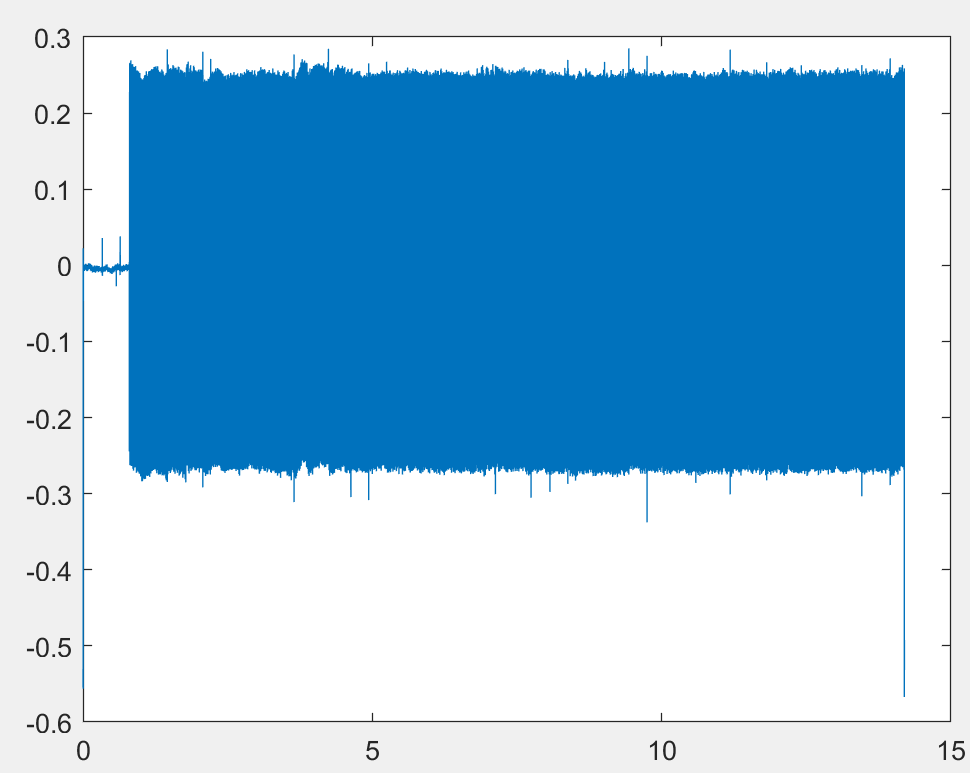

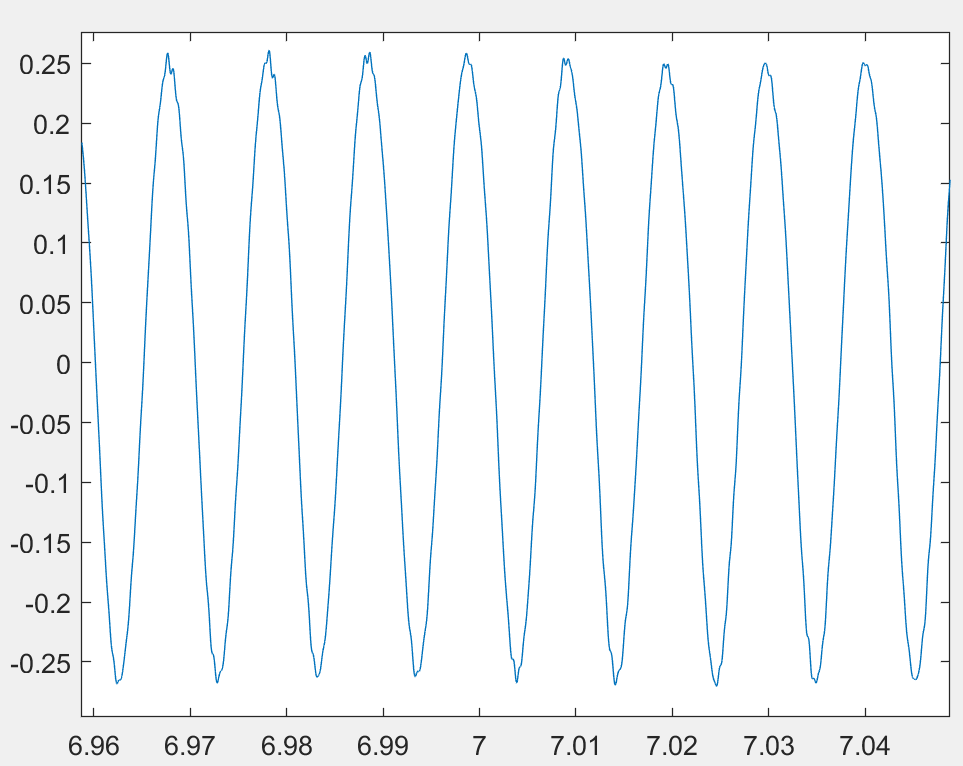

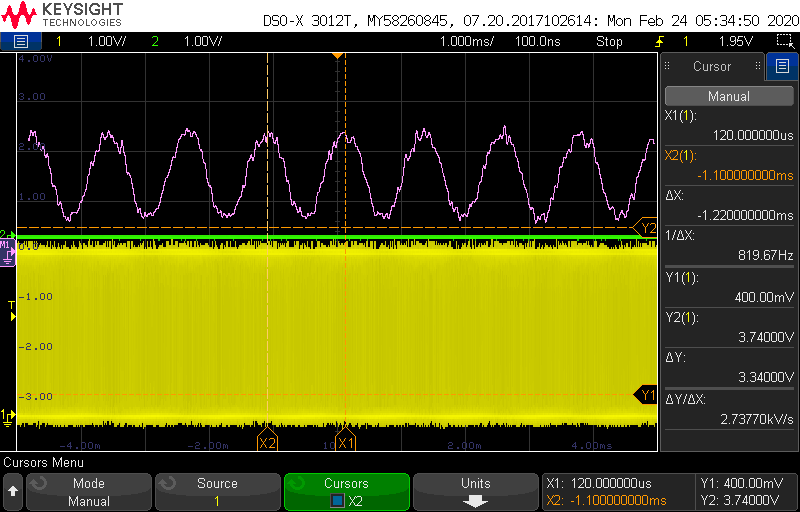

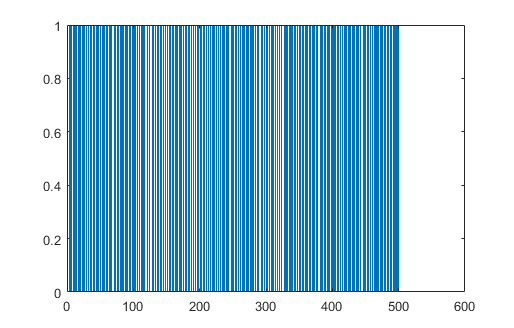

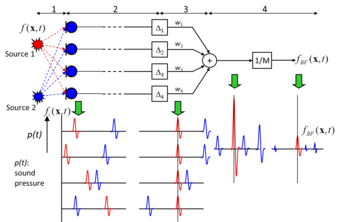

In MATLAB, Sarah and I wrote the beamforming algorithm using the sum and delay method (also known as delay-and-sum). A simplified block diagram is shown below:

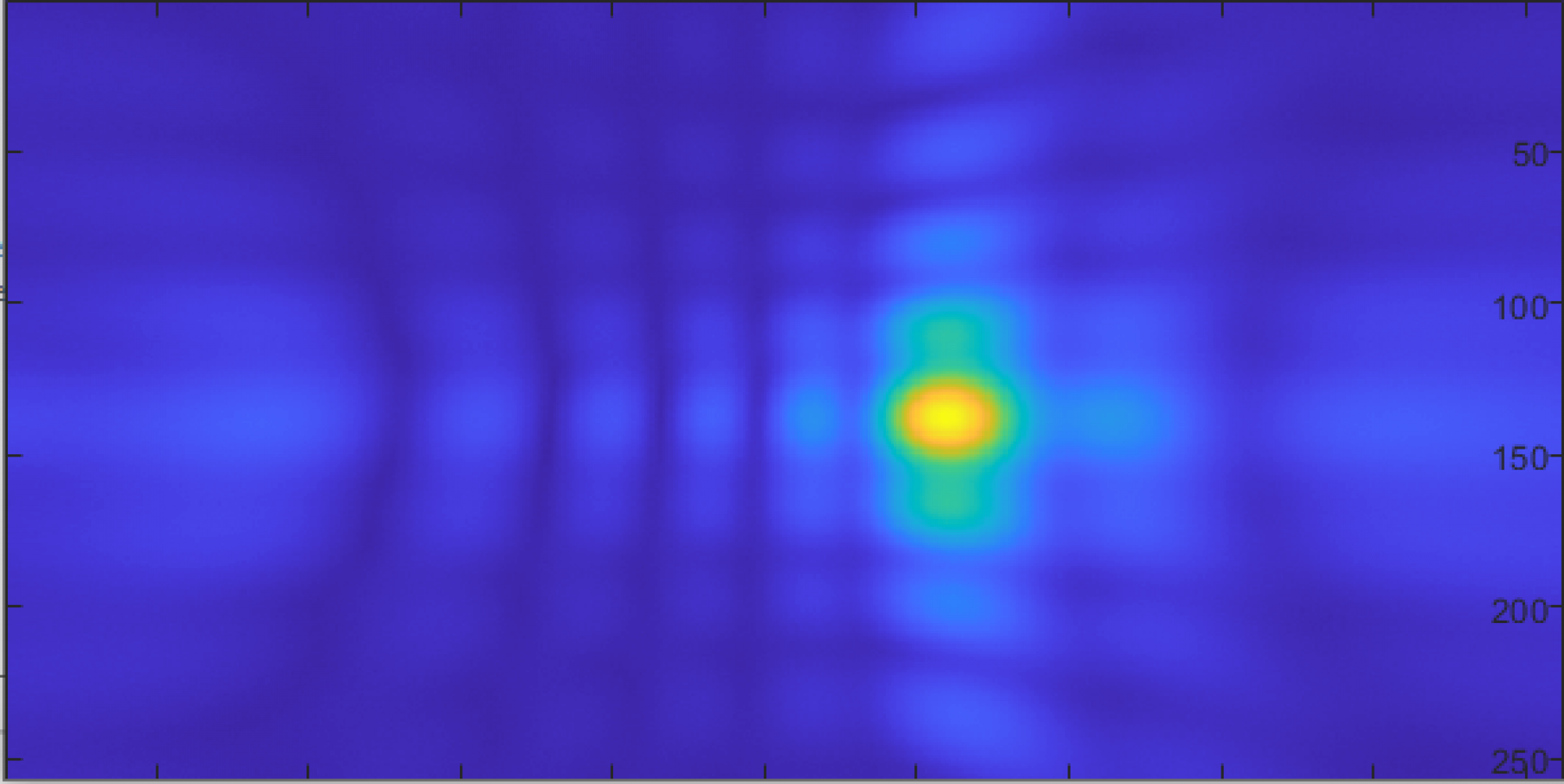

When we have an array of microphones, sound from a given spot reaches each microphone at a slightly different time, because each microphone sits at a different distance from the source. By compensating for those time differences, we can “steer” the array and focus on a very specific spot. By sweeping that spot over the area of interest, we can identify where the sound is coming from.
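To make the steering idea concrete, here is a minimal Python sketch (not our MATLAB code) of how the per-microphone delays for one focus spot could be computed. The function name `steering_delays`, the four-microphone layout, and the speed of sound of 343 m/s are all assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly room temperature)

def steering_delays(mic_positions, focus_point, c=SPEED_OF_SOUND):
    """Return the delay in seconds to apply to each microphone so that
    sound coming from focus_point lines up across all channels."""
    distances = [math.dist(mic, focus_point) for mic in mic_positions]
    farthest = max(distances)
    # Sound reaches the nearer microphones first, so they get the larger delay.
    return [(farthest - d) / c for d in distances]

# Hypothetical 4-microphone line array (metres), focused 1 m in front of its centre.
mics = [(-0.15, 0.0), (-0.05, 0.0), (0.05, 0.0), (0.15, 0.0)]
delays = steering_delays(mics, focus_point=(0.0, 1.0))
print(delays)
```

In this layout the two outer microphones are farthest from the focus spot, so they need no extra delay, while the two inner ones are delayed by a few tens of microseconds.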

The “delay” in sum and delay beamforming refers to delaying each microphone's output by an amount that depends on its distance from the spot being focused on. The delayed signals are then summed. If the sound source is where the beam is focused, the signals line up in phase and interfere constructively, so the resulting signal has a large amplitude. If the sound source is not where the beam is focused, the signals are out of phase with each other and the sum has a relatively low amplitude. Using this fact, the location of a sound source can be mapped without physically moving or rotating the microphone array. This technology is also used in 5G communications, where the cell tower uses beamforming to focus the signal in the direction of your phone for higher SNR and throughput.
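The delay, sum, and sweep steps described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not our MATLAB implementation: the microphone layout, sample rate, speed of sound, simulated noise source, and all function names (`travel_samples`, `simulate`, `beam_power`) are hypothetical, and delays are rounded to whole samples for simplicity.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s (assumed)
SAMPLE_RATE = 48_000    # Hz (assumed)

def travel_samples(mic, point):
    """Propagation time from point to mic, rounded to whole samples."""
    return round(math.dist(mic, point) / SPEED_OF_SOUND * SAMPLE_RATE)

def simulate(mics, source_pos, source_signal):
    """Fake recordings: each microphone hears the source shifted by its travel time."""
    n = len(source_signal)
    recordings = []
    for mic in mics:
        d = travel_samples(mic, source_pos)
        recordings.append([0.0] * d + source_signal[: n - d])
    return recordings

def beam_power(recordings, mics, focus):
    """Delay each channel for the given focus point, sum, and return the mean power."""
    travel = [travel_samples(mic, focus) for mic in mics]
    latest = max(travel)
    # Channels the sound reaches earlier are delayed more, so all channels line up.
    shifts = [latest - t for t in travel]
    start = max(shifts)
    n = len(recordings[0])
    total = 0.0
    for k in range(start, n):
        summed = sum(rec[k - s] for rec, s in zip(recordings, shifts))
        total += summed * summed
    return total / (n - start)

# Simulated scene: an 8-microphone line array and a noise source at (0.5, 1.0) metres.
rng = random.Random(0)
source_signal = [rng.uniform(-1.0, 1.0) for _ in range(2000)]
mics = [(-0.35 + 0.1 * i, 0.0) for i in range(8)]
true_source = (0.5, 1.0)
recordings = simulate(mics, true_source, source_signal)

# Sweep the focus spot along a line 1 m in front of the array.
candidates = [(i / 10, 1.0) for i in range(-10, 11)]
powers = {focus: beam_power(recordings, mics, focus) for focus in candidates}
best_focus = max(powers, key=powers.get)
print("loudest focus point:", best_focus)
```

Plotting `powers` against the focus position would give a one-dimensional version of the sound map: a sharp peak where the focus spot matches the source and a low floor elsewhere. A real implementation would likely use fractional delays and a 2D grid of focus points.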