This week I mainly worked on updating the FPGA firmware and the network driver on the computer side. The boards arrived yesterday, but I haven’t had time to start populating them.

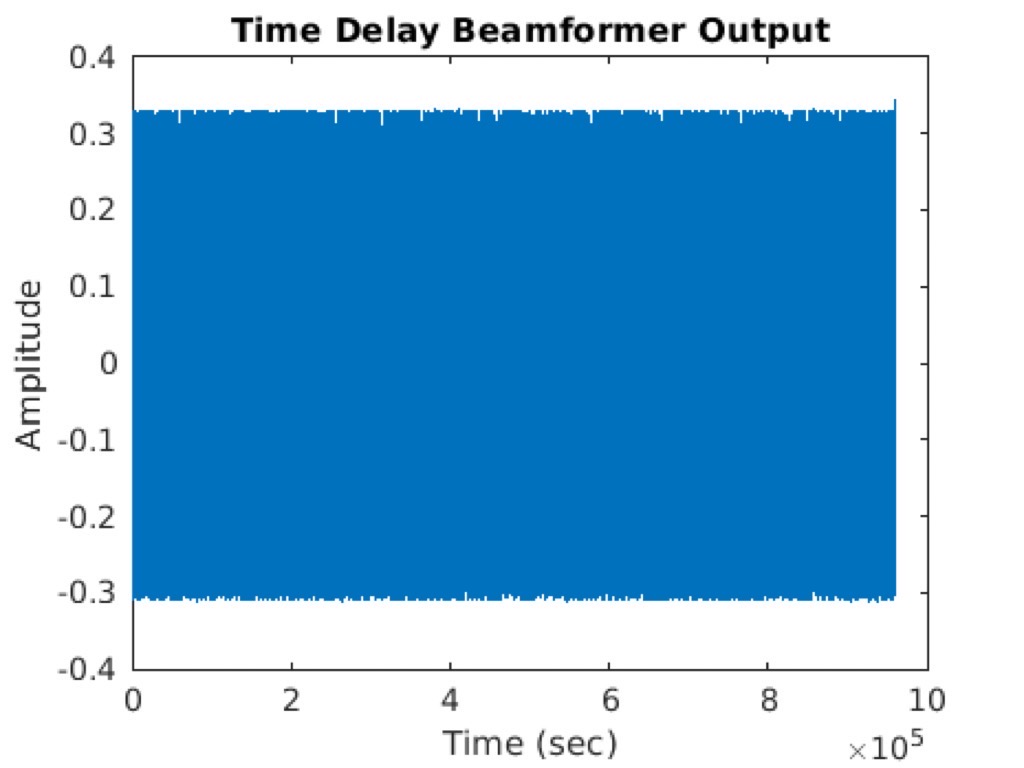

Last week I finished the final components of the network driver for the FPGA. This week I was able to get data from a pair of microphones back from it, do some very basic processing, and record it to a logfile. This seemed to work relatively well:

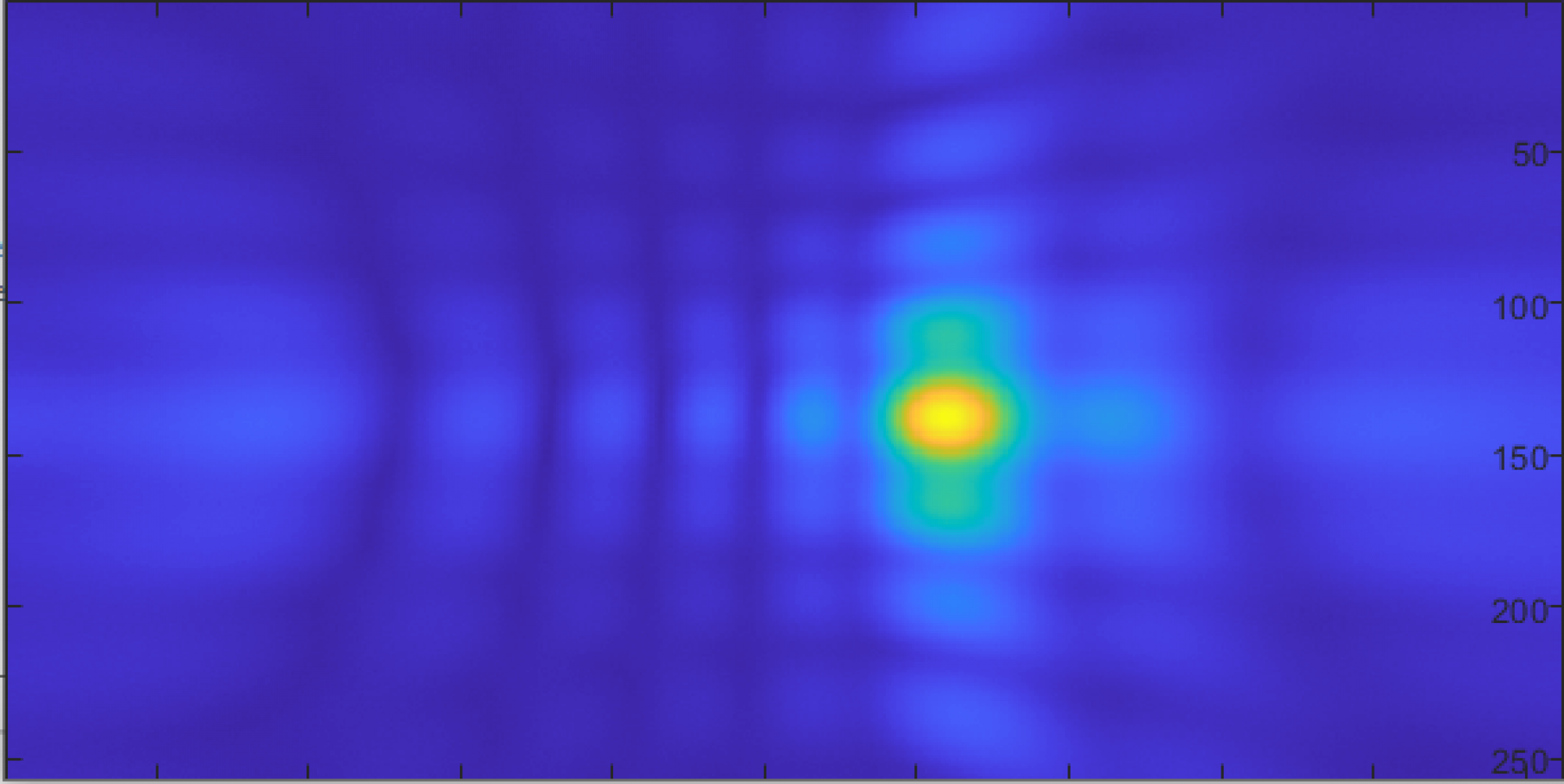

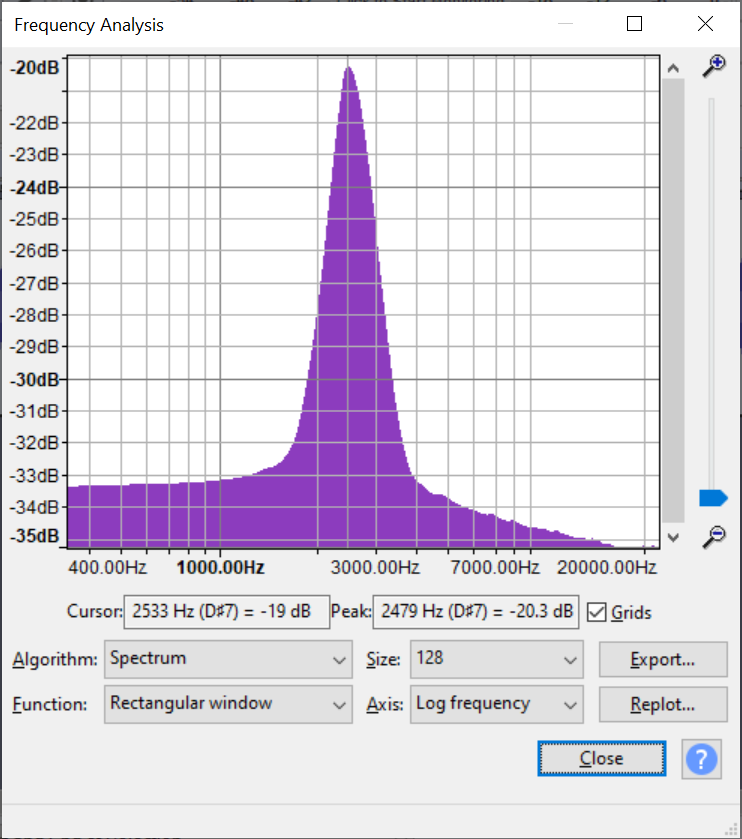

Source aligned at 90 degrees to the pair of elements (the white line peaks near 90 degrees, as it should).

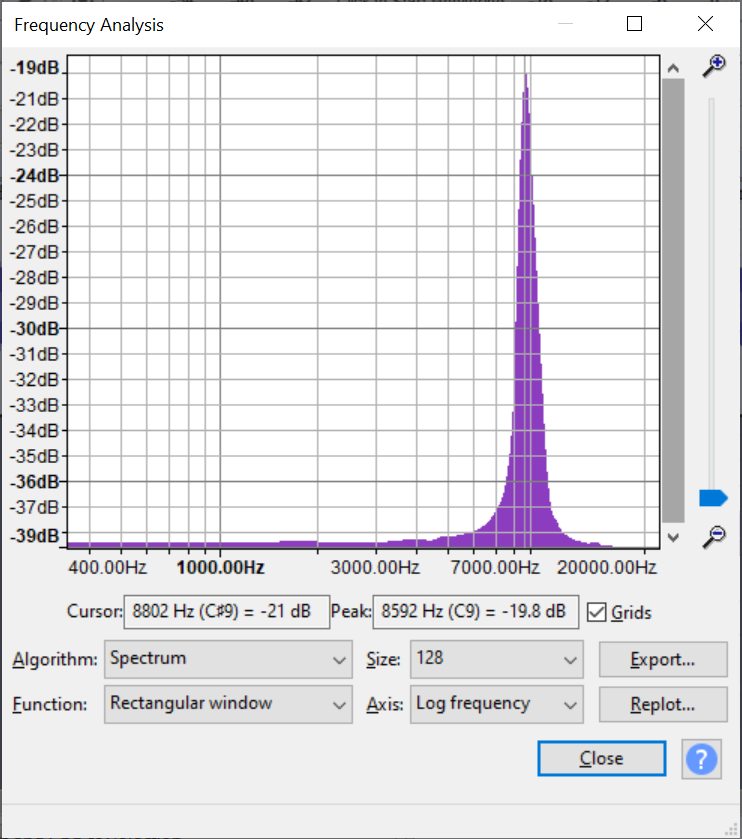

Source aligned at 0 degrees to the pair of elements (the white line has a minimum near 90 degrees, again as it should).
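The processing behind the white line isn't described in this update. Purely as a point of comparison, a textbook narrowband delay-and-sum model of a two-element pair produces the same qualitative shapes as the two captions above. The function name `pair_response`, the 343 m/s speed of sound, the 5 kHz tone, and the 3.43 cm spacing (half a wavelength at 5 kHz) are illustrative assumptions, not the actual array geometry.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, approximate value for room-temperature air (assumed)

def pair_response(source_deg, steer_deg, freq_hz, spacing_m, c=SPEED_OF_SOUND):
    """Normalized narrowband delay-and-sum power for a two-element array.

    Angles are measured from the line through both elements, so 90 degrees is
    broadside and 0 degrees is endfire. Returns values in [0, 1].
    """
    # Inter-element arrival delay for the real source and for the steering angle.
    tau_src = spacing_m * np.cos(np.radians(source_deg)) / c
    tau_steer = spacing_m * np.cos(np.radians(steer_deg)) / c
    phase = 2 * np.pi * freq_hz * (tau_src - tau_steer)
    # |1 + exp(-j*phase)|^2 / 4 simplifies to (1 + cos(phase)) / 2.
    return (1 + np.cos(phase)) / 2

if __name__ == "__main__":
    steer = np.arange(0, 181)  # steering angles, degrees
    broadside = pair_response(90, steer, 5000, 0.0343)
    endfire = pair_response(0, steer, 5000, 0.0343)
    print("broadside source peaks at", steer[np.argmax(broadside)])
    print("endfire source has its minimum at", steer[np.argmin(endfire)])
```

Under these assumptions a broadside source peaks at 90 degrees, and an endfire source drops to zero at 90 degrees. At other spacings or frequencies the endfire minimum moves, so where it lands in a real plot depends on the array geometry.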

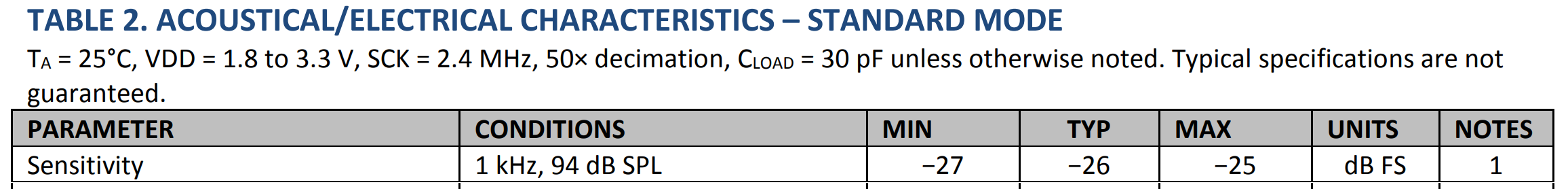

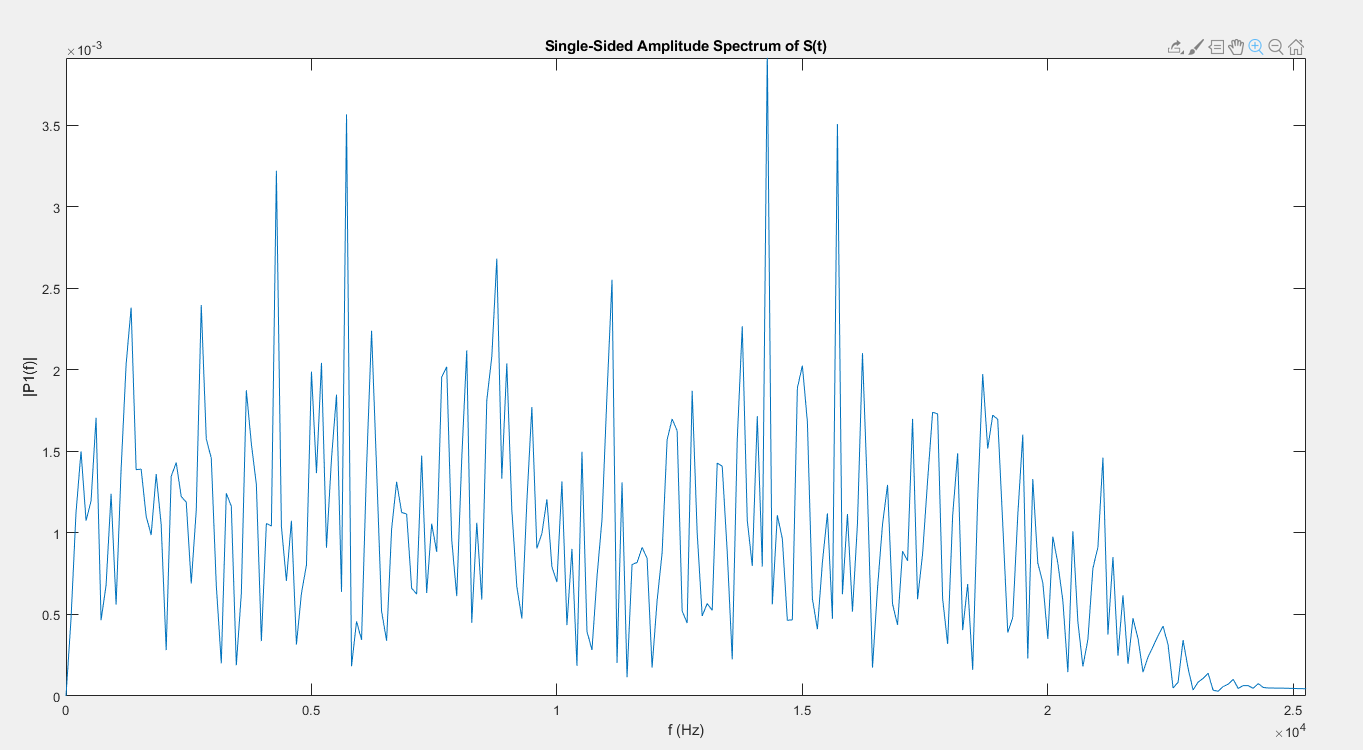

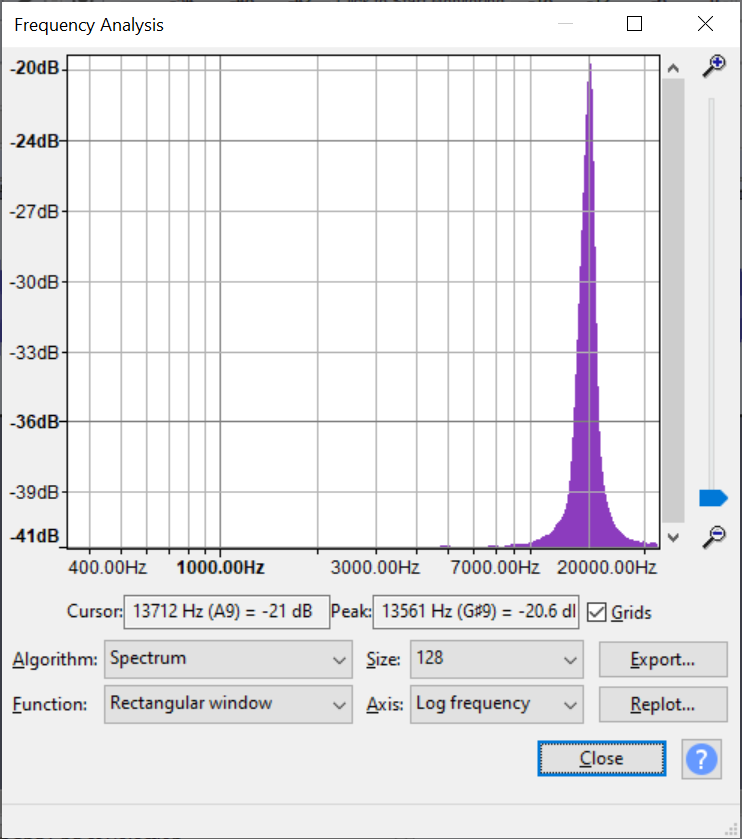

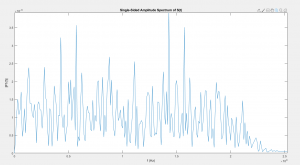

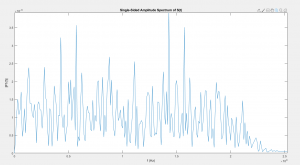

However, these early tests did not reveal an existing problem in the network driver. Initially, the only data transmitted was the raw PDM signal, which varies essentially randomly over time, so as long as some data is getting through, it is very difficult to spot problems without processing it first. Several days later, while testing some of the processing algorithms (see the team’s and Sarah’s updates), it quickly became apparent that something in the pipeline was not working. After checking that the code for reading logfiles worked, I tried graphing an FFT of the audio signal from one microphone. It should have had a single, very strong peak at 5 kHz, but instead it had peaks and noise all over the spectrum.
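A rough way to reproduce this kind of check offline: convert the 1-bit PDM stream to PCM by boxcar-averaging and decimating, then find the largest peak in a windowed FFT. The 3.072 MHz PDM clock and the decimation factor of 64 are hypothetical, the boxcar filter is much cruder than a proper CIC/FIR decimator, and `sigma_delta_encode` exists only to generate synthetic PDM test data; none of these names come from the project's code.

```python
import numpy as np

def sigma_delta_encode(x):
    """First-order sigma-delta modulator: samples in [-1, 1] -> 0/1 PDM bits.
    Only used here to generate synthetic test data."""
    bits = np.empty(len(x), dtype=np.uint8)
    acc, y = 0.0, -1.0
    for i, v in enumerate(np.asarray(x, dtype=np.float64).tolist()):
        acc += v - y                   # integrate the input/output error
        y = 1.0 if acc >= 0 else -1.0  # 1-bit quantizer
        bits[i] = 1 if y > 0 else 0
    return bits

def pdm_to_pcm(bits, decimation=64):
    """Crude PDM -> PCM: map bits to +/-1, average each block of `decimation`
    bits (a boxcar filter), and keep one sample per block."""
    x = np.asarray(bits, dtype=np.float64) * 2.0 - 1.0
    n = len(x) // decimation * decimation  # drop any partial final block
    return x[:n].reshape(-1, decimation).mean(axis=1)

def dominant_frequency(samples, sample_rate):
    """Frequency in Hz of the largest non-DC peak in a Hann-windowed spectrum."""
    x = np.asarray(samples, dtype=np.float64)
    x = x - x.mean()
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sample_rate)
    spectrum[0] = 0.0  # ignore any residual DC
    return freqs[np.argmax(spectrum)]

if __name__ == "__main__":
    pdm_rate = 3_072_000               # hypothetical PDM clock, Hz
    decimation = 64                    # 3.072 MHz / 64 = 48 kHz PCM
    t = np.arange(153_600) / pdm_rate  # 50 ms of samples
    bits = sigma_delta_encode(0.5 * np.sin(2 * np.pi * 5000 * t))
    pcm = pdm_to_pcm(bits, decimation)
    print(dominant_frequency(pcm, pdm_rate / decimation))
```

On a gap-free synthetic stream this reports a value at or very near 5 kHz. Silently dropped packets splice non-adjacent stretches of the bitstream together, and the resulting discontinuities spread energy across the spectrum, which is consistent with what the real capture showed.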

I eventually replaced some of the unused microphone data channels with timestamps, and tracked the problem down to the network driver dropping almost 40% of the packets. Wireshark confirmed that the packets were reaching the computer, so the losses came from somewhere in the Java networking libraries I had been using. I’ve started writing a replacement driver in C using the standard networking libraries, but haven’t had time to finish it yet.
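The timestamp check can be sketched as a gap count over per-packet counter values. This assumes (hypothetically) that each packet carries a counter that advances by a fixed step per packet, that packets arrive in order, and that the counter wraps at 32 bits; the real timestamp format in the firmware isn't described here, and `count_dropped` / `loss_fraction` are illustrative names.

```python
def count_dropped(stamps, step=1, bits=32):
    """Count packets missing from a list of per-packet counter values.

    Assumes each packet's counter advances by exactly `step`, packets arrive
    in order, and the counter wraps around at 2**bits.
    """
    modulus = 1 << bits
    dropped = 0
    for prev, cur in zip(stamps, stamps[1:]):
        delta = (cur - prev) % modulus  # handles counter wraparound
        if delta == 0 or delta % step:
            raise ValueError(f"unexpected jump from {prev} to {cur}")
        dropped += delta // step - 1    # a jump of k*step means k-1 missing packets
    return dropped

def loss_fraction(stamps, step=1, bits=32):
    """Fraction lost out of the packets expected between the first and last received."""
    dropped = count_dropped(stamps, step, bits)
    expected = len(stamps) + dropped
    return dropped / expected if expected else 0.0

if __name__ == "__main__":
    received = [0, 1, 2, 5, 6, 9]  # example counters with two gaps
    print(count_dropped(received), loss_fraction(received))  # prints: 4 0.4
```

Computing the fraction against packets expected, rather than packets received, is what makes a result like 0.4 read directly as "40% of packets dropped".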

Part of the solution may be to reduce the packet rate by making each packet larger. While standard Ethernet essentially arbitrarily limits packets to a 1500-byte payload, most network hardware also supports “jumbo frames” of up to 9000 bytes. According to this paper (https://arxiv.org/pdf/1706.00333), increasing the packet size above about 2000 bytes should substantially lower the error rate. So far I’ve been able to get the packet size up to 2400 bytes using jumbo frames, but I haven’t finished the network driver needed to test it.
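To put rough numbers on the trade-off, the packet rate scales inversely with the payload carried per packet. The sketch assumes the data travels over IPv4/UDP (28 header bytes counted against the MTU) and uses a hypothetical stream of 16 one-bit PDM channels at 3.072 MHz (49.152 Mbit/s); neither the protocol nor that rate is stated in this update, and `packets_per_second` is an illustrative name.

```python
import math

# Assumed per-packet header bytes counted against the MTU: IPv4 (20) + UDP (8).
IPV4_UDP_HEADER_BYTES = 28

def packets_per_second(data_rate_bps, mtu_bytes, header_bytes=IPV4_UDP_HEADER_BYTES):
    """Packets/s needed to carry data_rate_bps when every packet is filled to mtu_bytes."""
    payload_bytes = mtu_bytes - header_bytes
    if payload_bytes <= 0:
        raise ValueError("MTU too small for the headers")
    return math.ceil(data_rate_bps / (8 * payload_bytes))

if __name__ == "__main__":
    rate = 16 * 3_072_000  # hypothetical: 16 one-bit PDM channels at 3.072 MHz
    for mtu in (1500, 2400, 9000):
        print(mtu, packets_per_second(rate, mtu))
    # 1500 -> 4174, 2400 -> 2591, 9000 -> 685 packets per second
```

Under these assumptions, going from 1500 to 2400 bytes cuts the packet rate by roughly 38%, and full 9000-byte jumbo frames by about 84%.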

Next week I plan to focus mainly on hardware, and possibly finish the network driver. As a stopgap, I’ve been able to capture data with Wireshark, and I wrote a short program to translate those capture files into the logfile format we’ve been using.
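The conversion program isn't shown here and the logfile format isn't described, so the following only sketches the capture-parsing half. It assumes the capture is saved in the classic libpcap format (Wireshark saves pcapng by default; `editcap -F pcap` can convert), Ethernet link type, untagged IPv4, and UDP transport; `iter_pcap_records` and `udp_payload` are hypothetical names.

```python
import struct

# Classic libpcap magic bytes -> (struct byte-order prefix, timestamp divisor).
_PCAP_MAGICS = {
    b"\xd4\xc3\xb2\xa1": ("<", 1e6),  # little-endian, microsecond timestamps
    b"\xa1\xb2\xc3\xd4": (">", 1e6),  # big-endian, microsecond timestamps
    b"\x4d\x3c\xb2\xa1": ("<", 1e9),  # little-endian, nanosecond timestamps
    b"\xa1\xb2\x3c\x4d": (">", 1e9),  # big-endian, nanosecond timestamps
}
LINKTYPE_ETHERNET = 1

def iter_pcap_records(data):
    """Yield (timestamp_seconds, frame_bytes) for each packet in a classic pcap file."""
    try:
        order, ts_div = _PCAP_MAGICS[bytes(data[:4])]
    except KeyError:
        raise ValueError("not a classic pcap file (convert pcapng first)") from None
    (linktype,) = struct.unpack(order + "I", data[20:24])
    if linktype & 0xFFFF != LINKTYPE_ETHERNET:
        raise ValueError(f"unsupported link type {linktype}")
    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(data):
        ts_sec, ts_frac, incl_len, _orig_len = struct.unpack(
            order + "IIII", data[offset:offset + 16])
        offset += 16
        yield ts_sec + ts_frac / ts_div, bytes(data[offset:offset + incl_len])
        offset += incl_len

def udp_payload(frame, dst_port=None):
    """Return the UDP payload of an untagged Ethernet/IPv4 frame, or None.

    IP fragments are not reassembled, so datagrams larger than the MTU
    (e.g. 2400-byte packets sent without jumbo frames) are skipped.
    """
    if len(frame) < 14 + 20 + 8:
        return None
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != 0x0800:  # IPv4 only; VLAN-tagged frames are not handled
        return None
    ip = frame[14:]
    ihl = (ip[0] & 0x0F) * 4
    (flags_frag,) = struct.unpack("!H", ip[6:8])
    if ip[9] != 17 or flags_frag & 0x3FFF:  # not UDP, or part of a fragment
        return None
    udp = ip[ihl:]
    if len(udp) < 8:
        return None
    port, length = struct.unpack("!HH", udp[2:6])
    if dst_port is not None and port != dst_port:
        return None
    return udp[8:length]

if __name__ == "__main__":
    import sys
    with open(sys.argv[1], "rb") as f:
        for ts, frame in iter_pcap_records(f.read()):
            payload = udp_payload(frame)
            if payload is not None:
                print(f"{ts:.6f} {len(payload)} bytes")  # replace with the real logfile writer
```

Parsing the capture directly like this sidesteps the receiving application entirely, which is why it works as a stopgap while the new driver is unfinished.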