This week was a great iteration on last week’s design-focused work. Previously, the packages and components involved were starting to come together; this week they were decided. We also held detailed discussions on how these players speak to each other, both in protocol and in general workflow, and ironed out those details. These were big steps toward the clarity needed to build a performant and scalable system.

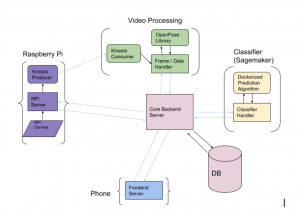

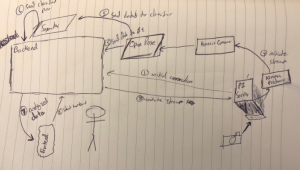

A major focus this week was communication protocols. In our current design (shown in the diagram), we plan to use HTTP requests and responses for most of the intra-system messaging. This well-established and flexible protocol seems ideal for keeping our communication straightforward. Blue arrows mark the communications we believe will be HTTP, and black arrows mark those we believe can be handled through internal calls.
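To make the HTTP side concrete, here is a minimal sketch of one blue-arrow exchange: the Core Backend Server POSTs a JSON command to the Raspberry Pi and reads back a JSON acknowledgement. It uses only Python's standard library and runs both ends on localhost. The `/stream/start` path, the `session` field, and the response shape are hypothetical placeholders, not endpoints we have defined yet.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import Request, urlopen


class PiCommandHandler(BaseHTTPRequestHandler):
    """Hypothetical Pi-side handler that accepts a JSON command from the backend."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        command = json.loads(self.rfile.read(length))
        # In the real system, this is where the Pi would begin streaming to Kinesis.
        body = json.dumps({"status": "streaming", "session": command["session"]}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def send_command(url, payload):
    """Backend side: POST a JSON command and return the decoded JSON response."""
    data = json.dumps(payload).encode()
    req = Request(url, data=data, headers={"Content-Type": "application/json"}, method="POST")
    with urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())


def demo():
    """Run the hypothetical Pi server locally and send it one start command."""
    server = HTTPServer(("127.0.0.1", 0), PiCommandHandler)  # port 0 picks a free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/stream/start"
        return send_command(url, {"session": "demo-session"})
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` returns `{'status': 'streaming', 'session': 'demo-session'}`. Plain JSON over HTTP keeps each exchange easy to test in isolation, which is much of the appeal of the protocol for this design.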

Throughout this detailed design process, we also got a clear idea of which servers need to be live and what each of them must do. The Raspberry Pi server must be able to initiate a connection with the backend as well as stream content to Kinesis. The Frame / Data handler must be able to take a Kinesis video stream, run it through the OpenPose library, and ferry the resulting data to the Core Backend Server. The Core Backend Server has a host of responsibilities, including but not limited to communicating with the frontend, managing a user session, prompting the classifier, and correcting form. The Classifier Server must be able to use the dockerized classification algorithm to classify OpenPose data and send the results to the backend.
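As an illustration of what the Frame / Data handler might ferry to the Core Backend Server, the sketch below turns one frame of OpenPose's JSON output (which stores each detected person's body keypoints as a flat `pose_keypoints_2d` list of x, y, confidence values) into triples and serializes them as an HTTP request body. The `PoseFrame` message, its fields, and the single-person assumption are hypothetical choices for this sketch, not a settled format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)


@dataclass
class PoseFrame:
    """Hypothetical message sent from the Frame / Data handler to the backend."""
    session_id: str
    frame_index: int
    keypoints: List[Keypoint]


def parse_openpose_frame(raw_json: str, session_id: str, frame_index: int) -> PoseFrame:
    """Group one OpenPose JSON frame's flat keypoint list into (x, y, c) triples.

    Assumes a single person in view; a frame with nobody detected yields no keypoints.
    """
    data = json.loads(raw_json)
    people = data.get("people", [])
    if not people:
        return PoseFrame(session_id, frame_index, [])
    flat = people[0]["pose_keypoints_2d"]
    triples = [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]
    return PoseFrame(session_id, frame_index, triples)


def to_backend_payload(frame: PoseFrame) -> bytes:
    """Serialize a PoseFrame as the JSON body of an HTTP request to the backend."""
    return json.dumps(asdict(frame)).encode()
```

Keeping the confidence value alongside each coordinate lets the classifier or the backend decide later how to treat poorly detected joints, rather than discarding them at the handler.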

Next week, I hope to dig into the duties of the Core Backend Server, which means rigidly defining everything we expect the backend to do. The step after that is to write high-level documentation defining all the endpoints for each subsystem. This list will be very useful for delegating work and scheduling.

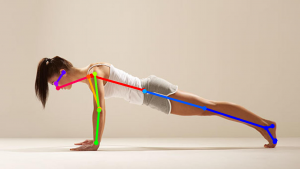

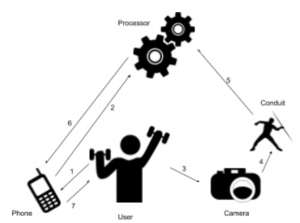

the whole team. I was specifically looking into the high-level architecture of our system: what components we need, how they interact, and how they communicate. Coming into this week, we had a rough idea of the involved components, as can be seen in the left figure, but as a team we wanted to take this high-level model and start deciding what will actually fill the role of each part. I looked into how to link the Raspberry Pi to AWS Kinesis, which would fill the role of the conduit, and it turns out AWS provides a tutorial on how to do so. The Pi can act as a Kinesis Producer and the AWS server as the Kinesis Consumer, streaming our video straight into processing. I then sketched how the components can interact in an average use case. The Pi first needs to link with the backend and establish its connection to AWS Kinesis. The user then starts a workout through the frontend, which prompts the backend to tell the Pi to start a stream. That stream is sent through Kinesis for OpenPose processing, and the resulting data can be sent both to AWS SageMaker, which we plan to use to run our classifier, and to the backend for user feedback. I spent this week ironing out these details of the flow to make sure that the technologies we want to use can work together and that the architecture is clear.
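The average use case above can be sketched as an ordered sequence of steps. In this hedged, self-contained version, a `queue.Queue` stands in for the Kinesis stream (a Pi thread is the producer, the processing loop is the consumer), and string placeholders stand in for OpenPose output, the SageMaker classifier, and backend feedback. None of the real AWS or OpenPose APIs are called, and `run_workout` is a hypothetical name.

```python
import queue
import threading
from typing import List

END_OF_STREAM = None  # sentinel the producer sends when the workout ends


def run_workout(num_frames: int) -> List[str]:
    """Simulate one workout session and return the ordered event log."""
    log: List[str] = []
    stream = queue.Queue()  # stand-in for the Kinesis video stream

    # 1. The Pi links with the backend and sets up its stream connection.
    log.append("pi: registered with backend")
    # 2. The user starts a workout in the frontend; the backend tells the Pi to stream.
    log.append("frontend: start workout")
    log.append("backend: tell pi to start stream")

    def pi_producer():
        # The Pi acts as the producer, pushing frames into the stream.
        for i in range(num_frames):
            stream.put(f"frame-{i}")
        stream.put(END_OF_STREAM)

    producer = threading.Thread(target=pi_producer)
    producer.start()

    # 3. The consumer runs pose estimation and fans results out to two places.
    while (frame := stream.get()) is not END_OF_STREAM:
        keypoints = f"keypoints({frame})"  # placeholder for OpenPose output
        log.append(f"classifier: {keypoints}")  # stand-in for the SageMaker classifier
        log.append(f"backend: feedback on {keypoints}")  # user feedback path
    producer.join()
    return log
```

Because only the consumer loop writes to the log after the stream starts, the order of events is deterministic, which makes the sketch easy to check against the intended flow.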