Apart from the final presentations, I spent this week optimizing our pipeline to decrease latency, which is an important metric for our use case; we now achieve about 25 frames per second on average. Additionally, I am working with Neeraj to improve our model’s performance on a larger set of classes (we originally had 100 but are now pushing towards 2000). We found a couple of mistakes in how we trained our previous model that affected its real-time performance, such as training on frames that MediaPipe did not recognize properly and using a static batch size. Thus, we are changing the pre-processing of the data and retraining the model. I am on schedule for my deliverables and we hope to have a fully finished product by early next week. After I integrate the pipeline with Sandra’s LLM API code, I will work to implement the project on the Jetson.
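As a rough illustration of the pre-processing change, here is a minimal Python sketch that drops frames where no landmarks were detected and discards clips with too few usable frames. The function name, the `None` convention for an undetected frame, and the `min_valid_ratio` threshold are all assumptions made for this example, not our actual training code.

```python
from typing import List, Optional, Sequence

# Hypothetical layout: one flat list of landmark coordinates per frame,
# or None when the pose estimator detected nothing in that frame.
Landmarks = List[float]


def drop_undetected_frames(
    frames: Sequence[Optional[Landmarks]],
    min_valid_ratio: float = 0.5,  # assumed cut-off, not a tuned value
) -> Optional[List[Landmarks]]:
    """Keep only frames with landmarks; reject clips that are mostly empty."""
    kept = [frame for frame in frames if frame is not None]
    if not frames or len(kept) / len(frames) < min_valid_ratio:
        return None  # too little usable data to train on
    return kept


clip = [[0.1, 0.2], None, [0.3, 0.4]]
cleaned = drop_undetected_frames(clip)  # two of the three frames survive
```

Filtering at this stage keeps the classifier from learning on placeholder or missing landmarks that it would rarely see in a clean live stream.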

Team’s Report for 4/20/24

Our project design has changed a lot over the last two weeks. Since the pose estimation model was not quantizable for the DPU, it could not be efficiently accelerated on the FPGA. For this reason, even after improving the inference timings of the HPE model, it makes more sense in terms of data latency to run both the HPE and the classification model directly on the Jetson. This was one of our backup plans when we first decided on the project. There are no additional costs to this change. We are currently finishing the integration of the project and will then measure the performance of our final product. One risk is that if the Llama LLM API does not end up working, we will have to quickly switch to another LLM API such as GPT4. There is no updated schedule unless we cannot finish the final demo in the next couple of days.

Kavish’s Status Report for 4/20/24

Over the last two weeks, I worked a lot on the FPGA development, which has led to significant changes in our overall project. The first thing I realized, after many attempts, was that our pose estimation model (MediaPipe) is not easily quantizable and deployable to my FPGA’s DPU. I was able to deploy another pose estimation model that used only supported operations and functions; however, since our classification model (SPOTER) requires MediaPipe landmarks from both hands and pose, this was no longer a feasible option to pursue. Thus, I began optimizing and finalizing the inference of the MediaPipe model as much as I could and then began integrating it with Neeraj’s classification model. I designed the system structure and am currently trying to finish all of the integration. I hope to have the final pipeline working by tonight so that we can do all of the testing and measurements tomorrow. We are a little behind schedule, but by putting in enough time over the next couple of days we hope to finish the final demo.

What I have learned: I have learned a lot of new tools for FPGA development, in particular how to use Vivado and Vitis to synthesize designs and deploy them on DPUs. I also learned a lot of the basics behind machine learning models like CNNs, alongside libraries like PyTorch. To do so, I relied heavily on online resources like YouTube and research papers. Many times I also followed along with articles written by individuals who have tried to develop small projects on Kria boards.

Kavish’s Status Report for 4/6/24

This week was our interim demo and I finished two subsystems for it: HPE on the FPGA and the live viewer application for displaying the results. I have already discussed the live viewer application over the last couple of status reports. For the demo, I implemented a holistic MediaPipe model for human pose estimation (full upper body tracking) in software on the Kria SOM. This model takes in a live video stream from a USB camera and outputs the detected vector points in JSON format through UDP over Ethernet. This implementation is unoptimized and not yet accelerated on the fabric. Over the next two weeks I plan on accelerating this model on the fabric using the Vitis and Vivado tools and integrating it with the Jetson. I am not behind schedule, but depending on the difficulties I might have to put more hours into the project. By next week, I hope to have made significant progress on putting the model on the DPU, with maybe a few kinks left to solve.
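To make the output format concrete, here is a minimal Python sketch of packing keypoints into a JSON message and sending it as a UDP datagram. The field names (`id`, `landmarks`), the `(x, y, z)` point layout, and the helper names are assumptions for illustration; the exact message format used in the demo may differ.

```python
import json
import socket
from typing import List, Tuple

Point = Tuple[float, float, float]  # assumed (x, y, z) per keypoint


def encode_landmarks(frame_id: int, points: List[Point]) -> bytes:
    """Serialize one frame's keypoints as a UTF-8 JSON payload."""
    message = {"id": frame_id, "landmarks": [list(p) for p in points]}
    return json.dumps(message).encode("utf-8")


def decode_landmarks(payload: bytes) -> Tuple[int, List[Point]]:
    """Inverse of encode_landmarks, as the receiving side would run it."""
    message = json.loads(payload.decode("utf-8"))
    return message["id"], [tuple(p) for p in message["landmarks"]]


def send_landmarks(
    sock: socket.socket,
    address: Tuple[str, int],
    frame_id: int,
    points: List[Point],
) -> None:
    """Send one frame's keypoints as a single UDP datagram."""
    sock.sendto(encode_landmarks(frame_id, points), address)


# Usage (address and port are placeholders):
# sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# send_landmarks(sock, ("192.168.1.10", 5005), 0, [(0.5, 0.25, 0.0)])
```

Since UDP gives no delivery or ordering guarantee, carrying a frame ID in every message lets the receiver detect drops and match each message to its frame.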

Verifying Subsystem (HPE on FPGA): Once I finish the implementation, there are two main ways I will verify my subsystem. The first is measuring the latency. This should be fairly simple benchmarking: I will run pre-recorded and live video streams and measure the end-to-end delay until the model output. This will help me understand where to improve the speed of this subsystem. The second is testing the accuracy of the model using PCK (percentage of correct keypoints). This means I will run a pre-recorded, labeled video stream through the subsystem and check that each predicted keypoint is within a threshold distance of its true keypoint (50% of the head bone link).
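The PCK check described above can be sketched in a few lines of Python. This is only an illustration under assumed conventions: keypoints are 2D `(x, y)` tuples in pixels, the head bone link length is supplied by the caller, and the function name is made up for this example.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def pck(
    predicted: Sequence[Point],
    truth: Sequence[Point],
    head_length: float,
    alpha: float = 0.5,  # fraction of the head bone link used as threshold
) -> float:
    """Fraction of predicted keypoints within alpha * head_length of truth."""
    if len(predicted) != len(truth):
        raise ValueError("predicted and truth must have the same length")
    if not truth:
        raise ValueError("at least one keypoint is required")
    threshold = alpha * head_length
    correct = sum(
        1 for p, t in zip(predicted, truth) if math.dist(p, t) <= threshold
    )
    return correct / len(truth)


# Two of three keypoints fall within 2 px (0.5 * head length of 4 px).
score = pck([(0, 0), (10, 10), (5, 5)], [(0, 1), (10, 20), (5, 5)], 4.0)
```

Averaging this score over all labeled frames gives one accuracy number per video.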

Team’s Status Report for 4/6/24

After our demo this week, the two main risks we face are accelerating the HPE on the FPGA with a low enough latency and ensuring that our classification model has a high enough accuracy. Our contingency plan for HPE remains the same: if the FPGA acceleration does not make it fast enough, we will switch to accelerating it on the GPU of our Jetson Nano. As for our classification model, our contingency is retraining it on an altered dataset with better parameter tuning. Our subsystems and main idea have not changed yet. No updated schedule is necessary; the one submitted on the Slack channel for the interim demo (seen below) is correct.

Validation: We need to check for two main things when doing end-to-end testing: latency and accuracy. For latency, we plan to run pre-recorded videos through the entire system and benchmark the time of each subsystem’s output alongside the end-to-end latency. This will help us ensure that each part is within the latency limits listed in our design report and that the entire project’s latency is within our set goals. For accuracy, we plan on running a live stream of us signing words, phrases, and sentences and recording the given output. We will then compare our intended sentence with the output to calculate a BLEU score and ensure it is greater than or equal to 40%.
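One way to collect per-subsystem and end-to-end timings is sketched below in Python. The stage names and the `time_pipeline` helper are hypothetical, and the lambdas stand in for the real HPE and classification stages.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]


def time_pipeline(stages: List[Stage], item: Any) -> Tuple[Any, Dict[str, float]]:
    """Run item through each stage in order, recording seconds per stage."""
    timings: Dict[str, float] = {}
    start = time.perf_counter()
    for name, stage in stages:
        stage_start = time.perf_counter()
        item = stage(item)
        timings[name] = time.perf_counter() - stage_start
    timings["end_to_end"] = time.perf_counter() - start
    return item, timings


# Placeholder stages; real ones would take a frame and return landmarks, etc.
stages = [("hpe", lambda x: x + 1), ("classify", lambda x: x * 2)]
result, timings = time_pipeline(stages, 1)
```

Running this over every frame of a pre-recorded video and averaging the timings shows which subsystem dominates the end-to-end latency.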

Kavish’s Status Report for 3/30/24

I have been working this week on finishing subsystems for the interim demo. I have mostly finalized the viewer and it should be good for the demo. Once Sandra gets the LLM part working, I will work with her to integrate the viewer for a better demo. I have finished booting the Kria board and am currently working on integrating the camera with it. For the demo, I aim to show a processed image stream through the FPGA since we are still working on finalizing the HPE model. I have been having some trouble working through the kinks of the board, so I am a little behind schedule. I will have to alter the timeline if I am unable to integrate the camera stream by the interim demo.

Kavish’s Status Report for 3/23/24

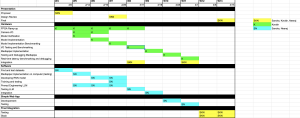

I finished up the code for the viewer and synchronizer that I described last week (corrected a few errors and made it product ready). The only part left for the viewer is to determine the exact JSON and message format, which I can change and test when we begin integration. I hoped to get back to the FPGA development and make more progress on that end, as I mentioned last week, but I had to spend time coming up with a solution for the end-of-sentence issue. The final solution that we decided on was to run a double query to the LLM while maintaining a log of previous words given by the classification model. The first query will aim to create as many sentences as possible based on the input log, while the second query will determine if the current log is a complete sentence. The output of the first query will be sent as a JSON message to my viewer, which will continuously present the words. The log will be cleared based on the response of the second query. Although I am behind on the hardware tasks, Sandra has now taken over the task of testing and determining the correct prompts for the LLM to make this idea work, so I will have time next week to get the FPGA working for the interim demo. My goal is to first have a basic HPE model working for the demo (even if it does not meet the specifications) and then spend the rest of the time finalizing it. I have updated the Gantt chart accordingly.
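The double-query idea can be sketched as a small Python class. The two callables stand in for the LLM queries; their names, and the stub rules used in the usage lines, are purely illustrative, and the real prompts are still being worked out.

```python
from typing import Callable, List


class SentenceBuffer:
    """Keeps a log of classified words and applies two queries per new word."""

    def __init__(
        self,
        build_sentence: Callable[[List[str]], str],  # stand-in for query 1
        is_complete: Callable[[List[str]], bool],    # stand-in for query 2
    ) -> None:
        self.build_sentence = build_sentence
        self.is_complete = is_complete
        self.log: List[str] = []

    def add_word(self, word: str) -> str:
        """Append a word, return text for the viewer, clear log if done."""
        self.log.append(word)
        sentence = self.build_sentence(list(self.log))  # first query
        if self.is_complete(list(self.log)):            # second query
            self.log.clear()
        return sentence


# Stub "LLM": join the words, and treat the word "store" as ending a sentence.
buffer = SentenceBuffer(" ".join, lambda log: log[-1] == "store")
outputs = [buffer.add_word(w) for w in ["I", "go", "store", "you"]]
```

Keeping the two queries behind separate callables means the prompts can be changed and tested without touching the log handling.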

Team’s Status Report for 3/23/24

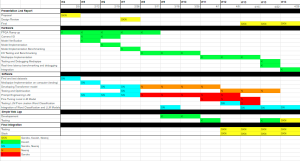

Overall, apart from the risks and mitigations discussed in the previous reports about the new SPOTER classification model and the FPGA integration, the new risk we found is determining the end of sentences. As of right now, our classification model simply outputs words or phrases based on the input video stream; however, it is unable to analyze the stream to determine the end of a sentence. To mitigate this problem, our current solution is to run a double query to the LLM while maintaining a log of previous responses to determine the end of a sentence. If this idea does not work, we will introduce timeouts and ask users to pause for a second between sentences. No big design changes have been made so far since we are still building and modifying both the pose estimation and classification models. We are a bit behind schedule due to the various problems with the model and FPGA, and have thus updated the schedule below. We hope to have a partially integrated product by next week before our interim demo.

Team’s Report for 3/16/24

Apart from the risks and contingencies mentioned in our previous status reports, the latest risk is with our Muse-RNN model. The GitHub model we found was developed in MATLAB and we planned on translating and adapting that code to Python for our use. However, a good part of the code is p-code (encrypted). Thus, we have two options: go forward with our current RNN and plans, or try a new model we found called SPOTER. If there are problems with our current plan, our contingency is to explore SPOTER. The other risks of FPGA and LLM remain the same (since development is ongoing) and our contingencies also remain the same.

There have been no major updates to the design just yet and we are still mostly on schedule, although we might change the schedule next week because Neeraj might finish development of the LLM before we finalize our RNN.

Kavish’s Status Report for 3/16/24

This week I split my time between working on the FPGA and developing a C++ project which will be useful for our testing and demo purposes. For the FPGA application, I am currently in the process of booting PetaLinux 2022. Once that process is finished, I will connect our USB camera and try to use my C++ project for a very basic demo. This demo is mainly to finalize the setup of the FPGA (before I develop the HPE model) and test the communication bandwidth across all the ports. The C++ project is an application to receive two streams of data (one thread to receive images and another thread to receive JSON data) from an Ethernet connection as UDP packets. The images and corresponding data must be pre-tagged with an ID on the transmitting end so that I can match them in my receiving application. A third thread pops an image from the input buffer queue and matches its ID with the corresponding JSON data (which is stored in a dictionary). It writes the data onto the image (could be words, bounding boxes, instance segmentation, etc.) and saves the updated image to an output queue. A fourth thread then gets the data from the output queue and displays it. This project mainly relies on OpenCV for image processing and a GitHub library (nlohmann) for JSON parsing in C++. I do not think I am behind schedule just yet and should be able to stick to the development cycle as planned. Over the next week I plan to finish booting the FPGA and then test running an image stream across to the Jetson.
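The matching step of the third thread can be illustrated with a short Python sketch (the actual project is written in C++ with OpenCV and nlohmann). The class and method names are hypothetical, images are represented by placeholder strings, and a frame whose data has not arrived is simply dropped here, which is an assumption of this sketch.

```python
import queue
import threading
from typing import Dict


class Synchronizer:
    """Pairs tagged frames with tagged JSON data by their shared ID."""

    def __init__(self) -> None:
        self.frames: queue.Queue = queue.Queue()   # (frame_id, image) or None
        self.output: queue.Queue = queue.Queue()   # (frame_id, image, data)
        self.metadata: Dict[int, dict] = {}
        self.lock = threading.Lock()

    def on_json(self, frame_id: int, data: dict) -> None:
        """Called by the JSON receiver thread for every decoded message."""
        with self.lock:
            self.metadata[frame_id] = data

    def match_loop(self) -> None:
        """Pop frames, look up their data, and queue the annotated result."""
        while True:
            frame = self.frames.get()
            if frame is None:  # sentinel: stop the thread
                break
            frame_id, image = frame
            with self.lock:
                data = self.metadata.pop(frame_id, None)
            if data is not None:  # frames without data are dropped
                self.output.put((frame_id, image, data))


sync = Synchronizer()
sync.on_json(1, {"word": "hello"})
sync.on_json(3, {"word": "world"})
worker = threading.Thread(target=sync.match_loop)
worker.start()
for item in [(1, "img1"), (2, "img2"), (3, "img3"), None]:
    sync.frames.put(item)
worker.join(timeout=5)
```

Popping the entry from the dictionary once it is matched keeps memory bounded when the stream runs for a long time.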

Kavish’s Status Report for 3/9/24

My last two weeks were spent working mainly on the design report. This process made me look a little deeper into the system implementation component of our project than I had for the design presentation. Since there were numerous ways to achieve the same implementation goal, we analyzed the different methods to understand their advantages in terms of functional and development efficiency. For the FPGA development, we will be highly reliant on the Vitis AI tool and its model zoo and libraries. This decision was made with the fastest development time in mind instead of focusing on optimizations from the get-go. Similarly for the web application, we decided that we should initially just use the OpenCV library for viewing and debugging purposes and then transition to a more mature display platform later in the project. As for my progress on the HPE development on the FPGA, I am still running into a couple of roadblocks in fully compiling the project on the board. I am referring to the work of others online who have attempted similar projects and will reach out for additional help as required. As for the schedule, I am on the border between on track and a little behind due to the time spent on the design report. I do not need to alter the schedule as of right now, but will update it next week if required.

Team’s Status Report for 2/24/24

Our risks remain the same as last week, the primary ones being the porting of MediaPipe onto the FPGA and the performance of our self-trained RNN. If we are unable to port MediaPipe, we will simply implement it on the Jetson. Although this is undesirable, it should still be possible to make our MVP. If we are not able to get the required accuracy from the GRU RNN model, we will look into either an LSTM or a transformer as a plan B.

There were no major design changes made this week. After more benchmarking and testing, we might have to make more changes.

Updated Gantt chart is attached below.

Kavish’s Status Report for 2/24/24

Most of this week was spent preparing the design presentation and then translating that presentation into the report. Neeraj and I discussed our design quite extensively for the presentation and report. We looked at different libraries for the RNN and at whether to use a GRU or an LSTM. I also spent time learning about the MediaPipe model as I am quite weak in the machine learning aspects. Unfortunately I was unable to work more on the FPGA development and still have to port a pre-trained model onto it for testing purposes. Although this does not put me behind the new schedule that we developed for the design presentation, I will need to put in some extra hours next week to get ahead of schedule. I plan on implementing a basic pose estimation model from the Vitis model zoo and then benchmarking its performance to fully validate our idea.

Kavish’s Status Report for 2/17/24

Over the last week, I got access to Canvas and Piazza for the 18643 class. Using that, I have followed along and finished the first few labs to understand the Vitis tool flow and the software emulation steps for testing. I also researched the question we are currently unsure about: whether doing pose estimation on the FPGA is worth it or not. Looking online, I found evidence that many pose estimation models have been ported onto our FPGA (KV260) and have achieved throughput comparable to many Jetsons. Although this is encouraging, it is not conclusive evidence that our current plan is fully feasible. Thus, I am currently trying to port pre-trained models from the Vitis Model Zoo to measure the feasibility of the project myself. I am running into some roadblocks in some development steps on the tool side (trying to find or build the correct configuration files and booting the kernel on the SD card), which I am trying to resolve. Although I am a little behind schedule, I am not far enough behind yet to change anything on our project timeline. I should be on schedule if I can make significant progress in getting the FPGA running with a pre-trained pose estimation model from the model zoo. Not only will this help to confirm whether our current design is feasible, but it will also put us on track to get MediaPipe or OpenPose running later down the line.

Kavish’s Status Report for 2/10/24

This week we finished and presented our proposals to the class and analyzed the feedback from the TAs and professors. Apart from that, I downloaded the Vivado and Vitis tools on my computer to start ramping up the FPGA development. I started exploring the basic steps of understanding the block diagrams and stepping through the synthesis tool flow. I have also been working with Neeraj to understand different Human Pose Estimation models (OpenPose and MediaPipe) and the feasibility of porting them onto the FPGA. I found a detailed resource that has implemented the ICAIPose network on the DPU, and will try to replicate their steps this week to understand feasibility and performance. As long as I can get the board and tools set up by next week, I should be on schedule and maintain progress according to the Gantt chart.

Team’s Status Report for 2/10/24

As we analyze the project right now, the most significant risks that could jeopardize its success are not being able to port our HPE model onto the FPGA, not having enough data to train a proper RNN, getting inconsistent responses when working in real time, and having communication failures. If there are any major problems with our FPGA, our contingency is to reduce our MVP and port all parts of our pipeline directly onto a Jetson. Although we seem to have enough data right now, we have found a couple of datasets which we could try to combine with some additional pre-processing if they are needed for training. Finally, if there are any failures due to the latency requirement, we have prepared enough slack to increase latency if necessary. Compared to our original design, we are now considering an augmented version of the pipeline. We plan to run Human Pose Estimation and use the outputs from that model to train our ASL classification RNN. We are still exploring this concept, but we hope that it will reduce our model size. We are also considering moving the RNN from the computer to the Jetson. This will allow us to develop a more compact final product. Of course, this adds another part to our parts list; however, we will discuss this idea more with faculty before implementing this solution. We are still on schedule and no updates are necessary as of right now.