One of the most significant risks is the performance of the ML model given our set of sensors. While collecting data this week, we became concerned about the large set of letters in the BSL alphabet that have extremely similar hand positions and differ only in touch, which our gloves cannot detect. We have not yet developed the model, so we are unsure whether this will be a problem. If it is, we will test a variety of ML models to see if we can boost performance. In the worst case, we will shrink the set of letters we aim to detect to one that our gloves can more feasibly distinguish.

As mentioned above, we will continue evaluating the performance of the ML model into next week and adjust the vocabulary requirement based on what performance is feasible. We also added a calibration phase to the software, ahead of the ML model, to help it adapt to different users. In addition, we are in the process of ordering a PCB for the circuitry component. If possible, we will integrate it during the last week.

Please see the updated Gantt chart here: Gantt

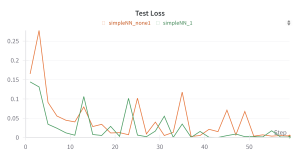

To verify the sensor integration, we added a calibration phase at the start of data collection for each letter. The user holds their hands in their most flexed state and their most relaxed state for five seconds. We then take the maximum and minimum of the sensor values collected during this phase and check that they look as expected. The difference between them forms a delta, which we use to normalize subsequent data to the range 0-1.

To verify the ML model, we will evaluate candidate models on various test data in offline settings, which lets us collect data on accuracy and inference speed.

To verify the circuit that connects the sensors to the compute unit, we inspect the sensor/IMU data collected while a person is signing. When we notice discrepancies, we reexamine the connections on our circuit. For example, we once had to move one of the V_out wires to a different pin because it was loose and was affecting the data we were getting.
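The calibration and normalization step described above can be sketched roughly as follows. This is a minimal illustration and not our actual glove code: the function names `calibrate` and `normalize`, the list-of-lists data layout, and the choice to clip out-of-range readings to 0-1 are all assumptions made for the example.

```python
def calibrate(samples):
    """Return per-sensor (minimums, maximums) from calibration samples.

    `samples` is a list of readings captured during the calibration
    phase; each reading is a list with one raw value per sensor.
    (Hypothetical layout, chosen for illustration.)
    """
    columns = list(zip(*samples))  # transpose: one tuple per sensor
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return lows, highs


def normalize(reading, lows, highs):
    """Scale one raw reading into the range 0-1 using the calibrated delta."""
    scaled = []
    for value, lo, hi in zip(reading, lows, highs):
        delta = hi - lo
        if delta == 0:
            # Sensor did not change during calibration; avoid dividing by zero.
            scaled.append(0.0)
            continue
        # Assumption: clip so readings outside the calibrated range stay in 0-1.
        scaled.append(min(1.0, max(0.0, (value - lo) / delta)))
    return scaled


# Example with two sensors and three made-up calibration samples.
lows, highs = calibrate([[100, 200], [300, 600], [200, 400]])
print(normalize([200, 700], lows, highs))
```

A zero delta for a sensor would itself be a useful warning sign during the "check that they appear as expected" step, since it suggests the sensor did not respond while the hand moved.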

With regard to overall validation, we will test the real-time performance of the glove. For accuracy, we will have the user sign a comprehensive list of letters and measure how many are recognized correctly. We will also measure the time from signing to speaker output using Python’s timing modules.
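As a rough sketch of how these two measurements might be computed, the snippet below scores a list of predicted letters against the letters that were actually signed and times a single call. The helper names `accuracy` and `timed` are hypothetical, and using `time.perf_counter` from the standard library is an assumption about which timing module we would pick; the stand-in lambda takes the place of the real sign-to-speech pipeline.

```python
import time


def accuracy(expected, predicted):
    """Fraction of signed letters that were recognized correctly."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    if not expected:
        return 0.0
    correct = sum(e == p for e, p in zip(expected, predicted))
    return correct / len(expected)


def timed(fn, *args):
    """Call fn(*args) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    elapsed = time.perf_counter() - start
    return result, elapsed


# Made-up example: four signed letters, one misrecognized.
print(accuracy(list("ABCD"), list("ABXD")))

# Stand-in for the real pipeline, used only to show the timing call.
result, seconds = timed(lambda letter: letter.upper(), "a")
print(result, f"{seconds:.6f} s")
```

Note that this only times the software path. Measuring true sign-to-speaker latency would also need to account for audio playback, which this sketch does not cover.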

We also plan to conduct user testing. We will have several other people wear the gloves and then complete a survey that evaluates the gloves' comfort, visual appeal, and weight. They will also be able to leave additional comments about the design.