This week I worked on the physical design and enclosure for our PCB and the overall glove design. This involved 3D printing a case, which took some trial and error to get right. Based on our use-case requirements, we were aiming for a case that is just slightly larger than the PCB, holds the battery securely, makes it easy to plug things in and out of the PCB, and looks decent. The first design I printed was a box that looked quite bulky. I made some tweaks and printed a much nicer version with “EchoSign” on the case, mounting holes, and a slight curve to fit the hand better. This made our design look much sleeker. We also fried one of our boards, so I had to resolder headers onto another board we had, and I ordered some spare parts ahead of the demo.

Somya’s Status Report for 4/27/2024

This past week I gave the final presentation, so a good portion of my time was spent working on the slides and preparing for the talk itself. In addition, I’ve finished the haptic unit tests and have made the edits to Ricky’s Bluetooth code. Tomorrow, once Ricky updates his code with my edits, we will verify that the haptic pulses are synchronized with real-time sign detection. We decided to implement the haptic feedback this way because it wouldn’t have made sense for me to download all the ML packages to test a feature that is logically separate from the ML detection. This decision saved us time. One issue I had to debug was that when sending a byte signal from the computer, the byte would be sent over and over again (as verified by debug statements in the Arduino code), so the pulse would happen repeatedly instead of just once. To fix this, I had the Arduino send an ACK back to signal the Python code to stop sending.
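
The send-until-ACK idea can be sketched in Python as follows. This is only an illustration, not our actual code: `FakeLink` is a hypothetical in-memory stand-in for the Bluetooth connection, and the `ACK` byte value and the retry limit are assumptions.

```python
ACK = b"\x06"  # assumed acknowledgement byte; the real value may differ


class FakeLink:
    """Hypothetical in-memory stand-in for the Bluetooth link to the Arduino."""

    def __init__(self, drop_first=0):
        self.drop_first = drop_first  # number of initial writes the "Arduino" misses
        self.pulses = 0               # haptic pulses the "Arduino" has fired
        self._reply = b""

    def write(self, data):
        if self.drop_first > 0:
            self.drop_first -= 1      # simulate a lost packet: no pulse, no ACK
            return
        self.pulses += 1              # the "Arduino" pulses once and acknowledges
        self._reply = ACK

    def read(self):
        reply, self._reply = self._reply, b""
        return reply


def send_pulse(link, signal, max_tries=5):
    """Send `signal` until an ACK comes back; return the number of sends used."""
    for attempt in range(1, max_tries + 1):
        link.write(signal)
        if link.read() == ACK:
            return attempt            # stop signaling as soon as the ACK arrives
    raise TimeoutError("no ACK received")
```

Because the sender stops at the first ACK, a byte that arrives produces exactly one pulse, even if earlier attempts were lost.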

My progress is on schedule.

This week, I hope to hash out the script/layout of the final video as well as complete my action items for the final report. We also plan on meeting tomorrow to do a final full system test now that all parts of our final product deliverable are in place.

Team Status Report for 4/27/2024

The most significant risk is the integration of the PCB. Because we collected training data from the glove when the Arduino was attached to the protoboard, some of the IMU data is specific to that setup. Right now, we are hoping that fixing the PCB case more securely on the glove will resolve the accuracy issues. The contingency plan is to reattach the protoboard, which we are sure works.

There are no major changes at this stage.

There are no schedule changes at this stage.

The ML model had a unit test in which we measured accuracy on a separate test set. We were content with the result, as the model consistently scored above 97% on different subsets of the data.

The ML model also had a unit test for latency using Python’s timing module. The measured time was almost always negligible, so we were happy with the performance.
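
A latency check of this kind could look like the sketch below. It uses `time.perf_counter` from Python's standard `time` module, which may not be the exact call we used; `dummy_predict` is a hypothetical stand-in for the model's forward pass.

```python
import time


def dummy_predict(features):
    # Hypothetical stand-in for the model: returns the index of the largest feature.
    return max(range(len(features)), key=features.__getitem__)


def mean_latency(predict, sample, runs=100):
    """Average wall-clock seconds per call to `predict(sample)`."""
    start = time.perf_counter()
    for _ in range(runs):
        predict(sample)
    return (time.perf_counter() - start) / runs
```

Averaging over many runs helps when a single prediction is too fast for one measurement to be meaningful.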

We also had some unit tests for the data that entailed looking over the flex sensor readings and comparing them to what we expected. If they deviated too much, which was the case for a few words, we took the time to recollect the data for that word or simply removed outlier points.
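
One way to automate the "deviated too much" check is sketched below. We inspected the readings by hand, so the median-absolute-deviation rule and the `max_dev` threshold here are assumptions chosen for illustration, not the procedure we followed.

```python
from statistics import median


def drop_outliers(readings, max_dev=3.0):
    """Keep readings within `max_dev` median absolute deviations of the median."""
    med = median(readings)
    mad = median(abs(r - med) for r in readings)
    if mad == 0:
        # At least half the readings equal the median, so the MAD gives no
        # scale to judge by; keep everything rather than guess.
        return list(readings)
    return [r for r in readings if abs(r - med) <= max_dev * mad]
```

For example, `drop_outliers([500, 510, 495, 505, 900])` keeps the first four readings and drops `900`.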

Overall accuracy was tested by having us sign each word in our selected vocabulary 12 times each in random order and evaluating the number of times that it was correct. Initially, performance was rather poor at around 60% accuracy. However, after looking at the mistakes and developing a smaller model for easily confused words, we were able to bump performance up to around 89%.
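
The bookkeeping for this test can be sketched as below. The function name and the example words are hypothetical; the sketch only counts correct trials and tallies which word pairs were mixed up, which is the kind of information that pointed us to the easily confused words.

```python
from collections import Counter


def score_trials(trials):
    """trials: iterable of (signed_word, predicted_word) pairs.

    Returns (accuracy, confusions), where confusions counts each
    (signed, predicted) pair that was wrong.
    """
    trials = list(trials)
    if not trials:
        raise ValueError("need at least one trial")
    confusions = Counter(
        (signed, predicted) for signed, predicted in trials if signed != predicted
    )
    correct = len(trials) - sum(confusions.values())
    return correct / len(trials), confusions
```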

Overall latency was tested at the same time by calculating the time difference from the initial sensor reading until the system prediction. With our original classification heuristic, it took around 2 seconds per word, which was far too slow. By changing the number of predictions the heuristic needs, we were able to bring overall latency down to around 0.7 seconds, which is closer to our use-case requirement.
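
The relationship between the heuristic and latency can be illustrated with the sketch below, which assumes the heuristic emits a word only after it has been predicted a fixed number of times in a row. The function name and the default `required=3` are hypothetical.

```python
def stream_words(frame_predictions, required=3):
    """Yield a word once it has been predicted `required` times in a row."""
    last, streak = None, 0
    for pred in frame_predictions:
        streak = streak + 1 if pred == last else 1
        last = pred
        if streak == required:
            yield pred  # emitted once per run, when the streak first reaches the threshold
```

A smaller `required` emits a word after fewer frames, which lowers latency but makes the output more sensitive to a single stray prediction.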

As for sensor testing, we would take a look at the word prior to data collection, and then manually inspect the data values we were getting to ensure they corresponded with what we expected based on the handshape. For example, if the sign required the index finger and thumb to be the only two fingers bent, but the data vector showed the middle finger’s flex sensor value was significantly low, we would stop data collection and inspect the circuit connections. Oftentimes a flex sensor had come a little loose and we would have to stick it back in to get normal readings. In addition, post data collection we would compare the feature plots for all three of us for each sign, and make note of any significant discrepancies and why we were getting them. Most often it would be due to our hand sizes and finger lengths being different, which is to be expected, but occasionally there would be feature discrepancies that indicate someone was moving too much or too little during data collection, which would then let us know that we should recollect data for that person.

In terms of haptic feedback unit testing, we wrote a simple Python script and Arduino script to test sending a byte from the computer to the Arduino over Bluetooth, and whether the Arduino was able to read that byte and create a haptic pulse. Once we confirmed this behavior worked through this simple unit test, it was then easy to integrate this into our actual system code.

Ricky’s Status Report for 4/27/2024

This week, I did some brief work finalizing metrics for the ML model. This included rigorous testing of the real-time performance and the test-time performance. I was also able to develop confusion matrices on some data to get a better picture of how the model is working. I am also pretty confident in my final ML model design, with the addition of some more words to the second model to allow for more refined predictions. I also briefly tested the model with the PCB made by Ria. However, the performance of the model is inconsistent, which I believe is because the PCB is currently secured by tape. I hope to continue testing once the PCB is more reliably secured, but we always have the original protoboard as a backup plan, which I will propose if needed. I also spent a decent chunk of time working on the poster, which tied in nicely with the metrics I found. I also wrote the start of the final report, which will hopefully let us finish it before demo day.

My progress is on schedule with the slight caveat that I am waiting for the PCB design to be finalized. I foresee that minimal adjustments will need to be made once that is attached but we will have to wait and see.

Next week, I hope to quickly finalize our demo day product, finish the report and video, and present on demo day.

Ria’s Status Report for 4/20/2024

This week I worked on testing the PCB. I started by checking that the pin dimensions were accurate for plugging in the Arduino and sensors. After noticing that the current draw of the DC-DC step-up converter caused the batteries to die really fast, we decided to scrap that idea and just put two 3.7V batteries in series to power the Arduino. Since we decided not to use the step-up converter, I desoldered its mounting pins, which opened up space to mount the batteries.

I then worked on testing that haptic feedback would work on the Arduino. Somya worked on getting a signal from the computer via Bluetooth that could trigger feedback. I tested that the I2C circuit on the PCB worked with the haptic motor driver. I used an Adafruit example library and found that it worked fine.

I then made a 3D CAD model for a case for the PCB, batteries, and motor driver that will be secured to the glove with velcro and some adhesive on the back of the hand. I plan on finalizing this look by the end of next week.

Somya’s Status Report for 4/20/2024

This week I got the haptic feedback to work. Now, I am able to send a signal via Bluetooth from a Python script to the Arduino at the start and end of calibration, as well as when a signed word/letter has been spoken out. The Arduino branches on the type of signal it receives and produces an appropriate haptic feedback pulse. My next steps would be to measure latency, experiment with which type of signal is most user-friendly, and see how far apart the sender and receiver can be for this mechanism to work in a timely manner.

My progress is on schedule.

This week I am giving the final presentation, so my main goal is focusing on that. In addition, we have our poster and final paper deliverable deadlines quickly approaching, so I plan to dedicate a significant amount of time to those. I also want to help with the overall look of what we will showcase on demo day, as one of our focus points from really early on was to make sure our gloves are as user-friendly and as unobtrusive as possible, which is why we’re designing a case to hold the battery and PCB, as well as experimenting with different gloves.

Ricky’s Status Report for 4/20/2024

This week, my main task was debugging our issues with the machine learning model. During the carnival, I trained some preliminary models, but their performance was not that good in real time. To compensate for this issue, I tested a variety of architectures as well as new activation functions. I also manually selected the new words that I determined were more easily mixed up and created a smaller simpler model for just those words. This allowed me to develop a two-model approach to classification that leveraged the original model’s strength at half of the vocab and the more narrow model’s strength for the rest. I also developed a model that handled time series data by stitching in the previous five frames of data as one input into the NN model. Armed with the variety of models, I entered this weekend with the plan of testing them all. I was able to determine that the two-model approach performed the best, being able to determine 10/11 of the words consistently. This was not a 100% rigorous set of tests, but it gave me enough metrics to assist with our final presentation slides, so I was content with that performance as of now.
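
The two-model approach can be sketched as below. The routing rule is an assumption made for illustration: the general model predicts first, and if its answer falls in the set of easily confused words, the narrower model decides instead. The function name, the example words, and the toy models in the test are all hypothetical.

```python
def two_model_predict(features, general_model, narrow_model, confusable):
    """Route a prediction through the narrow model when the general one is unsure.

    `general_model` and `narrow_model` are callables mapping a feature
    vector to a word; `confusable` is the set of words the narrow model
    was trained to separate.
    """
    word = general_model(features)
    if word in confusable:
        # Assumed routing rule: defer to the specialist for confusable words.
        word = narrow_model(features)
    return word
```

With this structure the narrow model only ever has to separate a handful of similar signs, which is an easier problem than the full vocabulary.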

My progress is on schedule.

Next week, I will narrow down which model I will use for our final demo with a more rigorous and formal testing of the two approaches. I will also assist in the development of the poster by adding additional pictures from the ML side. I will also assist with the video and the report.

Addressing the additional question, I definitely needed to relearn how to work with Arduinos and learn how to interface between Arduino and Python. Since I handled the bulk of the Bluetooth and machine learning side, I needed to handle a lot of the communication between the two platforms. This meant that I had to consult many pages of Arduino documentation as well as perform extensive research into compatible Python libraries. As for learning strategies, most of it was reading documentation and StackOverflow. I also watched a few videos of other example projects for assistance.

Team Status Report for 4/20/2024

The most significant challenge we are facing in our project is the ML algorithm’s detection of our chosen set of words. Currently we have okay accuracy, but prediction is inconsistent: the accuracy changes depending on the user, or the model recognizes the word but not enough times in a row to meet the criteria for the classification heuristic. In addition, it is common for double-handed words in ASL to incorporate some type of motion. This is significant because our model currently predicts from a single frame rather than a window of frames. This is probably affecting accuracy, so we are looking into mitigating it by incorporating some type of sampling of the frame data, but the tradeoff may be an increase in latency.
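
One form the windowing could take is sketched below: the most recent frames are concatenated into a single input vector for the model. The function name and the default window of five frames are assumptions for illustration.

```python
from collections import deque


def windowed_inputs(frames, window=5):
    """Yield one flat input vector per frame once `window` frames are buffered."""
    buf = deque(maxlen=window)  # oldest frame is dropped automatically
    for frame in frames:
        buf.append(frame)
        if len(buf) == window:
            yield [value for f in buf for value in f]
```

The latency cost is visible here: no input is produced until `window` frames have arrived.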

In terms of changes to the existing design of the system, we have decided to nix the idea of using the 26 letters in the BSL alphabet. This change was necessary because in the BSL alphabet, for a significant portion of the letters, the determining factor is based on touch, and our glove doesn’t have any touch sensors. So instead, we found a 2017 research paper that found the top 50 most commonly used ASL words and split them into five categories: pronoun, noun, verb, adjective, and adverb. From each of these categories, we picked 2-3 double-handed words to create a set of 10 words.

No changes have been made to our schedule.

Somya’s Status Report for 4/6/2024

This week I finished collecting data for all BSL letters. We found out that USB collection was much faster than Bluetooth, so this process was proving to be quite a time sink until we made the switch halfway through. In addition, I looked into the haptic feedback circuit and will place an order for the linear vibration motor on Tuesday. The circuitry doesn’t look too complicated; the only thing I’m worried about is defining what haptic feedback even means in the context of our product. Ideally, we would want different feedback depending on how confident the system is in the word the user signed: something along the lines of one type of pulse if >90%, another if <30%, and a “maybe” range in between. I’m not sure if this is even feasible, so I plan to bring it up at the next meeting.
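
The tiered feedback idea could be expressed as a small mapping like the one below. The 90% and 30% thresholds come from the idea above; the function name and the pulse labels are hypothetical placeholders, since the actual pulse types have not been decided.

```python
def pulse_for_confidence(confidence, high=0.90, low=0.30):
    """Map a model confidence in [0, 1] to a (hypothetical) pulse label."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence > high:
        return "strong"  # placeholder label for the >90% pulse
    if confidence < low:
        return "weak"    # placeholder label for the <30% pulse
    return "maybe"       # everything in between
```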

My progress is on schedule.

This upcoming week, in addition to implementing haptic feedback, I want to look into how we can augment our data as we transition to BSL. After this initial round of data collection, we are finding that a lot of the signs have very similar degrees of bending, and since we have decided not to go with any additional type of sensors, e.g. touch sensors, this will likely lead to a lower accuracy than ideal. This might involve going through the individual csv files for letters that are very similar and quantifying just how similar/different they are, and manipulating the data post-collection in some way to make them more distinct. Once Ricky trains the model over the weekend, I’ll have a better idea on how to more specifically accomplish this.

Ria’s Status Report for 4/6/2024

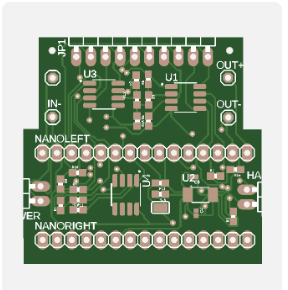

This week I worked on finalizing the PCB and creating the documents to submit the order (the bill of materials as well as a file detailing part placement). I am in contact with the vendor, and we will hopefully get our PCB delivered very soon with no issues. I followed best practices when creating this design, which is shown in the image above. The PCB will be approximately 1.5″x1.5″.

This week I also worked on testing the calibration program I wrote that will gather data on a user’s max and min flex amount and map their flex value to the range from their own min to max. This will allow the glove to work for people who flex different amounts. We will use this feature for our double glove design.
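
The mapping the calibration program performs can be sketched as below. The real program runs on the Arduino; this Python version, its function names, the clamping to the 0 to 1 range, and the example readings are assumptions for illustration.

```python
def calibrate(samples):
    """Return the user's (min, max) flex readings from a calibration run."""
    return min(samples), max(samples)


def normalize_flex(raw, flex_min, flex_max):
    """Map a raw flex reading onto 0.0-1.0 within the user's own range."""
    if flex_max == flex_min:
        return 0.0  # degenerate calibration: avoid dividing by zero
    scaled = (raw - flex_min) / (flex_max - flex_min)
    return min(1.0, max(0.0, scaled))  # clamp readings outside the calibrated range
```

For a user whose readings span 320 to 700, a raw value of 510 maps to 0.5, so two users who bend a finger halfway produce the same normalized value even if their raw readings differ.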

Finally, I worked on battery supply. I decided that getting a compact battery was best, so I got a 500 mAh LiPo battery that supplies 3.7 V. I got a small DC to DC step up converter to give us a voltage value comfortably within 4.5V-21V which is the Vin range for the Arduino Nano BLE Sense Rev2.