This week, the team made significant progress on both the web app and machine learning model integration. We addressed several backend issues, including package dependencies and storage for user-uploaded files, and resolved integration challenges. Enhancements to the microgrid visualizer now include a slider for hourly predictions and expanded input fields for user uploads. Despite these advancements, issues with the parser persist and will require further attention.

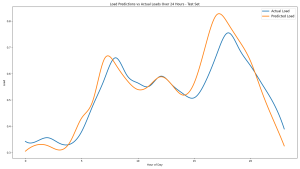

On the machine learning front, efforts focused on fully integrating forecasting models and enhancing the web app’s interactivity and design. New demo features, such as preset scenarios for different weather conditions and holidays, are being developed to showcase the capabilities of our tool in various environments.

Moving forward, the team will continue refining the front end and back end, conduct extensive testing across different browsers and devices, and prepare the final deliverables: a poster, a video, and the final report. The forecasting statistics tab will also be extended with more dynamic visualizations.

List all unit tests and overall system tests carried out to evaluate the system. List any findings and design changes that resulted from your analysis of the test results and other data obtained during experimentation.

- Unit Tests
  - Web Application:
    - Test to ensure all forms on the web application validate input correctly and handle errors gracefully.
    - Test the file upload functionality to ensure only the correct file types and sizes are accepted.
  - Optimizer:
    - Test the optimizer with various input ranges to ensure it handles all expected inputs without errors.
    - Test specific algorithms within the optimizer to verify that they return expected results for given inputs.
  - Machine Learning Model:
    - Test the preprocessing pipeline to ensure that data is cleaned and transformed correctly.
    - Test the training process to ensure the model fits without errors and handles overfitting.
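
As an illustration of the file upload unit test listed above, the following is a minimal sketch rather than the project's actual code. The function name `validate_upload`, the accepted extension (`.csv`), and the 5 MiB size cap are all assumptions made for the example; the report does not state the real limits.

```python
import os
from typing import List

# Hypothetical limits: the report does not state the accepted types or size cap.
ALLOWED_EXTENSIONS = {".csv"}
MAX_UPLOAD_BYTES = 5 * 1024 * 1024  # 5 MiB


def validate_upload(filename: str, size_bytes: int) -> List[str]:
    """Return a list of validation errors; an empty list means the upload is accepted."""
    errors = []
    extension = os.path.splitext(filename)[1].lower()
    if extension not in ALLOWED_EXTENSIONS:
        errors.append(f"unsupported file type: {extension or '(none)'}")
    if size_bytes <= 0:
        errors.append("file is empty")
    elif size_bytes > MAX_UPLOAD_BYTES:
        errors.append("file exceeds the size limit")
    return errors


# pytest-style tests: each covers one acceptance or rejection rule.
def test_accepts_valid_csv():
    assert validate_upload("load_profile.csv", 1024) == []


def test_rejects_wrong_type():
    assert validate_upload("tool.exe", 1024) == ["unsupported file type: .exe"]


def test_rejects_oversized_file():
    errors = validate_upload("big.csv", MAX_UPLOAD_BYTES + 1)
    assert errors == ["file exceeds the size limit"]


def test_rejects_empty_file():
    assert validate_upload("empty.csv", 0) == ["file is empty"]
```

Returning a list of error messages instead of a boolean lets the upload form show every problem at once, which fits the goal of handling errors gracefully.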

- System Tests
  - Integration Testing:
    - Test the complete flow of data from the web app through the optimizer to the ML model to ensure that data passes through the system correctly and triggers the appropriate actions.
    - Test the integration with third-party APIs to ensure that the system interacts with them as expected.
  - End-to-End Testing:
    - Simulate complete user scenarios from end to end, including logging in, uploading a file, receiving optimization and model predictions, and logging out.
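
The integration test described above (web app, then optimizer, then ML model) could be sketched as follows. Every function here is a hypothetical stand-in: the real parser, optimizer, and forecasting model are not shown in this report, so the stubs only illustrate how a test can check that data reaches each stage in order.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PipelineResult:
    schedule: List[float]  # stand-in optimizer output, one value per hour
    forecast: List[float]  # stand-in model output, one value per hour


def parse_upload(csv_text: str) -> List[float]:
    """Stand-in parser: one hourly load value per non-blank line."""
    return [float(line) for line in csv_text.splitlines() if line.strip()]


def optimize(load: List[float], capacity: float) -> List[float]:
    """Stand-in optimizer: cap each hourly load at the available capacity."""
    return [min(value, capacity) for value in load]


def forecast(schedule: List[float]) -> List[float]:
    """Stand-in model: persistence forecast repeating the last scheduled value."""
    return [schedule[-1]] * len(schedule)


def run_pipeline(csv_text: str, capacity: float) -> PipelineResult:
    """Pass uploaded data through the parser, optimizer, and model in order."""
    load = parse_upload(csv_text)
    if not load:
        raise ValueError("upload contains no data")
    schedule = optimize(load, capacity)
    return PipelineResult(schedule=schedule, forecast=forecast(schedule))


def test_data_flows_through_every_stage():
    result = run_pipeline("1.0\n4.0\n2.5\n", capacity=3.0)
    assert result.schedule == [1.0, 3.0, 2.5]
    assert result.forecast == [2.5, 2.5, 2.5]


def test_empty_upload_is_rejected():
    try:
        run_pipeline("\n\n", capacity=3.0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for an empty upload")
```

In a real test suite the stubs would be replaced by the actual components, with only the third-party API calls mocked out.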

- Design Changes (made in response to testing feedback, to improve performance and user experience):
  - Optimizer: the algorithms were refined and additional checks were implemented to ensure robustness.
  - File upload: user feedback indicated that the upload interface was confusing, so it was redesigned to improve usability and provide clearer instructions.
  - Machine learning model: the model's accuracy was inconsistent, so a more dynamic training approach was adopted to adapt better to data fluctuations throughout the day.
  - Third-party APIs: to handle increased user demand and prevent delays, API interactions were optimized and service plans were upgraded as needed.