This week, I got the GitHub repository working for my teammates and me by setting up Git LFS. I also got a basic feed-switching algorithm running. It stored the bounding box sizes returned by a combination of Canny edge-based object detection and the GOTURN tracking algorithm, and it switched to a camera if that camera's bounding box size increased for 3 consecutive frames. When I tested it, though, it did not work very well.

When the object detection approach moved to color-based detection, I merged my feed selection code with the new object detection code and updated it to support 2 USB cameras instead of 1. This let me build a testing setup close to what we will have for the interim demo, except that the cameras were stationary instead of tracking the car as it moved. The resulting livestream was still unsatisfactory. Depending on how many consecutive frames I required before a switch, it either seemed to switch randomly or failed to switch when it should have. However, it was still progress.

Next, I updated my feed selection algorithm to calculate a simple moving average of the bounding box size over the past 3 frames for each camera and to display the camera feed with the greatest moving average. I also added debug statements that print to a text file. These let me see the bounding box size for each camera on every frame, as well as the frame and time at which the displayed camera feed switched. The new algorithm performed much better. With 2 cameras at opposite corners of the rectangular track, pointed in opposite directions, it consistently captured the front of the car as it raced down the track, a clear improvement over the previous algorithm. However, when the cameras were repositioned so that their fields of view over the track overlapped more, the feed switched too quickly and seemingly at random, so more improvement is needed.
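The two switching rules described above can be sketched in Python as follows. This is a minimal illustration, not my actual code. It assumes each camera's detector reports one bounding-box area per frame, using 0 when nothing is detected. The class names, the `window`/`required` parameters, and the log line format are hypothetical choices made for this sketch.

```python
import time
from collections import deque


class ConsecutiveIncreaseSelector:
    """Earlier rule (sketch): switch to a camera once its bounding-box area
    has grown for `required` consecutive frames."""

    def __init__(self, num_cameras, required=3):
        self.required = required
        self.prev = [None] * num_cameras  # last area seen per camera
        self.streak = [0] * num_cameras   # consecutive increases per camera
        self.active = 0                   # index of the camera being shown

    def update(self, areas):
        """areas[i] is camera i's bounding-box area this frame (0 if no detection)."""
        for i, area in enumerate(areas):
            grew = self.prev[i] is not None and area > self.prev[i]
            self.streak[i] = self.streak[i] + 1 if grew else 0
            self.prev[i] = area
        ready = [i for i, s in enumerate(self.streak)
                 if s >= self.required and i != self.active]
        if ready:
            # Prefer the camera with the longest growth streak.
            self.active = max(ready, key=lambda i: self.streak[i])
        return self.active


class MovingAverageSelector:
    """Current rule (sketch): show the camera with the largest mean
    bounding-box area over the last `window` frames, logging per-frame
    areas and every switch (hypothetical log format)."""

    def __init__(self, num_cameras, window=3, log=None):
        self.histories = [deque(maxlen=window) for _ in range(num_cameras)]
        self.active = 0
        self.frame = 0
        self.log = log       # any object with .write(), e.g. an open text file
        self.switches = []   # (frame, from_cam, to_cam, timestamp)

    def update(self, areas):
        # deque(maxlen=window) drops the oldest area automatically.
        for history, area in zip(self.histories, areas):
            history.append(area)
        averages = [sum(h) / len(h) for h in self.histories]
        # max() returns the lowest index on ties, so the choice is deterministic.
        best = max(range(len(averages)), key=lambda i: averages[i])
        now = time.time()
        if self.log is not None:
            self.log.write(f"frame={self.frame} t={now:.3f} "
                           f"areas={list(areas)} active={best}\n")
        if best != self.active:
            self.switches.append((self.frame, self.active, best, now))
            if self.log is not None:
                self.log.write(f"SWITCH frame={self.frame} from={self.active} "
                               f"to={best} t={now:.3f}\n")
            self.active = best
        self.frame += 1
        return self.active
```

In a capture loop, one would create `MovingAverageSelector(2, log=open("switch_log.txt", "w"))` and call `update()` once per frame with both cameras' areas. A larger `window` smooths out frame-to-frame noise in the detections, at the cost of reacting more slowly when the car actually moves between cameras.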

I am on schedule. I have a working algorithm for the interim demo, along with a testing framework that will help me improve the switching algorithm by examining the logs and comparing them against test cases. A reach goal is to show some of these test cases at the interim demo, but for now the focus is the standard rectangular track.
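Comparing logs against test cases could look roughly like the sketch below. It is illustrative only. It assumes a hypothetical log format in which each switch is written as a line such as `SWITCH frame=12 from=0 to=1 t=0.400`. It also assumes each test case is a hand-written list of `(frame, camera)` pairs giving when the feed should switch, and that a `tolerance` in frames (also hypothetical) sets how close a logged switch must be to count as a match.

```python
import re

# Hypothetical log format: "SWITCH frame=<n> from=<cam> to=<cam> t=<seconds>"
SWITCH_RE = re.compile(r"SWITCH frame=(\d+) from=(\d+) to=(\d+)")


def parse_switches(lines):
    """Return (frame, from_cam, to_cam) for every switch line in the log."""
    switches = []
    for line in lines:
        match = SWITCH_RE.search(line)
        if match:
            switches.append(tuple(int(g) for g in match.groups()))
    return switches


def check_test_case(switches, expected, tolerance=5):
    """Match each expected (frame, camera) switch to a logged switch to that
    camera within `tolerance` frames.

    Returns (missed, spurious): expected switches with no match, and logged
    switches that did not correspond to any expected switch.
    """
    unmatched = list(switches)  # copy so the caller's list is not modified
    missed = []
    for exp_frame, exp_cam in expected:
        hit = next((s for s in unmatched
                    if s[2] == exp_cam and abs(s[0] - exp_frame) <= tolerance),
                   None)
        if hit is None:
            missed.append((exp_frame, exp_cam))
        else:
            unmatched.remove(hit)
    return missed, unmatched
```

Reporting missed and spurious switches separately mirrors the two failure modes seen so far: failing to switch when it should, and switching too quickly when fields of view overlap.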

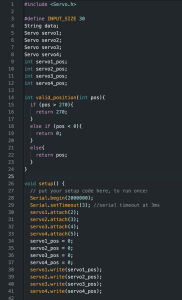

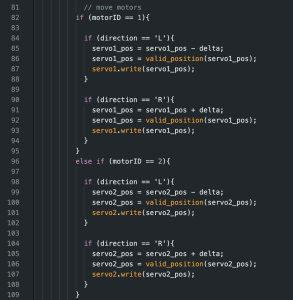

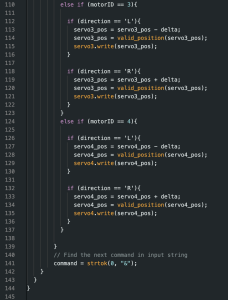

Next steps are to merge with the updated color detection algorithm and to integrate the motor control code so that the cameras automatically track the cars. I need to identify specific track and camera configurations for testing next week that show how our product meets its use-case requirements. These testing configurations will also help me identify where my simple moving average algorithm needs improvement. Finally, I need to test the integration with the motor control code and ensure the switching algorithm works consistently even when the auto-tracking cameras' fields of view sometimes overlap on the car.