What I did the week before

Last week, I started developing the mapping software.

What I did this week

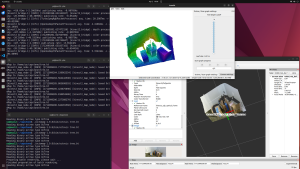

I finished implementing the mapping from 3d point cloud to 3d voxels. Here’s an example:

The mapping software can map a 2 m × 2 m × 2 m scene to a voxel grid with a precision of ±1 cm.
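The sketch below is a minimal version of the point-cloud-to-voxel step. It assumes the points are already in metres, in a frame whose origin is one corner of the mapped cube. The function and constant names are hypothetical and are not taken from kinect2_map. Each point goes to the voxel that contains it, found by flooring its coordinates divided by the voxel size:

```python
import math

VOXEL_SIZE_M = 0.01   # 1 cm voxels (assumed to match the current grid resolution)
SCENE_SIZE_M = 2.0    # 2 m x 2 m x 2 m mapped volume

def voxelize(points, voxel_size=VOXEL_SIZE_M, scene_size=SCENE_SIZE_M):
    """Map (x, y, z) points in metres to a set of occupied voxel indices.

    Hypothetical helper: assumes points are expressed in a frame whose origin
    is one corner of the mapped cube. Points outside the cube are dropped.
    """
    n = int(round(scene_size / voxel_size))  # voxels per axis (200 here)
    occupied = set()
    for x, y, z in points:
        # Index of the voxel containing the point along each axis.
        i, j, k = (math.floor(c / voxel_size) for c in (x, y, z))
        if 0 <= i < n and 0 <= j < n and 0 <= k < n:
            occupied.add((i, j, k))
    return occupied
```

For example, `voxelize([(0.005, 0.005, 0.005), (0.012, 0.0, 1.999), (2.5, 0.0, 0.0)])` keeps the first two points as voxels `(0, 0, 0)` and `(1, 0, 199)` and drops the third, which lies outside the cube. The set stores only occupied indices, so memory grows with the number of occupied voxels. A dense grid would instead need all 200 × 200 × 200 = 8 million cells.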

The mapping module is named kinect2_map and will be made publicly available as an open-source ROS 2 node.

I then looked into calibrating the camera. So far, every calibration step is manual, most notably the transformation of the mapped grid into the RRT* world coordinates. We therefore need a semi-automatic solution. We aim to support a variety of scenes rather than a single static one, so a human operator will need to guide the mapping module through calibration.
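The sketch below shows one possible shape for the semi-automatic grid-to-world step. It rests on two assumptions. First, the voxel grid and the RRT* world frame share the same vertical axis, so only a yaw angle and a translation are unknown. Second, the operator marks two reference points whose coordinates are known in both frames. Both functions are hypothetical and do not describe the planned calibration implementation:

```python
import math

def estimate_yaw_translation(grid_pts, world_pts):
    """Estimate yaw (radians) and translation from two point correspondences.

    grid_pts / world_pts: two (x, y, z) points each, in metres.
    Assumption: both frames share the same vertical (z) axis.
    """
    (g0, g1), (w0, w1) = grid_pts, world_pts
    # Heading of the segment between the two markers in each frame.
    ang_g = math.atan2(g1[1] - g0[1], g1[0] - g0[0])
    ang_w = math.atan2(w1[1] - w0[1], w1[0] - w0[0])
    yaw = ang_w - ang_g
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation that moves the rotated first grid marker onto its world position.
    tx = w0[0] - (c * g0[0] - s * g0[1])
    ty = w0[1] - (s * g0[0] + c * g0[1])
    tz = w0[2] - g0[2]
    return yaw, (tx, ty, tz)

def voxel_to_world(index, yaw, t, voxel_size=0.01):
    """Convert a voxel index to the world coordinates of the voxel's centre."""
    x, y, z = ((i + 0.5) * voxel_size for i in index)
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * x - s * y + t[0], s * x + c * y + t[1], z + t[2])
```

This approach would not cover setups with more than two markers or a camera that is not level. In those cases, a least-squares rigid fit over all correspondences, such as the Kabsch algorithm, would be the natural generalization.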

What I plan to be doing next week

I have already started implementing the calibration and expect to have a prototype done within the next week. Once it is finished, the perception module will be complete, and I can help with porting the software RRT to the FPGA and with optimizations.

Testing and Verification

I have been testing the perception module throughout development and plan to continue as I add features. Verification consists of measuring the dimensions of real-world objects and comparing them with the voxelized map. Each voxel currently measures 1 cm × 1 cm × 1 cm, which makes manual verification straightforward: measure a real-world object with a tape measure and compare the result with the dimensions of the mapped object. The mapping calibration is verified the same way.
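Part of the tape-measure check could be automated by comparing the extent of an object's occupied voxels with its measured dimensions. The minimal sketch below assumes the object is axis-aligned in the grid and that its voxels have already been separated from the rest of the scene. The helper names are hypothetical. The ±1 cm tolerance is an assumption taken from the precision stated earlier:

```python
def voxel_extent_m(occupied, voxel_size=0.01):
    """Return the (x, y, z) extent in metres of a set of voxel indices."""
    if not occupied:
        return (0.0, 0.0, 0.0)
    # Span of indices along each axis, counted inclusively, times the voxel size.
    return tuple(
        (max(v[a] for v in occupied) - min(v[a] for v in occupied) + 1) * voxel_size
        for a in range(3)
    )

def matches_measurement(occupied, measured_m, tol_m=0.01, voxel_size=0.01):
    """Check each mapped dimension against a tape-measured one.

    tol_m defaults to 1 cm (assumed, matching the stated +/-1 cm precision).
    """
    extent = voxel_extent_m(occupied, voxel_size)
    return all(abs(e - m) <= tol_m for e, m in zip(extent, measured_m))
```

For a mapped box of 30 × 20 × 10 voxels, `voxel_extent_m` returns about (0.30, 0.20, 0.10) m. A tape measurement of (0.305, 0.198, 0.104) m passes the check, while (0.35, 0.20, 0.10) m fails.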