What I did the week before

ROS integration and perception mapping calibration.

What I did this week

Since we ditched the Kria board, the setup now includes a laptop that needs to talk to the Ultra96 board. Getting the laptop to communicate with the Ultra96, on top of all the ROS communication, therefore became our top priority last week.

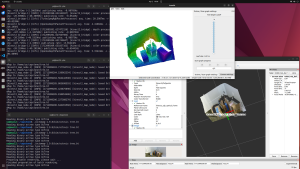

For this purpose, I wrote an RRTComms ROS node that sends 3D grid perception data to the peripheral via UART. It subscribes to the perception node’s output channels, converts the perception data to integer arrays, and sends them out over UART.
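The conversion and send step can be illustrated with a minimal sketch. It is not the actual RRTComms code: the frame layout (a two-byte magic value, three one-byte dimensions, then one byte per cell), the names `MAGIC`, `pack_grid`, and `send_grid`, and the nested-list grid are all assumptions made for illustration. The ROS subscription is left out so the example runs on its own, and an in-memory buffer stands in for the UART device.

```python
import io
import struct

# Hypothetical frame layout (an assumption, not the project's real protocol):
#   2-byte magic, three 1-byte grid dimensions, then one byte per cell.
MAGIC = b"\xA5\x5A"


def pack_grid(grid):
    """Flatten a 3D occupancy grid (nested lists of 0/1) into one byte frame."""
    nx = len(grid)
    ny = len(grid[0])
    nz = len(grid[0][0])
    cells = bytearray()
    for plane in grid:
        for row in plane:
            for cell in row:
                # Store each cell as a single byte: 1 = occupied, 0 = free.
                cells.append(1 if cell else 0)
    # "B" is an unsigned byte, so each dimension must be at most 255.
    header = MAGIC + struct.pack("BBB", nx, ny, nz)
    return header + bytes(cells)


def send_grid(port, grid):
    """Write one frame to any object that has a write() method."""
    frame = pack_grid(grid)
    port.write(frame)
    return len(frame)


# An in-memory buffer stands in for the UART device here.
fake_uart = io.BytesIO()
demo_grid = [[[0, 1], [1, 0]], [[0, 0], [1, 1]]]  # 2 x 2 x 2 grid
sent = send_grid(fake_uart, demo_grid)
```

On real hardware, `port` could be a serial port object such as pyserial's `Serial`, which also exposes `write()`. A fixed header like this lets the receiver check the grid size before reading the cells, but a denser encoding (for example, one bit per cell) may suit a slow UART link better.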

As for perception, I 3D-printed a 1 cm x 1 cm x 1 cm calibration block for calibrating the camera mapping. I have also finished coding the whole perception front end. The only thing left to do is to tune the parameters of the perception module once we have a fully set-up test scene.

What I plan to be doing next week

Since perception and RRT comms are both ROS nodes and they are already working and talking to each other, I don’t expect much work to integrate them with the whole system. However, one uncertainty is getting the RRT output back from the Ultra96 and sending it to the kinematics module. Since the kinematics module is not yet packaged as a ROS node, I have yet to code the interface for kinematics. So this will be the top priority for next week.

One other thing I want to do is to improve the RRT algorithm’s nearest-neighbor search.
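For context, the usual baseline for this step in RRT is a linear scan over every node in the tree. The sketch below shows that baseline only; it assumes nodes are stored as 3D integer tuples, and the name `nearest_node` is hypothetical. The report does not describe the current implementation or the planned improvement.

```python
def nearest_node(nodes, target):
    """Return (index, node) of the node closest to target.

    Compares squared Euclidean distances, which gives the same ordering as
    true distances without needing a square root.
    """
    best_index = -1
    best_dist = None
    for i, node in enumerate(nodes):
        d = sum((a - b) ** 2 for a, b in zip(node, target))
        if best_dist is None or d < best_dist:
            best_index, best_dist = i, d
    if best_index < 0:
        raise ValueError("nodes must not be empty")
    return best_index, nodes[best_index]


# Hypothetical tree nodes and sample point, for illustration only.
tree_nodes = [(0, 0, 0), (4, 4, 4), (1, 2, 2)]
idx, node = nearest_node(tree_nodes, (2, 2, 2))
```

Each query costs time proportional to the number of nodes, so the cost grows as the tree grows. Spatial indexes such as k-d trees or uniform grids are common ways to avoid scanning every node.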

Extra reflections

As you’ve designed, implemented, and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

Well, I’ve learned quite a few things.

ROS, for one, since I had never used it before and it is necessary for our robotics project. Learning to use others’ ancient ROS code, port it to ROS 2, and write my own ROS code that works with it was much harder than I initially anticipated. This involved many hours of reading ROS/ROS 2 documentation and searching GitHub, Stack Overflow, and the ROS Dev Google Groups.

I also learned some other things that I appreciate. For example, I learned about OctoMap, a robust and commonly used mapping library. I also learned more about FPGAs and HLS by reading the 18-643 slides, setting up a Xilinx Vitis development environment on my local machine, and playing with it.

Along the way, I found that the most helpful learning strategy was to avoid going deep into the rabbit holes I encountered. Instead, getting a high-level idea of what something is and building a prototype as soon as possible to see if it works proved to be a good idea.