Risks:

The most significant risk to our project is inaccuracy in the room scan when obstacles block the LiDAR sensor's view. To mitigate this risk, we plan to let users move the stand to a new position and take additional scans whenever tall objects obstruct the view.
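
One way to combine those additional scans is to express every scan's points in the coordinate frame of the stand's first position. This is a minimal sketch. It assumes the user records how far the stand was moved (dx, dy, in millimetres) and how much it was rotated (yaw) between positions. `StandPose`, `to_room_frame`, and `merge_scans` are hypothetical names. In practice, hand-measured offsets are approximate and may need refinement before the merged cloud lines up cleanly.

```python
import math
from dataclasses import dataclass

Point3D = tuple[float, float, float]


@dataclass
class StandPose:
    """Hypothetical pose of the stand relative to its first position.

    dx and dy are in millimetres and yaw_deg is the rotation about the
    vertical axis. All three are assumed to be measured by the user.
    """
    dx: float = 0.0
    dy: float = 0.0
    yaw_deg: float = 0.0


def to_room_frame(points: list[Point3D], pose: StandPose) -> list[Point3D]:
    """Rotate points about the z axis by yaw, then translate by (dx, dy)."""
    c = math.cos(math.radians(pose.yaw_deg))
    s = math.sin(math.radians(pose.yaw_deg))
    return [
        (c * x - s * y + pose.dx, s * x + c * y + pose.dy, z)
        for x, y, z in points
    ]


def merge_scans(scans: list[tuple[list[Point3D], StandPose]]) -> list[Point3D]:
    """Combine scans taken from several stand positions into one point cloud."""
    merged: list[Point3D] = []
    for points, pose in scans:
        merged.extend(to_room_frame(points, pose))
    return merged
```

For example, suppose a wall point is seen 1000 mm ahead from the first position and 500 mm ahead after the stand is moved 500 mm toward it. Both readings then map to the same room coordinate.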

Design Changes:

A significant change to our initial design is a stand that captures sensor data at various heights. The stand itself is stationary and set to a fixed height, while the scanner moves up and down along it on an elevator-type mechanism. We chose a stationary stand for two reasons. First, the RPLiDAR sensor captures data one planar slice at a time as it rotates, so moving it vertically lets us stack slices. Second, a stationary stand is easier and more cost-effective for us to build.
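
Each rotation yields a ring of (angle, distance) readings. Tagging each ring with the elevator's height at that moment turns the stacked rings into a 3D point cloud. This is a minimal sketch. It assumes angles in degrees, distances in millimetres, a distance of 0 marking a missing return, and an elevator that can report its current height. `slice_to_points` and `build_point_cloud` are hypothetical helpers, not part of any RPLiDAR SDK.

```python
import math

Point3D = tuple[float, float, float]


def slice_to_points(
    readings: list[tuple[float, float]], height_mm: float
) -> list[Point3D]:
    """Convert one planar scan into 3D points at the given elevator height.

    Each reading is (angle_deg, distance_mm). A distance of 0 or less is
    treated as "no return" and skipped. The angle is assumed to increase
    counter-clockwise from the +x axis. The sensor's real convention should
    be checked against its documentation.
    """
    points: list[Point3D] = []
    for angle_deg, distance_mm in readings:
        if distance_mm <= 0:
            continue  # invalid reading at this angle
        theta = math.radians(angle_deg)
        points.append(
            (distance_mm * math.cos(theta), distance_mm * math.sin(theta), height_mm)
        )
    return points


def build_point_cloud(
    slices: dict[float, list[tuple[float, float]]]
) -> list[Point3D]:
    """Stack slices keyed by elevator height (mm), lowest first."""
    cloud: list[Point3D] = []
    for height_mm in sorted(slices):
        cloud.extend(slice_to_points(slices[height_mm], height_mm))
    return cloud
```

Converting each slice independently keeps the elevator logic separate from the geometry. A slice can therefore be processed as soon as the scanner reaches each height.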

Schedule Changes and Updates:

We have no major schedule changes.

Weekly Questions:

Part A was written by Grace, Part B by Zuhieb, and Part C by Alana.

Part A:

Our product addresses public health, safety, and welfare concerns by providing a convenient way to plan and redesign a home. It reduces both the time spent visiting stores in person and the physical labor of rearranging furniture. By allowing users to visualize and plan their living spaces virtually, TailorBot promotes psychological well-being by alleviating the stress and physical strain typically associated with traditional home design. Additionally, by reducing physical labor, the product improves user safety, minimizing the risk of injuries or accidents during furniture rearrangement. Overall, TailorBot enhances the well-being and safety of individuals by providing an accessible and efficient solution for home redesign.

Part B:

Our product will allow users to visualize and customize their living spaces, giving people from many different social groups a shared way to plan a space together. Virtualizing the living space lets many individuals collaborate on its layout, a task that traditionally only a few people could take part in. Because TailorBot is a time- and cost-effective solution, people across many social classes can access it. In this way, TailorBot can help make any space more inclusive.

Part C:

Our product would be an incredibly useful tool for interior designers, both professional and hobbyist. It could save them a lot of time otherwise spent moving furniture back and forth to check whether it matches the visualization in the designer's head, or redrawing 2D sketches to reflect adjustments. It could also make coordinating with home movers more efficient by giving them a better idea of how furniture should be placed, which in turn could save clients time and money. Should the project's scope expand beyond our current timeline, furniture companies in particular could collaborate with us by scanning in their own products. This could let users see how those products might look in their homes and spark interest in purchasing them, much like a 3D buyer's catalog.

Developments:

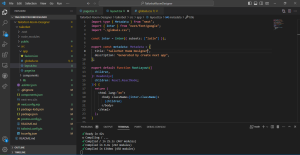

While there were no changes to our schedule going forward, we all worked together to start implementing the data output from the RPLiDAR scanner earlier than expected. This will hopefully speed up the integration and prototyping stages, but only time will tell. Aside from that, we all spent a lot of time researching topics that would make integrating the data easier and inform future aspects of the design, such as the change from a remote-controlled scanner stand to a stationary one.

Here is a picture of the output from the RPLiDAR scanner while it is active: RPLiDAR Scanner Output
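
Logging each measurement to a plain CSV file is one simple way to hand the scanner's output to the later integration and prototyping stages. This is a minimal sketch. It assumes measurements arrive as (quality, angle_deg, distance_mm) tuples, the shape yielded per scan by the third-party `rplidar` Python package's `RPLidar.iter_scans()`. The function name `scan_to_csv` and the default `min_quality` threshold are hypothetical choices, not values from our setup.

```python
import csv
import io

Measurement = tuple[int, float, float]  # (quality, angle_deg, distance_mm)


def scan_to_csv(
    scan: list[Measurement], height_mm: float, min_quality: int = 1
) -> str:
    """Serialize one scan to CSV text, tagged with the elevator height.

    Readings below min_quality, or with a distance of 0 (no return at that
    angle), are dropped.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["height_mm", "angle_deg", "distance_mm", "quality"])
    for quality, angle_deg, distance_mm in scan:
        if quality < min_quality or distance_mm <= 0:
            continue
        writer.writerow([height_mm, angle_deg, distance_mm, quality])
    return buffer.getvalue()
```

With the sensor connected, a loop over its scans could pass each one to this function along with the elevator's current height. The resulting text could then be appended to a file.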