This week was spent working through some of the final design choices for the stand. We have chosen to continue with the scissor lift, as the rotating mount complicates the calculations (and we have already been able to process a slice of a room from the scanner and generate a 3D rendering of it). I have therefore been looking for ways to build the lift, speaking with people from robotics and MechE. I was told that laser cutting the lift would be best, so I prepared a design file that is ready to be cut and then assembled. I have also worked on getting the Raspberry Pi to work for our project. Overall, I feel we are on schedule, but I might need to put in extra work on the lift during spring break. I hope to have tested the laser-cut scissor lift by next week so I can modify and upgrade the design if needed.

Alana’s Status Report for 2/24/24

Personal Accomplishments:

I spent a lot of this week building up the web app, integrating the output from the lidar, and researching Three.js, since Grace has been able to generate 3D models with PyVista from that output. I don’t have anything too exciting to show visually, as most of the work went into getting more familiar with the systems or into the code rather than the UI itself.

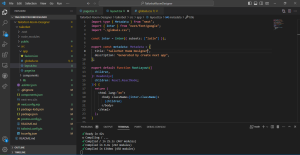

As for big changes, I decided to switch component libraries. At first, I was using Next.js with a component library called shadcn/ui, as it was very popular and Next.js has a lot of nice backend features right out of the box, compared to regular React, that I wanted to have in case they became useful later. However, I found Next.js and shadcn/ui more difficult to work with than regular React. It was the first time I had worked with either, and while they were both very similar to React and MUI, the component library I had used previously, I wasn’t happy with the speed of my work. As such, I’ve decided to go with MUI and regular React due to my familiarity with them. Next.js and shadcn/ui weren’t impossible to work with, but I hope the change will help me make faster progress in the future.

Progress:

Currently, I am on schedule. I’ll keep at it.

Schedule Status: On time.

Next Week’s Deliverables:

Next week I hope to have Three.js integrated into the web app. It may not work perfectly, but I at least want it to reach a state where it can interact with the web app code I already have in place.

Grace’s Status Report 2/24/2024

This week I continued working with the LiDAR data to generate a 3D mesh model for our project. I developed code to filter the data by removing points that are out of bounds, which improves the accuracy of the resulting model. Additionally, I implemented code to simulate the process of taking slices of a room at different heights. To test the functionality, I captured a horizontal slice of my room using the LiDAR and replicated it using various z values to generate a point cloud. I used PyVista to create a triangulated surface from the points and saved the resulting mesh to an STL file, which can be used for integration with our web application. This week, I also worked with my team to start the design report.
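The filtering and slice-replication steps described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the project's actual code: the function names, the rectangular bounds, and the sample coordinates (in millimeters) are all hypothetical.

```python
def filter_in_bounds(points, x_range, y_range):
    """Keep only (x, y) points that fall inside the given inclusive bounds."""
    (x_min, x_max), (y_min, y_max) = x_range, y_range
    return [
        (x, y)
        for x, y in points
        if x_min <= x <= x_max and y_min <= y <= y_max
    ]


def stack_slice(points_2d, z_values):
    """Replicate one horizontal slice at each height to form a 3D point cloud."""
    return [(x, y, z) for z in z_values for x, y in points_2d]


# Hypothetical slice: the last point lies far outside the room and is dropped.
slice_2d = [(0.0, 0.0), (1500.0, 200.0), (9000.0, 50.0)]
clean = filter_in_bounds(slice_2d, x_range=(-3000.0, 3000.0), y_range=(-3000.0, 3000.0))

# Simulate scans at three heights by reusing the same slice.
cloud = stack_slice(clean, z_values=[0.0, 100.0, 200.0])
print(len(cloud))  # 2 surviving points x 3 heights = 6
```

The resulting list of (x, y, z) tuples is the kind of point set that a meshing library could then turn into a surface.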

The progress made in processing data and generating the mesh is currently on schedule. Next week, I aim to improve the model by using code to fill in gaps present in the data and refine the mesh using PyVista to achieve a smoother surface. I also hope to find a way to combine data from the LiDAR when it is placed at different locations in the room.

Team Status Report for 2/24/24

Risks:

Using an electrically controlled, laser-cut scissor lift instead of a rotating stand makes it easier to process the readings from the scanner, as only minimal calculations are needed to figure out the z-position of each scan. However, by moving to this design, we run the risk of relying on the stability of the scissor lift to move the scanner vertically with accuracy. To manage this risk, we need to test the sturdiness of the material we would use for the lift. Should we not get the lift working, we would mechanically lift the scanner.
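As a rough illustration of why the z-position calculation stays small with a scissor lift, the height of an idealized scissor mechanism follows directly from the arm length and how far apart the arm ends are at the base. The sketch below assumes rigid, equal-length arms and no slack in the joints; the parameter names and the example dimensions are hypothetical, not measurements of the actual lift.

```python
import math


def lift_height(arm_length_mm, base_span_mm, stages=1, base_offset_mm=0.0):
    """Height of an idealized scissor lift.

    Each stage forms a right triangle whose hypotenuse is the arm and whose
    horizontal leg is the distance between the arm ends at the base, so one
    stage contributes sqrt(arm_length^2 - base_span^2) of height.
    """
    if not 0 <= base_span_mm <= arm_length_mm:
        raise ValueError("base span must be between 0 and the arm length")
    per_stage = math.sqrt(arm_length_mm**2 - base_span_mm**2)
    return base_offset_mm + stages * per_stage


# Hypothetical two-stage lift with 200 mm arms and the base ends 120 mm apart.
z_mm = lift_height(200.0, 120.0, stages=2)
```

With this model, the z value attached to each scan depends only on the base span the actuator has set, which is why any wobble or flex in the real lift translates directly into height error.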

Design Changes:

We have decided to go back to the idea of using a scissor lift to move our scanner while it gathers data. We received feedback that it would be easier to implement and work with than the rotation method. While we haven’t pinpointed the exact cost yet, we hope to lower costs by taking advantage of school resources from classes we are in. Should that fail, the supplies overall should not be too costly for our budget.

Schedule Changes and Updates:

We adjusted our schedule to include a section for lidar data processing so that we could include a clearer timeline for this component of our project. The next week will be focused on making changes to the code to generate a smoother, more defined model.

Developments:

This week’s progress was mostly about getting the important parts of our project to work and laying the groundwork for integrating them moving forward. Creating a 3D point cloud is the most visually exciting thing we have to offer this week, but the rest of the work is going towards making future stages run more smoothly.

Alana’s Status Report for 2/17/24

Personal Accomplishments:

This week the group as a whole received feedback that we should integrate the data collection from our rplidar scanner into the system as soon as possible, so we’d have a clearer idea of how everything would work together for the design review. We got this feedback early in the week. My plan for the week had been to make sketches of the UI so I could start prototyping next week. However, I decided that my time would be better spent helping the others get the rplidar scanner output. After that, I spent the rest of the week researching the best ways of bringing that data into the UI. I looked into packages like PyVista and Three.js, open-source packages designed to display 3D data. I hope to use this research to help with the upcoming prototyping phase. I did manage to pick Next.js as my web app base and used the React component library shadcn/ui to get it up and running.

Progress:

I plan to use shadcn/ui to easily build a presentable interface that I can go back and edit as needed. UI is built around function as much as form. While having detailed plans for the UI would have been nice, with us still being in the early stages of our project, I realized the exact design specs the UI needed to account for kept changing, making it difficult to design. I’ve decided to keep the UI as basic as possible so I can easily change it as the needs of the hardware parts of the design change. I plan to create a more cohesive look once all of my frontend and backend integration is done.

Despite this, I’m still on schedule, as this week was my time for planning and research. I just focused more on the research part. As I move into making the prototype, I hope to start building the basic functionality of the UI (navigating to different pages, getting buttons to work, etc.) and see if I can get it to integrate with the rplidar scanner’s output data.

Schedule Status: On time.

Next Week’s Deliverables:

Next week I hope to start actually building the basic functionality of the website and establish the connection between it and the rplidar scanner so the website can access that data. That way we can build off it for the 3D models of the room later on.

Zuhieb’s Status Report for 2/17/2024

I spent the first portion of this week researching the modules and applications we would use to build the project. I followed a pipeline we agreed to during one of our meetings.

I was able to find libraries (PyVista and Three.js) to help go from the 3D mapper module to the interactive rendering in the web application. Once we showed these systems would work, I shifted my focus to researching a mechanism to lift the stand of the scanner. We may decide to shift to another system that doesn’t involve lifting the scanner, which would save costs. We are on track according to our schedule. By next week I hope to start implementing the mechanism to move the scanner, as well as the creation of the 3D mesh from the data gathered by the scanner.

Grace’s Status Report 2/17/2024

This week, I focused on initiating the development of the codebase to process data from the LiDAR sensor. As part of this process, I began writing code to convert the data received from the sensor, which includes angles and distance for each sample, into points that form a point cloud. For this process, I used the RPLidar-roboticia module, which is a module designed for working with the RPLidar laser scanner. I successfully collected data from the lidar sensor and converted it into a set of 3D points in an array.
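The angle-and-distance conversion described above comes down to a polar-to-Cartesian transform. A minimal sketch, assuming each sample arrives as an angle in degrees and a distance in millimeters, and that a distance of zero marks an invalid reading (the function names and sample values are hypothetical, and no scanner library is called here):

```python
import math


def polar_to_point(angle_deg, distance_mm, z_mm=0.0):
    """Convert one lidar sample (angle, distance) into an (x, y, z) point."""
    theta = math.radians(angle_deg)
    return (distance_mm * math.cos(theta), distance_mm * math.sin(theta), z_mm)


def scan_to_points(samples, z_mm=0.0):
    """Convert (angle_deg, distance_mm) samples to points, skipping zero distances."""
    return [polar_to_point(a, d, z_mm) for a, d in samples if d > 0]


# Hypothetical samples; the third has no valid return and is skipped.
samples = [(0.0, 1000.0), (90.0, 2000.0), (180.0, 0.0)]
points = scan_to_points(samples, z_mm=100.0)
```

Every point from one rotation shares the same z value, which is the height of the scanner when that slice was captured.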

In the next week, I hope to finalize the code for generating the point cloud from the LiDAR sensor data. I also hope to delve into testing the creation of a 3D mesh using PyVista.

Team Status Report for 2/17/24

Risks:

The most significant risk to our project is the potential for inaccuracies in the room scan due to obstacles obstructing the LiDAR sensor’s view. To mitigate this risk, we plan to implement a system where users are able to move the stand to obtain additional scans if tall objects obstruct the view.

Design Changes:

A significant change to our initial design involves the implementation of a stand to capture sensor data points at various heights. While the stand would be stationary and set to a certain height, the scanner would move up and down along it on an elevator-type mechanism. This decision to incorporate a stationary stand stems from the RPLiDAR sensor’s ability to capture data in vertical slices as it rotates, and from the stand being easier and more cost-effective for us to build.

Schedule Changes and Updates:

We don’t really have any big schedule changes.

Weekly Questions:

Part A was written by Grace, Part B was written by Zuhieb, and Part C was written by Alana.

Part A:

Our product solution addresses public health, safety, and welfare concerns by providing a convenient means for home planning and redesign, thereby reducing the time spent physically visiting stores and the physical labor associated with rearranging furniture. By allowing users to visualize and plan their living spaces virtually, TailorBot promotes psychological well-being by alleviating the stress and physical strain typically associated with traditional home design processes. Additionally, by reducing physical labor, the product contributes to the safety of users, minimizing the risk of injuries or accidents that may occur during furniture rearrangements. Overall, TailorBot enhances the well-being and safety of individuals by providing an accessible and efficient solution for home redesign.

Part B:

Our product will allow users to visualize and customize their living spaces, which can bring together many different social groups. By virtualizing the living space, our product allows many individuals to collaborate on planning out a space, a task that previously only a few could participate in. Because our solution is time- and cost-effective, TailorBot gives people of many social classes access to the product. TailorBot allows any space to become a more inclusive space.

Part C:

Our product would be an incredibly useful tool for interior home designers, both professional and hobbyist. The product could save them a lot of time otherwise spent going back and forth moving furniture to see if it matches the visualization in the designer’s head, or redrawing 2D sketches to take adjustments into account. It could make coordinating with home movers more efficient by giving them a better idea of how furniture could be placed. This in turn could save clients money and time when collaborating with them. Should the project’s scope expand beyond our current timeline, furniture companies especially could collaborate with our project by scanning in their own products. This could allow users to see how those products might look in their home and make them interested in purchasing them, like a 3D buyer’s catalog.

Developments:

While there were no changes to our schedule going forward, we all worked together to get started on implementing the data output from the rplidar scanner earlier than expected. This will hopefully make the integration and prototyping stages faster, but only time will tell. Aside from that, we all spent a lot of time researching various things that would make integrating the data easier and inform future aspects of the design, such as the change from a remote-controlled scanner stand to a stationary one.

Here’s a picture of the output from the rplidar scanner while it is active: Rplidar Scanner Output

Zuhieb’s Status Report for 2/10/2024

I spent the majority of the week researching ways to implement a remote-controlled mounting system for our camera and sensor. However, with the presentation feedback, my team and I have decided to pivot from that and go towards a simpler stand that can be vertically adjusted. This will be sufficient to get a scan of the room to be able to render it on a web application. I feel I am on schedule, as this is the week we plan to heavily focus on building the product. By early next week, I hope to have a set list of components to be ordered and I hope to have a planned circuit for how the mount would work.

Alana’s Status Report for 2/10/24

Personal Accomplishments:

Most of my time this week has been spent researching the best way to implement the web app part of our project. For this, I’ve decided to go with React, a user interface library that uses JavaScript code to easily implement UI components like buttons, dashboards, and video players, among other things. I feel confident in this choice, as React is a very common tool in web development and I have a lot of experience with it from a past project.

I took the time to set up a GitHub Repository for the project to save different versions of the website as I work and already set up a React environment in VSCode to program everything in HTML5.

Progress:

Initially, our schedule gave me 2 weeks to work on UI. That schedule was mainly a rough draft and after talking with my partners, we made some adjustments to it. Part of that meant fleshing out what I was doing and I got more time to plan things out. Because of that, I’m on schedule.

Schedule Status: On time.

Next Week’s Deliverables:

Next week I hope to get some more finalized sketches or drafts for the pages to take into the prototyping stage. I plan to sketch things out with the use of Figma, a website often used for application design. I’ve used that in conjunction with React before so I feel confident in using it.