The most significant risks to the success of the project concern the environment of our setup on demo day. Specifically, our system relies on consistent, not-too-bright lighting to function optimally; non-ideal lighting conditions would result in faulty detection of the cue ball or other objects. To manage and mitigate this risk, we made very specific requests about the area where we want our project to be located, and we modified our code so that we can make on-the-fly parameter adjustments based on the lighting conditions we are given. No large changes were made to the existing design of the system; most of what we did this week was testing, verification, tweaking, small optimizations, and cleanup. No change to the schedule is needed: we're on track and proceeding as planned.

Unit Tests:

Cue Stick Detection System:

- Cartesian-to-polar

- Checking image similarity (pixel overlap)

- Frame history queue insertion, deletion, dequeuing

- Computing slope

- Polar-to-cartesian point

- Polar-to-cartesian line

- Walls and pool table masking

- Extracting point pairs (rectangle vertices) from boxPoints

- Primary findCueStick function

Ball Detection System:

- getCueBall function

- Point within wall bounds

- Green masking of pool table

- Creating mask colors

- getBallsHSV

- Finding contours of balls after HSV mask applied

- Remove balls within pockets

- Point-to-line distance

- Ball colliding with or adjacent to walls

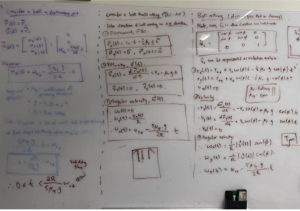

Physics Engine/System:

- Normal slope

- Point-to-line distance

- Checking if point out of pool table bounds

- Line/trajectory intersection with wall

- Reflected points, aim at wall

- Finding intersection points of two lines

- Extrapolate output line of trajectory

- Point along trajectory line (or within some distance d of it)

- Find new collision point on trajectory line

- Intersection of trajectory line and ball

- Main run_physics
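As an illustration of what one of these unit tests covers, here is a minimal sketch of a point-to-line distance helper together with the kind of checks we run against it. The function name `point_to_line_distance` and its signature are hypothetical and chosen for this example; they are not necessarily what our physics code uses.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def point_to_line_distance(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from point p to the infinite line through a and b.

    Hypothetical helper for illustration only. If a and b coincide, the
    "line" is a single point, so fall back to point-to-point distance.
    """
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    # |cross product of (b - a) and (p - a)| divided by |b - a|
    return abs(dx * (py - ay) - dy * (px - ax)) / length


# Example checks in the style of our unit tests
assert point_to_line_distance((0, 5), (0, 0), (10, 0)) == 5.0
assert math.isclose(point_to_line_distance((1, 0), (0, 0), (1, 1)), math.sqrt(2) / 2)
```

The degenerate case (both line points identical) is handled explicitly so the helper never divides by zero.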

System Tests:

Cue Stick Detection System:

- Isolated stick, no balls

- Stick close, next to cue ball

- Stick among multiple balls

- Random configurations (10x)

- Full-length stick

- Front of stick, at table edges (5x)

- Different lighting conditions

- IMU accelerometer request-response

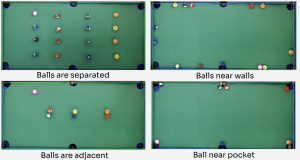

Ball Detection System:

- Random ball configurations (20x)

- Similar colored balls close together (e.g. cue ball + yellow stripe + yellow ball)

- Balls near pockets

- Balls near walls

- Different lighting conditions

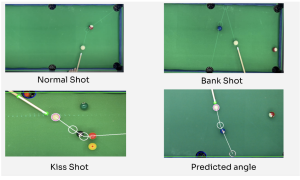

Physics Engine/System:

- Kiss-shot: cue ball – ball – ball – pocket (20x)

- Bank shot: cue ball – ball – wall (20x)

- Normal shot: cue ball – ball – pocket (20x)

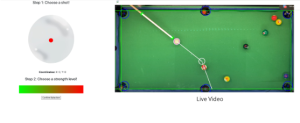

Webapp System:

- Spin shot location request-response

- End-to-end latency (processing each frame)

Findings:

- We were well under the latency requirement (22 ms << 100 ms), which allowed us to take more liberties in increasing detection quality at the cost of some computational efficiency.

- Testing under different lighting conditions forced us to change how detection is done: we used to rely heavily on color detection, and now rely much more on contours, polygon approximation, thresholding, masking, etc. This also led us to parametrize the detection settings that still rely on color, so we can modify them on the fly if needed.

- We utilized “frame averaging” and/or remembering past frames. Because detection happens quickly (22 ms), we are able to save a few past frames and do computation on them to “fill in gaps”. This also helped us calibrate the setup and improve all of our detection systems by considering both current and past frame data when discerning and tracking objects.
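The idea of remembering past frames to fill in gaps can be sketched as follows. This is a simplified illustration, not our actual implementation: the class name `DetectionHistory`, the default of 5 frames, and the choice to average (x, y) positions rather than raw pixels are all assumptions made for the example.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Point = Tuple[float, float]


class DetectionHistory:
    """Keeps the last few per-frame detections of one object (hypothetical sketch)."""

    def __init__(self, max_frames: int = 5) -> None:
        # A bounded deque drops the oldest frame automatically once it is full.
        self._history: Deque[Optional[Point]] = deque(maxlen=max_frames)

    def push(self, detection: Optional[Point]) -> None:
        """Record this frame's detection; None means the object was missed."""
        self._history.append(detection)

    def smoothed(self) -> Optional[Point]:
        """Average the remembered positions, skipping frames with no detection."""
        hits = [p for p in self._history if p is not None]
        if not hits:
            return None
        n = len(hits)
        return (sum(x for x, _ in hits) / n, sum(y for _, y in hits) / n)


history = DetectionHistory(max_frames=3)
history.push((10.0, 10.0))
history.push(None)           # detection dropped out for one frame
history.push((12.0, 14.0))
print(history.smoothed())    # still yields a position despite the missed frame
```

Because each frame takes roughly 22 ms to process, a short window like this adds little delay while smoothing over single-frame dropouts.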