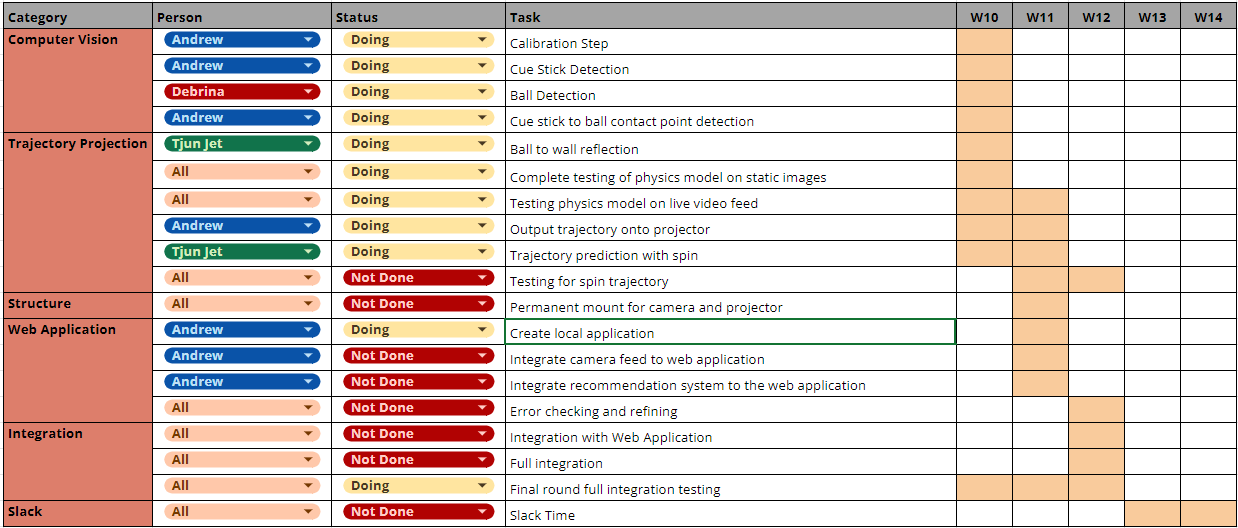

This week, our team is on schedule. We held our initial project demo and received helpful feedback on improvements we could make to our system. We also got the projection running and displayed the trajectories on the table. The main focus of this week, however, was testing and continued work toward full integration of our system. We ran tests and simulated different cases to identify any that yield undesired behavior. We also planned a more formal and rigorous approach to testing and verification that aligns with our initial design plans. These plans are outlined below.

The most significant risk we face now is that our trajectory predictions may not be as accurate as we hope. If that happens, the inaccuracies would most likely stem from the ‘perfect’ assumptions in our physics model. We would then need to modify the model to account for imperfections such as imperfect (inelastic) collisions and losses due to friction.
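As a rough illustration of what relaxing a ‘perfect’ assumption could look like, the sketch below reflects a ball's velocity off a wall with a coefficient of restitution. The function name `reflect_off_wall` and the `restitution` parameter are hypothetical and not taken from our codebase; a value of 1.0 reproduces the ideal elastic bounce, while smaller values lose speed along the wall normal. Friction is not modeled here.

```python
def reflect_off_wall(velocity, wall_normal, restitution=1.0):
    """Reflect a 2D velocity off a wall.

    velocity: (vx, vy) of the ball before impact.
    wall_normal: unit vector (nx, ny) pointing away from the wall.
    restitution: hypothetical tuning parameter in [0, 1];
                 1.0 is a perfectly elastic bounce.
    """
    vx, vy = velocity
    nx, ny = wall_normal
    # Component of the velocity along the wall normal.
    vn = vx * nx + vy * ny
    # Reverse the normal component and scale it by the restitution;
    # the tangential component is left unchanged.
    scale = (1.0 + restitution) * vn
    return (vx - scale * nx, vy - scale * ny)
```

If measured bounces turn out to be consistently shallower or slower than predicted, a single parameter like this would be one place to start tuning.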

This week, we have not made any modifications to our system’s existing design.

In the following week, we plan to conduct more rigorous testing of our system. We also plan to do some woodworking to build a permanent mount for our camera and projector, once manual calibration has determined an ideal position for them.

The following is our outlined plan for testing:

Latency

To test latency, we plan to time the code execution for each frame programmatically. We will log the begin and end times of each iteration of our backend loop using Python’s time module. As soon as a frame comes in from the camera via OpenCV, we will record the time returned by time.perf_counter (time.clock was removed in Python 3.8) in a log. Once the predictions for that frame have been generated, we will record the time again and append it to the log. We intend to keep this running while we test the different possible collision cases (ball to ball, ball to wall, and ball to pocket). Next, we will compute the difference (in milliseconds) between the start and end times of each iteration. Our use-case requirement for latency is that end-to-end processing completes within 100ms. By ensuring that each individual frame takes less than 100ms to pass through the entire software pipeline, we ensure that the user perceives changes to the projected predictions within 100ms, which makes the predictions appear instantaneous.
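The timing procedure above can be sketched as follows. This is a minimal illustration rather than our actual backend loop: `time_frames`, `process_frame`, and `budget_ms` are hypothetical names, and `frames` stands in for whatever the camera loop yields. The sketch uses `time.perf_counter`, since `time.clock` is no longer available in Python 3.8 and later.

```python
import time

def time_frames(frames, process_frame, budget_ms=100.0):
    """Time process_frame on each frame.

    Returns (latencies_ms, over_budget), where over_budget lists the
    indices of frames whose processing exceeded budget_ms.
    process_frame is a placeholder for the full software pipeline
    (detection, physics, prediction output).
    """
    latencies_ms = []
    for frame in frames:
        start = time.perf_counter()   # frame has just arrived
        process_frame(frame)          # run the whole pipeline
        end = time.perf_counter()     # predictions are ready
        latencies_ms.append((end - start) * 1000.0)
    over_budget = [i for i, ms in enumerate(latencies_ms) if ms > budget_ms]
    return latencies_ms, over_budget
```

Keeping the per-frame latencies, rather than only an average, lets us check that every single frame meets the 100ms requirement and see which collision cases are slowest.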

Trajectory Prediction Accuracy

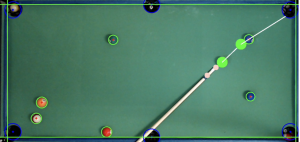

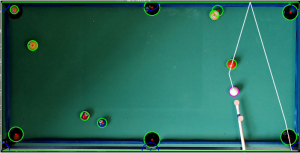

We plan to verify trajectory prediction accuracy manually. The testing plan is as follows: we will take 10 straight-on shots and record them with the mounted camera. To ensure that the shots are straight, we will use a pool cue mount to hold the stick steady. We will then go through the footage by hand, trace the actual trajectory of each ball struck, and compare it to the predicted trajectory. The angle between these two trajectories is the deviation. The angle deviations from all 10 shots will be averaged, and this average will be our final value to compare against the use-case specification of <= 2 degrees error.
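Once the predicted and actual trajectories have been traced, the deviation and its average could be computed along the lines of the sketch below. It assumes each trajectory is reduced to a 2D direction vector (for example, end point minus start point in image coordinates); the function names are hypothetical.

```python
import math

def angle_deviation_deg(predicted, actual):
    """Unsigned angle in degrees between two 2D direction vectors."""
    px, py = predicted
    ax, ay = actual
    cross = px * ay - py * ax   # proportional to sin(angle)
    dot = px * ax + py * ay     # proportional to cos(angle)
    return abs(math.degrees(math.atan2(cross, dot)))

def mean_deviation_deg(pairs):
    """Average deviation over (predicted, actual) direction pairs."""
    deviations = [angle_deviation_deg(p, a) for p, a in pairs]
    return sum(deviations) / len(deviations)
```

Using `atan2` on the cross and dot products avoids normalizing the vectors and stays numerically stable for the small angles we expect to measure.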

Object Detection Accuracy

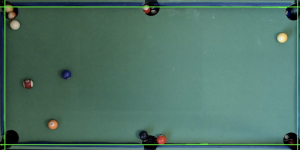

For object detection accuracy, our planned test measures the offset between each projected object and the corresponding real object on the pool table. Since the entire image is scaled uniformly, we will use projections of the pool balls to test the object detection accuracy. The test will be conducted as follows: we will set up the pool table in multiple ball configurations, then capture a frame and run our computer vision system on it. Next, we will project the resulting image, with the detected objects drawn in, over the actual pool table. We will take a picture and manually measure the distance between the center of each real pool ball and the center of its projection. This will verify that our object detection model is accurate and correctly captures the state of the pool table.
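After the ball centers have been marked in the picture, the offsets could be tabulated with a small helper like the one below. This is only a sketch: `center_offsets` and `mm_per_pixel` are hypothetical names, the two lists are assumed to be in the same ball order, and the scale factor would have to come from a known reference length in the photo.

```python
import math

def center_offsets(real_centers, projected_centers, mm_per_pixel=1.0):
    """Distance between each real ball center and its projected center.

    Both arguments are lists of (x, y) pixel coordinates in matching
    order. mm_per_pixel is an assumed scale factor; with the default
    of 1.0 the result stays in pixels.
    """
    if len(real_centers) != len(projected_centers):
        raise ValueError("center lists must have the same length")
    return [
        math.hypot(rx - px, ry - py) * mm_per_pixel
        for (rx, ry), (px, py) in zip(real_centers, projected_centers)
    ]
```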