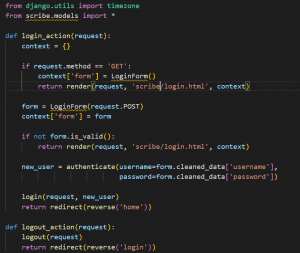

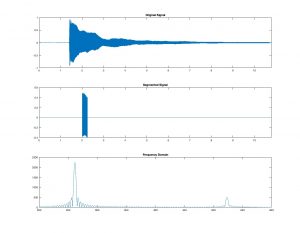

This week I started integrating VexFlow into the front end of our web app. My team had already planned out the format of the HTTP response containing the note information, so I was able to work on this in parallel with my teammates, who are handling the back-end integration of the frequency and rhythm processors.

I used the VexFlow API from https://github.com/0xfe/vexflow. I experimented with downloading the library manually and with installing it via npm, but decided the simplest approach was to load it with a `<script>` tag that links to the unpkg build provided in the VexFlow tutorial.

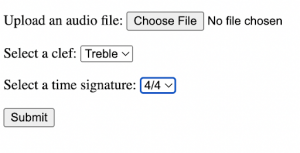

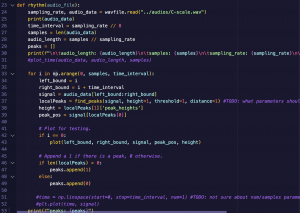

The plan is for the back end to send a list of Note objects containing the pitch and duration data for each note. I started with just the pitch information, assigning every note a quarter-note duration to minimize errors. This worked, but I ran into an obstacle: VexFlow requires us to manually create each new “Stave”, which in our app holds one set of 4 beats from the signal. As a result, the app can currently transcribe only 4 notes at a time. My plan for next week is to write code that determines how many Staves are needed based on the number of beats in the input signal.
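
As a rough sketch of next week's plan, the stave count can be derived from the total number of beats, and the note list can be split into 4-beat groups to draw one Stave per group. This is only an illustration under some assumptions: the Note shape (`pitch`, `duration`) and the function names are hypothetical, durations are assumed to use VexFlow-style codes (`"w"`, `"h"`, `"q"`, `"8"`, `"16"`), and the time signature is assumed to be 4/4.

```javascript
// Beats per duration code, assuming 4/4 time (a quarter note gets one beat).
const BEATS_BY_DURATION = { w: 4, h: 2, q: 1, "8": 0.5, "16": 0.25 };

// Look up the beat length of a note; the Note shape
// { pitch: "c/4", duration: "q" } is a hypothetical stand-in
// for whatever the back end actually sends.
function beatsOf(note) {
  const beats = BEATS_BY_DURATION[note.duration];
  if (beats === undefined) {
    throw new Error(`Unknown duration: ${note.duration}`);
  }
  return beats;
}

// Number of 4-beat staves needed to hold every beat in the input.
function countStaves(notes, beatsPerStave = 4) {
  const totalBeats = notes.reduce((sum, note) => sum + beatsOf(note), 0);
  return Math.ceil(totalBeats / beatsPerStave);
}

// Greedily group notes so that no group exceeds beatsPerStave.
// A note that does not fit in the current group starts a new one.
function splitIntoMeasures(notes, beatsPerStave = 4) {
  const measures = [];
  let current = [];
  let used = 0;
  for (const note of notes) {
    const beats = beatsOf(note);
    if (current.length > 0 && used + beats > beatsPerStave) {
      measures.push(current);
      current = [];
      used = 0;
    }
    current.push(note);
    used += beats;
  }
  if (current.length > 0) measures.push(current);
  return measures;
}
```

With the current all-quarter-note setup, six notes would need two staves, holding four and two notes respectively. Once real durations arrive, a group may come up short or a note may not fit in the remaining space, so the greedy split can produce more groups than `countStaves` reports. Handling that properly (padding with rests or splitting a note across groups) is left out of this sketch.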

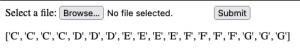

I also fixed a major bug in the pitch processor: the math used to determine which note was played often introduced rounding errors, producing an output one half step away from the intended note (for example, reading a D as a D#).
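
To illustrate the kind of rounding issue involved, here is a minimal sketch of a frequency-to-note conversion. It is not our actual pitch processor code: the function name, the A4 = 440 Hz reference, and equal temperament are all assumptions made for this example. The key point is rounding the semitone number to the nearest integer instead of truncating or rounding up.

```javascript
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Hypothetical helper: map a frequency in Hz to the nearest note name.
// Assumes equal temperament with A4 = 440 Hz (MIDI note 69).
function frequencyToNote(frequencyHz, a4Hz = 440) {
  if (!(frequencyHz > 0)) {
    throw new RangeError("frequency must be a positive number");
  }
  // Fractional MIDI note number; each integer step is one half step.
  const exactMidi = 69 + 12 * Math.log2(frequencyHz / a4Hz);
  // Round to the NEAREST half step. Math.ceil or Math.floor here would
  // push slightly sharp or flat inputs to the neighboring note.
  const midi = Math.round(exactMidi);
  const name = NOTE_NAMES[((midi % 12) + 12) % 12];
  const octave = Math.floor(midi / 12) - 1;
  return `${name}${octave}`;
}
```

For example, a slightly sharp D at 300 Hz works out to a fractional note number of about 62.37. Rounding to nearest gives 62 (D4), whereas rounding up would give 63 (D#4), which is the same symptom as the bug described above.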