Kelly’s Status Report for 3/18

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week, I focused on the ethics assignment, pivoting the pitch detection algorithm to read a .wav file as input, and testing the new pitch tracking algorithm.

For the ethics assignment, we came together as a team to discuss a potential worst-case use of our project and how to go about fixing it.

Additionally, I created a new pitch tracking algorithm that takes a .wav file as input instead of reading directly from a live microphone stream. Since our team pivoted from PyAudio to MediaStream for recording audio in our web application, we needed a way to communicate between MediaStream (in JavaScript) and our pitch tracker in Aubio (in Python). Luckily, MediaStream can output a .wav file and Aubio can take in a .wav file, so I made a new Aubio-only demo that takes in a .wav file and outputs pitches in Hz. The new algorithm can be found on our GitHub here.
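A minimal sketch of what a .wav-based Aubio tracker can look like is shown below. It is not the code from our repository: the function names, the hop and buffer sizes, the choice of the `yin` method, and the text row format are all assumptions made for illustration. It uses Aubio's `source` and `pitch` objects to read the file one hop at a time and record the pitch in Hz along with the confidence for each hop.

```python
def track_pitch(wav_path, hop_size=512, buf_size=2048, method="yin"):
    """Return (time_s, pitch_hz, confidence) tuples for a .wav file.

    hop_size, buf_size and method are illustrative defaults, not tuned values.
    """
    import aubio  # third-party; imported here so format_rows works without it

    src = aubio.source(wav_path, 0, hop_size)  # samplerate=0 keeps the file's rate
    samplerate = src.samplerate
    detector = aubio.pitch(method, buf_size, hop_size, samplerate)
    detector.set_unit("Hz")

    results = []
    frames_read = 0
    while True:
        samples, read = src()  # next hop of audio and how many frames it held
        pitch_hz = float(detector(samples)[0])
        confidence = float(detector.get_confidence())
        results.append((frames_read / samplerate, pitch_hz, confidence))
        frames_read += read
        if read < hop_size:  # a short read means the end of the file
            break
    return results


def format_rows(results):
    """Format results as 'time pitch confidence' lines (hypothetical layout)."""
    return "\n".join(f"{t:.3f} {hz:.2f} {conf:.3f}" for t, hz, conf in results)
```

Calling `format_rows(track_pitch("scale.wav"))` (with `scale.wav` standing in for any recording) would give one line per hop that can be pasted into a spreadsheet for graphing.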

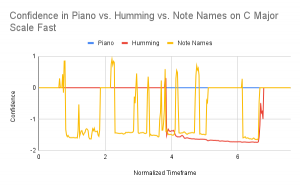

I then tested this new algorithm by recording several .wav files against my ‘C Major Scale Fast’ and ‘C Major Scale Slow’ files. I made 3 .wav files per C Major scale version (6 .wav files total): only the piano notes, me humming along to the file, and me singing note names (i.e. Do Re Mi Fa …) along to the file. I then put these .wav files through the new pitch tracker and graphed my results.

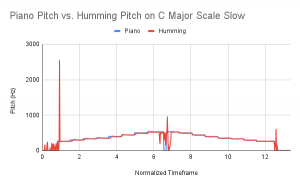

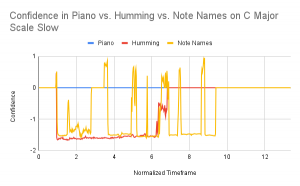

We begin with the C Major Scale Slow results:

As seen, the humming did a remarkable job of resembling the straight piano input signal. However, as soon as consonants were added via note names, we had far more outlier peaks due to the extra air expelled on each consonant. This can also be seen in the confidence: the humming confidence stays relatively steady with few rapid changes, while the note names confidence jumps all over the place. These results don’t surprise me and keep me relatively hopeful for singing actual words, since the overall shape of the note names pitches, with the consonant peaks taken out, hits the correct pitch range of the piano.
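One way to check whether a tracked pitch lands on the expected piano note is to convert Hz to the nearest note name. The sketch below assumes equal temperament with A4 tuned to 440 Hz; the helper name `hz_to_note` is hypothetical and is not part of Aubio or our repository.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hz_to_note(freq_hz, a4_hz=440.0):
    """Return the nearest equal-tempered note name, or None for silence."""
    if freq_hz <= 0:  # treat zero or negative readings as "no pitch"
        return None
    # MIDI note 69 is A4; each semitone is a factor of 2 ** (1 / 12).
    midi = round(69 + 12 * math.log2(freq_hz / a4_hz))
    octave = midi // 12 - 1
    return f"{NOTE_NAMES[midi % 12]}{octave}"
```

For example, a reading near 261.63 Hz maps to `C4`, the first note of a C major scale starting at middle C.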

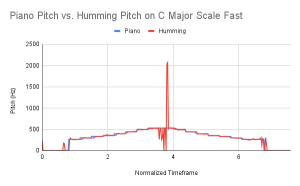

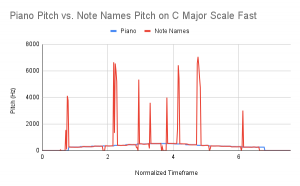

We now look at the C Major Scale Fast results:

Once again, we have very similar results to the C Major Scale Slow outputs. I expected the pitch tracker to not register the note changes fast enough, but I was pleasantly surprised by the pitches that were picked up.

Overall, these graphs seem to suggest that if we throw out the consonant outliers in the pitch graphs, we’ll be able to have a remarkably noiseless signal with our current microphone and interface setup in a relatively quiet environment.
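Throwing out the consonant outliers could be sketched as follows. This is only one possible approach, not something we have implemented yet: it drops frames below a confidence threshold, then drops any remaining frame that sits too far from the median of its neighbors. The function name and the values of `min_confidence`, `window`, and `max_jump_ratio` are untuned assumptions (a ratio of 1.2 is roughly three semitones).

```python
from statistics import median


def clean_pitch_track(pitches_hz, confidences, min_confidence=0.8,
                      window=5, max_jump_ratio=1.2):
    """Drop low-confidence frames, then spikes far from the local median."""
    # Step 1: keep only frames with a positive pitch and enough confidence.
    voiced = [p for p, c in zip(pitches_hz, confidences)
              if c >= min_confidence and p > 0]

    # Step 2: compare each frame to the median of its neighborhood.
    half = window // 2
    cleaned = []
    for i, p in enumerate(voiced):
        local = median(voiced[max(0, i - half): i + half + 1])
        if local / max_jump_ratio <= p <= local * max_jump_ratio:
            cleaned.append(p)
    return cleaned
```

A median is used rather than a mean so that a single consonant spike does not drag the reference value toward itself.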

The .txt outputs from all of these .wav files, detailing pitch and confidence, can be found on our GitHub here along with the .wav files the outputs correspond to. A link to the full spreadsheet with data points and graphs can also be found here.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

This week, I wanted to make my new pitch detection algorithm, test it, and ensure that it would be able to perform appropriately when given user input. I would say that all of this was accomplished this week. Because we had to pivot our project to using MediaStream, we fell a little behind on attaching the pitch detection algorithm to the web application frontend. However, now that we’ve fleshed out the new algorithm, I’m hopeful that this integration will happen soon.

What deliverables do you hope to complete in the next week?

Next week I hope to integrate my new algorithm with Anna and/or Anita’s portions of the project.