What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

Peers and instructors gave a lot of feedback on our design review presentation, much of which highlighted potential risks. In this section, we address that feedback and our risk mitigation strategies. The feedback fell into three main categories: testing, hardware, and pitch detection method.

Testing

- How will latency be determined?

- “I think that they should also consider testing how quickly the audio will be processed and how quickly the feedback is given so that they are not being told [incorrect information]”

Faulty tests can jeopardize this project, because tests guide our modifications and define what “success” means for us. It is therefore imperative that we create robust tests for our most important use-case requirements. Latency over such a short interval is difficult to test, because the act of measuring can itself slow the program down and skew the results. For example, continuously displaying output in a terminal drastically slows down the program, so we must avoid that and rely on a lightweight testing method instead.

We devised a more robust latency testing plan after hearing this feedback. Python’s time module lets us record timestamps down to the millisecond. Similarly, in JS, we can use the Date object. In the Python backend, we will place benchmarks that record when a given operation was performed. Examples of such benchmarks include: @input audio is received, @audio is processed, @feedback is generated, @information is sent to server.

After collecting the timestamps, we will stop the program and do some post-processing of the data to determine latency. This data will inform us whether we are hitting our latency use-case requirement for real-time feedback, in addition to determining whether we are giving the user correct feedback about their note.
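The benchmark-then-post-process idea above could be sketched roughly as follows. This is only an illustration under our own assumptions, not the team’s actual code: the `LatencyLog` class and its method names are hypothetical, and `time.perf_counter()` is chosen here because it is a monotonic clock intended for measuring intervals. Timestamps are stored in memory and nothing is printed while the pipeline runs, in keeping with the goal of a lightweight test.

```python
import time


class LatencyLog:
    """Hypothetical helper: records (label, timestamp) pairs in memory."""

    def __init__(self):
        self.events = []

    def mark(self, label):
        # Appending to a list is cheap; no terminal output happens here.
        self.events.append((label, time.perf_counter()))

    def stage_latencies_ms(self):
        """Post-processing: elapsed milliseconds between consecutive marks."""
        stages = []
        for (prev_label, prev_t), (label, t) in zip(self.events, self.events[1:]):
            stages.append((f"{prev_label} -> {label}", (t - prev_t) * 1000.0))
        return stages

    def total_ms(self):
        """Elapsed milliseconds from the first mark to the last mark."""
        if len(self.events) < 2:
            return 0.0
        return (self.events[-1][1] - self.events[0][1]) * 1000.0


if __name__ == "__main__":
    log = LatencyLog()
    log.mark("input audio received")
    time.sleep(0.01)  # stand-in for real audio processing
    log.mark("audio processed")
    log.mark("feedback generated")
    log.mark("information sent to server")

    # Only after the run is over do we print and compare to the requirement.
    for stage, ms in log.stage_latencies_ms():
        print(f"{stage}: {ms:.2f} ms")
    print(f"total: {log.total_ms():.2f} ms (requirement: 250 ms)")
```

The labels mirror the benchmark points listed earlier; the total can then be compared against the 250-millisecond requirement after the program has stopped.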

- “In regard to testing metrics, one potential modification is being too high [large] of a range for a user to receive feedback—though I was hoping there is some data or justification out there as to why 5% is realistic—and also as to why 0.25 seconds for note detection is a fast enough turnaround time, and if so, why it can be achieved (might it take longer?)”

- “The test metrics should also look at the user experience.”

- “User satisfaction and improvement in singing abilities/confidence can be assessed to determine whether the app is actually beneficial.”

We justified the 250-millisecond latency threshold in our design review report. However, we agree with the feedback that evidence from scientific studies and the literature alone is not enough to justify this threshold; we also need actual users’ experiences. We plan to test this product on users and obtain their feedback on whether the latency is too high or whether the feedback is too soft. This information has also been included in our design review report. We will do this testing as soon as we can in case we need to make modifications.

Hardware

- “The hardware being used is over $300 which is a lot for a student vocalist to afford. No considerations were mentioned for handling audio from different sources”

- “The only concern I have is that they require the user to wear a specific headset which seems a little unreasonable.”

- “If this is a free application, what will happen if a user can’t purchase the high quality tools being used to record audio? What if their microphones have a lot of noise? Etc.”

There were many concerns about the expensive hardware and how it relates to our goal of making this app accessible and appealing to the casual singer. We agree that the average casual user will not be willing to fork over 300 dollars for a headset and interface. However, this change was initiated by our advisors, who said that we should initially focus on a “proof of concept.” The hardware does a lot of noise filtering for us and preserves the quality of the input sound. We are using this expensive, high-quality hardware so that we can focus on the main meat of this project: pitch detection and feedback generation. If we hit our MVP and use-case requirements early, one of our reach goals is to see if we can modify our pitch detection algorithm to work with a regular laptop microphone, as there would then be no additional expense to the user.

Pitch Detection Method

- “What would happen with people who are sopranos vs baritones?”

- “Need to come up with a solution for singers with deeper pitch.”

It would be unfair to limit our user demographic to those who can sing only in a certain range, and doing so would put our goal of making this app accessible to casual users at risk. We plan to address this risk by allowing multiple “correct” pitches, spanning octaves, for each scale and melody. The feedback mechanism will treat a C3 as equal to a C4, a C5, and so on. As long as the user sings the correct relative pitch, the feedback mechanism will not ding them.
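One way to express this octave equivalence is to reduce each detected frequency to its pitch class (the note name without the octave) and compare those. The sketch below is only an illustration: the function names are hypothetical, and it assumes standard A4 = 440 Hz tuning, equal temperament, and rounding to the nearest semitone, none of which the report specifies.

```python
import math

A4_HZ = 440.0  # assumed tuning reference, not taken from the report
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def pitch_class(freq_hz):
    """Map a frequency to a pitch class 0-11 (0 = C), ignoring the octave."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # MIDI note number of the nearest equal-tempered semitone (A4 = 69).
    midi_note = round(69 + 12 * math.log2(freq_hz / A4_HZ))
    return midi_note % 12


def same_note_any_octave(sung_hz, target_hz):
    """True when both frequencies round to the same note name."""
    return pitch_class(sung_hz) == pitch_class(target_hz)


if __name__ == "__main__":
    c3, c4, c5, d4 = 130.81, 261.63, 523.25, 293.66
    print(same_note_any_octave(c3, c4))  # C3 against a C4 target
    print(same_note_any_octave(c5, c4))  # C5 against a C4 target
    print(same_note_any_octave(d4, c4))  # D4 against a C4 target
    print(NOTE_NAMES[pitch_class(c3)])
```

With this comparison, a baritone singing C3 and a soprano singing C5 would both match a target of C4, while a D in any octave would not.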

- “My past experience with PyAudio have not been good, so I would suggest further testing with PyAudio. My previous with PyAudio were with audio analysis on songs with multiple layers, so it may be more effective at single note pitch detection”

- “From personal experience in a project, pyaudio doesn’t work very well and seems buggy. It may require extra effort or practice to use unless someone on the team already has the experience.”

As of right now, we thankfully haven’t run into many issues with pyaudio. However, a buggy module does pose a huge risk to the success of our project, as there is no easy way for us to fix the module’s internal implementation of a given feature. We use pyaudio mainly as the interface between the user’s input vocals and the pitch detection; the module is not doing the pitch detection itself. Kelly has done lots of testing on the input stream from pyaudio and has found no such issues so far. The quality of our hardware may be mitigating this issue, but the risks and concerns brought up by our peers do not seem too relevant as of now.

Extending this feedback about pyaudio to the other module we are using, aubio, we also have mitigation strategies in case aubio is buggy. We haven’t personally encountered bugs in aubio or seen much discourse online about them, but because we are using aubio for pitch detection (a large aspect of our project), we should consider the potential for aubio to be buggy down the line. In short, our risk mitigation plan is to either a) pre-process the input vocals or b) switch modules. More details justifying these two plans can be found in our design review report.

- “Seems a little unrealistic to be moving from Python to C[++] given the short time frame therefore it does not seem like a good mitigation strategy.”

High latency due to Python’s shortcomings is a huge risk to our project. We will try to optimize the efficiency of our code (details are included in the design report). We will attempt to move our backend to C++, but if that is not feasible, as the peer feedback suggests, we plan to adjust the structure of our design implementation to change where feedback is processed and given. We will discuss and flesh this out more if latency does become a serious issue.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

As we fleshed out our design in the design review report, we made some changes to our use-case requirements.

- Pitch Detection Accuracy: 95% –> 87%

Because our visual feedback consists of simply plotting the user’s input note on a five-line staff, we have decided to aim for an 87% accuracy rate as a starting point. This means that our pitch detection algorithm correctly identifies the input note in most cases but occasionally misidentifies a note. We believe that this visual representation of feedback gives a little more leeway and is thus more forgiving of minor pitch detection errors. We must strike a balance between accuracy and latency, and in this case we decided to lower our required accuracy rate.

- Latency in post-song analysis: 10s –> 5s

According to the same article by the Nielsen Norman Group, a 10-second delay is the upper limit to how long a user will stay engaged with an app. We believe that 10 seconds is more than enough time to process any post-song statistics, so we have lowered the latency requirement to 5 seconds.

- User Interface Requirements: new!

We have added use-case requirements for our user interface. This was necessary so that we can measure how seamlessly the user is able to use our app. The requirements include task time, completion rate, and task satisfaction.

This is also the place to put some photos of your progress or to brag about a component you got working.

We all mainly worked on the design review report this week. You can find the design review report under “Design Review.”

As you’ve now established a set of sub-systems necessary to implement your project, what new tools have your team determined will be necessary for you to learn to be able to accomplish these tasks?

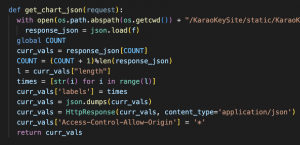

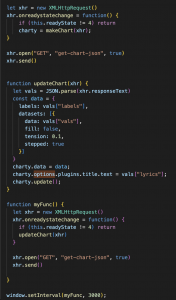

There have been no changes to the backend tools. In the front end, we are now using chart.js to create our visual feedback.