Kelly’s Status Report for 2/25

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week, I looked into MIDI files for all of our songs, started preliminary testing of pitch detection algorithms, and began thinking about the design report.

First and foremost, I scoured the web for MIDI files that would provide both a melody line for us to compare against and a proper instrumental for a user to sing to. This proved more difficult than I had anticipated, as most of the MIDI files I was able to find had one of the following issues:

- Too complex of an instrumental

- Strange interpretation of the melody

- No separation between the melody and the backing

As I am getting these MIDI files for free on the web, I suppose I should have expected the quality not to be up to my standards. I therefore decided to cut my losses and revise the free tracks into what I wanted. Through this process, I was able to scrape together both backing tracks and vocal-only tracks for the following:

- Itsy Bitsy Spider

- Happy Birthday

- Twinkle Twinkle Little Star

- I’m Yours

Additionally, I made a fast and slow recording of the C major scale to test out our pitch tracking algorithms with. All of these mp3 files will be attached in this post.

The order is as follows: Twinkle Twinkle Little Star – Vocals, Twinkle Twinkle Little Star, Itsy Bitsy Spider – Vocals, Itsy Bitsy Spider, I’m Yours – Vocals, I’m Yours, Happy Birthday – Vocals, Happy Birthday, C Major Scale – Slow, C Major Scale – Fast.

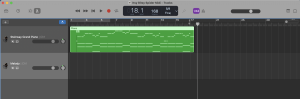

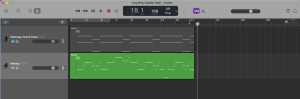

The MIDI files “Twinkle Twinkle Little Star”, “Itsy Bitsy Spider”, and “Happy Birthday” were quite easy to convert, as I only had to duplicate the recorded track and manually separate out the melody from the backing track. Attached below is an example of this process:

Here we have the track as it came with the melody attached to the rest of the track.

Here we have the melody and the rest of the track separated out. This is more convenient because I can now mute the rest of the track and listen only to the melody, which gives me a vocal-only track that I can use to test the pitch detection algorithm. Separating the melody from the backing track is crucial for testing our pitch detection algorithm, as it gets rid of any background noise and provides us with the most accurate signal we could hope to measure.

The file “I’m Yours” was a bit more challenging, as one might imagine. It’s a much longer song and includes quite a few vocal runs, which are hard to mimic on an instrument while still providing a sharp signal. As a result, this track took a lot of liberties with the melody, especially near the end, where Jason Mraz is mostly ad-libbing and not necessarily following a strict melody. While I have separated out the vocals of this track, there are a few problems that I have not yet tackled. Fixing them means manually going through the track and replacing notes to better fit the actual melody, which will be quite an arduous task, and I’m aiming to complete it within the next week. Another issue is that the melody was recorded on a flute rather than a piano, but this is just personal preference, so I’m willing to give up on this dream if I must.

Finally, I handcrafted the C Major Scale audio tracks in a fast and a slow version. With the slow version, I’m hoping to calibrate the pitch detection algorithm on slow notes and see how well it fares when it’s given a signal for an extended duration; I expect the confidence to go up. With the fast version, I’m hoping to capture the quick note changes that happen in songs and to make sure that the pitch detection algorithm’s confidence doesn’t drop too low as the speed of note changes increases.
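As a reference for checking tracker output against these scale recordings, the expected frequency of each note can be computed directly. The sketch below is only illustrative and rests on two assumptions not stated above: that the scale starts on middle C (MIDI note 60) and that the tracks use equal temperament with A4 tuned to 440 Hz. The names `midi_to_hz`, `EXPECTED_HZ`, and `nearest_note` are hypothetical helpers, not part of our codebase.

```python
# Assumption: the recorded scale runs from middle C (C4, MIDI note 60) up to C5
# in equal temperament with A4 = 440 Hz. Adjust if the tracks were made differently.
A4_MIDI = 69
A4_HZ = 440.0

C_MAJOR_MIDI = [60, 62, 64, 65, 67, 69, 71, 72]
NOTE_NAMES = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]


def midi_to_hz(midi_note):
    """Convert a MIDI note number to its equal-temperament frequency in Hz."""
    return A4_HZ * 2 ** ((midi_note - A4_MIDI) / 12)


# Expected frequency for each note of the scale.
EXPECTED_HZ = {name: midi_to_hz(m) for name, m in zip(NOTE_NAMES, C_MAJOR_MIDI)}


def nearest_note(freq_hz):
    """Return the scale note whose expected frequency is closest (in Hz) to freq_hz."""
    return min(EXPECTED_HZ, key=lambda name: abs(EXPECTED_HZ[name] - freq_hz))


if __name__ == "__main__":
    for name, hz in EXPECTED_HZ.items():
        print(f"{name}: {hz:.2f} Hz")
```

Under these assumptions the whole scale sits between roughly 260 Hz and 525 Hz, so a reading far outside that band while the scale is playing is a sign that the tracker has misfired.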

Next, I started some preliminary pitch algorithm testing and quickly found a pitfall I wasn’t expecting. First, I recorded some dummy vocals on a GarageBand track, just to hear the quality of the audio and see how the computer was interpreting the signal, and I realized there was one key factor I was not testing for: mouth breathing. As I grew up participating in choir and musical theatre, I have been trained to avoid breathing through my mouth at all costs, and when I must, it had better be silent. However, our webapp is marketed to beginners, who more than likely have not gone through this same training. So, upon confirming with Anita and Anna that they mouth breathe when they sing, I knew I had to test the audio peaking. Because the headset microphone sits right in front of the mouth and the recorded audio is played back through the headphones, I wanted to make sure that our users would not be scared by their own breaths, as this could definitely mess up their experience. Thus, I set out to sing until I was out of breath and audibly gasped for air to see just how loud I would hear myself played back. At the volume I was singing, even with a gasping breath rather than a normal breath, I was not able to jumpscare myself, but I’ll have people who aren’t used to hearing themselves test it next.

Next, I proceeded to use my aubio pitch tracking code to test different pitch tracking algorithms under more or less the same conditions. I would wear the headset microphone and have the interface plugged into my computer, then I would do the following:

- Play the “C Major Scale – Slow” on my computer (so the microphone doesn’t pick it up) and sing along with it

- Be quiet and hold my phone playing the “C Major Scale – Slow” next to my microphone

- Repeat the two steps above with “C Major Scale – Fast”

The algorithms I tested were “YIN”, “YINFFT”, “fcomb”, and “mcomb”. I pasted the pitch tracking results into a spreadsheet and compared them side by side. The spreadsheet is linked here.

Generally, I think the “fcomb”, “mcomb”, and “YIN” algorithms produce scatterings of unreliable values (e.g. a reading of 1000 Hz amidst readings around 260 Hz) and thus are not fit for our project. The “YINFFT” algorithm reported the most stable numbers and also detected pitches within the correct range (200-600 Hz, give or take). Therefore, I will be moving forward with the “YINFFT” algorithm.
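The side-by-side comparison above was done by eye in the spreadsheet. One way to put a number on "unreliable values" is sketched below: it flags any reading that sits more than an octave away from the median of its neighbours and reports the fraction of flagged readings. This is a hypothetical helper, not the method I actually used; the window size and the one-octave threshold are arbitrary assumptions, and the readings in the usage example are made up purely to illustrate the 1000-amidst-260 case.

```python
import math
from statistics import median


def semitone_distance(freq_hz, reference_hz):
    """Absolute distance between two frequencies, measured in semitones."""
    return abs(12 * math.log2(freq_hz / reference_hz))


def outlier_fraction(readings_hz, window=5, max_jump_semitones=12.0):
    """Fraction of pitch readings that lie far from the median of their neighbours.

    Assumptions (not from the original test setup): non-positive readings mean
    "no pitch detected" and are skipped; `window` and `max_jump_semitones`
    are arbitrary defaults that would need tuning.
    """
    voiced = [f for f in readings_hz if f > 0]
    if not voiced:
        return 0.0

    half = window // 2
    outliers = 0
    for i, freq in enumerate(voiced):
        # Median of the readings in a small window centred on this one.
        lo = max(0, i - half)
        hi = min(len(voiced), i + half + 1)
        local_median = median(voiced[lo:hi])
        if semitone_distance(freq, local_median) > max_jump_semitones:
            outliers += 1
    return outliers / len(voiced)


if __name__ == "__main__":
    # Made-up readings for illustration only: one stray 1000 Hz value near 260 Hz.
    example = [260.0, 261.0, 1000.0, 262.0, 259.0, 260.0]
    print(f"outlier fraction: {outlier_fraction(example):.3f}")
```

Running each algorithm's column of readings through a function like this would give one score per algorithm, which makes the comparison easier to repeat once we test in a noisy environment.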

In terms of the design report, I’ve read the feedback presented to us by our peers as well as our fellow section D peers and started zeroing in on what people have questions about so that we can clarify those points in our report.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

According to the Gantt chart, this week I am meant to test out pitch tracking algorithms, work on the UI, and put some thought into the design report. I did all of these things except for the UI. In between lectures this week, I plan to get several people’s opinions on our UI mockups and refine the mockups based on their suggestions.

What deliverables do you hope to complete in the next week?

Next week I hope to have refined UI mockups, preliminary testing done in a noisy environment, report sections written, and a start on pitch tracking for a song.