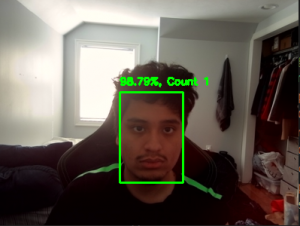

The most significant risk to the project right now is making sure that the eye-tracking and facial-detection algorithms are completed on time and run at an adequate frame rate. This needs to happen as soon as possible because many other features cannot be built until these algorithms are done. We are continuously testing the algorithms to confirm that they work as expected. If they do not, our backup plan is to switch from OpenCV DNN to Dlib.

We have been looking into changing our NVIDIA Jetson model because the one we currently have may draw more power than a car can supply. If we need to make this change, it will not add any cost: other models are available in inventory, and our other hardware components are compatible with them.

Between the design presentation and the design report, we also added back the requirement that the device work in non-ideal conditions (low lighting, or the eyes partially obstructed by sunglasses or a hat). We made this change based on faculty feedback. However, we have not yet decided whether to keep it, because other faculty and TA feedback advised against it. If we keep it, the cost will be the time spent developing the algorithm for non-ideal conditions, which will leave less time to improve accuracy under ideal conditions.

We are also adjusting the design slightly to handle edge cases in which the driver looks away from the windshield or mirrors while the car is moving but is not distracted. Examples include looking left or right while making a turn and looking over their shoulder while reversing. For now, audio feedback for signs of distraction will be given only when the car is above a threshold speed (tentatively 5 mph). This replaces our previous condition, which was based on whether the car was stationary. We will tune the threshold using the speeds recorded during turns and reversing in user testing. If we have extra time, we are considering detecting whether the turn signal is on or the car is in reverse, so that these edge cases can be identified more accurately.
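The speed-gated alert rule described above can be sketched as a small predicate. This is a minimal illustration rather than our actual implementation. The function name `should_alert` and its parameters are hypothetical, and the sketch assumes that a per-frame "distracted" flag from the CV pipeline and a speed estimate in mph are already available. The `turn_signal_on` and `in_reverse` inputs model the optional extension we may add if time permits.

```python
# Tentative threshold from the design; to be tuned from user-testing data.
DEFAULT_THRESHOLD_MPH = 5.0


def should_alert(
    distracted: bool,
    speed_mph: float,
    threshold_mph: float = DEFAULT_THRESHOLD_MPH,
    turn_signal_on: bool = False,
    in_reverse: bool = False,
) -> bool:
    """Return True if an audio distraction alert should be played.

    Hypothetical sketch: an alert fires only when the driver appears
    distracted AND the car is moving faster than the threshold speed.
    The optional turn-signal / reverse flags suppress alerts during turns
    and reversing, where looking away from the windshield is expected.
    """
    if not distracted:
        return False
    # Optional extension: glances during turns or reversing are expected.
    if turn_signal_on or in_reverse:
        return False
    # Strictly greater than the threshold, matching "above a threshold speed".
    return speed_mph > threshold_mph


if __name__ == "__main__":
    print(should_alert(True, 3.0))    # False: below threshold (e.g., a slow turn)
    print(should_alert(True, 25.0))   # True: distracted while driving
    print(should_alert(True, 25.0, turn_signal_on=True))  # False: signaling a turn
```

Keeping the threshold as a parameter means it can be retuned after user testing without touching the alert logic itself.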

After our meeting with the professor and TA this week, we moved the deadline for completing the eye-tracking and head-pose estimation algorithms up to next week. We made this change because the CV/ML components are the most critical part of the project, and finishing these algorithms earlier will leave more time for testing and tuning, resulting in a more robust system. We have also moved device assembly and testing of individual components, specifically the accelerometer and camera, to next week, once those components are delivered. Otherwise, we are on schedule.