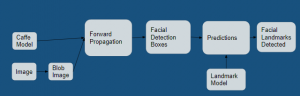

I spent most of my time this week on our upcoming design presentation. After discussing and finalizing the design and implementation of our project with my teammates, I put together the system block diagram for the presentation. Sirisha and I also used Google Slides to create more fleshed-out UI designs for the web application portion of our project, covering the login, registration, and main statistics (home) pages.

Capstone UI Design as of 2_18_23

Outside of the presentation, I spent some time looking into how to send driver inattention data from the Jetson (where computer vision and classification take place) to our web application while the car is moving. Adding a Wi-Fi card with Bluetooth capabilities to the Jetson looks like it will work for our purposes, but before ordering the part I want to confirm this on Monday with a TA or professor who has more experience working with Jetsons. I also submitted orders for our speaker and for a cable to connect the speaker's audio jack to the Jetson's USB-C port.
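A minimal sketch of the data path, assuming the Jetson ends up with a network connection (for example through the Wi-Fi card) and that the web application exposes an HTTP endpoint accepting JSON. The URL, the event field names, and the `EventBuffer` class are hypothetical placeholders, not decisions we have made. Because connectivity in a moving car may drop, the sketch queues events locally and sends them in order once a request succeeds.

```python
import json
import urllib.request
from collections import deque
from typing import Callable

# Hypothetical endpoint: the real web app route has not been decided yet.
API_URL = "http://example.com/api/inattention-events"


def build_event(driver_id: str, event_type: str, timestamp: float) -> bytes:
    """Serialize one inattention event as a UTF-8 JSON request body.

    The field names are illustrative assumptions, not a finalized schema.
    """
    event = {"driver_id": driver_id, "event_type": event_type, "timestamp": timestamp}
    return json.dumps(event).encode("utf-8")


def send_event(body: bytes, url: str = API_URL, timeout: float = 5.0) -> int:
    """POST one JSON body to the web app and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status


class EventBuffer:
    """Queue events while offline and flush them in order once sends succeed."""

    def __init__(self, sender: Callable[[bytes], int] = send_event) -> None:
        self.pending: deque = deque()
        self.sender = sender

    def add(self, body: bytes) -> None:
        self.pending.append(body)

    def flush(self) -> int:
        """Send queued events oldest-first; stop at the first network error.

        Returns how many events were sent. urllib's URLError and HTTPError, as
        well as socket timeouts, are all OSError subclasses, so they land here.
        """
        sent = 0
        while self.pending:
            try:
                self.sender(self.pending[0])
            except OSError:
                break  # offline or server error: keep the event and retry later
            self.pending.popleft()
            sent += 1
        return sent
```

In a main loop, the classifier could call `buf.add(build_event(...))` on each detection and `buf.flush()` periodically. If we end up relaying data over Bluetooth instead, only `send_event` would need to change.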

My progress is behind schedule according to our Gantt chart. Specifically, the “Device Schematic/Parts Selection” and “Testing Each Component Individually” tasks will need to be pushed to next week, once we finish submitting our parts orders and receive the parts we want to test individually. When setting our original deadlines, we did not account for the time it would take to decide on, get approval for, order, and receive each component. We do have our borrowed Jetson, but all of our other components have either been ordered and not yet received or will be ordered on Monday. I updated our Gantt chart to reflect this.

In the next week, I hope to finish submitting all of our parts orders with Yasser and Sirisha and to test playing sounds from code on the Jetson through the external speaker we ordered. I will also work with Sirisha on the initial setup of our web application (creating the initial pages and some basic components in React.js) based on our UI design. Additionally, I want to measure the Jetson's power consumption while running basic code (likely just the code that sends audio to the speaker) to see how feasible it will be to power the whole device from a car's 12V power outlet.
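A rough way to turn the planned measurement into a feasibility check, using P = V × I for each component and comparing the total against the outlet's rating. This assumes we measure voltage and current at each component's supply and power everything from the outlet through a DC-DC converter. The 10 A outlet rating and 85% converter efficiency are placeholder assumptions (the real fuse rating varies by car), and the example loads are hypothetical numbers, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Load:
    name: str
    volts: float  # measured supply voltage at the component
    amps: float   # measured current draw

    @property
    def watts(self) -> float:
        return self.volts * self.amps  # P = V * I


def outlet_headroom(loads, outlet_volts=12.0, outlet_fuse_amps=10.0, converter_eff=0.85):
    """Return (watts drawn from the outlet, watts left under its rating).

    outlet_fuse_amps and converter_eff are placeholder assumptions.
    """
    if not 0.0 < converter_eff <= 1.0:
        raise ValueError("converter_eff must be in (0, 1]")
    load_watts = sum(load.watts for load in loads)
    drawn = load_watts / converter_eff  # converter losses add to the outlet draw
    budget = outlet_volts * outlet_fuse_amps
    return drawn, budget - drawn


if __name__ == "__main__":
    # Hypothetical readings, to be replaced with our real measurements.
    loads = [Load("jetson", 5.0, 2.0), Load("speaker", 5.0, 0.5)]
    drawn, headroom = outlet_headroom(loads)
    print(f"{drawn:.1f} W from outlet, {headroom:.1f} W headroom")
    # prints "14.7 W from outlet, 105.3 W headroom"
```

A negative headroom would mean the outlet alone cannot support the device; a small positive margin would suggest we should measure peak draw under full CV load, not just the audio test.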

The courses I’ve taken that cover the engineering principles our team used to develop our design are 10-301, 18-220, 18-341/18-447, 17-437 (Web App Development), and 05-391 (Designing Human-Centered Software).