Earlier this week, I finished the design presentation slides, specifically the block diagrams for the facial and landmark detection algorithms and for mouth and eye detection. After the presentation, I researched the frameworks and models needed for head pose estimation. When we spoke with the professor after the design presentation, he suggested that we work around the problem of a driver wearing sunglasses, which hide the eyes from eye detection, by measuring head movement to determine whether the driver is distracted or drowsy. Head pose estimation needs a TensorFlow model and a Caffe-based OpenCV DNN, both of which we already intend to use for the facial detection and landmark algorithms in this project. Besides researching head pose estimation, I started coding the facial detection algorithm using OpenCV and the Caffe facial detector model.
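As a rough illustration of the professor's suggestion, the sketch below assumes a head pose estimator (for example, one built on OpenCV's `solvePnP`) already produces yaw and pitch angles in degrees for every frame, and flags the driver once the head stays turned away for several consecutive frames. The `HeadPose` class, the `flag_distracted_frames` function, and the threshold values are hypothetical placeholders, not tuned values from this project.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class HeadPose:
    """Per-frame head orientation in degrees.

    Assumption: produced by a separate head pose estimator (not shown here).
    """
    yaw: float    # left/right rotation of the head
    pitch: float  # up/down rotation of the head


def flag_distracted_frames(
    poses: Iterable[HeadPose],
    max_yaw: float = 30.0,      # hypothetical limit, not a tuned value
    max_pitch: float = 20.0,    # hypothetical limit, not a tuned value
    min_consecutive: int = 15,  # hypothetical streak length in frames
) -> List[bool]:
    """Return one flag per frame that is True once the head has been turned
    past the yaw or pitch limit for at least `min_consecutive` frames in a row."""
    flags: List[bool] = []
    run = 0  # length of the current streak of out-of-range frames
    for pose in poses:
        if abs(pose.yaw) > max_yaw or abs(pose.pitch) > max_pitch:
            run += 1
        else:
            run = 0  # head back in range: reset the streak
        flags.append(run >= min_consecutive)
    return flags
```

At 30 fps, for example, `min_consecutive=15` corresponds to roughly half a second; requiring a streak keeps a quick glance at a mirror from being flagged as distraction.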

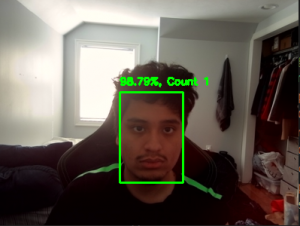

My progress is ahead of schedule. By the end of this week, I was supposed to have researched all of the algorithms we need. Since I finished that earlier in the week, I moved on to next week's task of writing the code for the facial and landmark detectors. As shown in the image, the facial detection code is done.
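A minimal sketch of facial detection with OpenCV's DNN module and a Caffe model, assuming the commonly used ResNet-10 SSD face detector (`deploy.prototxt` plus `res10_300x300_ssd_iter_140000.caffemodel`) and its usual 300x300 input with BGR means `(104.0, 177.0, 123.0)`. The report does not name the exact model files, and the helper names and the 0.5 confidence threshold are illustrative assumptions. The network outputs rows of `[image_id, class_id, confidence, x1, y1, x2, y2]` with corners normalized to the 0 to 1 range, which `extract_boxes` converts to pixel coordinates.

```python
from typing import Iterable, List, Sequence, Tuple

# (x1, y1, x2, y2, confidence) in pixel coordinates
Box = Tuple[int, int, int, int, float]


def extract_boxes(
    rows: Iterable[Sequence[float]],
    width: int,
    height: int,
    conf_threshold: float = 0.5,  # illustrative threshold (assumption)
) -> List[Box]:
    """Convert SSD rows [image_id, class_id, conf, x1, y1, x2, y2]
    (normalized corners) into pixel boxes at or above the threshold."""
    boxes: List[Box] = []
    for row in rows:
        conf = float(row[2])
        if conf < conf_threshold:
            continue
        # Scale normalized corners to pixels and clamp them to the image.
        x1 = max(0, min(width - 1, int(row[3] * width)))
        y1 = max(0, min(height - 1, int(row[4] * height)))
        x2 = max(0, min(width - 1, int(row[5] * width)))
        y2 = max(0, min(height - 1, int(row[6] * height)))
        boxes.append((x1, y1, x2, y2, conf))
    return boxes


def load_face_detector(prototxt_path: str, model_path: str):
    """Load the Caffe face detector once so it can be reused for every frame."""
    import cv2  # imported lazily so extract_boxes works without OpenCV

    return cv2.dnn.readNetFromCaffe(prototxt_path, model_path)


def detect_faces(net, image, conf_threshold: float = 0.5) -> List[Box]:
    """Run the loaded detector on a BGR image (e.g. from cv2.imread)."""
    import cv2

    height, width = image.shape[:2]
    # Assumption: 300x300 input and per-channel BGR means of the ResNet-10 SSD model.
    blob = cv2.dnn.blobFromImage(
        cv2.resize(image, (300, 300)), 1.0, (300, 300), (104.0, 177.0, 123.0)
    )
    net.setInput(blob)
    detections = net.forward()  # shape (1, 1, N, 7)
    return extract_boxes(detections.reshape(-1, 7), width, height, conf_threshold)
```

Loading the network once with `load_face_detector` and passing it to `detect_faces` for each video frame avoids re-reading the model files inside the capture loop; the resulting boxes are the face regions a landmark detector would then work on.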

By the end of next week, I expect to have finished the landmark detector code, the mouth and eye detection code, and the design document.

One team shortfall this week was that my teammates were not in Pittsburgh when the design presentation slides were due. I especially needed help writing the speaker notes for the slide with the hardware block diagram, since I knew very little about the hardware used in this project, the UI, or the servers that will host our web app. However, we got through this obstacle by communicating constantly.