https://drive.google.com/file/d/1_BjkbpvtRzIS9crKos-OMVkS1A6vNcDw/view?usp=sharing

Angie's Status Reports

Angie’s Status Report for 4/29

What did you personally accomplish this week on the project?

- This week, I worked with Linsey and Ayesha to integrate the GPS with the Raspberry Pi. The GPS could not get a satellite fix indoors, so we obtained a fix by placing it outdoors for nearly an hour. Initial results show that the GPS data is precise (the majority of locations are within 2 meters of the average location, with a standard deviation of 1.5 m) but inaccurate, reading about 20 miles away from the actual location. To maximize location accuracy, we will compensate for the location shift and filter the latitudes and longitudes over time to reduce the influence of outliers.

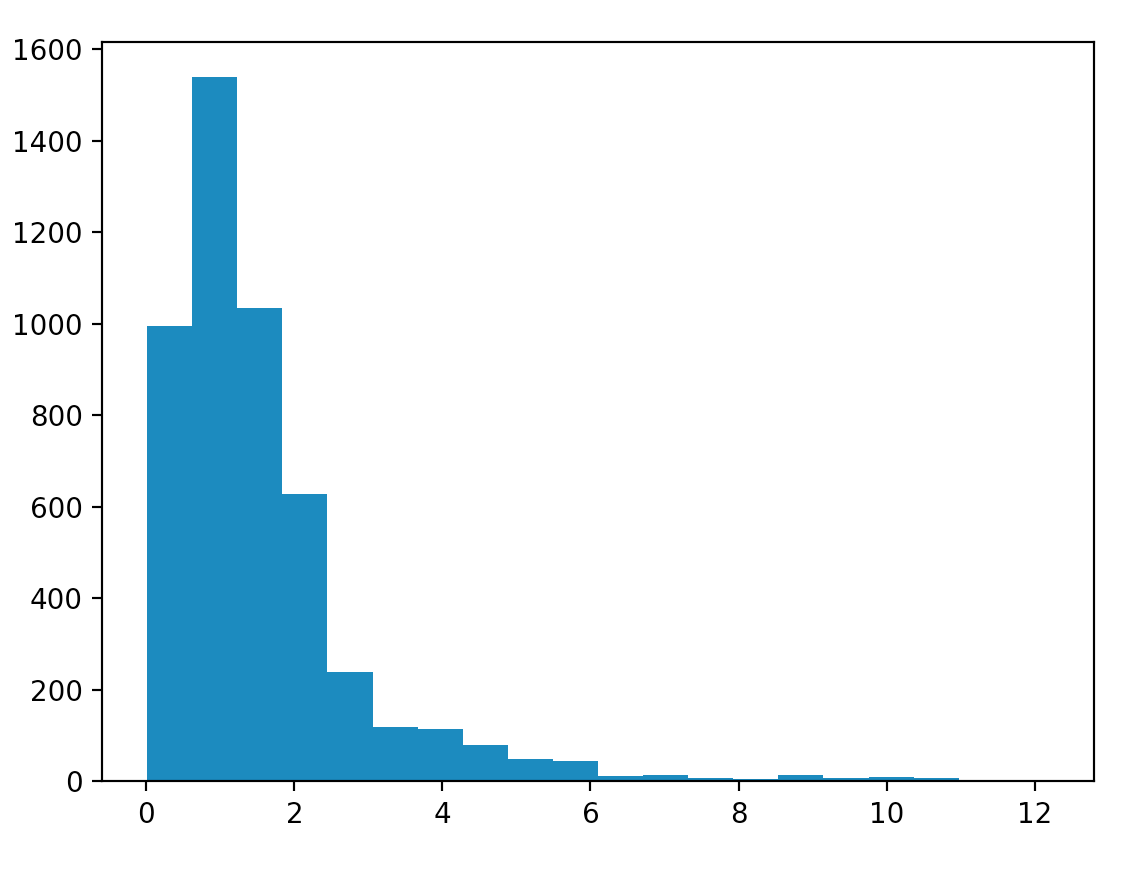

- I collected 3600 more samples of data to train the neural network in hopes of increasing the F1 score. Heeding feedback from the final presentation, I recorded the movement of non-human animals such as a wasp. The human data contained a larger share of relatively stationary movements such as deep breathing, which dropped the F1 score back to 0.33, so I also collected data of humans making large movements such as arm-waving, including behind barriers.
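
The GPS compensation and filtering mentioned above could look roughly like the sketch below. It assumes the location shift is a constant bias measured once against a known reference point, and it uses a sliding-window median as the outlier filter. Both choices, along with the class name `GpsSmoother` and its parameters, are illustrative assumptions rather than the method we have settled on.

```python
from collections import deque
from statistics import median


class GpsSmoother:
    """Subtract a fixed bias, then take a sliding-window median of the fixes."""

    def __init__(self, lat_bias=0.0, lon_bias=0.0, window=5):
        # Bias values are assumed to be measured once at a known location.
        self.lat_bias = lat_bias
        self.lon_bias = lon_bias
        self.lats = deque(maxlen=window)
        self.lons = deque(maxlen=window)

    def update(self, lat, lon):
        """Add one raw fix and return the filtered (lat, lon)."""
        self.lats.append(lat - self.lat_bias)
        self.lons.append(lon - self.lon_bias)
        # The median ignores isolated outliers inside the window.
        return median(self.lats), median(self.lons)
```

A median is used here instead of a mean so that a single wild fix inside the window does not drag the estimate.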

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is behind on GPS integration because we could not connect to CMU-DEVICE and had trouble getting a GPS fix. I will catch up by resolving the slow fix time and the GPS not retaining its position between reboots.

What deliverables do you hope to complete in the next week?

- Resolve problems with getting a GPS fix quickly (such as downloading almanacs from internet)

- Test system on CMU-DEVICE

- 3D print the chassis and put all parts in chassis

Team Status Reports

Team Status Report for 4/22

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risks that could jeopardize the success of the project are:

- The system may not be effective at distinguishing humans from nonhumans, which manifests as a high rate of false positives and false negatives. This risk can be managed by changing the type of input data and how it is preprocessed. In previous weeks both have been changed, most notably by increasing the velocity resolution.

- The latency of transmitting system data wirelessly to the web app may be high. Although it is crucial to have full-resolution radar data in each transmission, the data rate of the GPS and temperature data can be lowered to reduce latency, with the location estimated using a Kalman filter.
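
As a rough illustration of the Kalman filter idea, the sketch below filters one coordinate (latitude or longitude) with a scalar filter. It assumes a constant-position (random-walk) model, which fits a hovering or stationary system. The class name `ScalarKalman` and the variance values are placeholders, not tuned numbers from our system.

```python
class ScalarKalman:
    """1-D Kalman filter with a constant-position (random-walk) model."""

    def __init__(self, x0, p0=1.0, process_var=1e-3, meas_var=1.0):
        self.x = x0           # current state estimate
        self.p = p0           # variance of the estimate
        self.q = process_var  # how much the true position may drift per step
        self.r = meas_var     # variance of a single GPS measurement

    def update(self, z):
        """Fold in one measurement z and return the new estimate."""
        # Predict: position is unchanged, but uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement by the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)
        return self.x
```

With GPS updates arriving less often, the web app could keep showing `x` between updates. One filter instance per coordinate would be needed under this simple model.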

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

- The dimensions of the input data were changed so that the Doppler resolution was doubled and the range was halved. This change was necessary to reduce latency while providing higher-quality data: waiting to collect data beyond 5 m lowers the rate at which data can be sent, and that data is extraneous anyway.

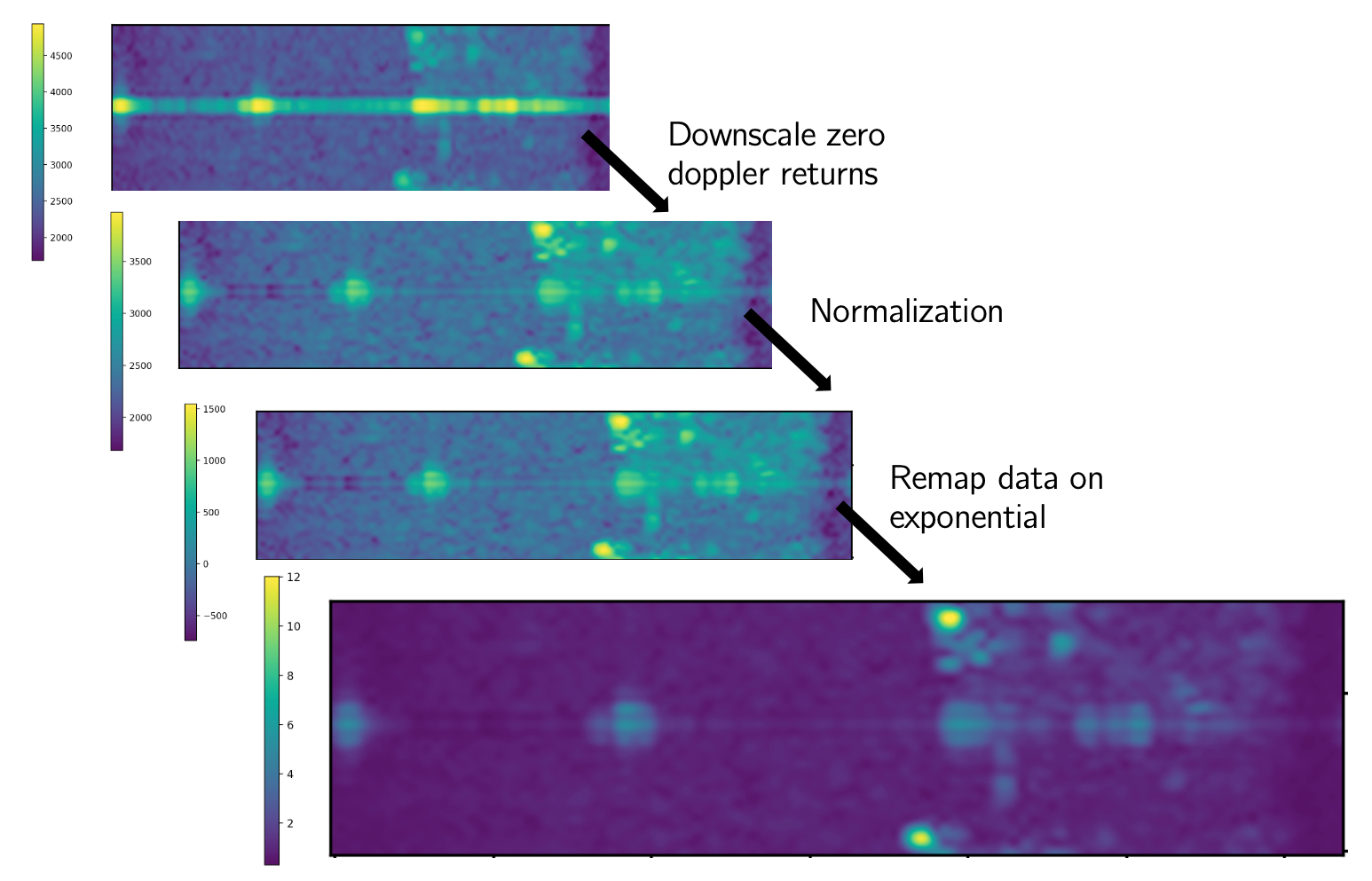

- The doubled Doppler resolution also provides finer details that can help identify a human (such as the strong returns from movement of hands and weaker returns from individual fingers). In addition, the input data is preprocessed to reduce noise in the range-Doppler map, which is expected to improve the accuracy of the neural network since noise is less likely to be erroneously identified as a human.
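
For readers unfamiliar with range-Doppler maps, here is a minimal sketch of how one can be computed from raw ADC samples with two FFTs, followed by a very simple noise floor. The floor (median plus a margin) is only a stand-in for our preprocessing, which is not described in detail here. The function name, window choice, and `noise_margin_db` are assumptions for illustration.

```python
import numpy as np


def range_doppler_map(adc, noise_margin_db=6.0):
    """Compute a range-Doppler magnitude map in dB for one receive antenna.

    adc: complex array of shape (num_chirps, num_samples).
    Cells weaker than (median + noise_margin_db) are raised to that floor.
    """
    adc = np.asarray(adc)
    # Range FFT along fast time (samples within one chirp).
    rng = np.fft.fft(adc * np.hanning(adc.shape[1]), axis=1)
    # Doppler FFT along slow time (across chirps), zero velocity centered.
    win = np.hanning(adc.shape[0])[:, None]
    rd = np.fft.fftshift(np.fft.fft(rng * win, axis=0), axes=0)
    mag_db = 20.0 * np.log10(np.abs(rd) + 1e-12)
    # Crude denoising: clamp everything below the floor to the floor.
    floor = np.median(mag_db) + noise_margin_db
    return np.maximum(mag_db, floor)
```

In this layout, using more chirps per frame adds rows (finer velocity bins), while keeping fewer range bins drops columns, which is the trade described above.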

Angie's Status Reports

Angie’s Status Report for 4/22

What did you personally accomplish this week on the project?

- This week, I collected a new dataset of 3600 samples (1800 with humans, 1800 without humans) which was used to train the neural network. Compared to the old dataset, the new dataset has doubled velocity resolution and halved range to 5 meters, which is advantageous for our use case since the data beyond 5 meters is superfluous and increases latency.

- I collected 600 samples of test data (300 with humans, 300 without humans), which are not used to train the neural network but to gauge its performance on data it has never seen.

- The above test data, along with the real-time data, is preprocessed as shown in the picture below:

- Ayesha and I wrote code to send radar, GPS, and IMU data from the Raspberry Pi through an HTTP request.
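
A sketch of what sending the sensor data over HTTP might look like is shown below, using only the Python standard library. The JSON field names, the URL, and the function names are hypothetical. Our actual request format may differ.

```python
import json
import urllib.request


def build_request(url, radar_frame, gps, imu):
    """Package one reading as a JSON POST request (not yet sent)."""
    # Field names are placeholders chosen for this example.
    payload = {"radar": radar_frame, "gps": gps, "imu": imu}
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=3.0):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status
```

Building the request separately from sending it makes the payload easy to inspect without a server running.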

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is on schedule.

What deliverables do you hope to complete in the next week?

- Work with Ayesha to write code to send temperature data

- 3D print the chassis to contain the system

- Collect metrics on latency for the integrated system

Angie's Status Reports

Angie’s Status Report for 4/8

What did you personally accomplish this week on the project?

- During the interim demo, I demonstrated the radar subsystem collecting range-Doppler data in real time.

- Together with Linsey and Ayesha, I collected and labeled radar data consisting of indoor scenes with or without humans in different orientations, at different positions, in different poses (standing, sitting, lying on the ground), performing different tasks (hand waving, jumping, deep breathing). In total, we collected 25 ten-second-long scenes at a rate of four frames per second, leaving us with 1000 labeled frames.

- Ayesha and I started integrating the GPS and IMU data with the Raspberry Pi by writing and testing socket code for the Raspberry Pi to send and the web app to receive data over WiFi.
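
For illustration, socket code of this kind often frames each message with a length prefix so the receiver knows where one reading ends and the next begins. The sketch below assumes JSON messages with a 4-byte big-endian length header. That framing and the function names are assumptions, not necessarily what our code does.

```python
import json
import socket
import struct


def send_msg(sock, obj):
    """Send one JSON message prefixed with its 4-byte big-endian length."""
    body = json.dumps(obj).encode("utf-8")
    sock.sendall(struct.pack(">I", len(body)) + body)


def _recv_exact(sock, n):
    """Read exactly n bytes, since recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock):
    """Receive one length-prefixed JSON message and decode it."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return json.loads(_recv_exact(sock, length))
```

The same two functions work on both ends: the Raspberry Pi would call `send_msg` on a connected socket, and the receiving side would loop on `recv_msg`.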

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is on schedule, according to the updated schedule presented at the interim demo.

What deliverables do you hope to complete in the next week?

- Integrate web app with radar, location, and temperature data and conduct corresponding integration tests for latency, data rate, etc.

- Train neural network on our own dataset

- Continue exploring radar preprocessing such as denoising and mitigation of multipath returns

What tests have you run and are planning to run?

- Radar subsystem testing: The radar meets our use case requirements, being able to clearly register a human with moving arms from within 5 meters. The radar is able to do so even when the human is obstructed by glass and plastic or partially obstructed by metal chairs. However, it would be good for us to collect more radar data of moving non-human objects to test how well the radar can specifically distinguish humans from non-humans, measured by its accuracy and F1 score.

- Integration testing: The radar and GPS modules are able to stream data directly to the Raspberry Pi via UART at 4 frames per second. To meet the use case requirements, the web app must be able to receive all that data at the same rate via WiFi within 3 seconds of collection. This metric will be tested after the server is written to receive data from our system.
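
Since accuracy and F1 score are the metrics named above, here is a small sketch of how both can be computed from binary labels (1 for human, 0 for no human). The function name is ours for this example. The formulas are the standard definitions.

```python
def accuracy_and_f1(y_true, y_pred):
    """Return (accuracy, f1) for binary labels: 1 = human, 0 = no human."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # humans found
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # humans missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # correct rejections
    accuracy = (tp + tn) / len(pairs)
    # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when there are no positives.
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1
```

F1 is worth tracking alongside accuracy because it ignores true negatives, so a model that rarely predicts "human" cannot score well on it just by getting empty scenes right.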

Uncategorized

Angie’s Status Report for 4/1

What did you personally accomplish this week on the project?

This week, I tested integration of the Raspberry Pi with the radar, using the OpenRadar project code to stream data from the green board without Texas Instruments’ mmWave Studio, which is only available on Windows.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is behind schedule: we met to collect data containing all of us but could not collect it because the AWR1642 could not connect. After swapping it out for the AWR1843, we will collect data on Sunday.

What deliverables do you hope to complete in the next week?

- Interim demo of real-time data collection

- Test sending data to web app via WiFi

- Test sending alert to web app that system is not stationary or is too hot, based on IMU and temperature data
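
The alert logic in the last item could be as simple as the threshold check sketched below. It assumes the IMU reports acceleration in units of g and treats the system as stationary when the total acceleration is close to 1 g. This is a simplification, and the thresholds and alert names are placeholders, not measured limits.

```python
import math


def check_alerts(accel_g, temp_c, accel_tol_g=0.05, max_temp_c=60.0):
    """Return a list of alert names for one IMU and temperature reading.

    accel_g: (ax, ay, az) acceleration in g.
    temp_c: temperature in degrees Celsius.
    """
    alerts = []
    magnitude = math.sqrt(sum(a * a for a in accel_g))
    # At rest, only gravity is measured, so the magnitude should be about 1 g.
    if abs(magnitude - 1.0) > accel_tol_g:
        alerts.append("not_stationary")
    if temp_c > max_temp_c:
        alerts.append("too_hot")
    return alerts
```

A real check would likely also look at gyroscope readings, since motion at constant velocity does not change the acceleration magnitude.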

Team Status Reports

Team Status Report for 3/25

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

As we begin to test our system more extensively, significant risks include damage to the system during testing, which is especially costly for our project since we use borrowed components worth over $1000 in total. Even though no component except the temperature sensor is exposed to high temperatures, environmental conditions may hasten damage such as antenna corrosion, which is visible on the AWR1642 radar module that came with the green board. To prevent the same from happening to the AWR1843, we have chosen to enclose the system in a radome when testing in high-moisture conditions such as fog. As a contingency, we have two radar modules available in case one fails.

Another risk is that the dataset is not sufficient to train the neural network to detect a human. Right now, the neural network has only been trained on the publicly available Smart Robot dataset, whose target is a corner reflector with a very different radar signature from a human's. To mitigate this risk, our contingency plan is to train the neural network on our own dataset of 3D range-Doppler-azimuth data of actual humans, which we are collecting continually over the course of the semester.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)?

No changes were made this week to the existing design of the system.

Angie's Status Reports

Angie’s Status Report for 3/25

What did you personally accomplish this week on the project?

This week, I tested integration of the Raspberry Pi with each of the other peripheral sensors (the GPS, IMU, and temperature sensor) separately by connecting them and collecting data. I also began training to fly the drone.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is mostly on schedule, except that I tested the other sensors separately without sensor fusion with the radar data, which I will do next week.

What deliverables do you hope to complete in the next week?

- Decide on Monday what the interim demo will encompass

- Make sure the system is able to detect humans for the interim demo

- Collect more 3D range-Doppler-azimuth data in different scenarios for more training

Angie's Status Reports

Angie’s Status Report for 3/18

What did you personally accomplish this week on the project?

This week, I acquired the DCA1000EVM board and tested it with the AWR1843 radar, which makes it easy to collect real-time ADC samples and process them more flexibly into 3D range-azimuth-Doppler maps that fit our ML architecture. After passing an exam, I also obtained a Part 107 license to fly the drone. Although our system can be tested without a drone, our project is designed for drones and we would like to use drones for the demo. It is also important that the system can detect humans from the perspective of a drone looking downward at humans from several meters above. Even when hovering, a drone experiences more perturbations in motion than a ground-based test setup, and it is exposed to Doppler noise from the wind and from moving objects in the environment that is not present at ground level.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is on schedule after receiving the green board.

What deliverables do you hope to complete in the next week?

- Sensor fusion of GPS and IMU data with radar data

- Test methods of increasing resolution of radar data

- Begin integrating radar with drone