What did you personally accomplish this week on the project?

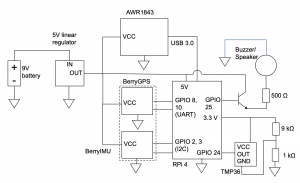

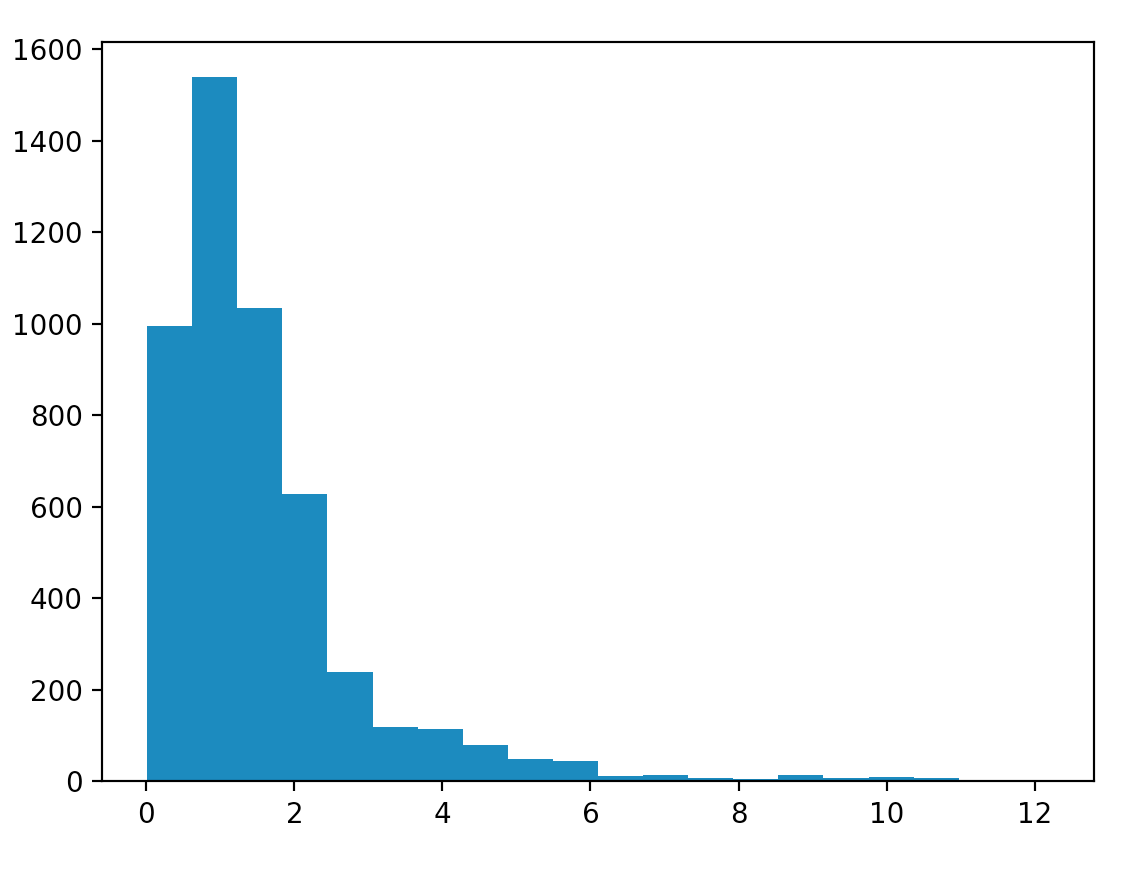

- This week, I worked with Linsey and Ayesha to integrate the GPS with the Raspberry Pi. The GPS could not get a satellite fix indoors; it obtained a fix only after being placed outdoors for nearly an hour. The initial results are that the GPS data is precise (the majority of locations are within 2 meters of the average location, and the standard deviation is 1.5 m) but inaccurate, with reported positions about 20 miles away from the actual location. To maximize location accuracy, the plan is to compensate for this location shift and to filter the latitudes and longitudes over time to reduce the influence of outliers.

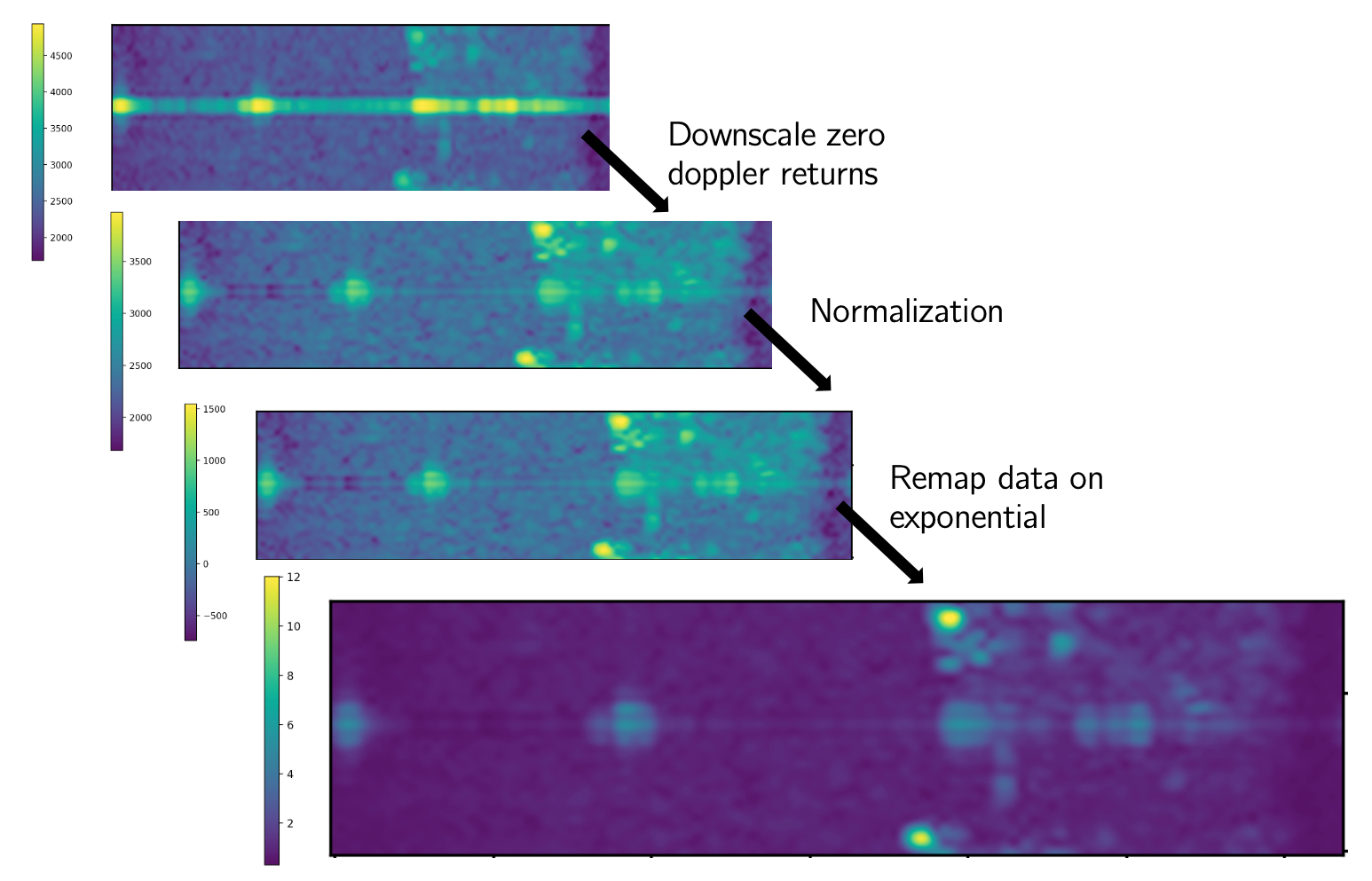

- I collected 3600 more samples of data to train the neural network in hopes of increasing the F1 score. Heeding feedback from the final presentation, I recorded the movement of non-human animals, such as a wasp. The new human data contained more relatively stationary movements such as deep breathing, which decreased the F1 score to 0.33 again, so I then collected data in which the humans move dramatically (including behind barriers), such as arm-waving.
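The GPS post-processing planned in the first bullet above (shift compensation plus filtering over time) could be sketched roughly as follows. This is only an illustration under assumptions, not our actual implementation: the function names, the 15-sample window, the use of a median as the outlier-resistant filter, and the idea of calibrating against one point with known coordinates are all hypothetical choices made for the example.

```python
import math
from statistics import median

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, used for distance checks


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def estimate_offset(fixes, true_lat, true_lon):
    """Estimate a constant (dlat, dlon) shift from fixes logged at a known point.

    Assumption: the shift is constant, so one calibration site is enough.
    """
    med_lat = median(lat for lat, _ in fixes)
    med_lon = median(lon for _, lon in fixes)
    return true_lat - med_lat, true_lon - med_lon


def filtered_position(fixes, offset=(0.0, 0.0), window=15):
    """Median of the last `window` fixes, with the calibration shift applied.

    The median is used (rather than the mean) so a few outlier fixes
    do not pull the estimate away from the cluster.
    """
    recent = fixes[-window:]
    lat = median(p[0] for p in recent) + offset[0]
    lon = median(p[1] for p in recent) + offset[1]
    return lat, lon
```

For example, `estimate_offset` would be run once on fixes logged at a spot whose true coordinates are known, and the returned offset would then be passed to `filtered_position` for every later reading. `haversine_m` can be used to check how many meters of error remain after correction.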

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is behind on GPS integration due to the lack of a connection to CMU-DEVICE and trouble getting a GPS fix. I will catch up by resolving the issues behind the slow GPS fix time and the GPS not remembering its position between reboots.

What deliverables do you hope to complete in the next week?

- Resolve problems with getting a GPS fix quickly (such as by downloading almanacs from the internet)

- Test system on CMU-DEVICE

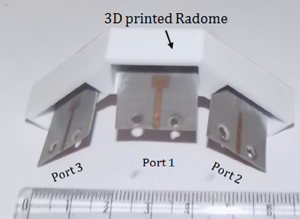

- 3D print the chassis and put all parts in the chassis