Ting’s Status Report 4/29/23

This week we worked with TA Samuel to come up with several options for fixing our ML model, which was detecting everything as trash. The options are listed in our team status report. For now, we are choosing to merge the old drinkables dataset into the new trashnet dataset: we are cleaning up the data and replacing the plastics in trashnet with the old dataset's images. We are doing this because under Pittsburgh recycling rules only bottles without caps can be recycled, and the new dataset doesn't reflect this. Over the weekend I will clean up the datasets so that we can train next week. If this option works early next week we will be OK; otherwise we will be behind schedule.
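Below is a minimal sketch of the merge step. It assumes both datasets use the YOLO txt layout: a root folder with images and labels subfolders, and one "class x_center y_center width height" line per box. The class IDs (TRASHNET_PLASTIC_ID, DRINKABLES_TO_MERGED) and the tn_/dk_ file prefixes are hypothetical placeholders, not our actual values.

```python
import shutil
from pathlib import Path

# Hypothetical IDs: replace with the values from each dataset's data.yaml.
TRASHNET_PLASTIC_ID = 4
# Old drinkables IDs -> new IDs placed after trashnet's classes (assumed unused).
DRINKABLES_TO_MERGED = {0: 6, 1: 7, 2: 8, 3: 9}


def has_class(label_text: str, class_id: int) -> bool:
    """True if any YOLO label line in label_text uses class_id."""
    return any(
        int(line.split()[0]) == class_id
        for line in label_text.splitlines()
        if line.strip()
    )


def remap_labels(label_text: str, mapping: dict[int, int]) -> str:
    """Rewrite the class ID (first column) of every YOLO label line."""
    lines = []
    for line in label_text.splitlines():
        parts = line.split()
        if parts:
            parts[0] = str(mapping[int(parts[0])])
            lines.append(" ".join(parts))
    return "".join(line + "\n" for line in lines)


def _copy_pair(label: Path, images: Path, dest: Path, prefix: str, text: str) -> None:
    """Write a label file and copy its matching image, prefixing names to avoid clashes."""
    for image in images.glob(label.stem + ".*"):
        shutil.copy(image, dest / "images" / (prefix + image.name))
    (dest / "labels" / (prefix + label.name)).write_text(text)


def merge(trashnet: Path, drinkables: Path, dest: Path) -> None:
    """Keep trashnet minus any image containing plastic, then add remapped drinkables."""
    for sub in ("images", "labels"):
        (dest / sub).mkdir(parents=True, exist_ok=True)
    for label in (trashnet / "labels").glob("*.txt"):
        text = label.read_text()
        if has_class(text, TRASHNET_PLASTIC_ID):
            continue  # drinkables' bottle classes replace trashnet's plastics
        _copy_pair(label, trashnet / "images", dest, "tn_", text)
    for label in (drinkables / "labels").glob("*.txt"):
        text = remap_labels(label.read_text(), DRINKABLES_TO_MERGED)
        _copy_pair(label, drinkables / "images", dest, "dk_", text)
```

Dropping every image that contains a plastic box also discards the other objects in those images. The data.yaml names list would also need entries for the new IDs 6 to 9.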

The mechanics are on track to be finished; we just have to install the side lock servos and redo some of the legs. Vasudha and I cut the wood for the backboard and screwed it into the frame with the main servo. The frame of the whole system is now integrated, so we can test with the Jetson. Because the legs started to warp inward, we found that we need angle brackets on the legs and on every other joint we screw together at a 90-degree angle. Next week we will make these adjustments and finish the servo locks, which will complete the mechanics.

Ting’s Status Report 4/22/23

This week I worked on both the mechanical and software portions. On the mechanical side, I helped Vasudha construct the swinging door. We discovered that attaching the servos to the wood first may not have been the best idea: the legs are curved inward, so we will probably have to redo it when we attach the shaft. I think we should work with the lid and the bin directly instead of the legs for now. The platform itself seems too heavy, so we are thinking of switching to plywood. Also, the clamps we ordered were too big, and we have to order smaller ones so that the shaft fits better. Schedule-wise, we need to figure out the swinging mechanism by the end of this week to be comfortable.

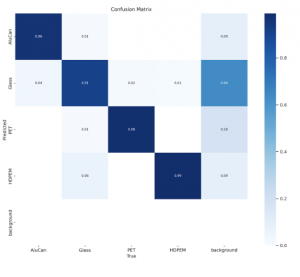

On the software side, I further trained our original model on a trash dataset. Some objects had different class names in the two datasets, so the model was learning minute differences that it wasn't supposed to learn. To fix this, Aichen wrote a script that collapses the labels into 4 classes: one trash class and 3 recyclable classes. Using this script, I retrained our original model on the relabeled trash dataset. We are testing it now, and it currently has trouble assigning recyclables to the right class. We will look into this after final presentations.

Team Status Report 4/22/23

This week on the software side, we trained our original model further on a trash dataset containing 58 classes. When we tested it, it gave us “no detections”. That is when we realized we needed to make the class names consistent across both trash datasets and to use fewer classes, since in the end we only need two: recyclable and non-recyclable. When there were two different names for the same thing, the model was learning minute differences that it should not have been. Aichen wrote a script that changes the label txt files we train with to have only 4 classes: trash and 3 types of recyclables.
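The relabeling step can be sketched roughly as follows. This is not Aichen's actual script. It assumes the labels are YOLO txt files whose first column is the class ID, and the target class names and the NAME_TO_TARGET rules are hypothetical. Mapping by normalized name is one way to make two spellings of the same object land in the same class.

```python
from pathlib import Path

# Assumed target order; data.yaml "names" must list classes in this same order.
TARGET_NAMES = ["trash", "plastic", "glass", "metal"]
# Hypothetical rules: normalized source name -> target class.
# Any source class not listed here falls into "trash".
NAME_TO_TARGET = {
    "plastic bottle": "plastic",
    "glass bottle": "glass",
    "drink can": "metal",
}


def normalize(name: str) -> str:
    """Lowercase and unify separators so 'Plastic_Bottle' matches 'plastic-bottle'."""
    return " ".join(name.lower().replace("_", " ").replace("-", " ").split())


def build_id_map(source_names: list[str]) -> dict[int, int]:
    """Map each source class ID (its index in source_names) to a target ID."""
    return {
        src_id: TARGET_NAMES.index(NAME_TO_TARGET.get(normalize(name), "trash"))
        for src_id, name in enumerate(source_names)
    }


def remap_text(label_text: str, id_map: dict[int, int]) -> str:
    """Rewrite the first column of each YOLO label line using id_map."""
    out = []
    for line in label_text.splitlines():
        parts = line.split()
        if parts:
            parts[0] = str(id_map[int(parts[0])])
            out.append(" ".join(parts) + "\n")
    return "".join(out)


def remap_dir(labels_dir: Path, source_names: list[str]) -> None:
    """Rewrite every label file in place.

    Run this only once: a second pass would treat the new IDs as old ones.
    """
    id_map = build_id_map(source_names)
    for path in labels_dir.glob("*.txt"):
        path.write_text(remap_text(path.read_text(), id_map))
```

Listing TARGET_NAMES in the same order in data.yaml keeps the numeric IDs and the class names lined up.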

On the mechanical side, we started building a major portion of the structure, cutting out the door and making holes in the acrylic using the laser cutting machine, screwing in the wooden legs and frame together, then to the acrylic, installing the servo and the axle, and attempting to connect the door to the axle.

While doing this, we ran into a few issues. First, some of the spots where we needed to screw overlapped, which left some pieces unevenly placed. Second, the larger clamps were hard to stabilize when we used them to hold our acrylic door. At first glance, the door also seems too heavy for the servo to handle. As a first step, we ordered new clamps. If the door is still too heavy after this change, after adding the locks, and after powering the servo, we will order a new servo by the end of next week. Because of all these uncertainties, the mechanical portion is once again one of our biggest risks. To improve stability, we are also planning to remove the unevenly installed legs, since the door can stand on the bin without warping. Once everything on the main frame is installed, we will try to put the legs back.

Currently, ML performance also poses a risk for the whole classification system. Since we added the new datasets and took the “normalized classes” approach to keep the model from being too fine-grained on bottles, its performance has been quite unsteady across classes of items. Even for items it identifies correctly, its confidence is below our 0.85 threshold. The mapping between the numeric class IDs and the class names is also somewhat skewed. However, we believe this is still the right approach, and we'll dig into it right after the presentation. The good news is that the model seems to do well at separating trash from recyclables, even though it might not always get the specific recyclable class right. (The training dataset has multiple recyclable classes and a single trash class.)
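One way to express the final recyclable-vs-trash decision is sketched below. It assumes detections arrive as (class ID, confidence) pairs and that the classes are ordered trash, plastic, glass, metal; those class names are hypothetical. Only the binary outcome matters here, so confusing two recyclable classes does not change the result.

```python
from typing import Iterable

CONF_THRESHOLD = 0.85  # the threshold we agreed on
NAMES = ["trash", "plastic", "glass", "metal"]  # assumed order; must match data.yaml
RECYCLABLE = {"plastic", "glass", "metal"}


def decide(detections: Iterable[tuple[int, float]]) -> int:
    """Return 1 (recyclable) or 0 (trash) from (class_id, confidence) pairs.

    Uses only the single most confident detection. If nothing clears the
    threshold, the result is 0, so an uncertain item goes to the trash.
    """
    best = max(detections, key=lambda d: d[1], default=None)
    if best is None or best[1] < CONF_THRESHOLD:
        return 0
    return 1 if NAMES[best[0]] in RECYCLABLE else 0
```

Defaulting to 0 when nothing clears the threshold is a design choice: an uncertain item goes in the trash rather than risking contaminating the recycling.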

We are somewhat behind schedule, since our respective parts are not working as well as intended after initial testing and need more development work. Our plan is to scale back the mechanical build and install parts in order of priority, starting with the minimum needed to get the device working, then go from there. Specifically, we will have the door portion done by the end of next week and start installing the rest of the hardware that weekend. The ML side is also somewhat behind schedule, since we are not sure what the next steps are for fixing the classification, and we'll take a closer look after the presentation.

Ting’s Status Report 4/8/23

This week we had our interim demo. We showed our system: a camera captures the object, the classification is shown on the monitor, and the system sends a 1 or 0 to the Arduino, which then drives the servos, lights, and sound accordingly. Based on the feedback we received, we worked on refining the ML and adding a CV script that checks for an object separately from YOLO. Our system classified bottles very well, but it had trouble detecting anything that wasn't a bottle. This is because the model was trained only on the four types of drinking waste, so if it doesn't consider an object a bottle, it won't detect it at all.

So I worked on finding a trash dataset to train the model further on. This was more difficult than expected, since we needed a very specific file structure for the images and labels. I have almost integrated a trash dataset I found, but all of its labels are in JSON form. The code that is supposed to convert them to txt recreates the files but leaves them empty, and I will keep working to figure that out. Once this is fixed, I can start training on the trash dataset. We will use it alongside the CV script that Aichen is writing, which gives a score based on how different two images are.
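Here is a minimal sketch for converting JSON labels to YOLO txt files. It assumes the JSON follows the COCO layout: images, annotations, and categories lists, with each bbox given as [x_min, y_min, width, height] in pixels. That layout is an assumption about the dataset, not something we confirmed. YOLO label files instead expect "class x_center y_center width height", normalized to the image size.

```python
import json
from collections import defaultdict
from pathlib import Path


def coco_to_yolo(coco: dict) -> dict[str, str]:
    """Convert a COCO-style dict to {label_file_name: YOLO label text}."""
    # COCO category ids need not be 0..N-1, so re-index them in sorted order.
    cat_ids = sorted(c["id"] for c in coco["categories"])
    cat_index = {cid: i for i, cid in enumerate(cat_ids)}

    # Group annotations by the numeric image id they point to.
    by_image = defaultdict(list)
    for ann in coco["annotations"]:
        by_image[ann["image_id"]].append(ann)

    out = {}
    for img in coco["images"]:
        w, h = img["width"], img["height"]
        lines = []
        for ann in by_image[img["id"]]:
            x, y, bw, bh = ann["bbox"]
            lines.append(
                f"{cat_index[ann['category_id']]} "
                f"{(x + bw / 2) / w:.6f} {(y + bh / 2) / h:.6f} "
                f"{bw / w:.6f} {bh / h:.6f}\n"
            )
        out[Path(img["file_name"]).stem + ".txt"] = "".join(lines)
    return out


def write_labels(json_path: Path, labels_dir: Path) -> None:
    """Read a COCO JSON file and write one YOLO .txt file per image."""
    labels_dir.mkdir(parents=True, exist_ok=True)
    coco = json.loads(json_path.read_text())
    for name, text in coco_to_yolo(coco).items():
        (labels_dir / name).write_text(text)
```

If the output files come out empty, one thing worth checking is whether each annotation's image_id actually matches an id in the images list, since an image with no matching annotations produces an empty label file. If file_name values include subfolders that reuse the same names, the output names would also need to include the folder to stay unique.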

This is an example of a classification instance that sends false to the Arduino.

Next week I will keep training the model on the trash dataset and integrate it with the CV portion. This will keep us on schedule if I finish within the week.

Weekly question:

We did integration testing using the hand sanitizer bottles in Techspark. It worked well on those, but not on items that should count as trash, like a plastic wrapper or a phone. We will continue testing with different kinds of bottles and common pieces of trash to make sure the dataset can handle a wider variety of waste.

Team Status Report 4/1/23

Our biggest risk from last week, being unable to set up the MIPI camera, has been resolved. After some cross debugging, we decided to switch to an external USB camera. Since the switch on Sunday, we have been able to take pictures and run YOLO inference on them in real time. Our software progress on integrating camera capture with triggering detect.py (inference) has been pretty smooth, and we are almost caught up with the schedule. With most of the mechanical parts here, we can take physical measurements to determine the height and angle at which the camera should be placed. The mechanical build is now our biggest risk: despite our rigorous planning, issues may come up during implementation that force us to tweak our design as we go.
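Here is a minimal sketch of triggering detect.py on a captured snapshot, assuming the YOLOv5 repository is checked out locally. The flags used are detect.py's own options. The paths (yolov5/detect.py, best.pt, snap.jpg) are hypothetical, and the camera capture itself is left out.

```python
import subprocess
import sys
from pathlib import Path


def detect_command(detect_script: Path, weights: Path, source: str,
                   conf_thres: float = 0.85) -> list[str]:
    """Build the argument list for YOLOv5's detect.py."""
    return [
        sys.executable, str(detect_script),
        "--weights", str(weights),
        "--source", source,            # an image path, or "0" for the first webcam
        "--conf-thres", str(conf_thres),
        "--save-txt",                  # write a label .txt for each image
        "--save-conf",                 # include each box's confidence in that .txt
    ]


def run_detection(detect_script: Path, weights: Path, image: Path) -> None:
    """Run inference on one snapshot; raises CalledProcessError on failure."""
    subprocess.run(detect_command(detect_script, weights, str(image)), check=True)
```

For example, run_detection(Path("yolov5/detect.py"), Path("best.pt"), Path("snap.jpg")) would run after a frame from the USB camera has been saved. Starting a new process per snapshot reloads the model each time, which is simple but slow. Keeping the model loaded in one long-running process is the usual alternative.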

One example of this came up this past week, after the acrylic piece arrived. We compared it to the plywood in Techspark and the wood pieces the professor provided, and decided to go with the acrylic because its size and thickness fit our bin better. To reduce risk, we will test on scrap pieces before cutting into our acrylic piece. If something still goes wrong, our backup plan is to glue together plywood pieces from Techspark to make a thicker panel and use that.

Updated Design:

https://drive.google.com/file/d/1wiW573DBv5wk_PY8Wq1K_Vxar4fNopX1/view?usp=sharing

As of now, since the materials from our original order will be used, we do not have any additional costs except for the axle, shaft coupler, and clamps mentioned in previous updates. A big thanks to the professor for giving us some extra materials for our frame!

In terms of design, switching to a USB camera did not affect any other components.

We talked to Techspark workers, and they said it would be possible to CAD up the design for us if we gave them a rough sketch, and they could also help us cut using the laser cutters. Next week, we will work with them to start CADing up the swinging lid design.

Here is a picture of our new camera running the detection program. Next week we'll have to fine-tune the model some more, since the bottle here is obviously not glass.

For the demo we will show the hardware components (servos, lights, speaker) as well as the webcam classification of a sample bottle.

Ting’s Status Report 4/1/23

This week, after the CSI camera struggles, we were able to start the camera stream using a USB camera. We were also able to run the YOLOv5 detection script with the webcam stream as the input, so the script ran detection and drew bounding boxes in real time. Prof. Fedder brought in the wood that he cut for us. This let us test the camera height against the wood backing and see how big the platform would have to be for an appropriate field of view. We found that after the script identifies an object, it saves an image of the captured object with the bounding box and label. It turned out we just had to add the --save-txt flag to have the results saved, but it only saved the label and not the confidence value. On further inspection, though, there is a confidence-threshold parameter (--conf-thres) that we can set, so any detection below that threshold doesn't get a bounding box at all. We are on schedule in terms of software. We just have to train the model slightly further next week, since right now it classifies a plastic hand sanitizer bottle as glass with 0.85 confidence. We will work with Techspark workers next week or after the interim demo to CAD up our lid design, and they also said they could help us use the laser cutter.
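With --save-txt, detect.py writes one line per box in the form "class x_center y_center width height", normalized to the image size. These files go under runs/detect/exp/labels/ by default. Adding the --save-conf flag appends the confidence as a sixth column. A minimal parser for those files might look like this. The Detection class is our own illustration, not part of YOLOv5.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass
class Detection:
    class_id: int
    x_center: float  # all four box values are normalized to [0, 1]
    y_center: float
    width: float
    height: float
    confidence: Optional[float]  # only present when --save-conf was used


def parse_label_line(line: str) -> Detection:
    """Parse one line written by detect.py --save-txt [--save-conf]."""
    parts = line.split()
    if len(parts) not in (5, 6):
        raise ValueError(f"unexpected label line: {line!r}")
    cls, *nums = parts
    x, y, w, h = map(float, nums[:4])
    conf = float(nums[4]) if len(nums) == 5 else None
    return Detection(int(cls), x, y, w, h, conf)


def read_detections(label_file: Path) -> list[Detection]:
    """Read all detections for one image."""
    if not label_file.exists():
        return []  # detect.py writes no label file when nothing was detected
    return [parse_label_line(l) for l in label_file.read_text().splitlines() if l.strip()]
```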

Ting’s Status Report for 3/25/23

This week, we successfully migrated the scripts we had been running on Colab to the Jetson. We tested with some random water bottle images, and the results were okay but not great. Still, they would serve our classification purpose given our 0.85 threshold. We then spent a lot of time on the camera setup. It's not going as well as expected: we kept getting a black screen when we started the camera stream. We tried multiple different commands, but even though the Jetson recognized the camera, none of them could get the stream to show up. I also tried running a script that uses OpenCV (cv2), but that didn't work either. I met with Samuel to debug, and he thought we would be better off switching tracks and using a USB camera instead. We will try that next week. We are on schedule with the hardware: all the servos, the lights, and the speaker are coded up and linked to the Arduino. We drew out specific dimensions for the mechanical part, and Prof. Fedder will cut the wood for us. If we get the camera stream working next week and integrate it with the YOLOv5 detect script, we will be on track with the software.

Team Status Report for 3/25/23


This week our team got together with other teams to discuss the ethics of our project. The biggest ethical issue we came up with was that if someone hacked the Jetson, they could alter the built-in rules, which could misinform children and contaminate recycling. During the discussion, the only concern raised was that our idea might simply not be effective, and so could give children a false sense of hope when the outlook for recycling is so bleak. Besides the ethics discussion, we worked on the hardware and on the Jetson camera integration.

On the hardware side, we got the physical circuit built, working, and able to accept serial input from a USB-connected computer (a setup that we will migrate to the Jetson).
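Here is a minimal sketch of the computer side of this serial link. It assumes the protocol is a single ASCII byte: b"1" for recyclable and b"0" for trash. The byte values and framing are assumptions. The port argument can be a pyserial serial.Serial object or anything else with write() and flush().

```python
from typing import Optional, Protocol


class BytePort(Protocol):
    """Anything with write()/flush(), such as a pyserial serial.Serial."""

    def write(self, data: bytes) -> Optional[int]: ...
    def flush(self) -> None: ...


def encode_decision(recyclable: bool) -> bytes:
    """Assumed one-byte protocol: b'1' = recyclable, b'0' = trash."""
    return b"1" if recyclable else b"0"


def send_decision(port: BytePort, recyclable: bool) -> None:
    """Send one decision and wait until it has been written out."""
    port.write(encode_decision(recyclable))
    port.flush()
```

For example, with pyserial you could open the port with serial.Serial("/dev/ttyACM0", 9600, timeout=1). The device path and baud rate are placeholders and must match the Arduino sketch's Serial.begin(). Many Arduino boards reset when the serial port is opened, so waiting a couple of seconds before the first write avoids losing it.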

In terms of mechanical design, a major change we made after talking to the TA and professor was to build the entire frame and door out of wood, avoiding the structural-integrity risks that came with the acrylic. We also decided to use a flexible shaft coupling between the servo and the axle for better movement.

Aichen and Ting struggled to get the camera working with the Jetson. We got the Jetson to recognize that the camera was present, but when the streaming window popped up, it was just black and there were errors. Ting worked with Samuel to debug, and they found that it would be better to just try a USB camera instead. Samuel lent us the camera his team used for their capstone, and Aichen is testing it with the Jetson to see if this is the right path to go down.

Our biggest risk now lies in the camera subsystem. Without the camera working and capturing images, we cannot test our detection and YOLO classification algorithm on real-time pictures. Because we still don't know the root of the problem (a broken Jetson or an unsuitable camera type), debugging and setting up the camera may take some time. Another risk lies in the sizing of the inner door. However, this part should not be too concerning, as we can always start with extra space and make adjustments after experimenting.

On the bright side, the hardware work is caught up: the whole hardware circuit and the serial communication between the Jetson and the Arduino are both done. We are a little behind on mechanics, as there are still more parts to order and it is hard to finalize measurements without physical experimentation. The ML system alone is on track, as training seemed to return great results and the inference code ran on the Jetson as expected. Soon we will start fine-tuning. As mentioned in the risks, the camera is taking much longer than expected, and it is hard to do live testing of detection and classification without it set up.

Looking ahead, our goal for this next week is to catch up as much as we can in terms of our schedule so that we are prepared enough for the interim demo. For the demo itself, as a minimum we hope to have the Jetson classification + hardware operation working, however, our goal by this time is to have everything besides the mechanical portion somewhat integrated and working together. This is essentially to buy time for our mechanical parts to be completely ordered and arrive, and after the demo we can spend the final three weeks fine tuning and connecting our hardware to the mechanical parts to the best of our abilities.

Ting’s Status Report for 3/18/23

This week I got training running on GCP. I created a non-CMU account and got $300 of free GCP credit, which I used to train for 10 and then 20 epochs. The code runs much faster, with 10 epochs taking only 5 minutes. The training accuracy after 20 epochs is over 90% for all four types of drinking waste.

I also started setting up the Jetson. I set up an instance that can be used with Colab and was able to connect Colab to the local GPU. However, I ran into roadblocks when I tried to run inference on a random picture of a bottle. After that, and a conversation with Prof. Tamal, we realized that we can just run it from the terminal, which we will try next week. We also connected the camera to the Jetson and will work on the code to have snapshots from the live camera stream serve as the source for ML inference.

I believe we are on track in terms of software and hardware coding. We are slightly behind on the Jetson and camera integration; having it working this week would have been better. We are definitely behind on the mechanical portion, as our materials have not arrived yet. We plan to digitize a detailed sketch with measurements to send to Prof. Fedder.

Our accuracy is >90% for all 4 types of drinking waste after training for 20 epochs

Example of YOLOv5 labeling images and giving confidence values. They are mostly around 90%, which is past our agreed threshold.