This week, we focused on preparing a basic setup for our demo, starting the mechanical build, further training our model, and improving our detection algorithm.

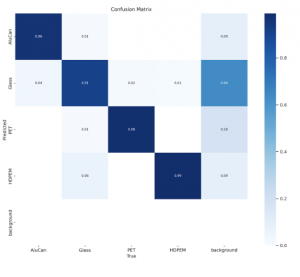

For our demo, we showed the overhead camera capturing images and our model detecting and classifying the objects in each image, then sending a value that the hardware circuit acted upon. The hardware circuit included all the parts for the baseline: the NeoPixel strip, speaker, and servos. We ran into two major issues during the demo. First, the Arduino controlling the hardware kept switching serial ports, which stopped the process midway. Second, the model did not detect anything it did not classify as "recyclable." Over the next few weeks, we plan to fix both issues by writing a script that handles the port switching and by further training our model on a trash dataset so that it can detect both recyclables and trash. In parallel, we will start developing the mechanical build, aiming to have everything built by the deadline.
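One possible shape for the port-switching script is a minimal sketch that looks up the Arduino's current port before connecting instead of hard-coding a path. It assumes the host uses pyserial (`serial.tools.list_ports.comports()`). The vendor-ID list and the helper names `pick_arduino_port` and `find_arduino_port` are illustrative assumptions, not part of our current code.

```python
# Assumed USB vendor IDs: 0x2341 (Arduino), 0x2A03 (Arduino.org boards),
# 0x1A86 (CH340 chips used on many clones). Adjust for the actual board.
ARDUINO_VIDS = {0x2341, 0x2A03, 0x1A86}


def pick_arduino_port(ports):
    """Return the device path of the first port that looks like an Arduino, else None.

    `ports` is any iterable of objects with `device`, `vid`, and `description`
    attributes, such as the ListPortInfo objects pyserial returns.
    """
    for p in ports:
        description = (p.description or "").lower()
        if p.vid in ARDUINO_VIDS or "arduino" in description:
            return p.device
    return None


def find_arduino_port():
    """Query the OS for serial ports via pyserial and pick the Arduino.

    pyserial is imported here so that pick_arduino_port has no dependencies.
    """
    from serial.tools import list_ports
    return pick_arduino_port(list_ports.comports())
```

Before each write, the host script could call `find_arduino_port()` again and reopen the connection if the path has changed (for example, from `/dev/ttyACM0` to `/dev/ttyACM1`), rather than crashing midway.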

Once again, our biggest risk is the mechanical portion. Every other baseline requirement already has at least a basic implementation running, while the mechanical aspect has yet to be physically built. However, we started on it late this past week once all the parts arrived. To manage this risk, we plan to develop the remaining parts in parallel so that our integrated system performs better than it did during our interim demo.

Regarding our schedule, we realized that the model needs more training and the detection algorithm needs improvement, so we will allocate more time to both this week. The hardware is essentially on track. The mechanical build is also on track according to our latest schedule, but we will need to make significant progress over the next one to two weeks to have a working system.

Next week, we plan to have the model detecting both recyclables and waste, and to have the basic mechanical frame taking shape so that we can begin integrating the hardware with it the following week. Integration of the FSM stage that runs before YOLO inference is currently being tested and should be working by next week.
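To illustrate how an FSM can gate YOLO inference, here is a minimal sketch. The state names, event strings, and one-byte commands are all hypothetical and do not describe our actual FSM. The idea is that inference runs only in the `INFERENCE` state, so frames with nothing under the camera never reach the model.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()       # waiting for an item to appear under the camera
    INFERENCE = auto()  # run YOLO on the captured frame
    ACTUATE = auto()    # tell the Arduino how to sort the item


# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (State.IDLE, "item_present"): State.INFERENCE,
    (State.INFERENCE, "classified"): State.ACTUATE,
    (State.INFERENCE, "nothing_found"): State.IDLE,
    (State.ACTUATE, "done"): State.IDLE,
}

# Hypothetical one-byte commands sent over serial for each class label.
COMMANDS = {"recyclable": b"R", "trash": b"T"}


def next_state(state, event):
    """Return the next state; events with no defined transition leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def command_for(label):
    """Map a detected class label to its serial command, or None for unknown labels."""
    return COMMANDS.get(label)
```

A table-driven FSM like this keeps the transitions in one place, which makes it straightforward to unit-test the control flow separately from the camera, the model, and the Arduino.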

Although we completed some basic testing and integration for the interim demo, as we enter the project's verification and validation phase we plan to run more individual tests on each subsystem and then test the integrated system as a whole.