What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

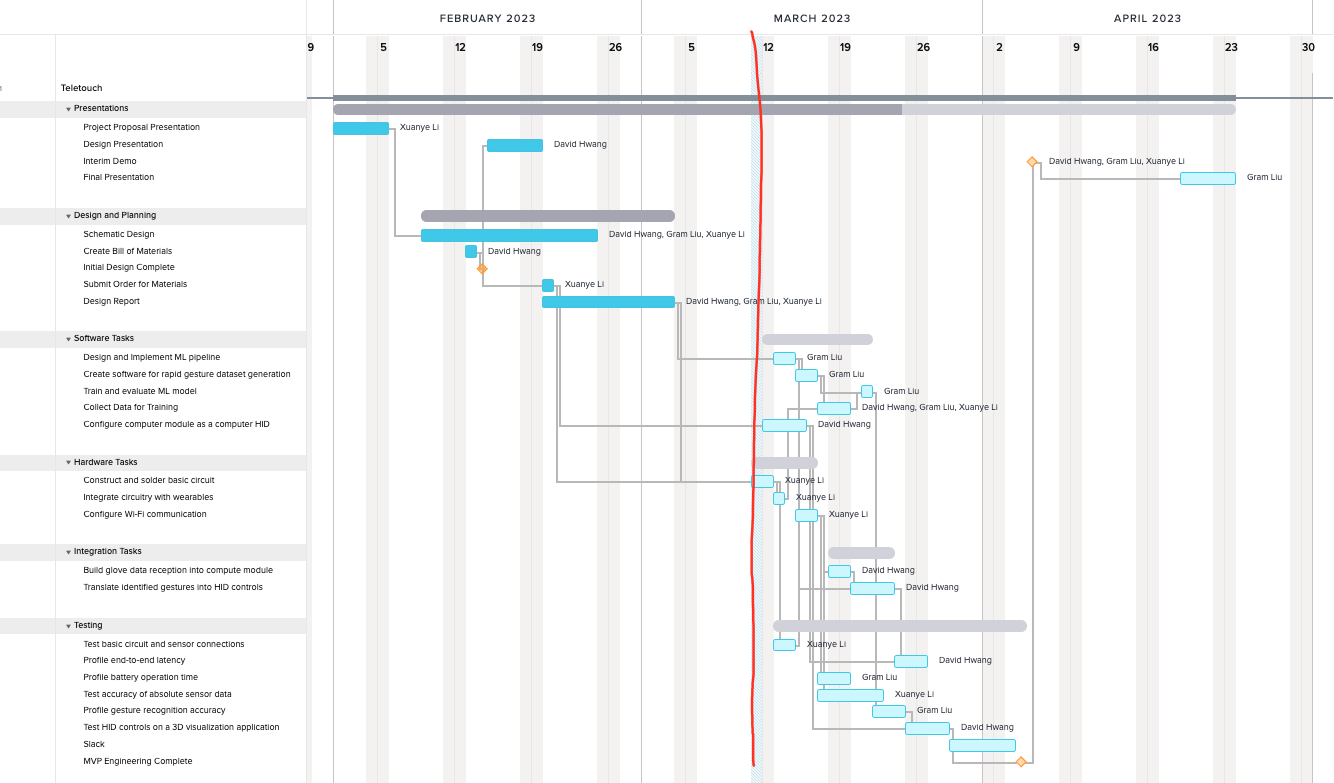

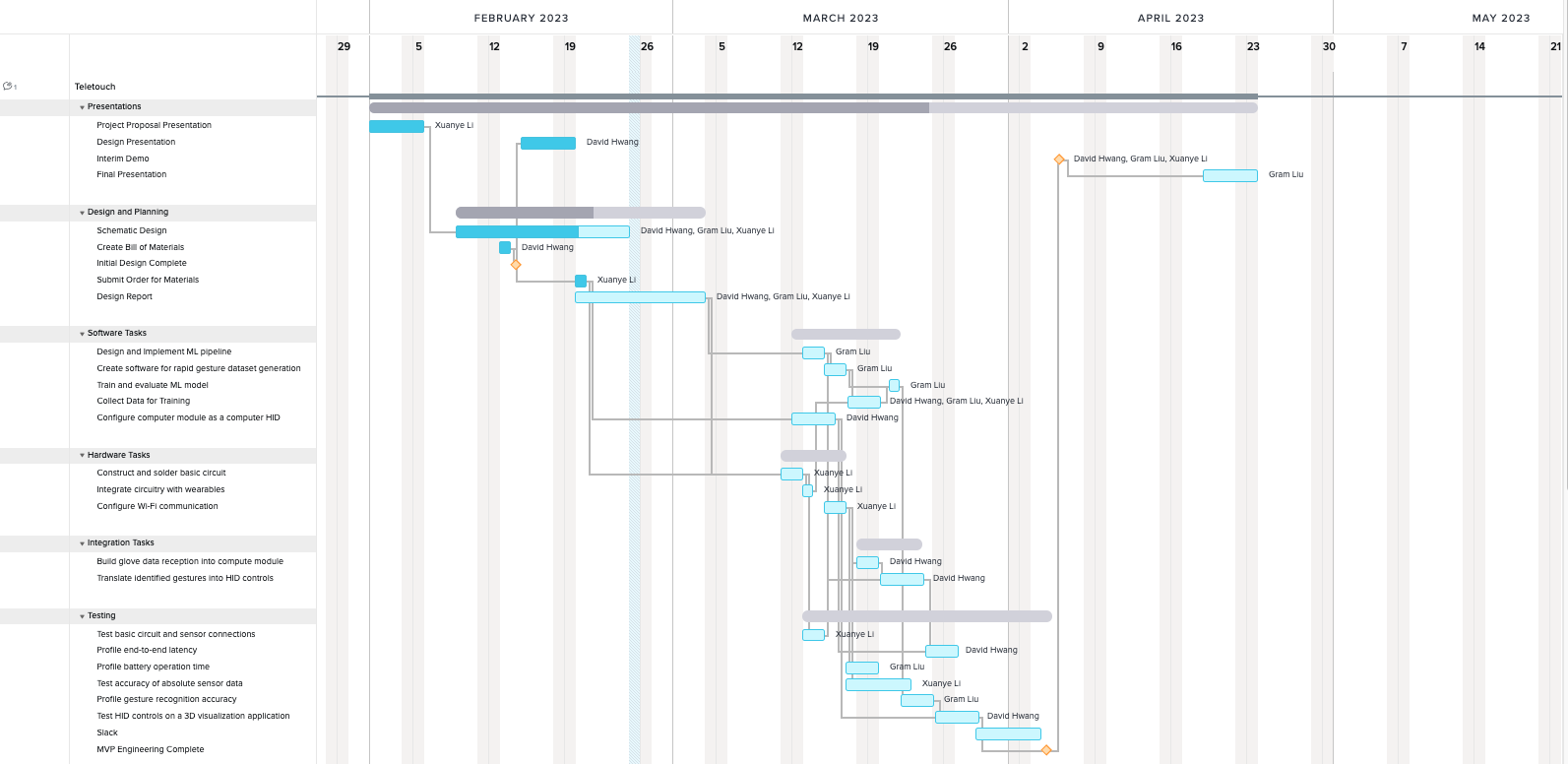

This past week, I worked on the design report. Specifically, I wrote the Introduction, Use Case Requirements, Related Work, and Summary sections, as well as parts of the Design Trade Studies, System Implementation, and Project Management sections.

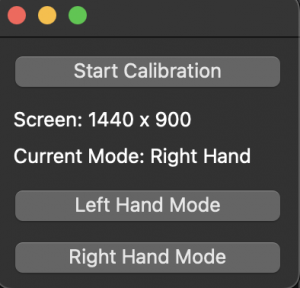

I also began writing the controller code for the compute module. I set up the Mosquitto MQTT broker on the Raspberry Pi so that it can receive messages published by a connected glove, and I wrote a Python program that subscribes to the data packets the glove will publish. Because the glove's MQTT client is still in progress, I tested this functionality by writing a dummy MQTT client in Python that publishes test messages.
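Below is a minimal sketch of this test setup. It makes several assumptions: the paho-mqtt 2.x client library, a broker on the same machine at the default port 1883, a hypothetical topic `glove/data`, and a hypothetical JSON packet format (sequence number, timestamp, and accelerometer/gyroscope triples). The glove's real packet layout may differ. Encoding and decoding are kept separate from the networking code so they can be checked without a running broker.

```python
import json
import random
import time

# Hypothetical topic and packet format; the real glove client may differ.
TOPIC = "glove/data"
BROKER_HOST = "localhost"  # Mosquitto running on the Raspberry Pi
BROKER_PORT = 1883         # default unencrypted MQTT port


def make_dummy_packet(seq):
    """Build a fake glove packet (hypothetical format) as a dict."""
    return {
        "seq": seq,
        "t": time.time(),
        "accel": [random.uniform(-2.0, 2.0) for _ in range(3)],      # g
        "gyro": [random.uniform(-250.0, 250.0) for _ in range(3)],   # deg/s
    }


def encode_packet(packet):
    """Serialize a packet dict to UTF-8 JSON bytes for publishing."""
    return json.dumps(packet).encode("utf-8")


def decode_packet(payload):
    """Parse a received payload back into a packet dict."""
    return json.loads(payload.decode("utf-8"))


def run_dummy_publisher(n_packets=100, rate_hz=50):
    """Publish fake packets, standing in for the unfinished glove client."""
    import paho.mqtt.client as mqtt  # assumes paho-mqtt >= 2.0

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    client.connect(BROKER_HOST, BROKER_PORT, keepalive=60)
    client.loop_start()  # network traffic handled on a background thread
    for seq in range(n_packets):
        client.publish(TOPIC, encode_packet(make_dummy_packet(seq)), qos=0)
        time.sleep(1.0 / rate_hz)
    client.disconnect()  # queue DISCONNECT after the pending publishes
    client.loop_stop()   # then join the network thread


def run_subscriber(handle_packet):
    """Subscribe to glove packets and pass each decoded packet to a callback."""
    import paho.mqtt.client as mqtt

    def on_connect(client, userdata, flags, reason_code, properties):
        # Subscribing here means the subscription is restored on reconnect.
        client.subscribe(TOPIC, qos=0)

    def on_message(client, userdata, message):
        handle_packet(decode_packet(message.payload))

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    client.on_connect = on_connect
    client.on_message = on_message
    client.connect(BROKER_HOST, BROKER_PORT, keepalive=60)
    client.loop_forever()  # blocks and dispatches callbacks
```

On the Pi, `run_subscriber(print)` would print each decoded packet while `run_dummy_publisher()` runs in a second terminal.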

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is still on schedule.

What deliverables do you hope to complete in the next week?

I plan to finish writing the controller code next week. This includes the logic for aggregating received data packets into windows that will be fed into the decision tree. Depending on how quickly the system can process incoming packets, I may also need to implement a buffer queue to handle data packets that arrive faster than the system can process them.
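The windowing and buffering described above could look roughly like the following sketch. The window size, step, and queue capacity are hypothetical placeholders (choosing them is still an open question), and dropping packets when the queue is full is only one possible overflow policy. In this sketch the MQTT message callback would call `enqueue_packet`, and a separate worker thread would run `consume`.

```python
import queue
from collections import deque

# Hypothetical parameters; the right values still need to be determined.
WINDOW_SIZE = 25       # packets per window (e.g. 0.5 s at an assumed 50 Hz)
STEP = 5               # packets to advance between consecutive windows
QUEUE_CAPACITY = 500   # maximum packets buffered before dropping


class SlidingWindower:
    """Group a stream of packets into fixed-size, possibly overlapping windows."""

    def __init__(self, size, step):
        if size <= 0 or step <= 0:
            raise ValueError("size and step must be positive")
        self.size = size
        self.step = step
        self._buf = deque(maxlen=size)  # oldest packets fall off automatically
        self._since_last = 0            # packets pushed since the last window

    def push(self, packet):
        """Add one packet; return a full window (a list) when one is due, else None."""
        self._buf.append(packet)
        self._since_last += 1
        if len(self._buf) == self.size and self._since_last >= self.step:
            self._since_last = 0
            return list(self._buf)
        return None


def enqueue_packet(q, packet):
    """Called from the MQTT callback; never blocks the network thread.

    Returns False (packet dropped) if the consumer has fallen behind and
    the queue is full, so the drop rate can be monitored.
    """
    try:
        q.put_nowait(packet)
        return True
    except queue.Full:
        return False


def consume(q, windower, handle_window, stop_sentinel=None):
    """Worker loop: pull packets off the queue and pass complete windows on."""
    while True:
        packet = q.get()
        if packet is stop_sentinel:
            break
        window = windower.push(packet)
        if window is not None:
            handle_window(window)
```

Overlapping windows (a step smaller than the window size) let a gesture be recognized even when it straddles a window boundary, at the cost of processing each packet several times.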

I will also finish implementing the decision tree. I will first implement the logic for classifying which gesture the user is performing, without necessarily identifying the degree/intensity of the action yet. If the decision tree logic is finished quickly, I hope to implement the degree/intensity determination logic as well.
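As a rough illustration of the classification step, the sketch below represents a decision tree as nested nodes and walks it using features computed from one window. It assumes each packet is a dict with `accel` and `gyro` triples. The feature names, gesture labels, and thresholds are all hypothetical placeholders, not the project's actual tree.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One node of a binary decision tree.

    Internal nodes test features[feature] <= threshold; leaves carry a label.
    """
    feature: Optional[str] = None
    threshold: float = 0.0
    left: Optional["Node"] = None   # followed when value <= threshold
    right: Optional["Node"] = None  # followed when value > threshold
    label: Optional[str] = None     # set only on leaves


def extract_features(window):
    """Summarize a window of packets into named features (hypothetical set)."""
    n = len(window)
    mean = [sum(p["accel"][i] for p in window) / n for i in range(3)]
    # Peak absolute angular rate about each axis over the window.
    peak = [max(abs(p["gyro"][i]) for p in window) for i in range(3)]
    return {
        "mean_ax": mean[0], "mean_ay": mean[1], "mean_az": mean[2],
        "peak_gx": peak[0], "peak_gy": peak[1], "peak_gz": peak[2],
    }


def classify(node, features):
    """Walk from the root to a leaf and return that leaf's label."""
    while node.label is None:
        if features[node.feature] <= node.threshold:
            node = node.left
        else:
            node = node.right
    return node.label


# Hypothetical tree: labels and thresholds are placeholders, not tuned values.
EXAMPLE_TREE = Node(
    feature="peak_gz", threshold=60.0,
    left=Node(label="idle"),
    right=Node(
        feature="mean_ax", threshold=0.0,
        left=Node(label="gesture_a"),
        right=Node(label="gesture_b"),
    ),
)
```

Keeping the tree as data rather than nested `if` statements makes it easy to swap in different thresholds while tuning.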

As you’ve now established a set of sub-systems necessary to implement your project, what new tools has your team determined will be necessary for you to learn to be able to accomplish these tasks?

I will need to learn more about windowed analysis of time-series data so that I can identify the optimal window size. I will likely also need to learn about data smoothing algorithms to reduce jitter. Finally, I will need to learn how to effectively remove the gravity vector from the accelerometer data. This will be challenging because the gravity vector may be split across all three axes, depending on the orientation of the hand.
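Two standard techniques that may be relevant are sketched below: an exponential moving average for smoothing, and a low-pass filter for estimating gravity (the approach shown in Android's `SensorEvent` documentation). Gravity changes slowly relative to hand motion, so filtering each axis independently tracks however gravity is currently split across the three axes. Subtracting that estimate leaves the linear acceleration. The `alpha` values are hypothetical and would need tuning against the actual sample rate.

```python
def ema_smooth(samples, alpha):
    """Exponential moving average: y[n] = alpha * x[n] + (1 - alpha) * y[n-1].

    Smaller alpha smooths more (less jitter) at the cost of more lag.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out = []
    y = None
    for x in samples:
        y = x if y is None else alpha * x + (1.0 - alpha) * y
        out.append(y)
    return out


def remove_gravity(accel_samples, alpha=0.9):
    """Split 3-axis accelerometer samples into linear acceleration and gravity.

    A per-axis low-pass filter estimates gravity, whatever the hand
    orientation; subtracting it acts as a high-pass filter that keeps
    the faster hand motion. Returns (linear_samples, final_gravity).
    """
    if not accel_samples:
        return [], None
    gravity = list(accel_samples[0])  # assumes the hand starts roughly still
    linear = []
    for a in accel_samples:
        gravity = [alpha * g + (1.0 - alpha) * ai for g, ai in zip(gravity, a)]
        linear.append([ai - g for ai, g in zip(a, gravity)])
    return linear, gravity
```

A plain low-pass filter will mistake slow, sustained tilting for gravity. If that turns out to matter, fusing gyroscope data (for example with a complementary or Madgwick filter) is the usual next step.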