This week, our team presented our demo, worked on the component housing for the iRobot, and began further work with OpenCV. In the demo, we showed the progress we have made throughout the semester. The housing is being transitioned from a few acrylic plates to a custom CAD model. Finally, development of the OpenCV beacon detection ROS package has begun and is shaping up nicely. As a team, we are on track with our work.

Keshav Sangam’s Status Report for 4/10

This week, I focused on preparing for the demo and starting to explore OpenCV with ROS. Our plan for detecting beacons with webcams is to build an OpenCV-based ROS package. The package takes in webcam input, runs it through a beacon detection algorithm, and estimates the pose and distance of any detected beacon. From there, we use the ROS Python libraries to publish the estimated offset of the beacon from the robot’s current position to a ROS topic. Finally, we can visualize the beacon within ROS and use it to inform our path planning algorithm. We are currently working on camera calibration and the beacon detection algorithm, and we plan to have the estimated pose and distance of the beacon by the end of the week.
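To make the offset estimate concrete, here is a minimal sketch of how a single detection could be turned into a beacon offset using the pinhole camera model. It is not our actual package: the function name, the parameters, and the assumption that the principal point sits at the image center are all hypothetical, and the focal length in pixels would come from camera calibration.

```python
import math


def estimate_beacon_offset(pixel_width, center_x, focal_px, image_width, beacon_width_m):
    """Estimate a beacon's offset from the camera using one detection.

    Hypothetical helper. Assumes an ideal pinhole camera with the principal
    point at the image center and a beacon of known physical width.
    Returns (forward_m, left_m, bearing_rad).
    """
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")

    # Similar triangles: depth = focal length * real width / apparent width.
    forward_m = focal_px * beacon_width_m / pixel_width

    # Angle between the optical axis and the beacon; positive means the
    # beacon appears left of the image center.
    bearing_rad = math.atan2(image_width / 2.0 - center_x, focal_px)

    # Lateral offset at that depth.
    left_m = forward_m * math.tan(bearing_rad)
    return forward_m, left_m, bearing_rad
```

In a ROS node, the returned forward and left values would be the numbers placed into the published offset message.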

Jai Madisetty’s Status Report for 4/10

This week, I focused on preparing proper housing for the different components. We plan to stick with the same layout as our previous barebones acrylic-and-tape design. We are still waiting on the standoffs, so we have not yet started fixing components into place. I predict that CADing will be the most time-consuming task this week.

While waiting for the standoffs to arrive, we are working on getting our webcam integrated and calibrated with the NVIDIA Jetson. We are also planning on starting the path planning implementation this week. I began researching different algorithms that work and integrate well with ROS.

Raymond Xiao’s status report for 04/10/2022

This week, I am helping to set up the webcam. Specifically, I am working on calibrating the camera, which means estimating its parameters. We are modeling the webcam with the standard pinhole camera model. We currently plan to calibrate the camera on our local machine and then use the resulting parameters on the Jetson. We plan to use this page as a reference for calibration: https://www.mathworks.com/help/vision/camera-calibration.html.
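As a rough illustration of what calibration estimates, the sketch below projects a 3D point in the camera frame to pixel coordinates using pinhole intrinsics (focal lengths and principal point), and measures the reprojection error against an observed pixel. The class and function names are hypothetical, the numbers are made up, and lens distortion is ignored.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical container)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def project(point_cam, k):
    """Project a camera-frame point (x, y, z) in metres to pixel (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def reprojection_error(observed_px, point_cam, k):
    """Pixel distance between an observed point and its projection."""
    u, v = project(point_cam, k)
    return ((u - observed_px[0]) ** 2 + (v - observed_px[1]) ** 2) ** 0.5


# Example with made-up intrinsics for a 640x480 image.
k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
pixel = project((0.1, 0.05, 1.0), k)
```

Calibration amounts to finding the intrinsics that make this reprojection error small across many views of a known pattern.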

We are also setting up our initial testing environment. For now, we are using cardboard boxes since they are inexpensive and easy to set up. Depending on how much time is left, we may move to sturdier obstacles.

Team status report for 04/02/2022

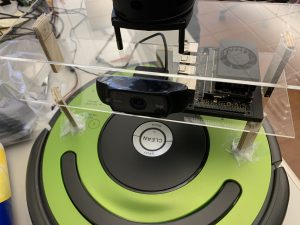

This week, our team worked on setting up the acrylic stand that the sensors and the Jetson will be mounted on. The supports are currently wooden, but we plan to replace them with standoffs. We have most of our initial system components set up for the demo, which is great. As a team, we are on track with our work. Next week, we will continue working on the iRobot programming and implement a module for cv2 ArUco tag recognition.

Raymond Xiao’s status report for 04/02/2022

This week, I helped my team set up for the upcoming demo. Specifically, I worked with Jai on the iRobot sensor mounting system. We used two levels of acrylic with wooden supports for now (we have ordered standoffs for further support). Currently, the first level will house the Xavier and webcam while the upper level will house the LIDAR sensor. I have also been reading the iRobot specification document in more detail, since some parts of it are not supported by the pycreate2 module. This week, I plan to help set up the rest of the system for the demo and to write a wrapper module for the ArUco code that we will be using for human detection.

Keshav Sangam’s Status Report for 4/2

This week, I worked on getting the Jetson to send instructions (in the form of opcodes) to the iRobot in order to get it to move. To do this, we publish linear and angular speed messages to the command velocity ROS topic. From there, we built a driver that converts these speed commands into opcodes telling the iRobot how to move. I forgot to take a video, but this will be demoed on Monday. The next step is creating the path planning algorithm so that the command velocity messages are published by the path planner, making the robot autonomous, rather than by the current keyboard teleoperation.
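For illustration, the conversion from a velocity command to an opcode could look roughly like the sketch below. This is not our driver: it assumes the Create 2 Open Interface "Drive Direct" command (opcode 145, followed by signed 16-bit right and left wheel velocities in mm/s, high byte first), a wheel base of 235 mm, and a 500 mm/s per-wheel limit. The function name is hypothetical.

```python
import struct

DRIVE_DIRECT_OPCODE = 145  # assumed: Create 2 OI "Drive Direct"
WHEEL_BASE_M = 0.235       # assumed distance between the wheels
MAX_WHEEL_MM_S = 500       # assumed per-wheel speed limit


def twist_to_drive_direct(linear_m_s, angular_rad_s):
    """Convert linear/angular speed into a 5-byte Drive Direct packet."""
    half_base = WHEEL_BASE_M / 2.0

    # Differential drive kinematics: a positive angular speed (turning
    # left) makes the right wheel faster than the left wheel.
    right_mm_s = (linear_m_s + angular_rad_s * half_base) * 1000.0
    left_mm_s = (linear_m_s - angular_rad_s * half_base) * 1000.0

    def clamp(value):
        # Round to whole mm/s and keep within the allowed range.
        return max(-MAX_WHEEL_MM_S, min(MAX_WHEEL_MM_S, int(round(value))))

    # ">Bhh": big-endian, one unsigned byte then two signed 16-bit values
    # in the order right wheel, left wheel.
    return struct.pack(">Bhh", DRIVE_DIRECT_OPCODE, clamp(right_mm_s), clamp(left_mm_s))
```

A ROS subscriber on the command velocity topic would call a function like this for each message and write the resulting bytes to the robot's serial port.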

Jai Madisetty’s Status Report for 4/2

This week, our main goal was to get a working demo ready for the coming week. My job was constructing the mounting for all the devices on the iRobot. We decided we needed two levels of mounting, since the LIDAR operates in a 360-degree space and therefore needs a level of its own. Thus, I set the LIDAR on the very top and placed the NVIDIA Jetson Xavier and the webcam on the level below it. Due to delays in ordering the standoffs and the battery pack, the mounting structure is very barebones for now. Here’s a picture:

Once the standoffs arrive, we will modify the laser-cut acrylic to include screw holes so the mounting will be sturdy and legitimate. This week, I plan to get path planning working with CV and object recognition.