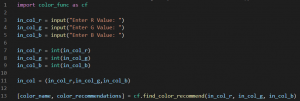

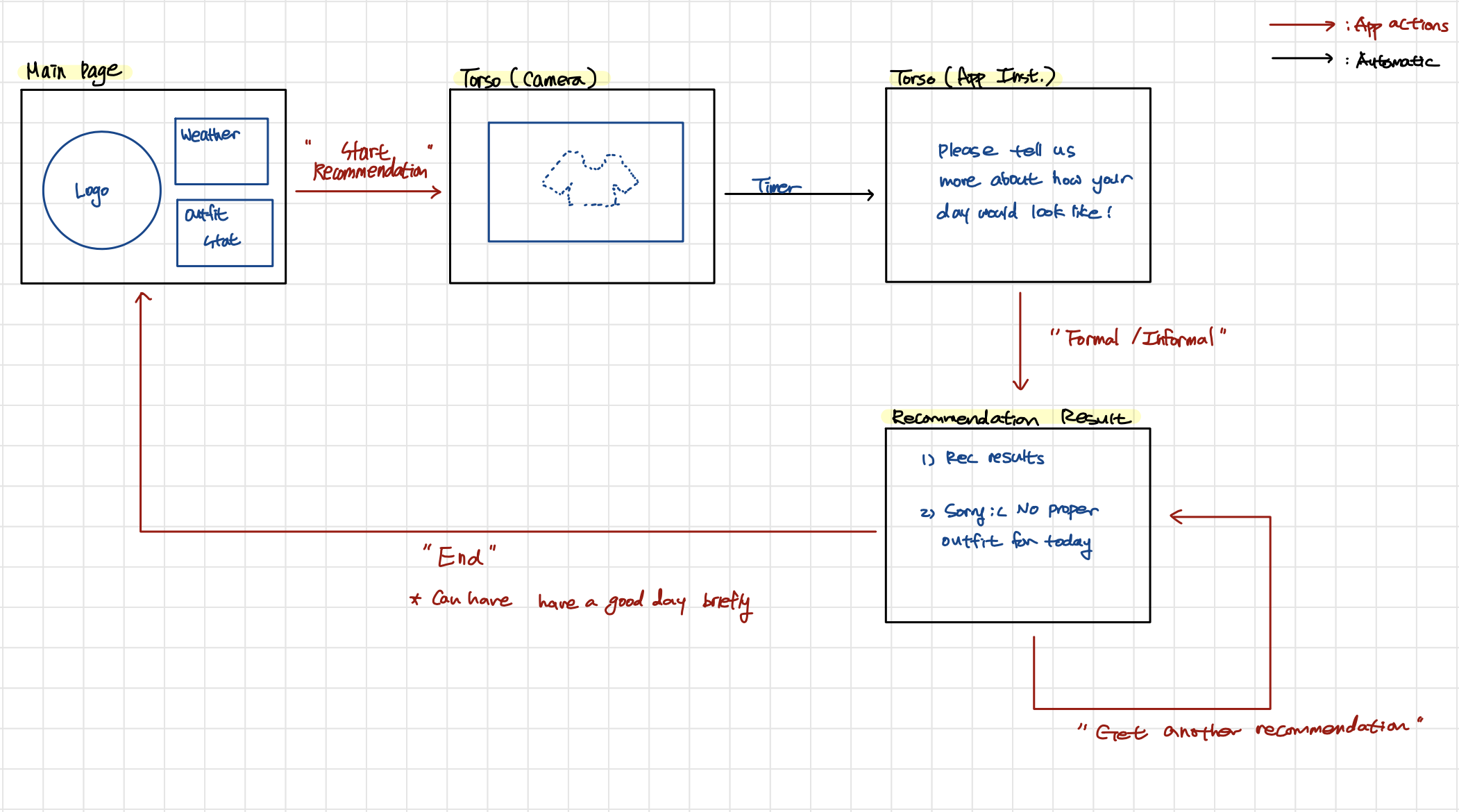

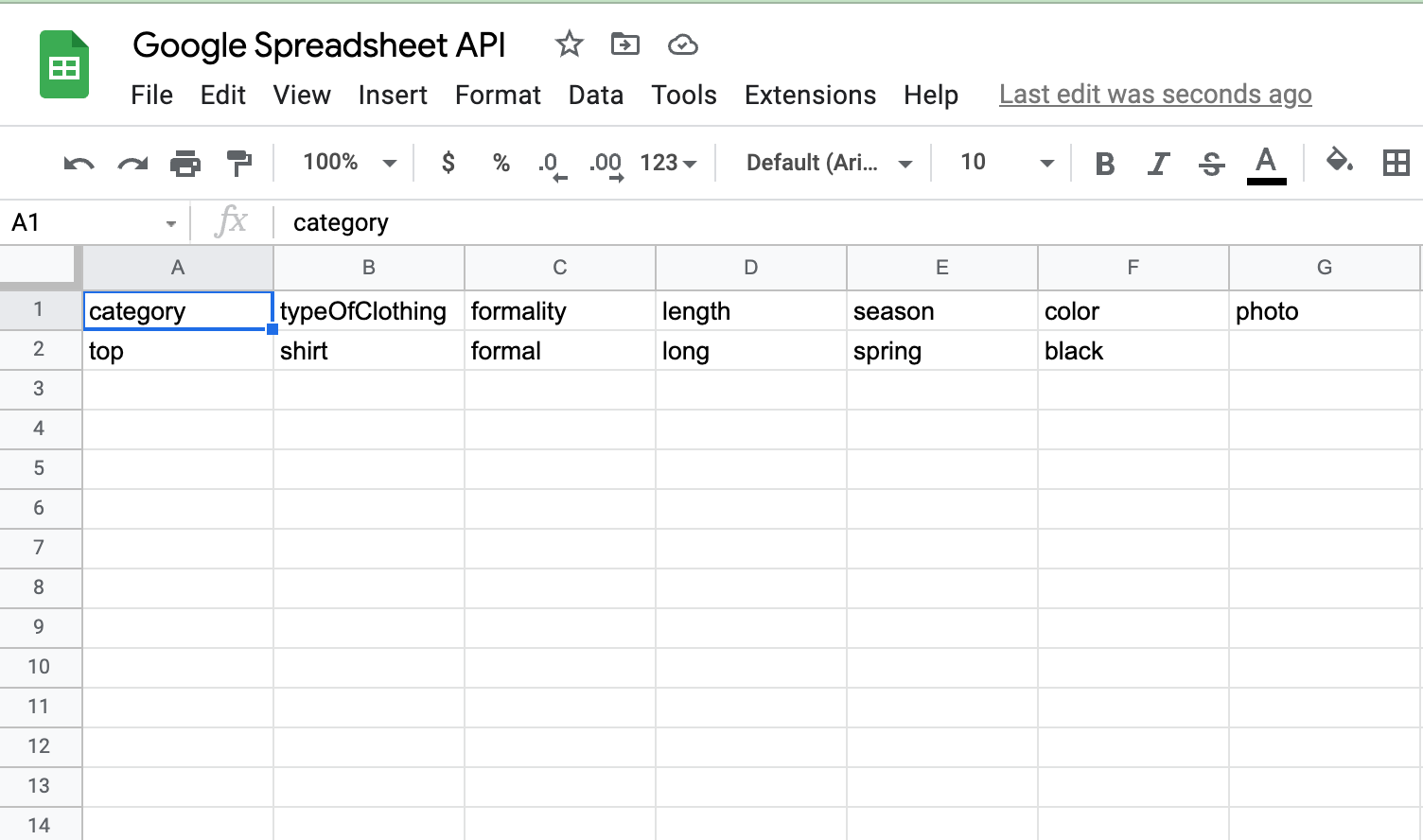

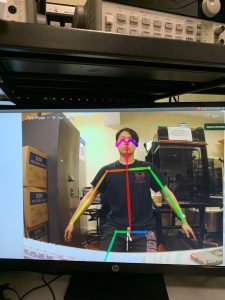

This week I focused on writing a cropping script for the image and a shell automation script so that the code runs automatically. The cropping script reads the JSON output file produced by OpenPose and crops the image taken by the Arducam based on the body keypoints labeled in that file. Cropping focuses the image on the upper body, which makes it easier for Color Thief to detect the upper torso color. The script then automatically saves the cropped image in an easily accessible location on the Jetson Xavier.
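To illustrate the cropping step described above, here is a minimal sketch rather than the actual script. It assumes OpenPose ran with its default BODY_25 model, so each person has a flat `pose_keypoints_2d` list of (x, y, confidence) triples, and that Pillow is installed for the image handling. The function names, the 20-pixel margin, and the confidence threshold are all made up for this example.

```python
import json

# BODY_25 indices used here (assumes the default OpenPose model):
# 1 = neck, 2 = right shoulder, 5 = left shoulder,
# 8 = mid hip, 9 = right hip, 12 = left hip
TORSO_IDS = (1, 2, 5, 8, 9, 12)


def torso_box(pose_keypoints_2d, width, height, margin=20, min_conf=0.1):
    """Return (left, top, right, bottom) around the detected torso keypoints.

    Returns None when fewer than two torso keypoints pass the confidence
    threshold. The margin and threshold are illustrative values.
    """
    points = []
    for i in TORSO_IDS:
        x, y, conf = pose_keypoints_2d[3 * i:3 * i + 3]
        if conf >= min_conf:
            points.append((x, y))
    if len(points) < 2:
        return None

    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    # Pad the box by the margin and clamp it to the image bounds.
    left = max(0, int(min(xs)) - margin)
    top = max(0, int(min(ys)) - margin)
    right = min(width, int(max(xs)) + margin)
    bottom = min(height, int(max(ys)) + margin)
    return left, top, right, bottom


def crop_upper_body(json_path, image_path, out_path):
    """Crop image_path to the first detected person's torso and save it.

    Returns True if a cropped image was written, False otherwise.
    """
    from PIL import Image  # Pillow, assumed to be installed

    with open(json_path) as f:
        people = json.load(f)["people"]
    if not people:
        return False

    img = Image.open(image_path)
    box = torso_box(people[0]["pose_keypoints_2d"], img.width, img.height)
    if box is None:
        return False
    img.crop(box).save(out_path)
    return True
```

Keeping the box computation separate from the file handling makes it possible to check the crop coordinates without a camera or OpenPose available.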

The shell script ties together the components for the first part of our recommendation process. It takes 20 pictures with the Arducam, selects the best-lit one (the 20th picture taken), and runs OpenPose only on that picture, which reduces our run time since OpenPose is slow. The script then passes that image through the cropping script I wrote this week and saves the result. Finally, it runs the cropped image through the Color Thief API that Yun organized and produces an output.txt file containing the dominant color of the image.
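The order of the steps can be sketched as follows. This is only an outline of the control flow, written in Python instead of shell, and every step is passed in as a function because the real camera, OpenPose, cropping, and Color Thief commands are not shown here. The name `run_pipeline`, the file names, and the comma-separated format of output.txt are assumptions for illustration.

```python
from pathlib import Path


def run_pipeline(capture, run_openpose, crop, dominant_color,
                 workdir, n_frames=20):
    """Chain the steps: capture, pose estimation, crop, dominant color.

    All four step arguments are hypothetical callables standing in for
    the real commands:
      capture(path) -> path of the saved picture
      run_openpose(image_path) -> path of the keypoint JSON file
      crop(json_path, image_path, out_path) -> path of the cropped image
      dominant_color(image_path) -> (r, g, b)
    """
    workdir = Path(workdir)
    workdir.mkdir(parents=True, exist_ok=True)

    # Take n_frames pictures and keep only the last one.
    frames = [capture(workdir / f"frame_{i:02d}.jpg")
              for i in range(1, n_frames + 1)]
    best = frames[-1]

    # OpenPose is the slow step, so it runs once, on the chosen frame.
    keypoints_json = run_openpose(best)
    cropped = crop(keypoints_json, best, workdir / "cropped.jpg")

    # Write the dominant color as "r,g,b" (format assumed for this sketch).
    r, g, b = dominant_color(cropped)
    out = workdir / "output.txt"
    out.write_text(f"{r},{g},{b}\n")
    return out
```

Passing the steps in as functions means the ordering can be exercised with stand-ins before the hardware and models are connected.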

Our individual chunks are pretty much done, and we started integrating them today, so if we work hard this week our team should be able to finish on schedule. Next week I will work on connecting the part I finished this week with Ramzi’s recommendation algorithm.