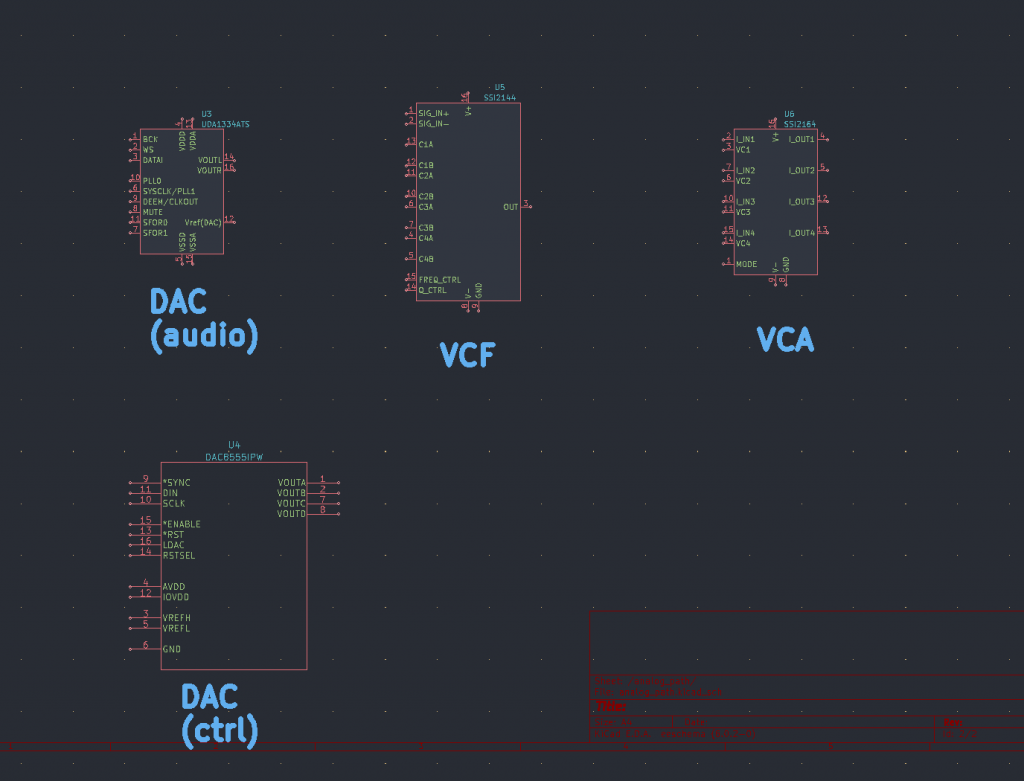

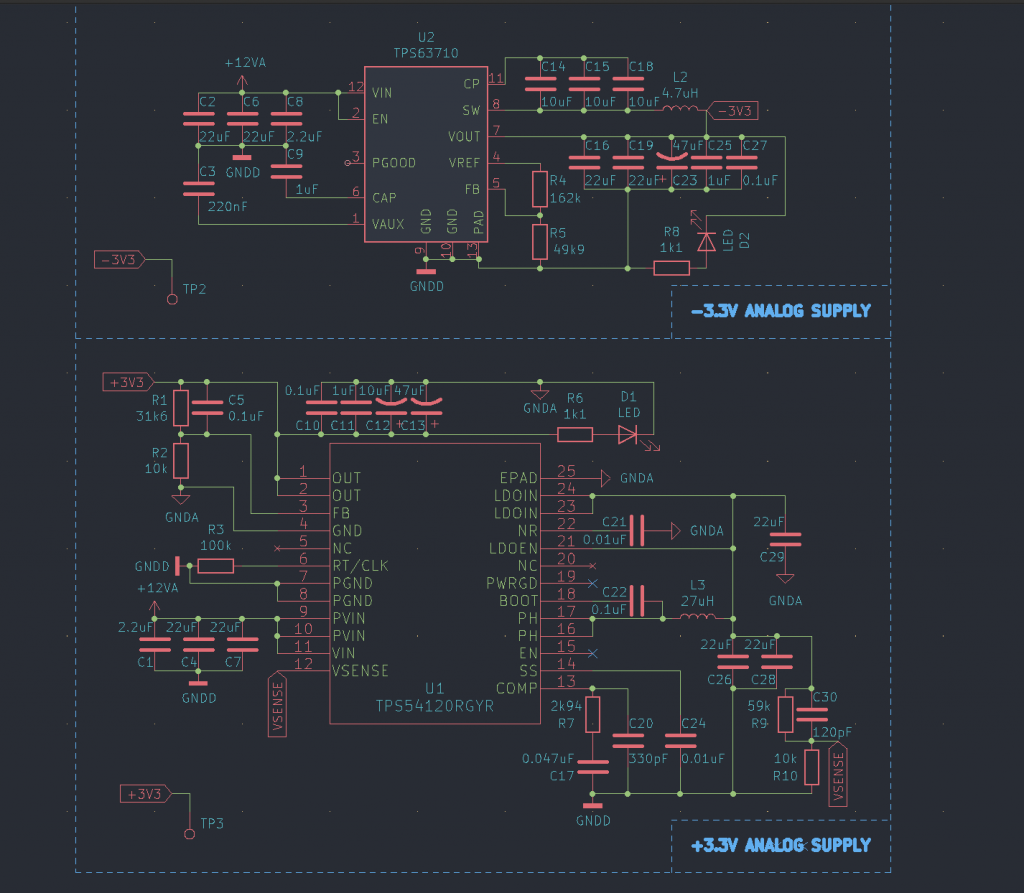

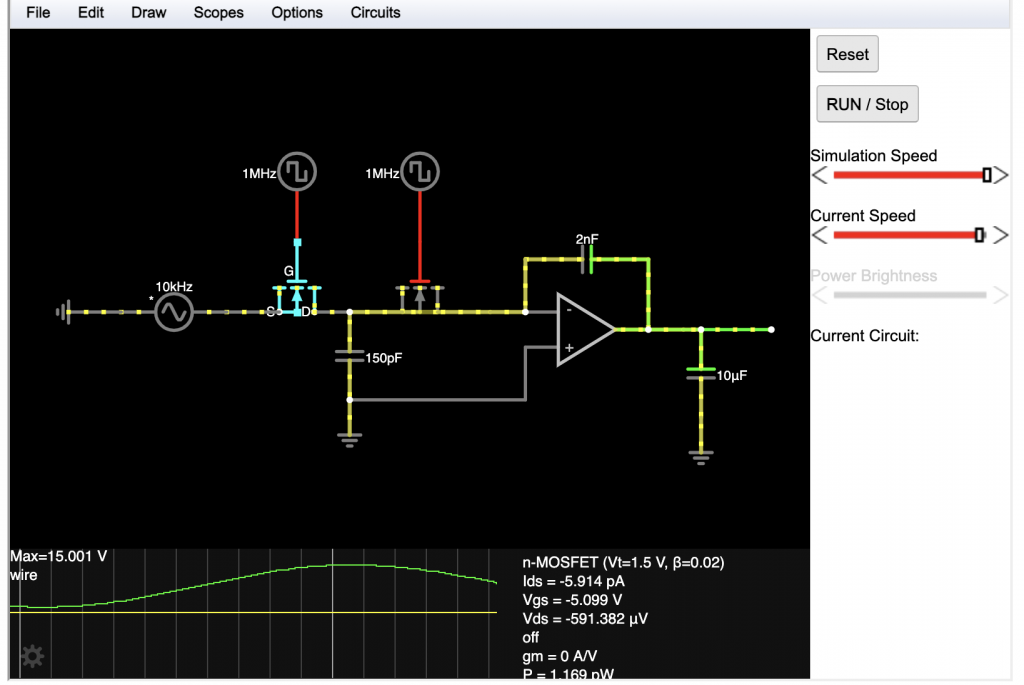

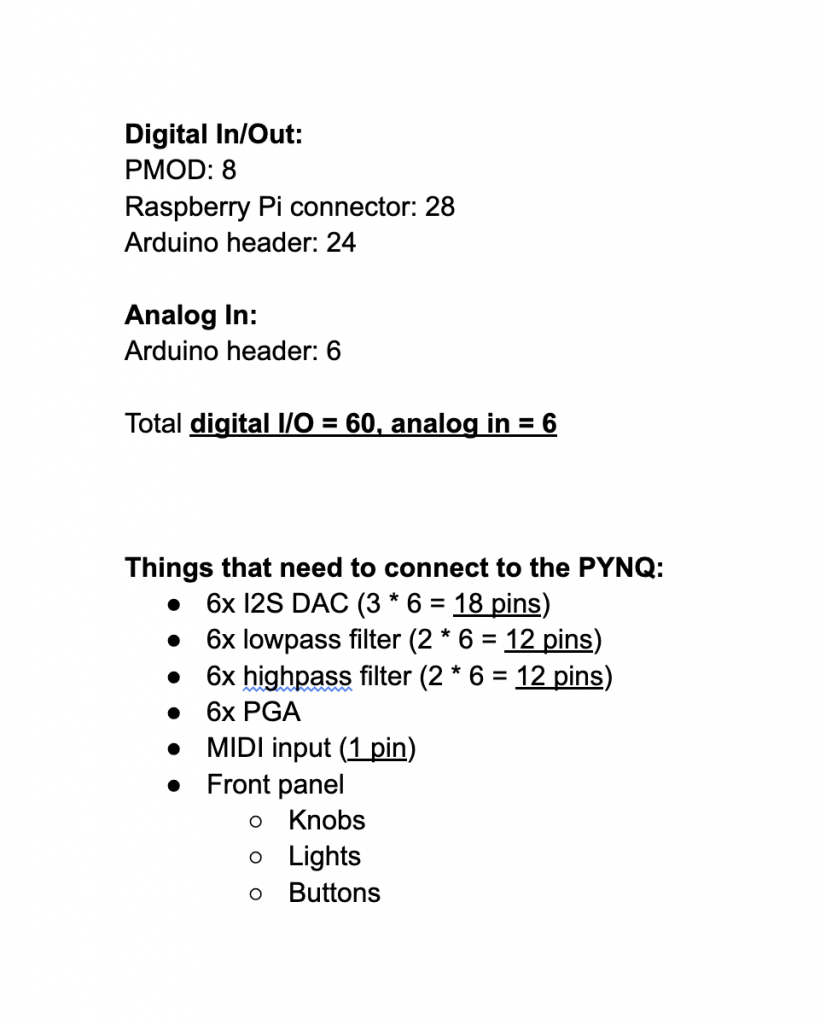

We now have a clear path toward building the PROGNOSTICATOR-6. We changed the original design last week and have not altered it since. With the design simplified, our greatest remaining challenges appear to be integration and implementing some of the more complex aspects of synthesis. The analog path may be simpler now, but, as Sam discussed in the design review presentation, we must still be careful not to introduce noise.

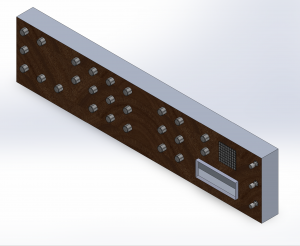

Putting too much emphasis on the user interface and aesthetics may be risky when many other elements of the project still need attention. What use is a synthesizer that looks pretty but does not sound good? If the FPGA work becomes overwhelming, Sam and Graham will need to shift more of their time to it so that the essentials are completed on time.