I am unable to provide the deliverables I promised last week. The schedule was tighter than I anticipated, so I could not properly tune the blob detection parameters. In its current state, the detector only picks up the shadow in the corner of the image. My goal is to deliver a blob detector with better-tuned parameters by next week's deadline.

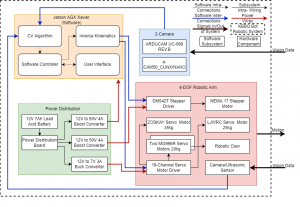

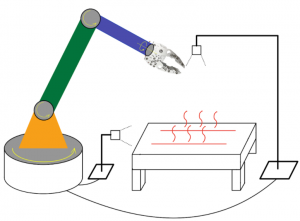

The main reason was the tight turnaround between the design presentation and the design report. During the past week (from last Saturday to 2/26), much of my time went into the design presentation, mainly coordinating with team members on the exact design requirements of the project. Open questions came up about finding a temperature probe that can withstand 500°F or higher, as well as about the best type of object detection to use. Furthermore, since I presented the design slides, I was responsible for rehearsing every point in the presentation and making sure everyone's slides and information were consistent. In addition, because Joseph, not I, has the robotics background, I had to consult him and do my own research to understand the specific design requirements.

This week, following the feedback from our presentation, I also researched other classification systems. Our two main issues are (1) not having enough images to build a coherent dataset, which explains our lower classification accuracy, and (2) our choice of a neural network for image classification over other classification approaches. One possible risk-mitigation tool I found is to identify objects with a different method, such as SIFT (Scale-Invariant Feature Transform). However, that would require instructing the user to place food in a predetermined position (for example, not rolling up thicker slabs of meat).

We are on schedule for class assignments, but I am slightly behind on configuring the blob detection algorithm. I am not too concerned about this, because the blob detection work was already ahead of our planned schedule.
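While the detector's own parameters are still being tuned, one option for keeping the corner shadow out of the results is to post-filter its output by blob size and by distance from the image border. The sketch below is a hypothetical illustration, not the current implementation. It assumes each detection can be reduced to a centre and a radius in pixels. The `Blob` class, the `filter_blobs` function, and every threshold value are invented for this example. If the detector is OpenCV's `SimpleBlobDetector`, its `minArea`/`maxArea` parameters serve a role similar to the radius range used here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Blob:
    # Hypothetical detection record: centre (x, y) and radius, all in pixels.
    x: float
    y: float
    radius: float


def filter_blobs(
    blobs: List[Blob],
    width: int,
    height: int,
    min_radius: float,
    max_radius: float,
    border_margin: float,
) -> List[Blob]:
    """Keep blobs inside the radius range that do not touch the image border.

    Illustrative post-filter only; the thresholds are placeholders, not tuned values.
    """
    kept = []
    for b in blobs:
        # Reject blobs that are too small (noise) or too large (e.g. a broad shadow).
        if not (min_radius <= b.radius <= max_radius):
            continue
        # Reject blobs whose bounding circle comes within border_margin of any frame edge,
        # since edge and corner shadows tend to hug the frame.
        touches_border = (
            b.x - b.radius < border_margin
            or b.y - b.radius < border_margin
            or b.x + b.radius > width - border_margin
            or b.y + b.radius > height - border_margin
        )
        if touches_border:
            continue
        kept.append(b)
    return kept


if __name__ == "__main__":
    detections = [
        Blob(x=15, y=12, radius=20),    # shadow-like blob in the top-left corner
        Blob(x=320, y=240, radius=35),  # candidate food item near the centre
        Blob(x=100, y=200, radius=2),   # small speck of noise
    ]
    print(filter_blobs(detections, width=640, height=480,
                       min_radius=10, max_radius=150, border_margin=5))
```

With these illustrative values, only the centre blob survives. The corner blob extends past the frame edge, and the 2-pixel blob falls below `min_radius`.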