This week, progress was a bit slow because of a heavy workload from other classes and other things going on in life. I also found that I am not as familiar with Python as I thought I was. I have been using the Python library tkinter and have something basic working, but it does not look great yet. I am confident it will be in good shape by our interim demo, though. I will have to put in some extra work this upcoming week, but it is nothing I won’t be able to handle.

Joseph Jang’s Status Report for 3/26

This week, I set up the Jetson AGX Xavier and started reviewing the inverse kinematics (IK) for the robotic arm. I am going through the tutorials for using Python with ROS to get a better understanding of robot simulation, and I am also reading the lecture notes for 16-384, Robot Kinematics and Dynamics. There are many resources available, but I am currently leaning towards using Robotics System Toolbox, a MATLAB & Simulink library. I have started experimenting with its IK solver algorithms and with a simulation model of the arm’s end effector. I am having some trouble understanding all of the math behind a 4-DOF robotic arm; I think I will eventually get there, but for now I will simulate the arm.
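To make the math behind a 4-DOF arm more concrete, here is a minimal geometric IK sketch in plain Python. It assumes a hypothetical layout (a base yaw joint followed by three pitch joints: shoulder, elbow, wrist) and made-up link lengths; it is not derived from our actual arm or from Robotics System Toolbox. The base angle points the arm’s plane at the target, the wrist point is found by backing off from the tool tip along the desired pitch, and the shoulder and elbow come from the standard two-link law-of-cosines solution.

```python
import math

# Hypothetical arm layout: joint 0 yaws the whole arm about the vertical axis,
# joints 1-3 (shoulder, elbow, wrist) pitch within that vertical plane.
# Link lengths l1, l2, l3 and base height base_h are placeholders.


def forward_kinematics(t0, t1, t2, t3, l1, l2, l3, base_h):
    """Return the (x, y, z) tool-tip position for joint angles in radians."""
    r = l1 * math.cos(t1) + l2 * math.cos(t1 + t2) + l3 * math.cos(t1 + t2 + t3)
    z = base_h + l1 * math.sin(t1) + l2 * math.sin(t1 + t2) + l3 * math.sin(t1 + t2 + t3)
    return r * math.cos(t0), r * math.sin(t0), z


def inverse_kinematics(x, y, z, pitch, l1, l2, l3, base_h):
    """Return joint angles (t0, t1, t2, t3) placing the tool tip at (x, y, z)
    with tool pitch t1 + t2 + t3 == pitch. Picks the solution with t2 >= 0.
    """
    t0 = math.atan2(y, x)          # base yaw toward the target
    r = math.hypot(x, y)           # horizontal reach in the arm's plane
    # Wrist center: step back from the tip along the tool direction by l3.
    wr = r - l3 * math.cos(pitch)
    wz = (z - base_h) - l3 * math.sin(pitch)
    # Two-link (l1, l2) problem to the wrist, solved with the law of cosines.
    c2 = (wr ** 2 + wz ** 2 - l1 ** 2 - l2 ** 2) / (2 * l1 * l2)
    if abs(c2) > 1 + 1e-9:
        raise ValueError("target is out of reach for these link lengths")
    t2 = math.acos(max(-1.0, min(1.0, c2)))
    t1 = math.atan2(wz, wr) - math.atan2(l2 * math.sin(t2), l1 + l2 * math.cos(t2))
    t3 = pitch - t1 - t2           # wrist supplies the remaining pitch
    return t0, t1, t2, t3
```

Feeding the IK result back through `forward_kinematics` should reproduce the target, which is a quick way to sanity-check any IK solver, including one from a toolbox.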

Team Status Report for 3/26

Computer vision work on blob detection is progressing smoothly, and there seem to be no hiccups in the project schedule. Joseph has been making steady progress on the robotic arm, and the grill has arrived. This is a crucial step because he can now get visual feedback on how the arm will interact with the grill environment. Jasper has been working hard on the UI and on some of the more fine-tuned aspects of the system, such as the cooking time algorithm.

From the ethics class, one ethical issue we had not considered is that the robot might mistake a child’s arm for one of the pieces of meat to be picked up. Our team will need to look into this further, but one solution is to add a manual stop button for the robotic arm in the user interface. This issue would most likely affect people with more reddish skin, such as fair-skinned (for example, Caucasian) or sunburnt people. More testing is needed to see how the blob detector responds to different skin complexions.
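A minimal sketch of the manual stop idea, assuming the arm’s motion loop runs in a background thread and the UI is built with tkinter. The class `ArmController`, its methods, and `add_stop_button` are hypothetical. The design choice is that the control loop checks a shared `threading.Event` before every small move, so pressing the button takes effect within one step rather than after a whole pick-up.

```python
import threading
import time


class ArmController:
    """Hypothetical wrapper around the arm's motion loop with an emergency stop."""

    def __init__(self):
        self._stop = threading.Event()  # thread-safe flag shared with the UI
        self.steps_done = 0

    def emergency_stop(self):
        """Request an immediate halt; safe to call from the tkinter UI thread."""
        self._stop.set()

    def reset(self):
        """Clear the stop flag once the grill area has been checked."""
        self._stop.clear()

    @property
    def stopped(self):
        return self._stop.is_set()

    def run_motion(self, n_steps, step_time=0.01):
        """Run up to n_steps small moves; return False if aborted by a stop request."""
        for _ in range(n_steps):
            if self._stop.is_set():
                return False
            time.sleep(step_time)  # placeholder for sending one small motor command
            self.steps_done += 1
        return True


def add_stop_button(parent, controller):
    """Attach a large STOP button to an existing tkinter window or frame."""
    import tkinter as tk  # local import keeps the controller usable without a display

    button = tk.Button(parent, text="STOP ARM", fg="red",
                       font=("Helvetica", 24, "bold"),
                       command=controller.emergency_stop)
    button.pack(padx=20, pady=20)
    return button
```

In a real UI, `run_motion` would run in its own `threading.Thread` so the tkinter main loop stays responsive and the button remains clickable while the arm moves.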

Raymond Ngo’s Status Report for 3/26

As promised last week, I solved the issue of blob detection not working on larger blobs. It turns out that I could not solve it earlier because I was turning off filtering mechanisms one at a time, when I actually had to disable multiple preset parameters together to get blob detection to work on larger images. This past week I also worked on improving the blob detector and edge detector on different types of images. In last week’s report, I was only testing on a simple slab of meat on a white background. This week, I obtained more images, especially the type seen below where the meat is on a tray, which is how I expect the final environment to look. As you can see, my parameter changes make edge and blob detection accurate in more scenarios (note the 3 circles for the 3 trays of meat).

Next week, my two primary tasks are to begin setting up a neural network and to get the blob and edge detectors to take in a live camera feed so I can test their effectiveness in a more real-world setting. I also anticipate collecting and tagging a dataset.

Jasper Lessiohadi’s Status Report for 3/19

We received all of the supplies we requested, so we can now see how all of our parts fit together. I am still working on the UI because I am not yet satisfied with how everything is arranged. I am experimenting with button shapes and locations, and with how best to show the sections of the grill through the camera feed. Getting the camera feed itself to show up has also been a bit of an issue, but I do not think it will be too complicated to fix. I think the main challenge will be getting the UI, robotic arm, and CV to interact correctly with each other, but we will make it work by the time the demo comes around.
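One way to show the grill sections over the camera feed, sketched under assumptions (a fixed rows x cols grid and hypothetical function names), is to compute the section rectangles once from the frame size and draw them as outlines on a tkinter `Canvas` above the camera image. `draw_sections` only calls the standard `Canvas.create_rectangle` and `Canvas.create_text` methods; the camera image itself could be placed on the same canvas, for example via Pillow’s `ImageTk.PhotoImage`.

```python
def grill_sections(width, height, rows, cols):
    """Split a width x height frame into rows x cols rectangles (x0, y0, x1, y1).

    Integer division spreads leftover pixels so the grid covers the whole frame.
    """
    sections = []
    for r in range(rows):
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            y0, y1 = r * height // rows, (r + 1) * height // rows
            sections.append((x0, y0, x1, y1))
    return sections


def draw_sections(canvas, sections):
    """Outline each section on a tkinter Canvas and label it with a 1-based index."""
    for i, (x0, y0, x1, y1) in enumerate(sections):
        canvas.create_rectangle(x0, y0, x1, y1, outline="yellow", width=2)
        canvas.create_text((x0 + x1) // 2, (y0 + y1) // 2,
                           text=str(i + 1), fill="yellow")
```

Keeping the geometry in a pure function means the same rectangles can later be shared with the CV code to report which section a piece of meat is in.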

Team Status Report for 3/19

For spring break and the first week back, we started developing each of our respective subsystems. Raymond has made progress on the CV algorithm and is currently trying to get his blob detection algorithm working. Jasper has started working on the UI and deciding what information to display. Joseph has finished the mechanical robotic arm as well as the arm’s electrical subsystem, and he has started on the low-level embedded software that controls the stepper and servo motors. Our parts were all delivered and picked up this week. We are all on pace to have the robotic arm and our respective subsystems working by the interim demo. We have not started on the software controller, but we believe it is best done as part of the integration process, after the interim demo. We have also each completed the ethics assignment and are ready to discuss it with other groups. We will update the progress in our schedule accordingly.

Raymond Ngo’s Status Report for 3/19

I was able to modify test images to isolate their red portions. From there, I could easily obtain the outer edges using the Canny edge detector. Isolating the red portions of the image is necessary for the computer vision system because the meat is red, and Canny edge detection on an unmodified image does not return a clean outer border around the meat (shown below).

The main issue I need to solve by next week to stay on track is figuring out why the CV blob detector function does not work well on the processed binary image (shown below). Otherwise, I am on track to complete a significant portion of the width detection and blob detection algorithms.

Preprocessing is needed because meat is usually not on a clean white background like this one. Distracting background features must therefore be removed for more accurate detection of the meat.

By next week, some form of blob detection must work on this test image, and ideally on one other, more crowded image.

Joseph Jang’s Status Report for 3/19

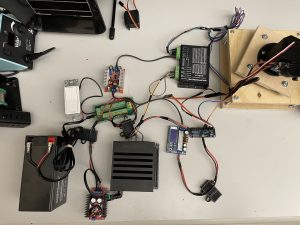

I have made good progress on the robotic arm subsystem. Using the 3D-printed links and wooden base I made before the break, the electrical parts our team borrowed from inventory, and the electrical parts and motors I already owned, I completed about 80% of both the electrical subsystem and the mechanical parts of the robotic arm during spring break. Below are pictures of that progress.

With the arrival of most parts on Monday, I was able to complete and test the electrical subsystem on Monday and completed the mechanics and heat-proofing of the robotic arm on Tuesday. Below are pictures of the completed electrical subsystem and robotic arm.

During the rest of the week, I configured the Jetson AGX Xavier and set up the software environment. I programmed the 5 servo motors to turn correctly for given inputs, and I programmed the stepper motor to turn at the appropriate speed and direction. Below is a video of that. Next, I will work on the computer vision for the robotic arm using the camera we bought. My plan is to complete the inverse kinematics software in two weeks at the earliest and three weeks at the latest (the latter would extend one week into April). I am on a good pace to complete the robotic arm subsystem before integration.
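Getting motors to turn at the right speed and direction usually comes down to two small conversions. The sketch below assumes typical hobby-servo timing (a 50 Hz PWM signal with pulses from 500 to 2500 µs across 180°) and a 200-step-per-revolution stepper on a STEP/DIR driver; these are common defaults, not confirmed specs of our motors, and the function names are hypothetical. The returned values would then be passed to whatever PWM or GPIO interface drives the motors on the Jetson.

```python
def servo_duty_cycle(angle_deg, min_pulse_us=500, max_pulse_us=2500,
                     period_us=20000, max_angle=180):
    """Map a servo angle to a PWM duty cycle in percent.

    Assumption: linear pulse-width mapping; period_us=20000 is a 50 Hz signal.
    """
    if not 0 <= angle_deg <= max_angle:
        raise ValueError("angle out of range")
    pulse_us = min_pulse_us + (max_pulse_us - min_pulse_us) * angle_deg / max_angle
    return 100.0 * pulse_us / period_us


def stepper_step_interval(rpm, steps_per_rev=200, microsteps=1):
    """Seconds between STEP pulses needed to spin the stepper at `rpm`."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    return 60.0 / (rpm * steps_per_rev * microsteps)


def stepper_move(degrees, steps_per_rev=200, microsteps=1):
    """Convert a signed rotation into (direction, step_count) for a STEP/DIR driver.

    direction is +1 or -1; the caller maps it to the DIR pin level.
    """
    steps = round(abs(degrees) * steps_per_rev * microsteps / 360)
    direction = 1 if degrees >= 0 else -1
    return direction, steps
```

Keeping these conversions as pure functions makes them easy to check on a laptop before wiring them to real pins.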