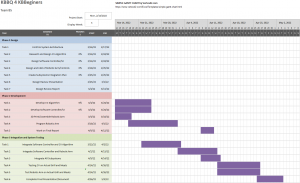

One risk we had not considered as carefully as we should have, until we read the instructors' feedback on other teams' proposals, was insufficient slack and integration time. For one, our integration of the software controller with the computer vision algorithm and our integration of the software controller with the robotic arm happen almost simultaneously, which means integrating three components at once and greatly complicates the process. Developing the computer vision and software interface will likely take less time than the robotic arm, so one proposed idea is to shorten the computer vision and software development by a few days to a week and use that extra time for integration. Furthermore, the robotic arm should be tested on an actual grill before the integration step, in case we have seriously misjudged the arm's heat tolerance. We also made a basic concept drawing of our robotic system. Here is our updated schedule; most of the changes are at the end of March and in April. (I'm sorry for the image quality; for some reason it cannot be improved. In short, we have lengthened some of the integration periods and shortened the software and CV implementation periods.)

Raymond Ngo’s status report for 2/12

This past week I took a closer look at the types of computer vision algorithms needed for thickness estimation. Although we initially decided to use a neural network to identify the type of meat and help determine the cooking time, we concluded this would not be a good idea, because marinades change the color of the meat and various types of meat look similar to one another. We would also have trouble finding a suitable dataset to train on.

Instead, I looked into different methods of measuring thickness, and the best option seemed to be the Canny edge detector in OpenCV. The challenge for the upcoming week will be making sure the thickness measurement (most likely in pixels) is accurate. The second issue will be making sure the meat is measured in a consistent environment each time. This would most likely be done by having the robotic arm lift the meat to the same location every time, so that the only variable is the position at which the arm grabs the meat. However, this approach does not handle very thin cuts of meat. In the coming week, I will discuss the possibility of removing that type of meat from our testing metric entirely, given how different it is from every other type of meat we plan to test. Included is an image of the outlier meat cut.
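To make the pixel-measurement idea more concrete, here is a minimal sketch of how thickness might be read off a Canny edge map. It assumes the camera views the lifted meat from the side, so the top and bottom surfaces show up as horizontal edges, and that because the arm always holds the meat at the same location, a single calibration factor (`mm_per_px`) converts pixels to millimeters. The function names and the reference-object calibration are hypothetical illustrations, not our final design. Taking the median over all columns is one simple way to reduce the effect of stray edge pixels.

```python
from statistics import median
from typing import Optional, Sequence


def column_thickness_px(edges: Sequence[Sequence[int]], col: int) -> Optional[int]:
    """Vertical distance in pixels between the topmost and bottommost edge pixel in one column.

    Assumes `edges` is a binary edge map (e.g. the output of cv2.Canny), where
    nonzero values mark edge pixels and the meat is seen from the side.
    """
    rows = [r for r in range(len(edges)) if edges[r][col] > 0]
    if len(rows) < 2:
        return None  # fewer than two edge pixels: this column does not cross the meat
    return rows[-1] - rows[0]


def calibrate_mm_per_px(known_mm: float, measured_px: int) -> float:
    """Hypothetical calibration: photograph a reference object of known size
    at the same spot where the arm holds the meat, then divide."""
    if measured_px <= 0:
        raise ValueError("measured_px must be positive")
    return known_mm / measured_px


def estimate_thickness_mm(edges: Sequence[Sequence[int]], mm_per_px: float) -> Optional[float]:
    """Median per-column thickness, converted to millimeters."""
    samples = []
    for col in range(len(edges[0])):
        t = column_thickness_px(edges, col)
        if t is not None:
            samples.append(t)
    if not samples:
        return None  # no measurable columns, e.g. edge detection failed
    return median(samples) * mm_per_px


if __name__ == "__main__":
    # Tiny synthetic edge map: top surface at row 1, bottom surface at row 4.
    edge_map = [
        [0, 0, 0, 0],
        [0, 255, 255, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 0],
        [0, 255, 255, 0],
    ]
    scale = calibrate_mm_per_px(known_mm=50.0, measured_px=25)  # 2.0 mm per pixel
    print(estimate_thickness_mm(edge_map, scale))
```

In practice the edge map would come from something like `edges = cv2.Canny(gray, 50, 150)` on a grayscale frame; the two thresholds here are placeholders that would need tuning against real images of the meat.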