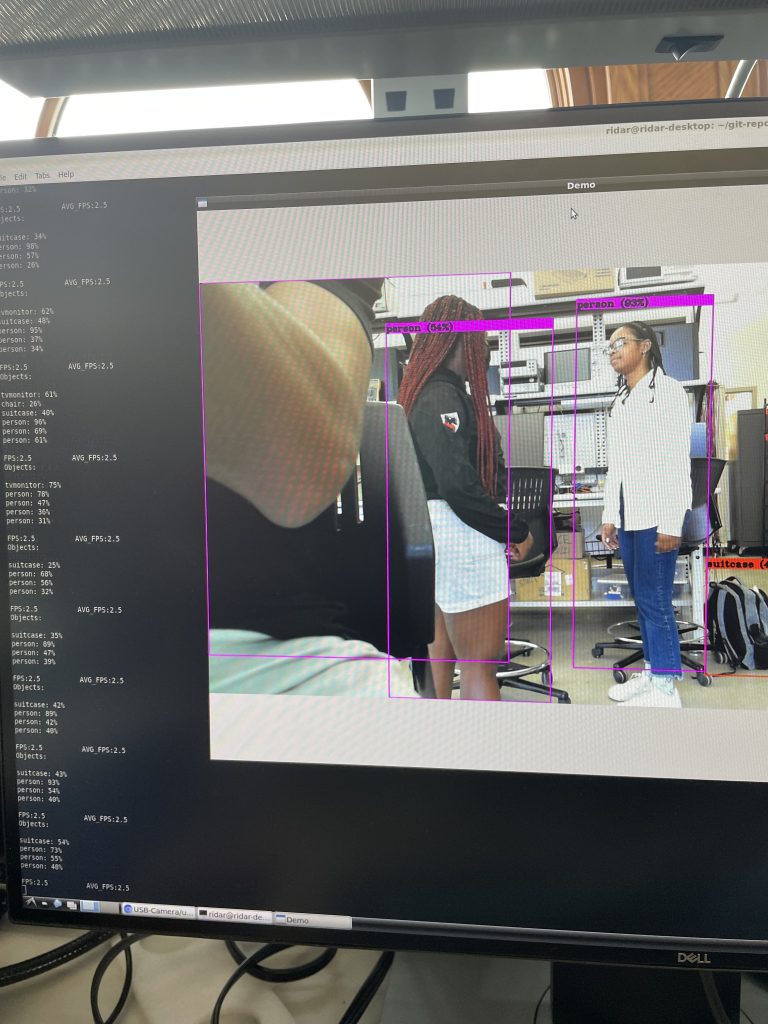

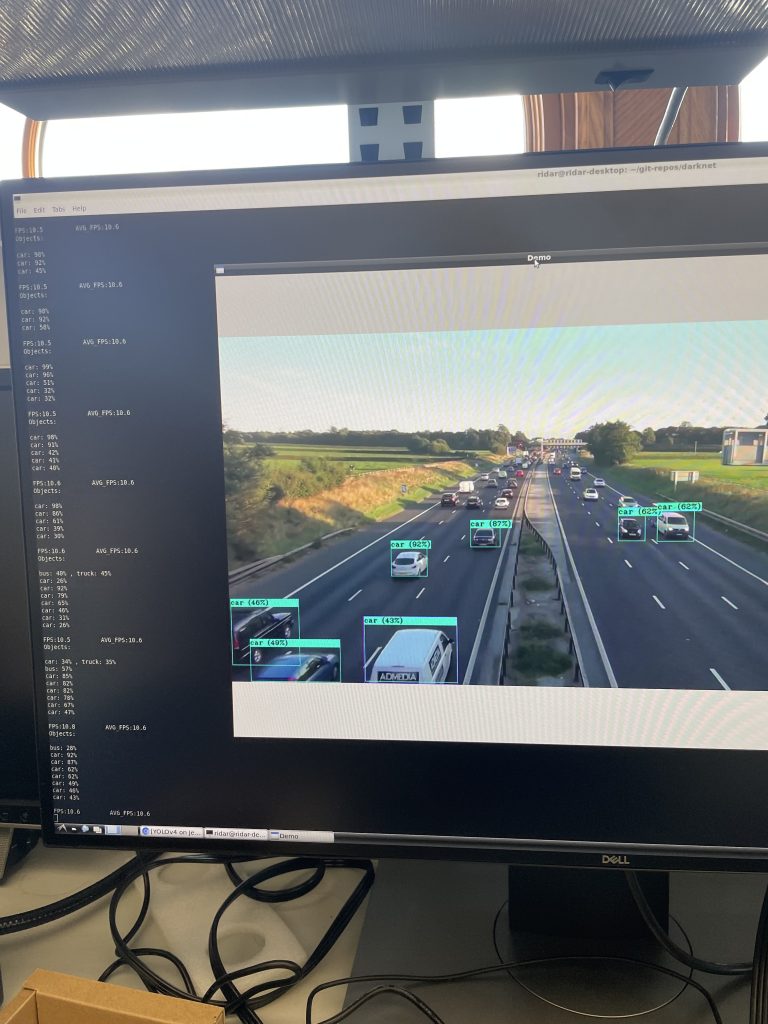

A lot of progress was made this past week on integrating all the subsystems of the project (the camera with YOLO detection, and the Lidar). We originally planned to use two Jetson Nanos that communicate with each other: one running the YOLO algorithm and the other running ROS with the Lidar. We expected YOLO to need too much processing power for a single Jetson Nano to run both subsystems. After some testing, however, we found that both subsystems can run on one Jetson Nano if we don't display the camera feed and we use the tiny configuration of YOLOv4 (YOLOv4-tiny). YOLOv4-tiny is less accurate than standard YOLOv4 because its neural network has fewer layers, but it is much faster.

ROS is very lightweight and the Lidar itself requires very little processing, so we were able to run both on a single Jetson Nano without maxing out its processing power. This simplifies things a lot.

From the YOLO output we were able to get the coordinates of every detection, keeping only cars and persons, and write them to an external file. A ROS publisher node reads the coordinates from this file and publishes them to a topic. A ROS subscriber node, which also handles the Lidar processing, reads the coordinates from that topic. An algorithm we wrote then combines the camera and Lidar data and displays a GUI showing where each car or person is, based on both the Lidar and the camera. We recorded a video of this working, which will be shown in the final presentation.
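To make the data flow above a bit more concrete, here is a minimal sketch of the hand-off and fusion steps. It is not our actual code: the CSV file format, all function names, the 60° horizontal field of view and the pinhole-camera bearing conversion are illustrative assumptions. It also assumes the camera and Lidar face the same direction, with Lidar angle 0 pointing straight ahead. The scan arguments mirror the `ranges`, `angle_min` and `angle_increment` fields of a ROS `sensor_msgs/LaserScan` message. The actual ROS publisher/subscriber wiring is left out so the sketch runs without ROS installed.

```python
import csv
import math
import os
import tempfile
from typing import List, Optional, Sequence, Tuple

# Hypothetical detection format: (label, x_min, y_min, x_max, y_max), pixels.
Detection = Tuple[str, float, float, float, float]


def filter_detections(dets: Sequence[Detection],
                      keep: Tuple[str, ...] = ("car", "person")) -> List[Detection]:
    """Keep only the classes we care about (cars and persons)."""
    return [d for d in dets if d[0] in keep]


def write_detections(path: str, dets: Sequence[Detection]) -> None:
    """Overwrite the hand-off file with the latest frame's detections (CSV)."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(dets)


def read_detections(path: str) -> List[Detection]:
    """Parse the hand-off file, as a publisher node might before publishing."""
    with open(path, newline="") as f:
        return [(row[0], *map(float, row[1:5])) for row in csv.reader(f) if row]


def pixel_to_bearing(x: float, image_width: int, hfov_deg: float) -> float:
    """Pixel column -> bearing (radians) under a pinhole camera model.

    Positive = left of the optical axis, matching ROS's counter-clockwise-
    positive angle convention (REP 103).
    """
    focal_px = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return -math.atan((x - image_width / 2) / focal_px)


def range_at_bearing(ranges: Sequence[float], angle_min: float,
                     angle_increment: float, bearing: float,
                     window: int = 2) -> Optional[float]:
    """Nearest valid Lidar return within +/- `window` beams of `bearing`."""
    centre = round((bearing - angle_min) / angle_increment)
    lo = max(0, centre - window)
    hi = max(lo, min(len(ranges), centre + window + 1))  # empty if outside scan
    valid = [r for r in ranges[lo:hi] if math.isfinite(r) and r > 0.0]
    return min(valid) if valid else None


def fuse(dets: Sequence[Detection], image_width: int, hfov_deg: float,
         ranges: Sequence[float], angle_min: float, angle_increment: float
         ) -> List[Tuple[str, float, Optional[float]]]:
    """Return (label, bearing in degrees, distance or None) per detection."""
    fused = []
    for label, x0, _y0, x1, _y1 in dets:
        bearing = pixel_to_bearing((x0 + x1) / 2, image_width, hfov_deg)
        dist = range_at_bearing(ranges, angle_min, angle_increment, bearing)
        fused.append((label, math.degrees(bearing), dist))
    return fused


if __name__ == "__main__":
    raw = [("car", 100, 50, 220, 200), ("dog", 300, 80, 340, 120),
           ("person", 500, 60, 560, 300)]
    path = os.path.join(tempfile.gettempdir(), "detections.csv")
    write_detections(path, filter_detections(raw))  # camera/YOLO side
    dets = read_detections(path)                      # publisher side
    # Fake 360-beam scan: 1 degree per beam, starting at -180 degrees.
    scan = [5.0] * 360
    scan[196] = 2.5  # something close, roughly 16 degrees to the left
    for label, bearing_deg, dist in fuse(dets, 640, 60.0, scan,
                                         -math.pi, math.radians(1.0)):
        print(f"{label}: {bearing_deg:+.1f} deg, {dist} m")
```

Taking the minimum over a few neighbouring beams, rather than a single beam, is one simple way to tolerate small misalignment between the camera and the Lidar. A real setup would need the field of view and mounting offset measured for the actual hardware.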

With this progress, the schedule is almost back on track. The only things left are the physical design to house the components, which is currently in progress, and testing the system on cars. The plan for next week is to keep testing the system, finish the physical design, and prepare for the final presentation.