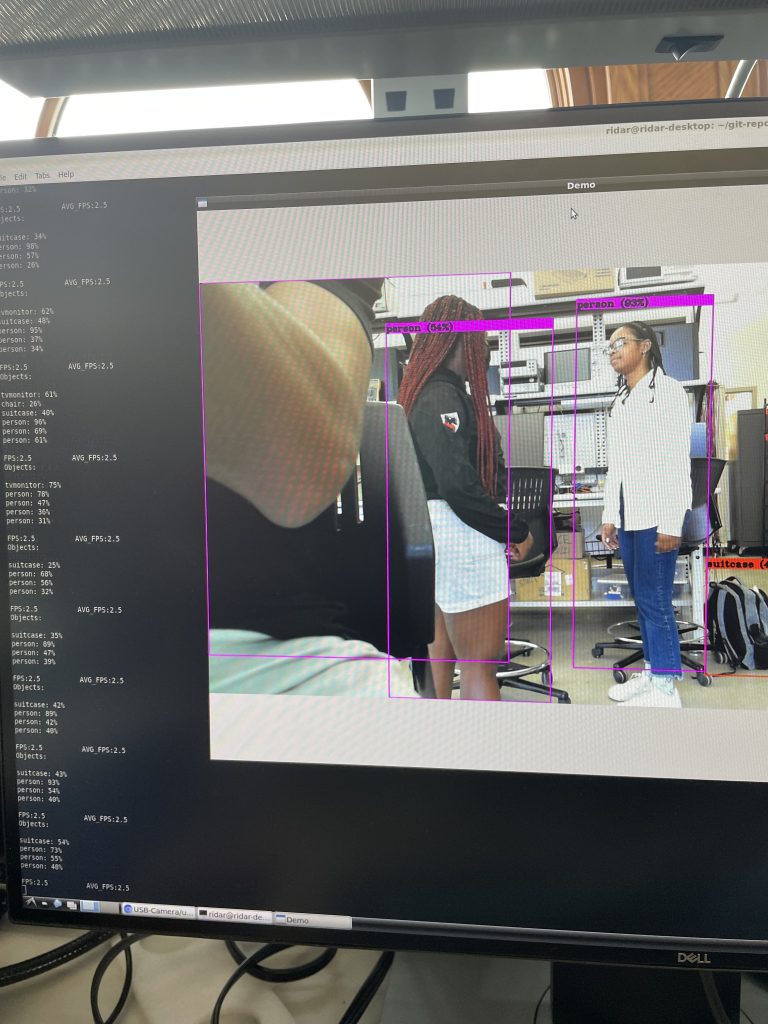

Over the past week, I made significant progress on getting the YOLO real-time detection algorithm working on the Jetson Nano. After re-flashing the Jetson Nano's SD card and switching from the TensorRT version of YOLO to the Darknet version, I finally got the algorithm running. The CSI camera was still very unresponsive, and I was still struggling to get the OpenCV library to work with the GStreamer pipeline. With a USB camera, however, the detection algorithm ran at an average of 2.5 fps at a resolution of 1920×1080. Below is a picture of the YOLOv4-tiny version running on the Jetson Nano:

As you can see from the picture, the algorithm detected the people standing behind me with confidence scores above 70%.

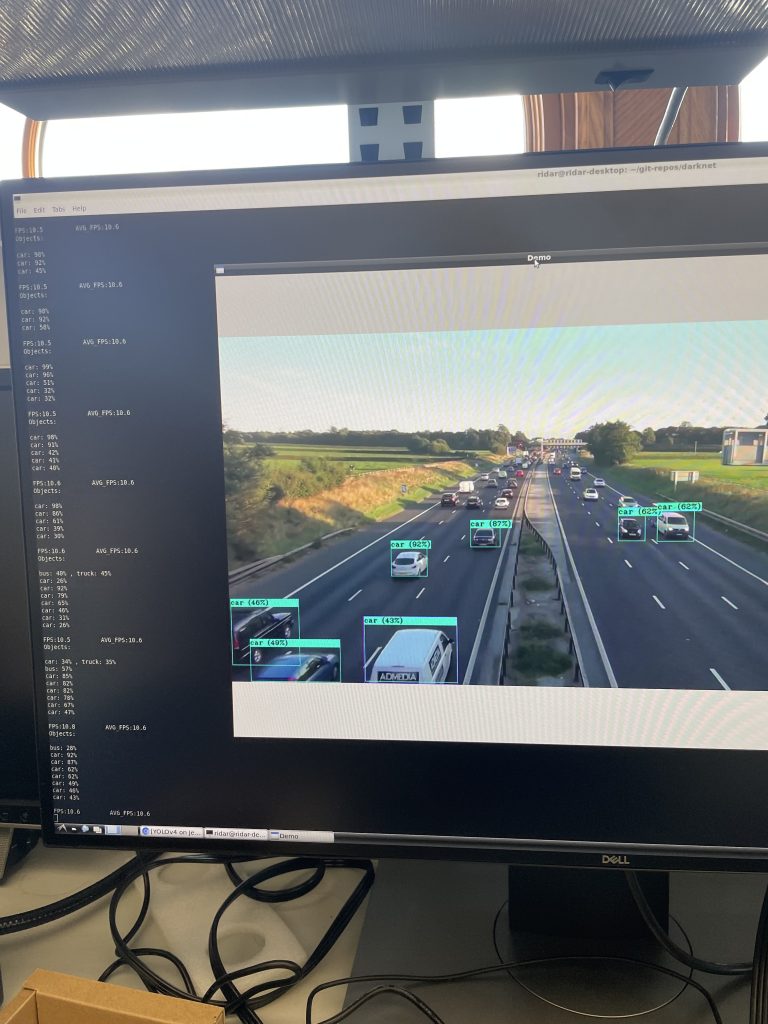
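The percentage YOLO reports next to each detection is read here as a per-detection confidence score. As a rough sketch of how detections could be filtered on that score, the snippet below keeps only those above a 70% threshold. The `(label, confidence, box)` tuple layout, the function name `filter_by_confidence`, and the sample values are all assumptions made up for illustration; they are not the actual output format of the Darknet build used here.

```python
from typing import List, Tuple

# Hypothetical detection format: (label, confidence in [0, 1], (x, y, w, h)).
# The real Darknet output may be laid out differently.
Detection = Tuple[str, float, Tuple[float, float, float, float]]


def filter_by_confidence(
    detections: List[Detection], threshold: float = 0.70
) -> List[Detection]:
    """Keep only detections whose confidence is above the threshold."""
    return [d for d in detections if d[1] > threshold]


# Made-up sample values, not measurements from the Jetson Nano.
sample: List[Detection] = [
    ("person", 0.91, (410.0, 220.0, 80.0, 200.0)),
    ("person", 0.74, (620.0, 240.0, 70.0, 190.0)),
    ("chair", 0.35, (100.0, 400.0, 120.0, 150.0)),
]

kept = filter_by_confidence(sample)
print([label for label, _, _ in kept])
```

Keeping the threshold as a parameter would make it easy to loosen or tighten the filter once the detections are combined with other sensor data.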

To test the algorithm on cars, I downloaded a 30-minute YouTube video of highway traffic and ran the algorithm on it. The output is shown in the picture below:

The plan for next week is to extract the algorithm's predictions, along with their positions, and pass them to the other Jetson Nano so that they can be analyzed together with the lidar data to determine the severity of the warning given to the user. I am still behind schedule because of how long it took to get the YOLO algorithm working, but now that it works, a lot of progress can be made this week!
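One possible way to hand the predictions to the other Jetson Nano is sketched below. It assumes the detections are sent as newline-terminated JSON over a TCP socket, which is only one option and has not been decided yet. The field names (`label`, `confidence`, `box`), the helper names, and the host and port passed to `send_detections` are all hypothetical.

```python
import json
import socket
from typing import Dict, List


def encode_detections(detections: List[Dict]) -> bytes:
    """Serialize detections into one newline-terminated JSON message."""
    return (json.dumps({"detections": detections}) + "\n").encode("utf-8")


def decode_detections(message: bytes) -> List[Dict]:
    """Inverse of encode_detections, for use on the receiving Jetson Nano."""
    return json.loads(message.decode("utf-8"))["detections"]


def send_detections(
    detections: List[Dict], host: str, port: int, timeout: float = 1.0
) -> None:
    """Open a TCP connection to the receiver and send one message.

    host and port are placeholders for wherever the second Jetson Nano
    would be listening; nothing here is specific to the actual setup.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(encode_detections(detections))


# Made-up example detection; box is (x, y, width, height) in pixels.
example = [{"label": "car", "confidence": 0.82, "box": [512, 300, 140, 90]}]
message = encode_detections(example)
print(decode_detections(message) == example)
```

A text format like JSON is easy to inspect while debugging. A more compact binary encoding could replace it later if the message size or parsing time turns out to matter.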