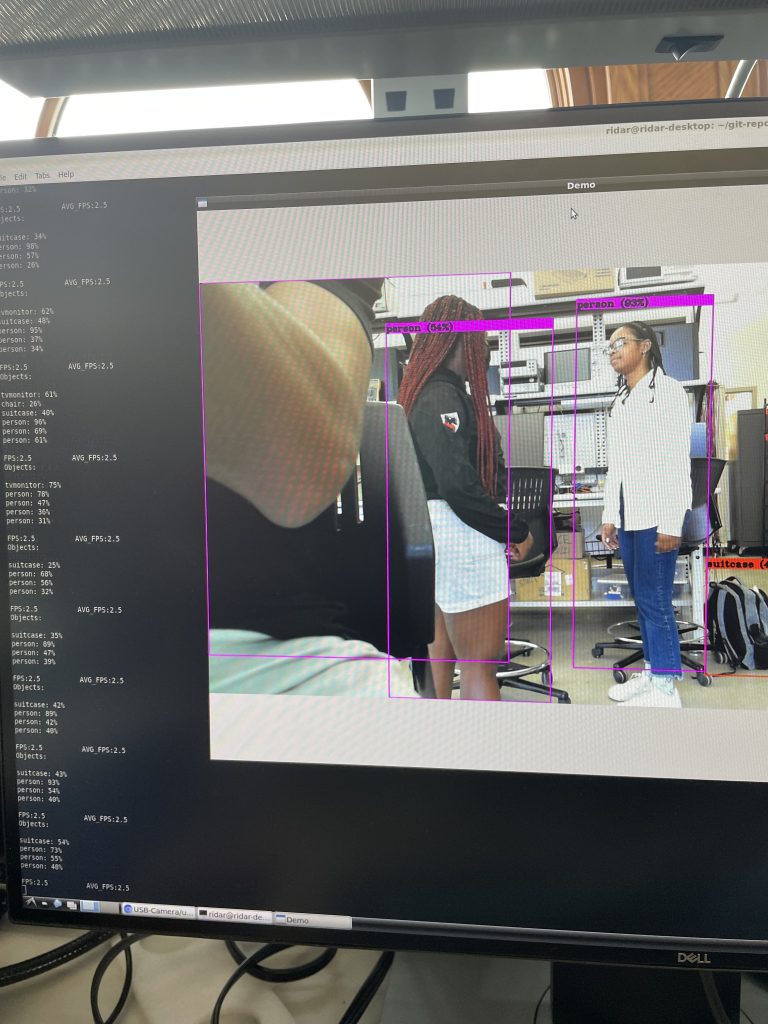

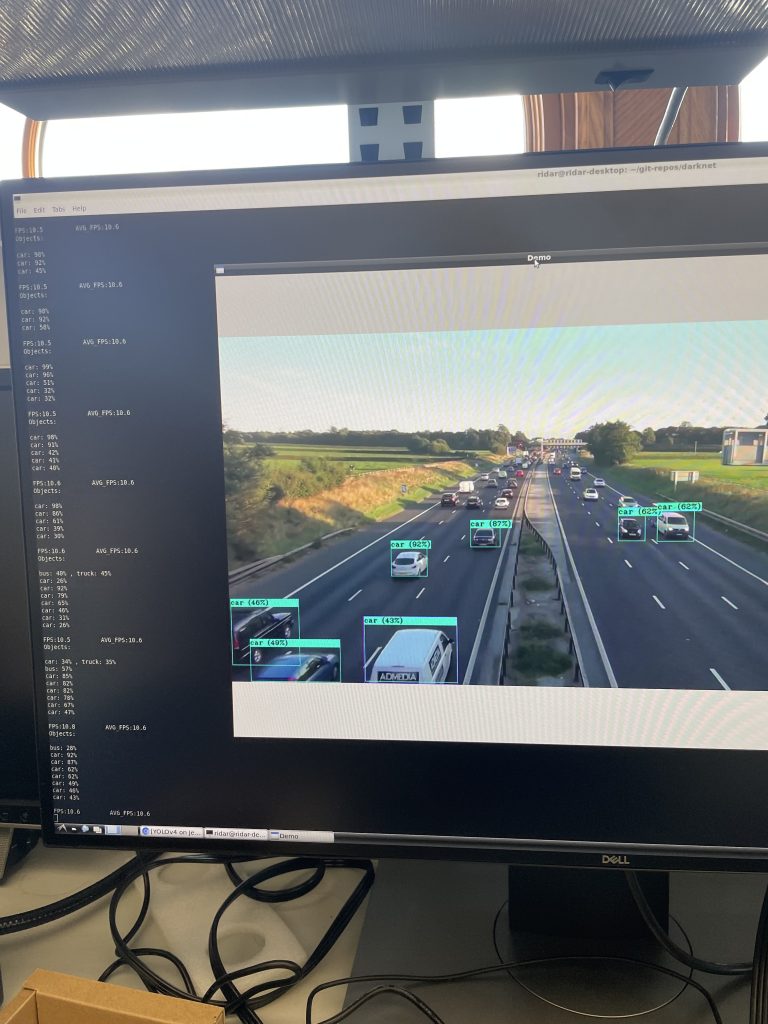

This past week our group made clear, significant progress and got close to our MVP. I worked on quite a few things. First, Chad and I scoured the YOLO code and managed to grab and print the coordinates of the bounding boxes drawn in each frame. From there, we integrated the lidar and YOLO on the single Jetson, since we found that doing so did not add much latency. With both components together, we needed to send the coordinates over to the lidar script. We tried to make a ROS publisher node inside the YOLO script, but the dependency paths were messy and convoluted. So instead, I wrote a function inside YOLO that constantly writes the bounding-box coordinates of the most recent frame to a file. Then I wrote a ROS publisher node that reads the coordinates from that file and publishes them to the topic that Ethan’s node subscribes to. From there, everything came together!
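For anyone curious, the file handoff described above could look roughly like the sketch below. This is not our actual code: the JSON format, the function names, the node name, and the `/yolo/bounding_boxes` topic are all assumptions made for illustration. The writer replaces the file atomically so the reader never sees a half-written frame, and the ROS part is kept in its own function so the rest runs without ROS installed.

```python
import json
import os
import tempfile


def write_boxes(path, boxes):
    """Atomically overwrite `path` with the boxes from the latest frame.

    `boxes` is assumed to be a list of [x1, y1, x2, y2] lists.
    """
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temp file in the same directory, then swap it into place,
    # so a reader never opens a partially written file.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        json.dump(boxes, tmp)
    os.replace(tmp_path, path)


def read_boxes(path):
    """Return the most recently written boxes, or [] if nothing is there yet."""
    try:
        with open(path) as f:
            return json.load(f)
    except (FileNotFoundError, json.JSONDecodeError):
        return []


def publish_boxes(path, topic="/yolo/bounding_boxes", hz=10):
    """Publish the file contents as a JSON string (hypothetical topic name)."""
    # Imported here so the helpers above work on machines without ROS.
    import rospy
    from std_msgs.msg import String

    rospy.init_node("bbox_file_publisher")
    pub = rospy.Publisher(topic, String, queue_size=1)
    rate = rospy.Rate(hz)
    while not rospy.is_shutdown():
        pub.publish(String(data=json.dumps(read_boxes(path))))
        rate.sleep()
```

In this sketch the YOLO loop would call `write_boxes` once per frame, and a separate process would run `publish_boxes` on the same path. A file is a crude channel compared to publishing directly, but it keeps the YOLO script free of ROS dependencies.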

With this done, we are basically on track with our schedule. All that is left is testing, fleshing out the range of our program, and finishing the physical components.

Next week, I hope to print out the rest of the acrylic for the design, test the system more with cars and people, and adjust the range of the system.

Here is a link to a video showing a basic demo of our system: https://drive.google.com/file/d/1uesYUynypOKnusUUs-6F8mhb_bZTSKB7/view?usp=sharing