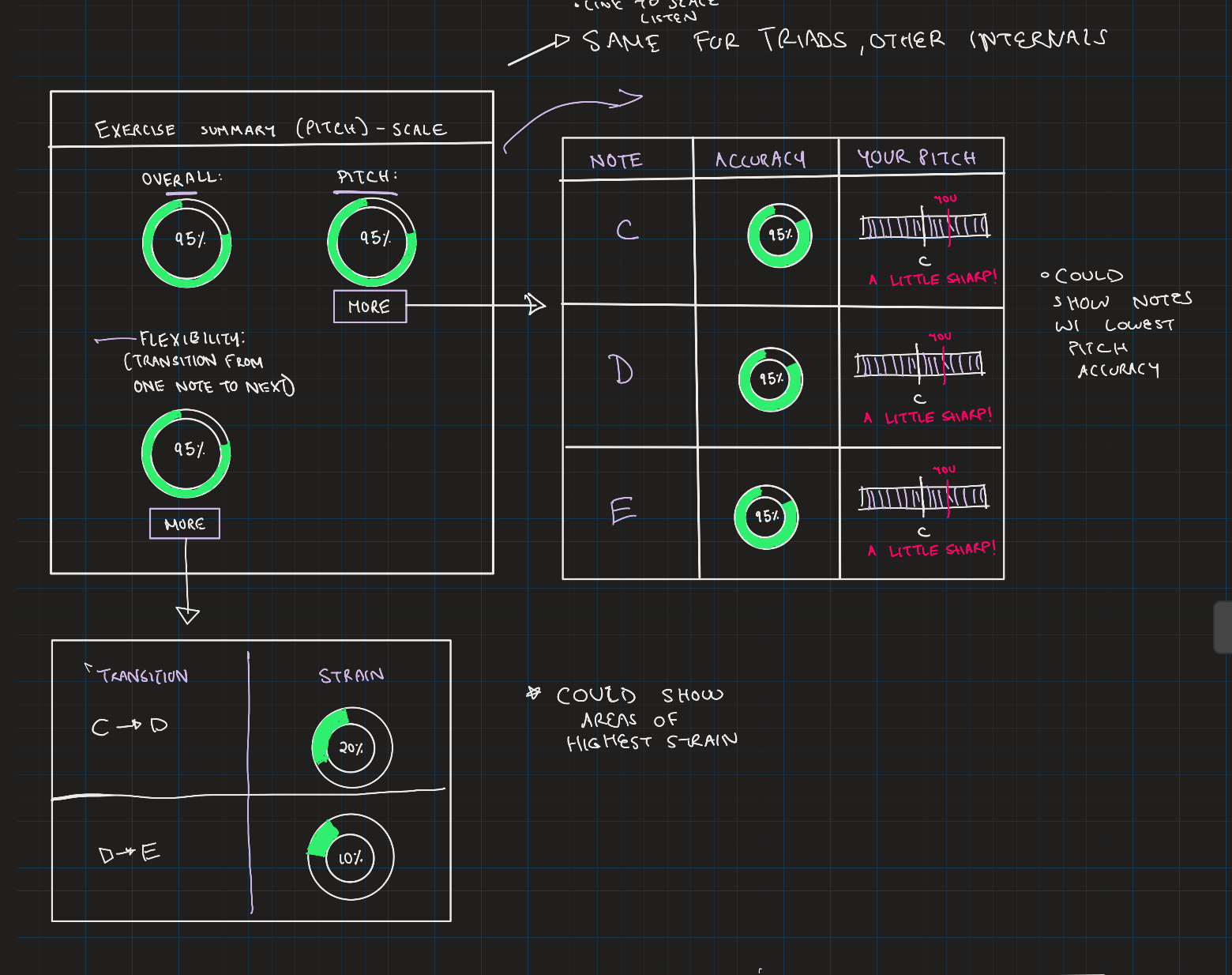

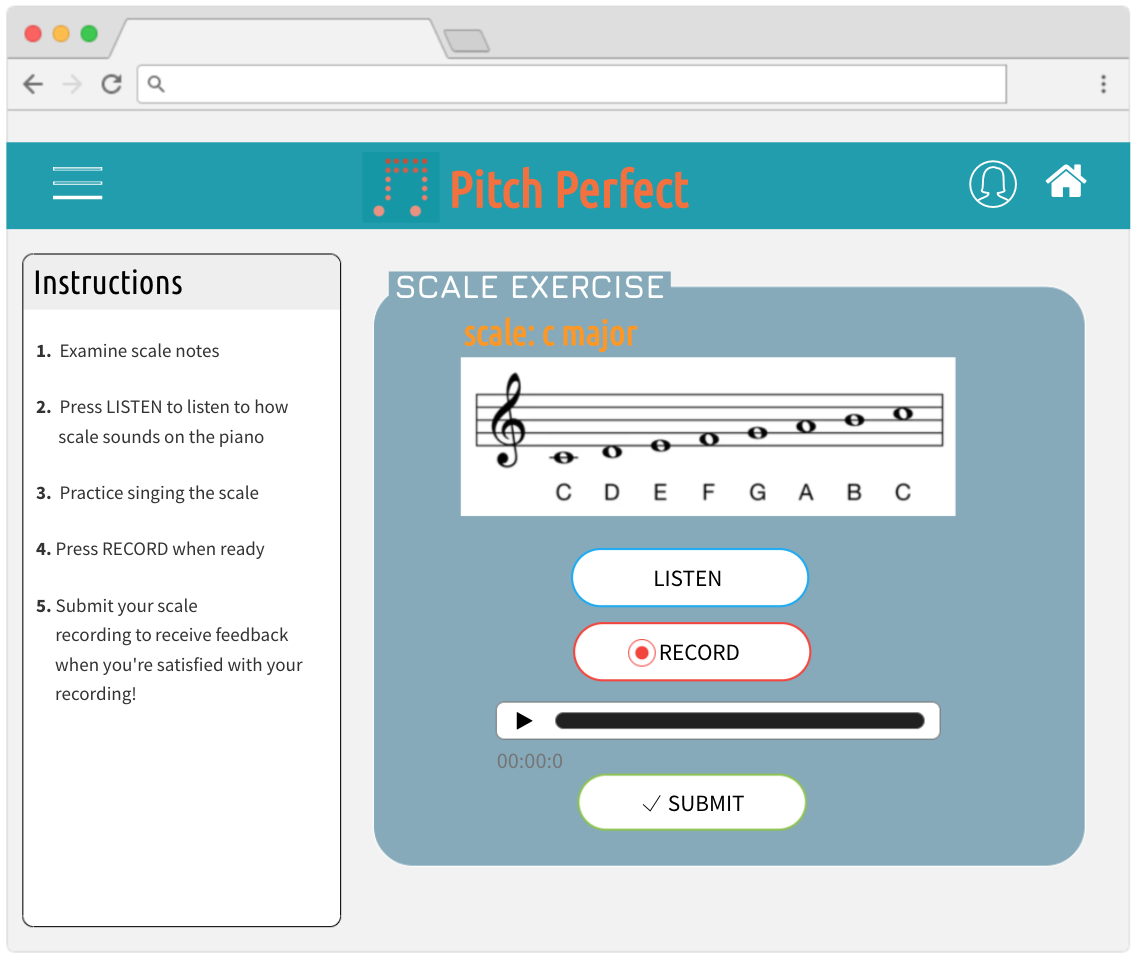

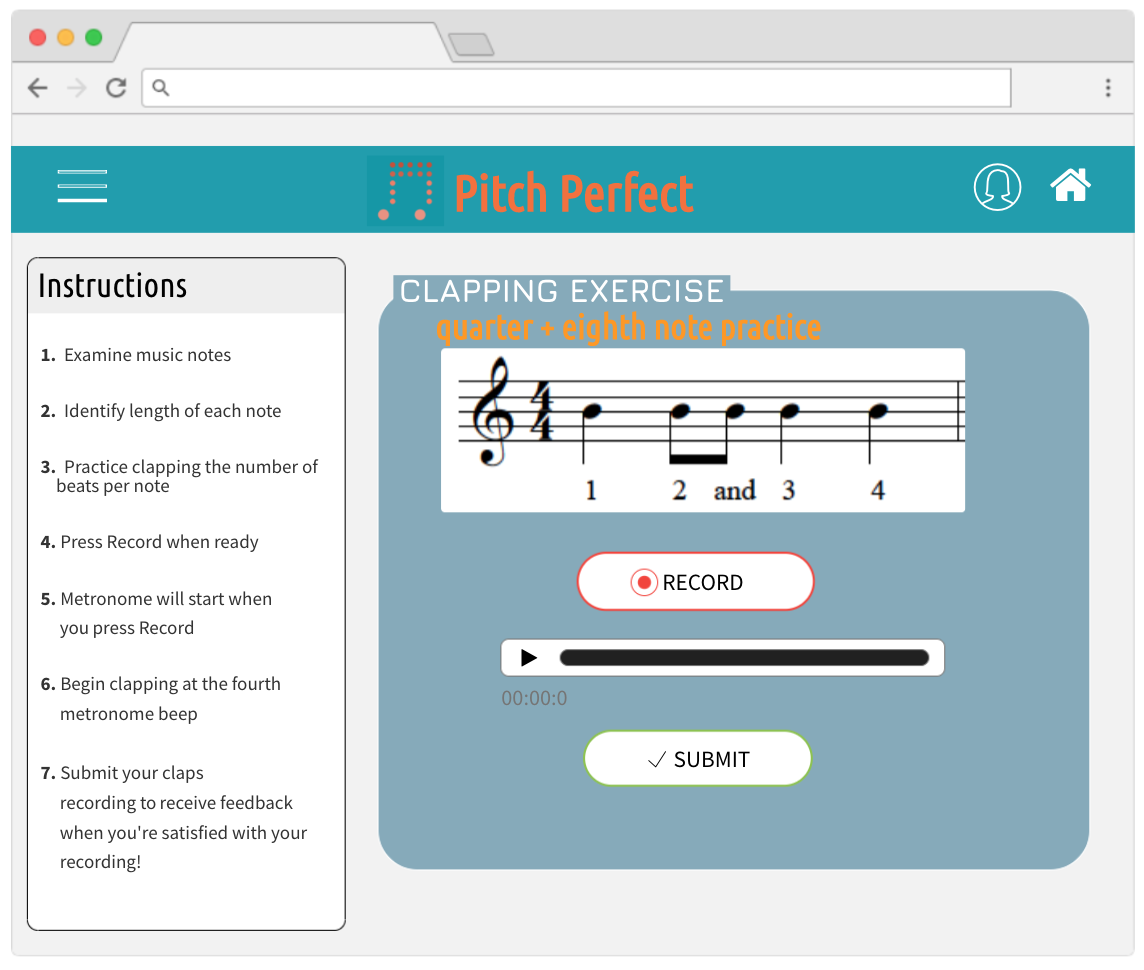

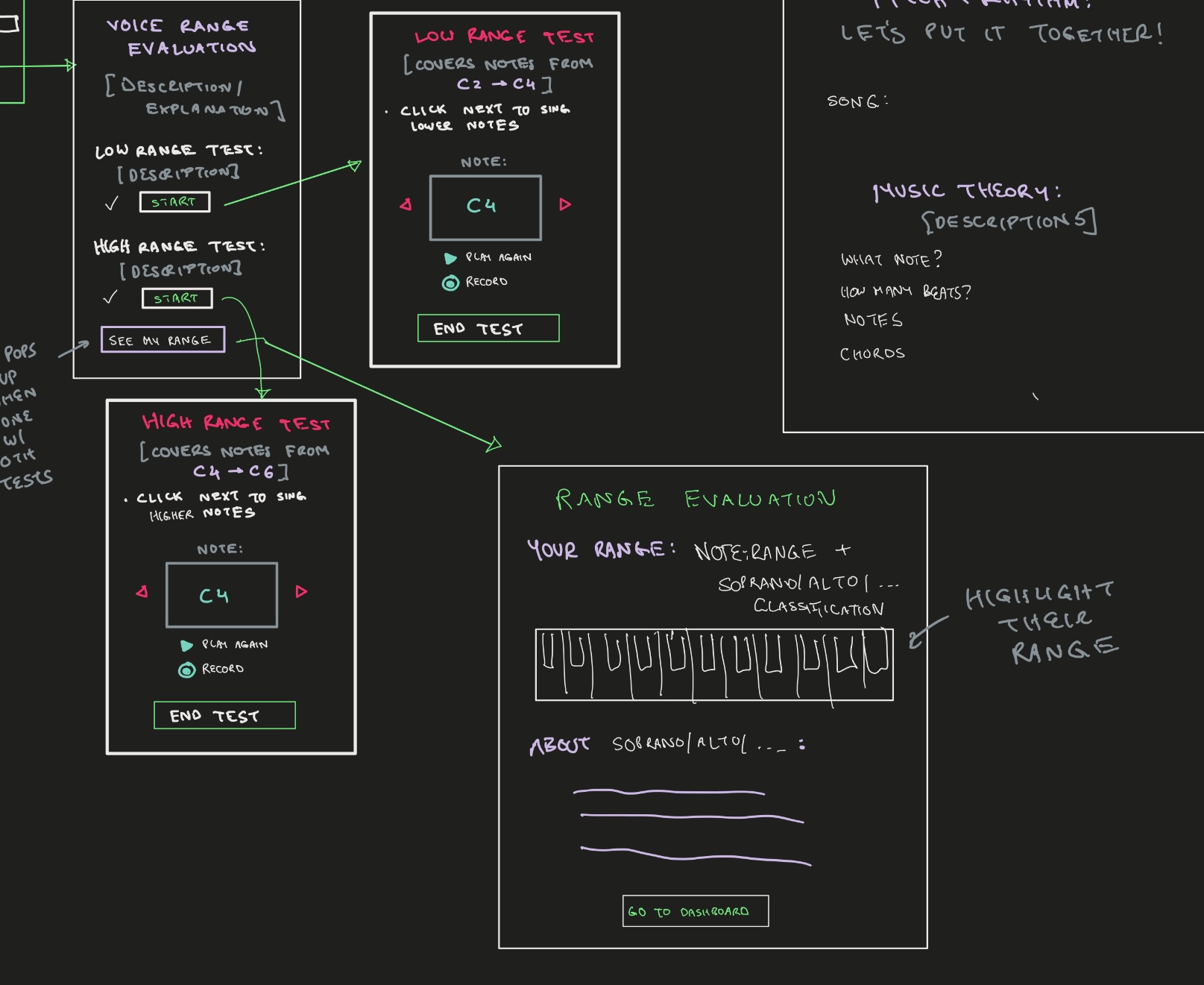

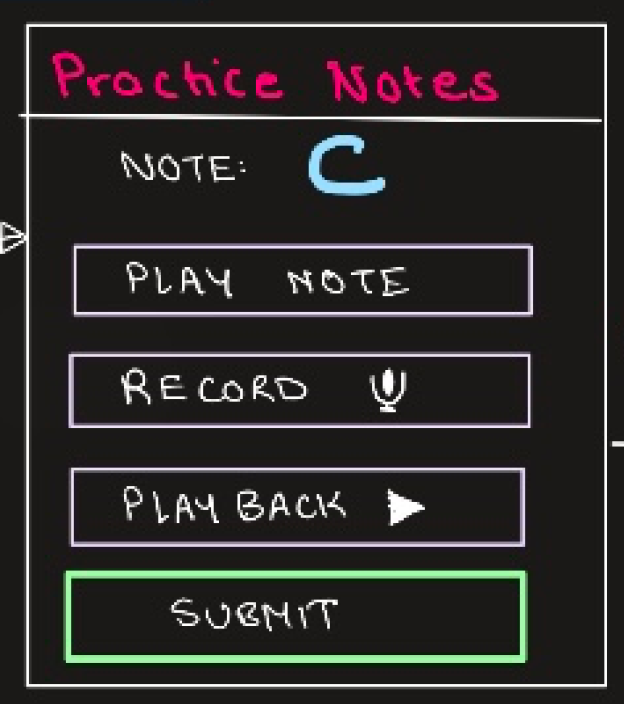

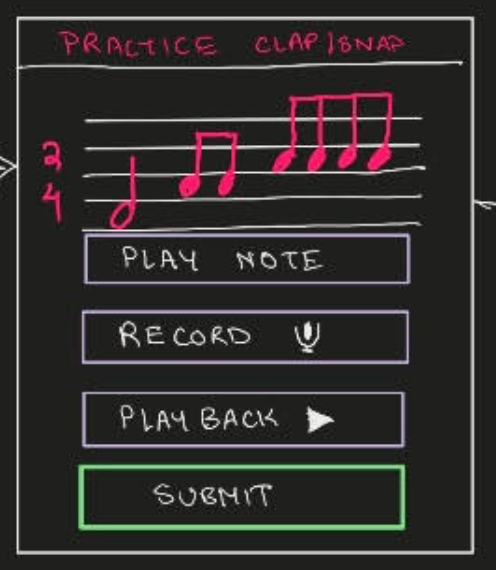

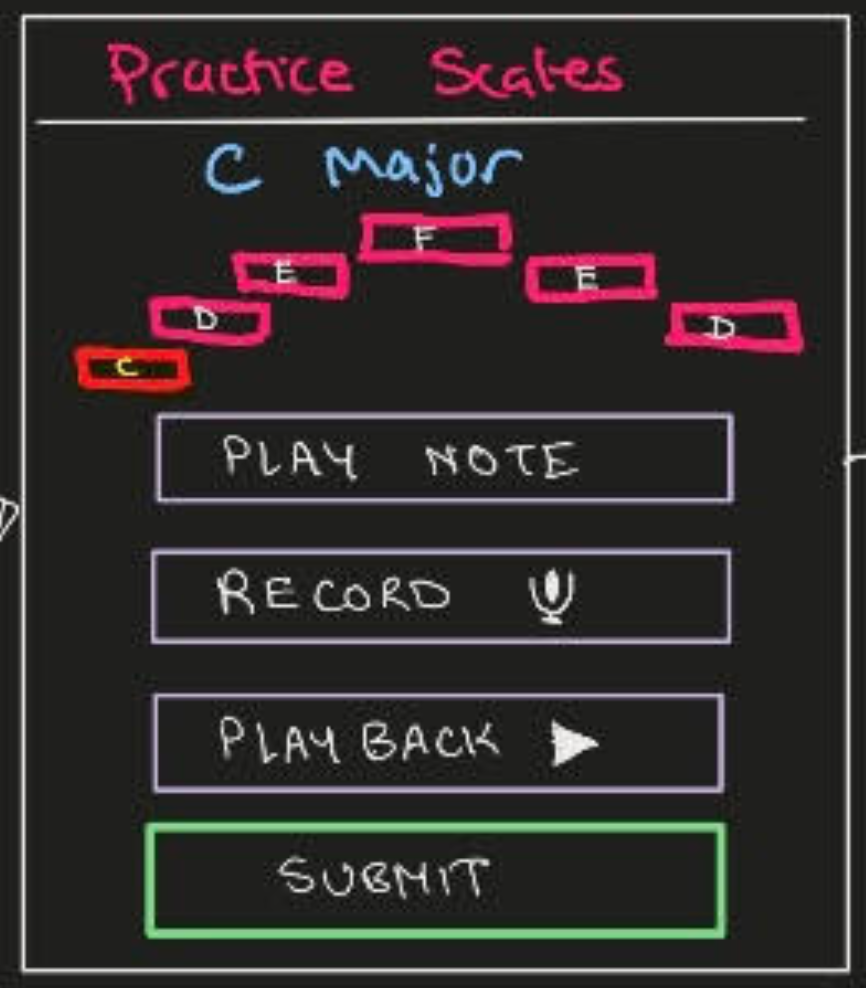

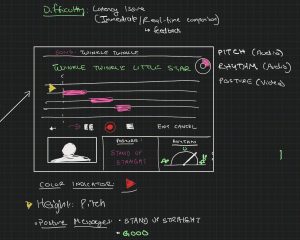

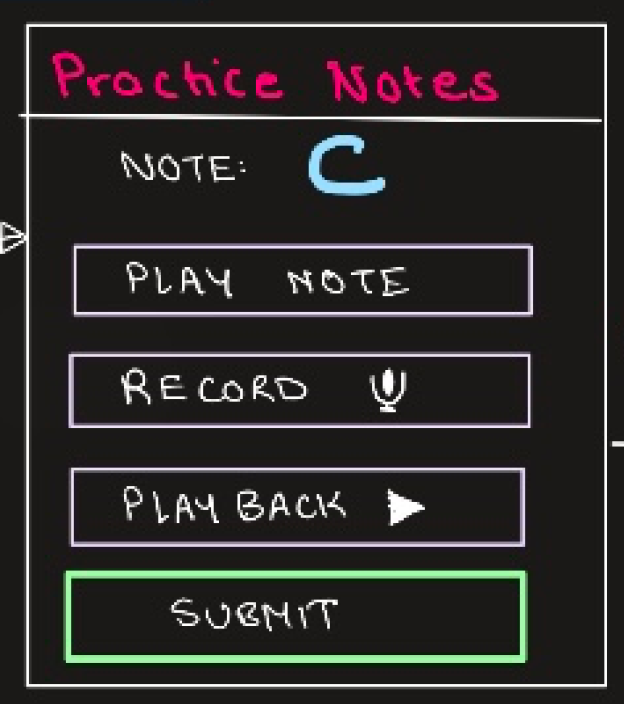

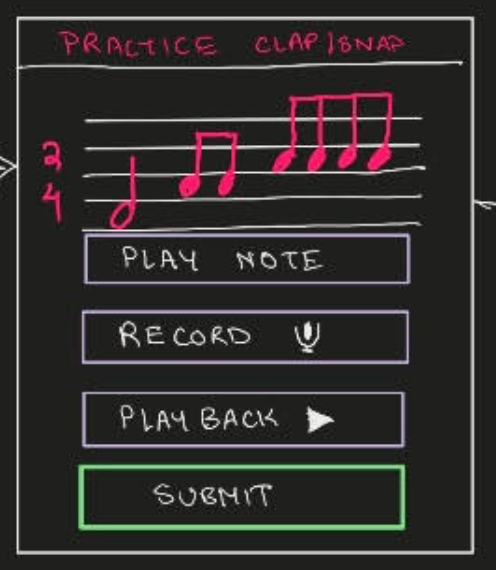

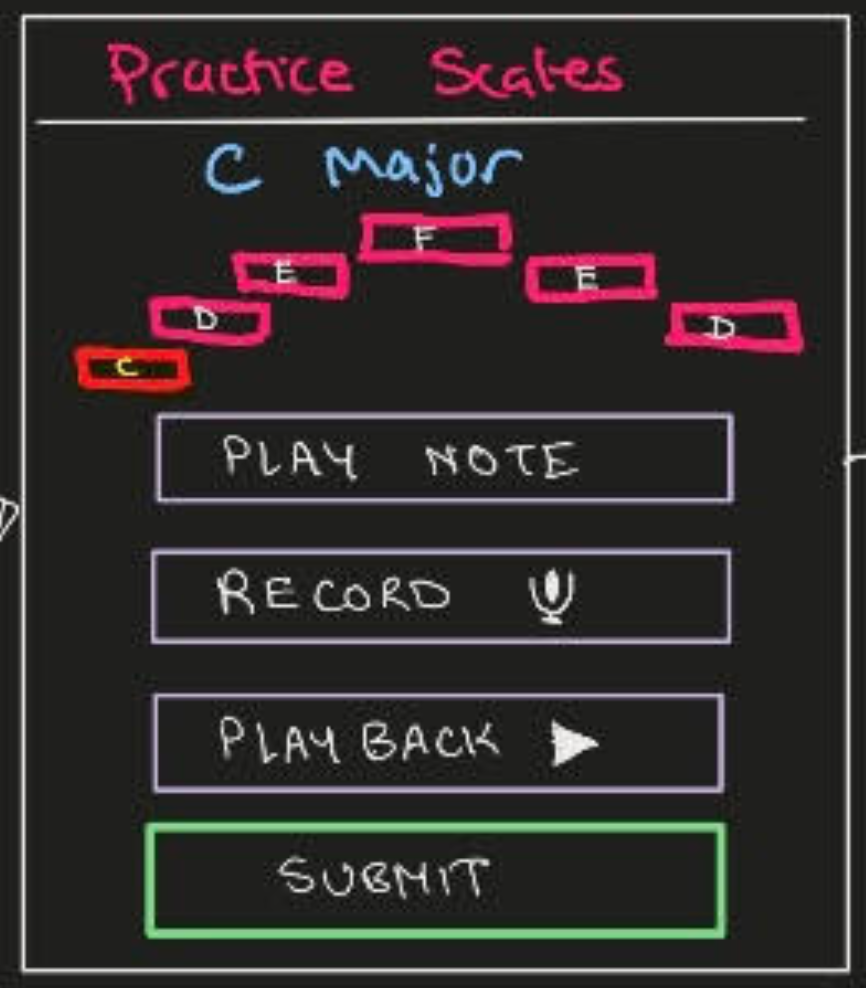

This week, I presented our proposal and received feedback from Professor Sullivan on simplifying our product further. We acknowledged that providing real-time feedback on audio and video input would introduce significant latency issues, which pushed us to simplify our project. Since our team shifted the product's use case from practicing full songs to doing short singing exercises, with feedback given after recording rather than in real time, I brainstormed a few ideas for singing exercises and roughly how the user interaction would look for each. I attached the wireframes for those below.

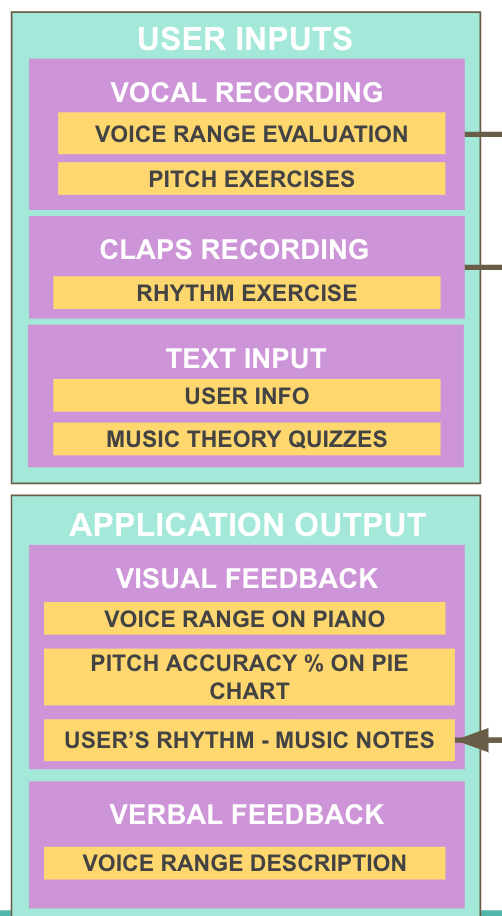

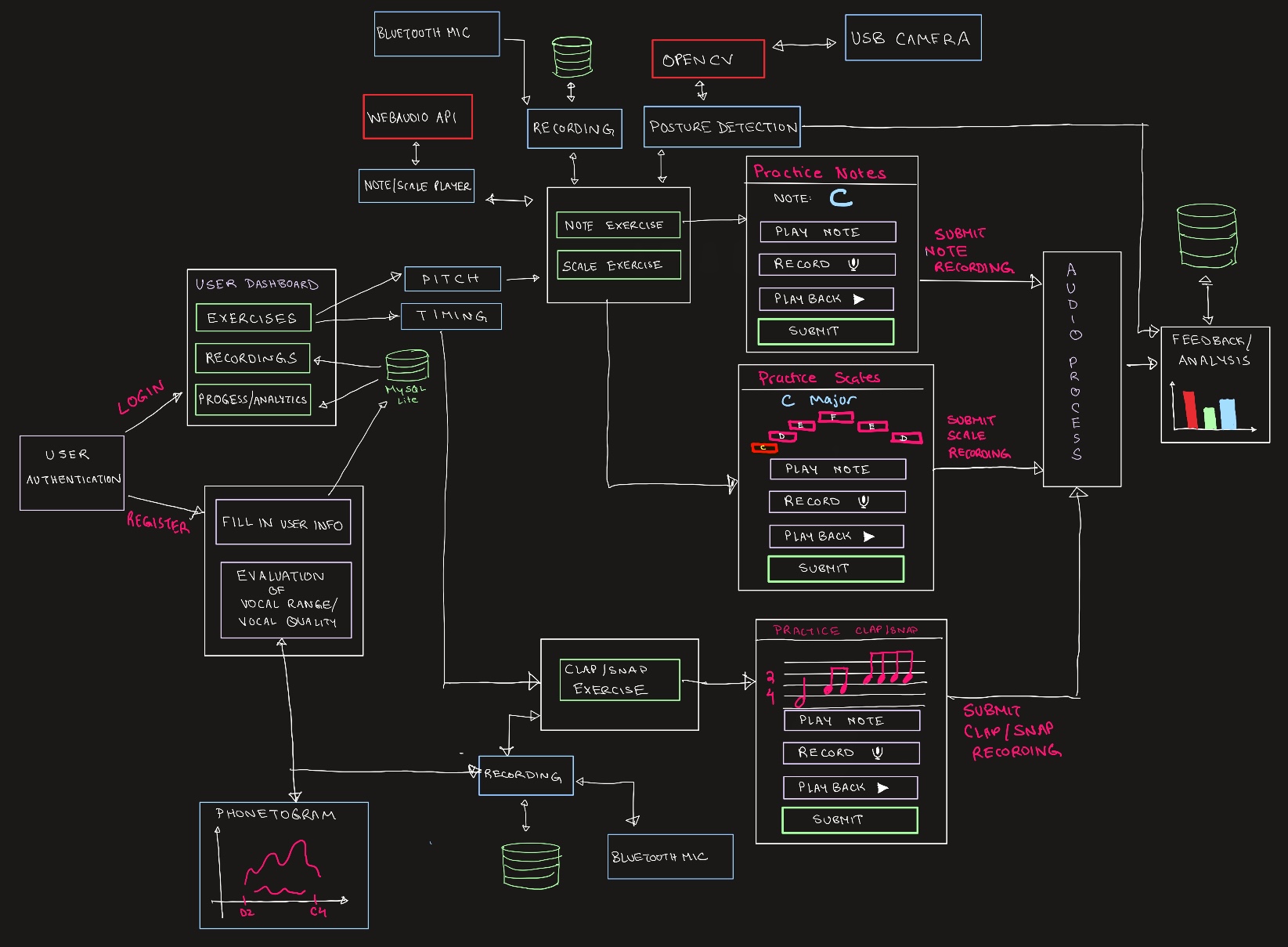

I also designed a UX flow/system integration diagram for our team's new design, which reflects the product's shift to non-real-time feedback and a focus on singing exercises. This diagram can be found in the team status report.

I also did some research on microphones with good noise cancellation and webcams with high resolution. We also decided today that we want the user to be able to wear headphones to listen to their audio. Here are some of the best options we have:

Camera: https://www.logitech.com/en-us/products/webcams/c270-hd-webcam.960-000694.html

Microphones/Headphones: https://www.jabra.com/business/office-headsets/jabra-evolve/jabra-evolve-75##7599-832-109

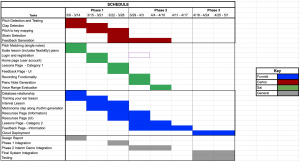

My progress is a little behind what I planned, but this is due to the pivot away from the design we had originally planned before our proposal presentation. To catch up to the project schedule, I will put research and work into the project for at least an hour almost every day this week, keep documentation/notes as I go, and plan according to the design presentation specifications.

For next week, I want to think of other singing exercises we could implement and how the UI would look for those. I want to get a comprehensive understanding of how the Web Audio API works and plan out exactly how I will use it for the UI. I also want to figure out the best way (best visualizations/infographics) to show users the feedback on their singing and the progress reports for their overall performance history. Finally, I want to plan out our Django framework organization (views/models/controller) so that I can get started on coding it the following week.
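As a rough starting point for that planning, here is a minimal sketch, in plain Python rather than Django, of the kind of data a progress report might draw on. Everything in it is an assumption for illustration: the names `ExerciseAttempt` and `progress_summary`, the fields, and the 0 to 100 score scale are placeholders, not a settled design for our models.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class ExerciseAttempt:
    """One recorded attempt at a singing exercise (hypothetical fields)."""
    exercise: str      # placeholder exercise name, e.g. "scales"
    recorded_on: date  # day the recording was made
    score: float       # assumed 0-100 feedback score computed after recording


def progress_summary(attempts):
    """Group attempts by exercise; report count, average score, and latest score."""
    by_exercise = {}
    # Sort by date so the last score in each list is the most recent one.
    for attempt in sorted(attempts, key=lambda a: a.recorded_on):
        by_exercise.setdefault(attempt.exercise, []).append(attempt.score)
    return {
        name: {
            "attempts": len(scores),
            "average": mean(scores),
            "latest": scores[-1],
        }
        for name, scores in by_exercise.items()
    }
```

If we go this way, a summary like this could feed whatever visualization we pick for the performance history, and the dataclass fields would likely map onto a Django model later.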