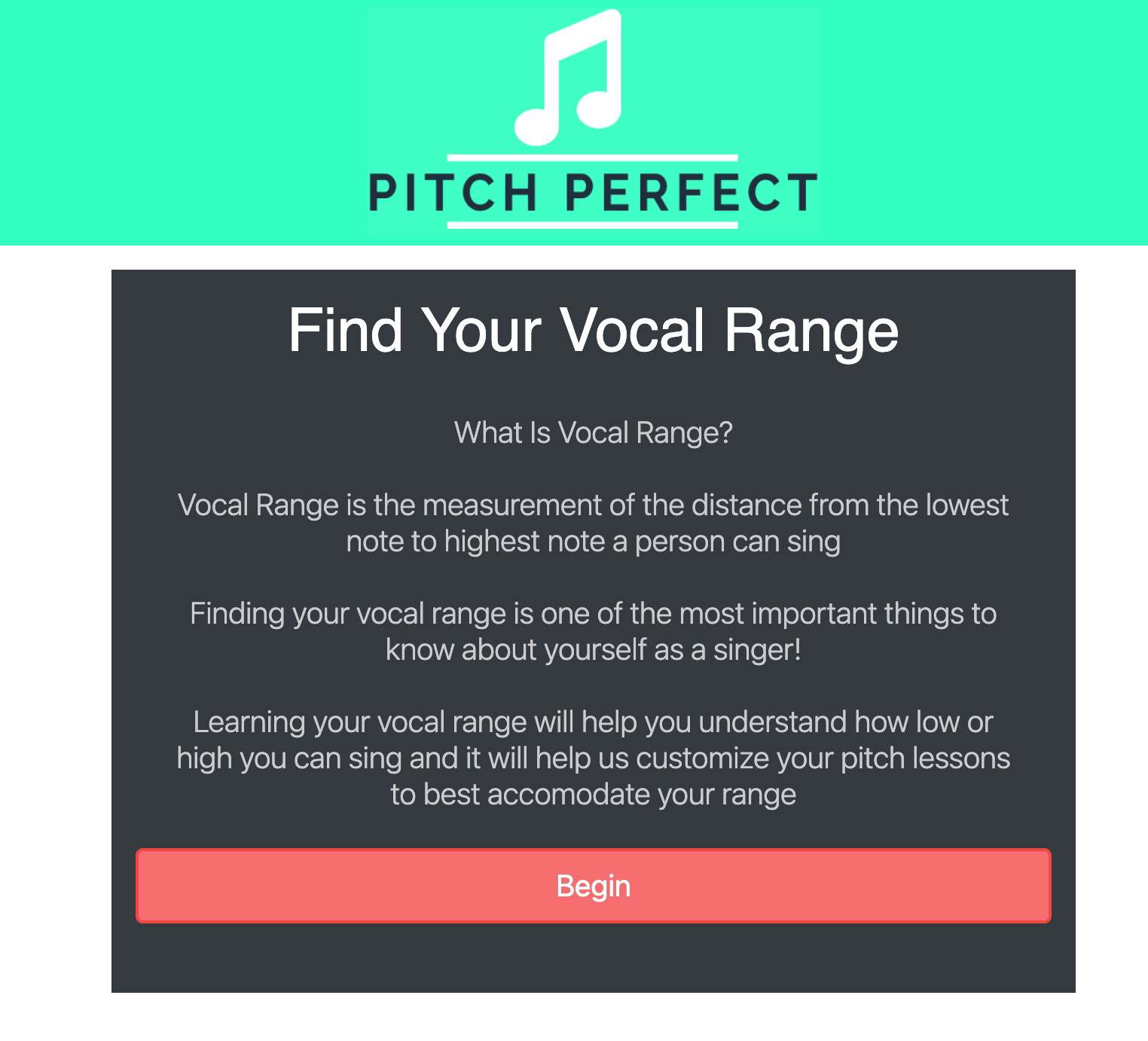

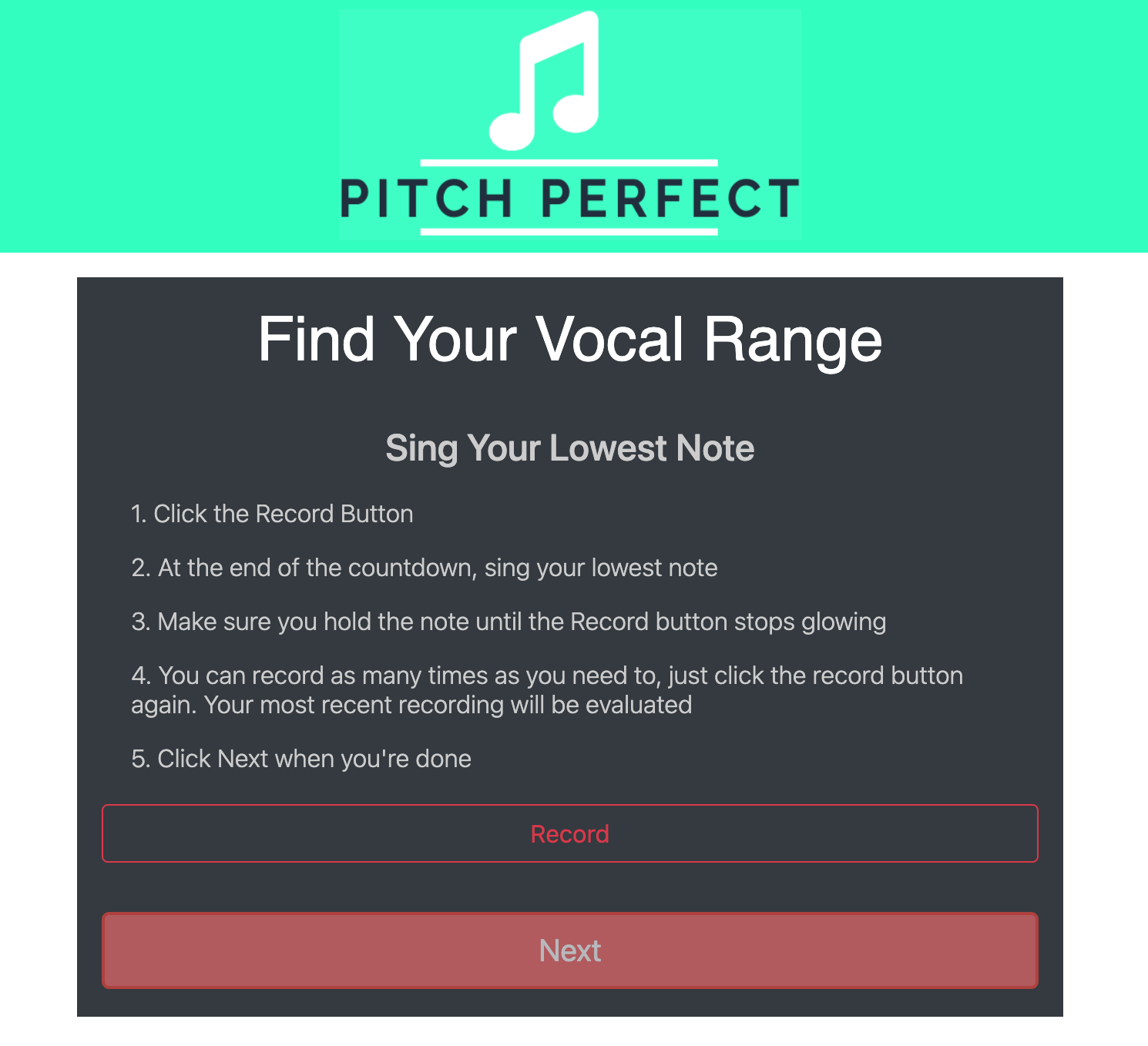

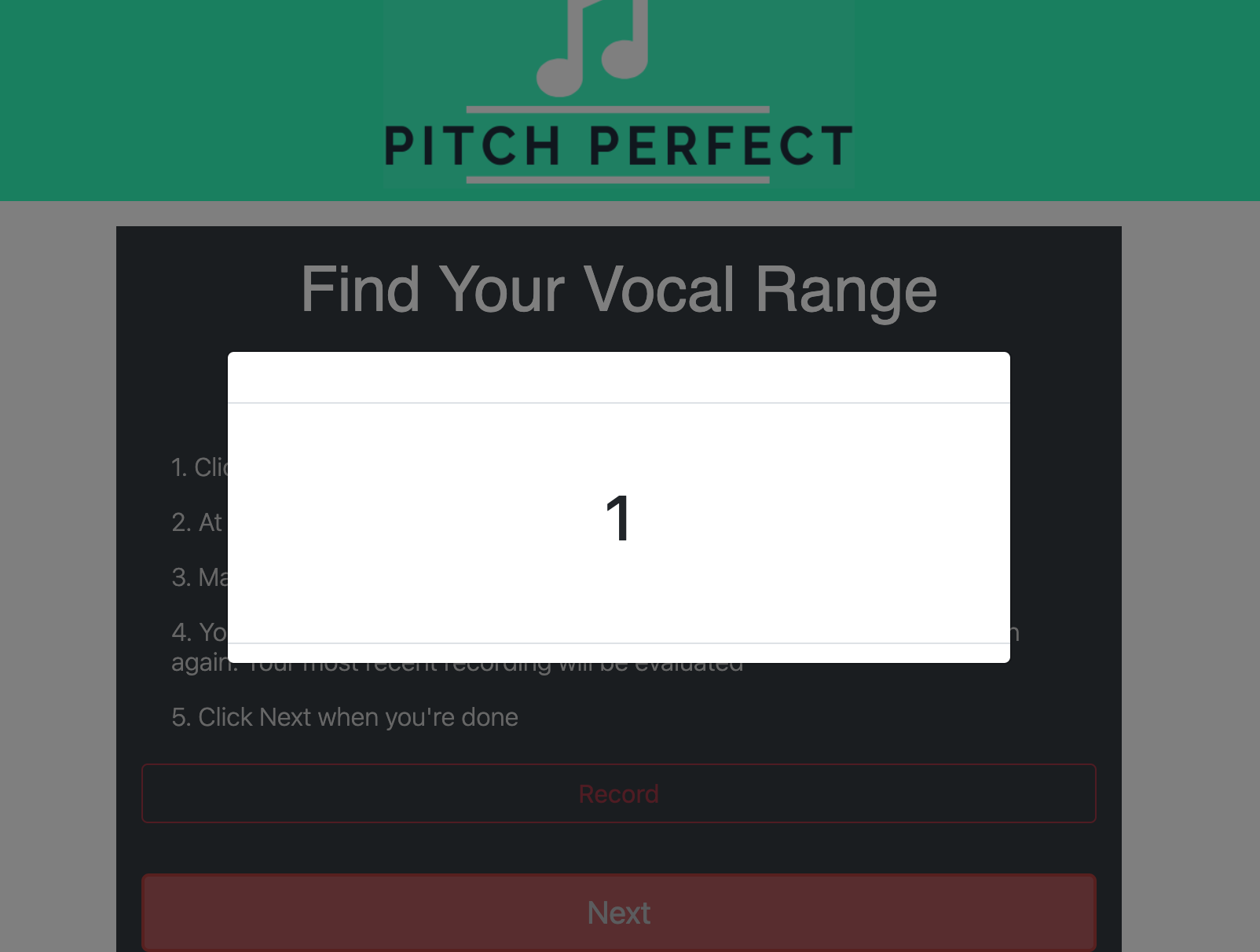

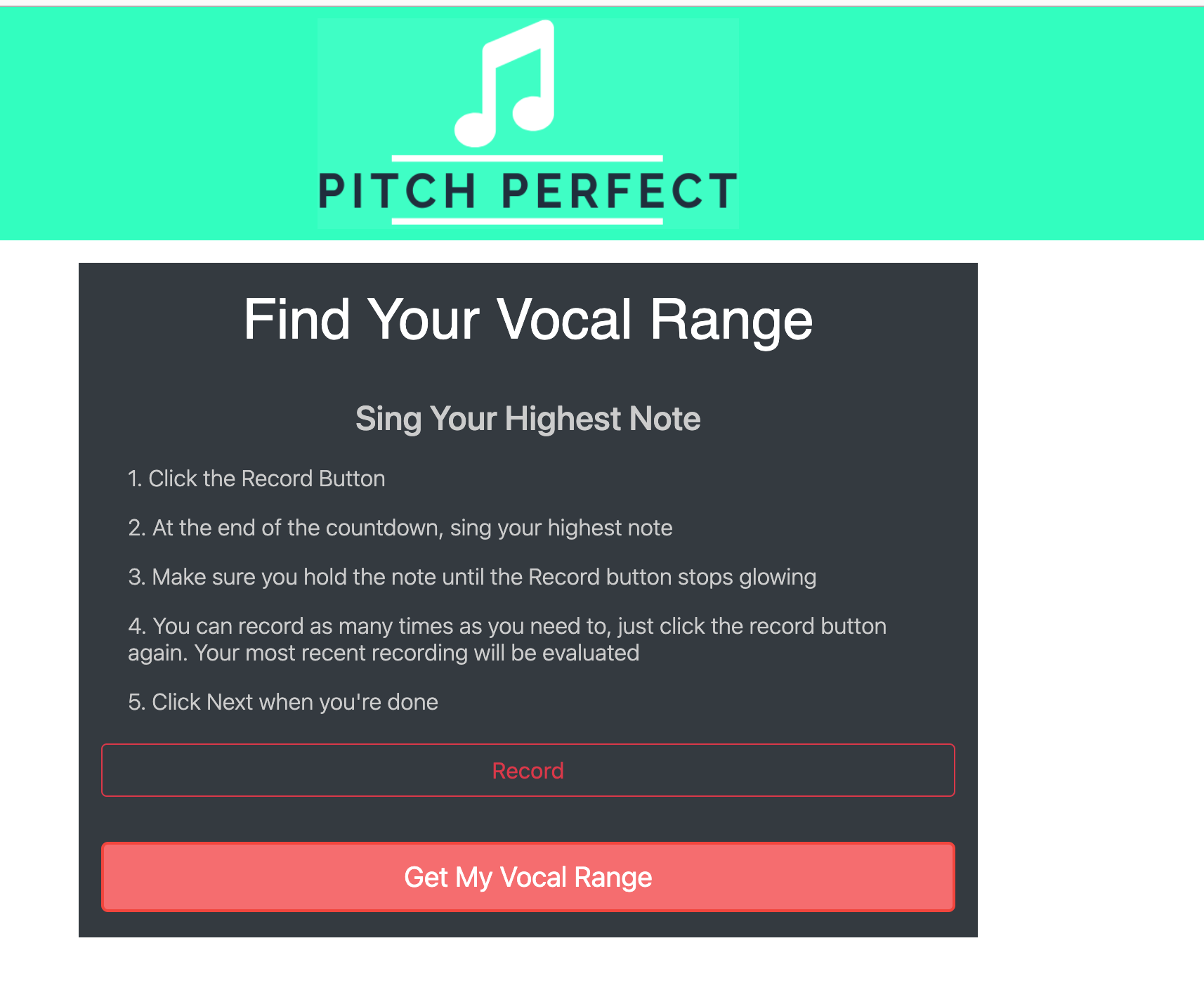

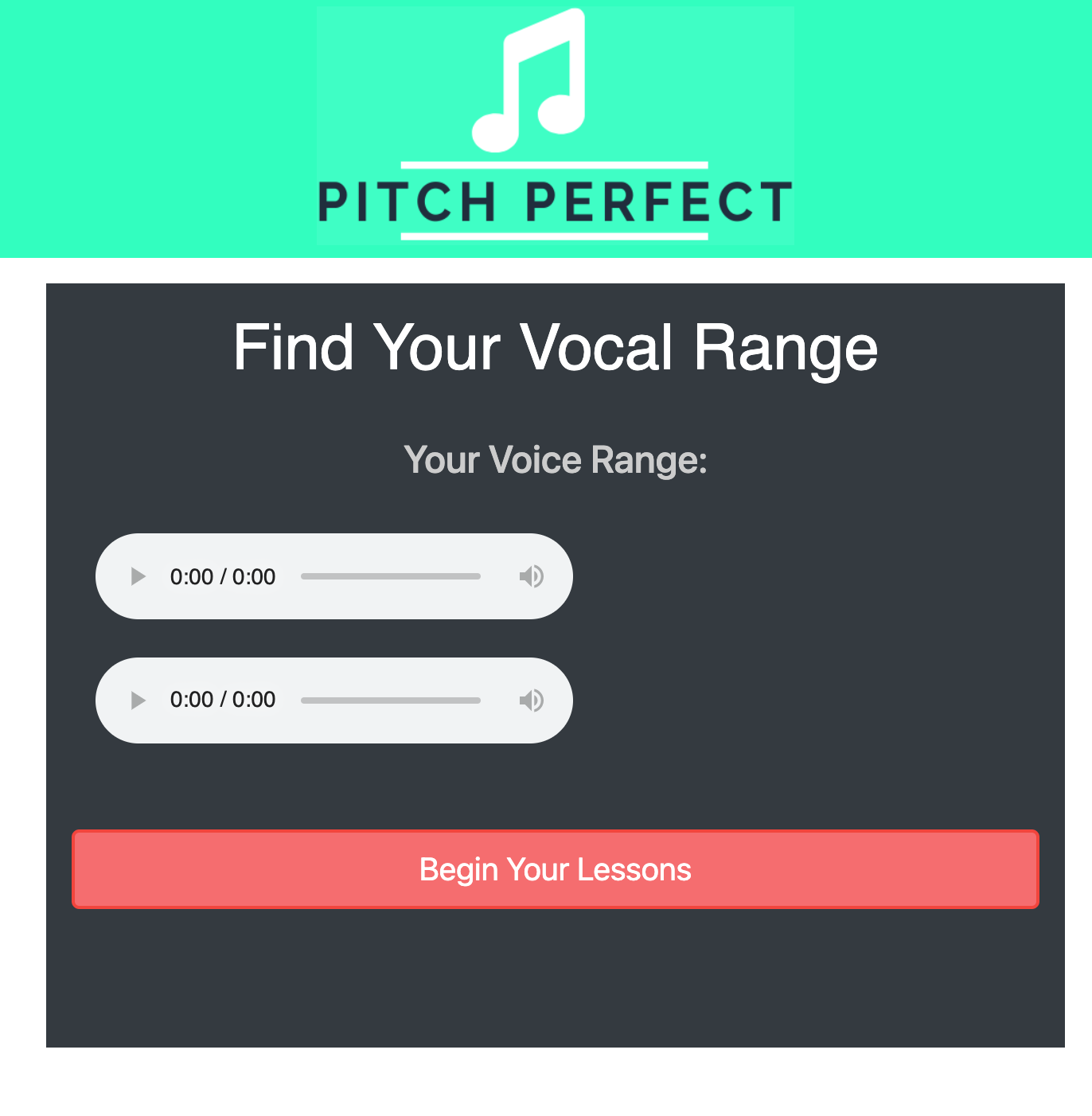

This week, I got started on the voice range evaluation component of the web app. This was a bit tricky because I wanted to reuse the recording code I already had for the pitch lessons, but that code was not general enough to handle the voice range evaluation. A good amount of time went into refactoring it so that it works for the voice range evaluation as well as the rhythm exercises. The voice range evaluation frontend, including its recording capability, is complete; however, it still needs to be integrated with the audio processing component, which will map the recorded audio files to a voice range to display to the user.

Funmbi and I were able to integrate our parts of the web app. We combined the VexFlow music notes with the play rhythm + recording functionality to complete the clapping exercises. We also worked on the dashboard, which links all the different components together. A picture of the dashboard can be found in the team status report.

For the upcoming week, I’ll be working with Funmbi on deploying what we have so far, since deployment might be a bit tricky with the audio files, external APIs, and packages we are using. I will also try to finish up the voice range exercise and integrate with Carlos’s audio processing algorithms so that I can actually display feedback to users for the pitch lessons, rhythm lessons, and voice range evaluation. There’s still a good amount of work to do, so I feel that I may be behind, but hopefully the integration won’t take too long.

I’ve attached photos of the voice range evaluation run-through.