This Week:

- Added sound feedback with the correct timing at startup: IMG_1271

- Set up frame-by-frame analysis with Shayan’s code

Next week:

- Test in real time at a stoplight

- Connect Yasaswini’s code

Carnegie Mellon ECE Capstone, Spring 2021 || Jeanette D-D., Shayan G., Yasaswini D.

This Week:

Next week:

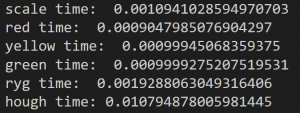

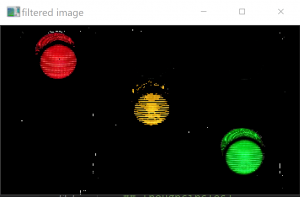

My sole focus this week was further work on the Look Light traffic light detection and traffic light color algorithm. This image processing / pattern recognition algorithm is based on the Hough transform, which returns the positions of circles in an input image whose radii fall within a specified range. Because the ML model may not be trained for the time being, I decided to use my existing algorithm to deduce the color of the light from an image of the whole scene rather than from images of individual lights.
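To make the radius-bounded circle search concrete, here is a minimal pure-Python sketch of circle Hough voting. It is an illustration only, not the implementation used in the project (the report does not name a library; in practice a routine such as OpenCV's `cv2.HoughCircles`, with its `minRadius` and `maxRadius` parameters, would normally do this). The names `circle_points`, `hough_best_circle`, and `n_angles` are hypothetical, and the input is assumed to be a set of already-extracted edge pixels.

```python
import math


def circle_points(cx, cy, r, n_angles=72):
    """Rasterize a circle outline into a set of integer (x, y) edge points.

    Hypothetical helper used only to build a synthetic test input.
    """
    pts = set()
    for k in range(n_angles):
        theta = 2 * math.pi * k / n_angles
        pts.add((cx + round(r * math.cos(theta)),
                 cy + round(r * math.sin(theta))))
    return pts


def hough_best_circle(edge_points, width, height, r_min, r_max, n_angles=72):
    """Return (votes, cx, cy, r) for the best-supported circle.

    Only integer radii with r_min <= r <= r_max are considered, which
    mirrors the "radii within a specified range" constraint.
    """
    best = (0, None, None, None)
    for r in range(r_min, r_max + 1):
        acc = {}  # accumulator: candidate center -> number of supporting edge points
        for x, y in edge_points:
            # Every center that would place (x, y) on a circle of radius r.
            candidates = set()
            for k in range(n_angles):
                theta = 2 * math.pi * k / n_angles
                candidates.add((round(x - r * math.cos(theta)),
                                round(y - r * math.sin(theta))))
            # Each edge point votes at most once per candidate center.
            for cx, cy in candidates:
                if 0 <= cx < width and 0 <= cy < height:
                    acc[(cx, cy)] = acc.get((cx, cy), 0) + 1
        for (cx, cy), votes in acc.items():
            if votes > best[0]:
                best = (votes, cx, cy, r)
    return best
```

Calling `hough_best_circle(circle_points(15, 12, 6), 30, 24, 4, 8)` recovers the synthetic circle's center and radius. A real detector would return every accumulator peak above a threshold rather than a single best circle.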

My approach was essentially to apply my existing algorithm once per color filter (“red”, “green”, “yellow”), count the circles identified under each filter, and pick the color that produced the most circles.
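The selection step described above can be sketched as follows. This is a hedged illustration rather than the project's code: `pick_light_color` is a hypothetical name, and `count_circles` is an assumed callable standing in for "apply the color filter, run the circle detector, and return how many circles were found".

```python
from typing import Callable, Optional, Sequence

# Color filters named in the report; the order only matters for tie-breaking.
COLORS = ("red", "green", "yellow")


def pick_light_color(
    image: object,
    count_circles: Callable[[object, str], int],
    colors: Sequence[str] = COLORS,
) -> Optional[str]:
    """Return the color whose filtered image yields the most circles.

    count_circles(image, color) is an assumed interface, not a real
    library call. Returns None when no filter yields any circle.
    On a tie, the color listed first in `colors` wins.
    """
    counts = {color: count_circles(image, color) for color in colors}
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else None
```

With a stub detector that reports one red circle, three green circles, and no yellow circles, the function returns `"green"`. Returning `None` when every count is zero is a design choice made for this sketch, so that "no light found" is not confused with a color.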

Results:

* On a side note, I improved accuracy since the last status update by increasing the strength of the median blur (larger kernel), which reduces noise that would otherwise be erroneously tagged as circular. (One example of such erroneous tagging was the “detection” of circles in patches of leaves/shrubbery.)
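The effect of a larger median kernel can be illustrated with a small pure-Python filter. This is a sketch under assumptions, not the project's code: `median_blur` is a hypothetical name, the image is a grayscale list of rows, and borders are handled by repeating edge pixels. A library routine such as OpenCV's `cv2.medianBlur(src, ksize)` would normally be used instead.

```python
from statistics import median


def median_blur(img, ksize):
    """Apply a ksize x ksize median filter to a 2D grayscale image.

    img is a list of equal-length rows of numbers; ksize must be odd.
    Pixels outside the image are replaced by the nearest edge pixel.
    """
    if ksize < 1 or ksize % 2 == 0:
        raise ValueError("ksize must be a positive odd integer")
    h, w = len(img), len(img[0])
    r = ksize // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            ]
            # With an odd number of samples, median() returns an actual pixel value.
            out[y][x] = median(window)
    return out
```

On a black image containing a bright 3x3 speck and a bright 9x9 patch, a 3x3 kernel leaves the center of the speck bright, while a 5x5 kernel erases the speck entirely and still preserves the interior of the larger patch. The trade-off is that a kernel large enough to remove noise will also erase genuine lights of comparable size.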

With Yasaswini’s recent progress on her algorithm, my algorithm will no longer have to search for circles in the entire scene, as it was originally intended to. I expect accuracy to increase once I test my algorithm integrated with Yasaswini’s.

I made progress on actually outputting a color as opposed to a list of circles, and on slightly improving accuracy. This seems like the right track. 🙂

Completed this week:

For Next Week:

My sole focus this week was further work on the Look Light traffic light detection and traffic light color algorithm. This image processing / pattern recognition algorithm is based on the Hough transform, which returns the positions of circles in an input image whose radii fall within a specified range.

My work was broken up into the following areas:

Not as much progress as desired. Improvements with respect to exposure and look angle were smaller than desired. Hopefully, the amended combined approach proves to be a useful experiment. If it is accurate, it will also cut down on latency, a metric I haven’t paid much attention to yet.

This week:

Next week:

Completed this week:

For next week:

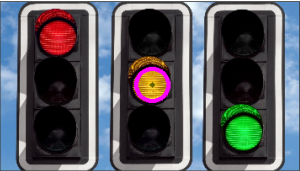

Continuing from last week, my sole focus this week was the Look Light traffic light detection algorithm. Whereas last week was more about better understanding the Hough transform itself, this past week was more about fine-tuning the transform parameterization and preprocessing the image inputs to the transform.

After last week, I was able to partially resolve these issues, but circles were still being mislabeled in simpler images of traffic lights, as in Fig 1 below. Notice how, for the yellow light, the transform detected the curved edge of the light fixture above the light (in which yellow light was reflected) rather than the light itself.

Progress on the Look Light detection algorithm may seem slow. However, because it incorporates color filtering, this progress may also help me with light color detection in the future. (It’s already distinguishing the colors of simple lights, as shown in Figs 2-4.)