Completed this week:

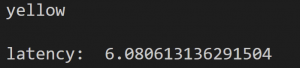

- Did more real-time testing

- Focused on debugging why the traffic light's color wasn't being recognized

- Went through the captured images to see what was being identified and cropped

- The traffic light is being recognized and cropped, but its color isn't being detected in some cases

- Helped mount the Raspberry Pi and the camera on the belt

- Used glue/Velcro to secure them

- Tested out the fit

For Next Week:

- Prepare for the final presentation!

- Film the video

- Work on the poster