PROGRESS MADE

My sole focus this week was the combined Look Light traffic light detection and Traffic Light Color algorithm. As mentioned last week, my main objective was to increase the algorithm's classification accuracy on the outputs from the CV algorithm.

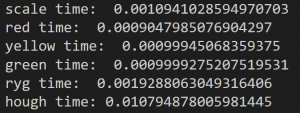

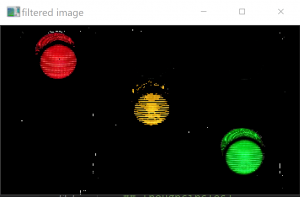

To address these new problems, and to exploit the fact that the images reliably contain only traffic lights (or at least portions of them), I explored how much accuracy I could gain by scrapping circle detection altogether and relying primarily on color filtering and thresholding. This alternative algorithm still filters on red, yellow, and green in series with thresholding, but it now determines the color by taking the argmax over {red, yellow, green} of the number of pixels remaining after thresholding.
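The color-count idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the actual Look Light code. It assumes the cropped traffic-light image has already been converted to HSV using the OpenCV convention (H in [0, 180), S and V in [0, 255]). The `COLOR_RANGES` bounds and the helper names `color_mask` and `classify_light` are hypothetical. In this sketch, the lower S/V bounds stand in for the thresholding step.

```python
import numpy as np

# Hypothetical HSV ranges (OpenCV-style: H in [0, 180), S and V in [0, 255]).
# Illustrative assumptions only, not the tuned values used in Look Light.
# Red wraps around hue 0, so it needs two ranges.
COLOR_RANGES = {
    "red": [((0, 100, 100), (10, 255, 255)), ((170, 100, 100), (179, 255, 255))],
    "yellow": [((20, 100, 100), (35, 255, 255))],
    "green": [((45, 100, 100), (90, 255, 255))],
}
LABELS = ("red", "yellow", "green")


def color_mask(hsv: np.ndarray, ranges) -> np.ndarray:
    """Boolean mask of pixels whose H, S and V all fall inside any of the given ranges."""
    mask = np.zeros(hsv.shape[:2], dtype=bool)
    for lo, hi in ranges:
        in_range = np.all((hsv >= np.array(lo)) & (hsv <= np.array(hi)), axis=-1)
        mask |= in_range
    return mask


def classify_light(hsv: np.ndarray) -> str:
    """Return the color whose filtered/thresholded mask keeps the most pixels (argmax of counts)."""
    counts = [int(color_mask(hsv, COLOR_RANGES[c]).sum()) for c in LABELS]
    return LABELS[int(np.argmax(counts))]


if __name__ == "__main__":
    demo = np.zeros((10, 10, 3), dtype=np.uint8)
    demo[2:5, 2:5] = (60, 200, 200)  # a 3x3 patch of saturated, bright green
    print(classify_light(demo))  # green
```

Note that `np.argmax` returns the first maximum on a tie. An image where no pixels survive any filter would therefore come back as `"red"`, so a fuller version would probably want a minimum-count check before trusting the argmax.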

Results

This approach did increase the classification accuracy. With the inputs passed in from the CV algorithm, the previous iteration's accuracy on our validation set was unfortunately only around 50%, which is unacceptable. The new algorithm's accuracy rose to nearly 90% (26 of 29 Look Light images), close to the overall goal metric from our Design. The full results are reported below:

Overall:

| Category | Count |
|---|---|
| Non-Look Light | 14 |
| Look Light | 29 |
| Total | 43 |

Of Look Light images (rows = algorithm output, columns = actual color):

| | Actual R | Actual Y | Actual G |
|---|---|---|---|
| Output R | 14 | 2 | 0 |
| Output Y | 0 | 2 | 0 |
| Output G | 1 | 0 | 10 |

| Metric | Rate |
|---|---|
| Correct | 0.896552 |
| False Pos | 0.034483 |
| False Neg | 0.068966 |
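The rates above can be recomputed directly from the confusion matrix as a sanity check. The short sketch below assumes only the table itself. Accuracy is the diagonal over the 29 Look Light images. The two nonzero off-diagonal cells, taken as fractions of 29, come out to the same values as the False Pos and False Neg rows. This sketch does not claim how those two labels were defined in the original analysis.

```python
import numpy as np

# Confusion matrix from the table: rows = output (R, Y, G), columns = actual (R, Y, G).
confusion = np.array([
    [14, 2, 0],
    [0, 2, 0],
    [1, 0, 10],
])

total = confusion.sum()                 # 29 Look Light images
accuracy = np.trace(confusion) / total  # diagonal = correctly classified

# Nonzero off-diagonal cells, each as a fraction of all Look Light images.
error_rates = {
    (out, act): confusion[i, j] / total
    for i, out in enumerate("RYG")
    for j, act in enumerate("RYG")
    if i != j and confusion[i, j] > 0
}

print(f"accuracy = {accuracy:.6f}")  # accuracy = 0.896552
for (out, act), rate in error_rates.items():
    print(f"output {out} / actual {act}: {rate:.6f}")
```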

PROGRESS

The Look Light algorithm is now in a (more) acceptable state for the final demo. 🙂

DELIVERABLES FOR NEXT WEEK

Fix any small problems that come up during integration.

Present / demo our findings! 🙂