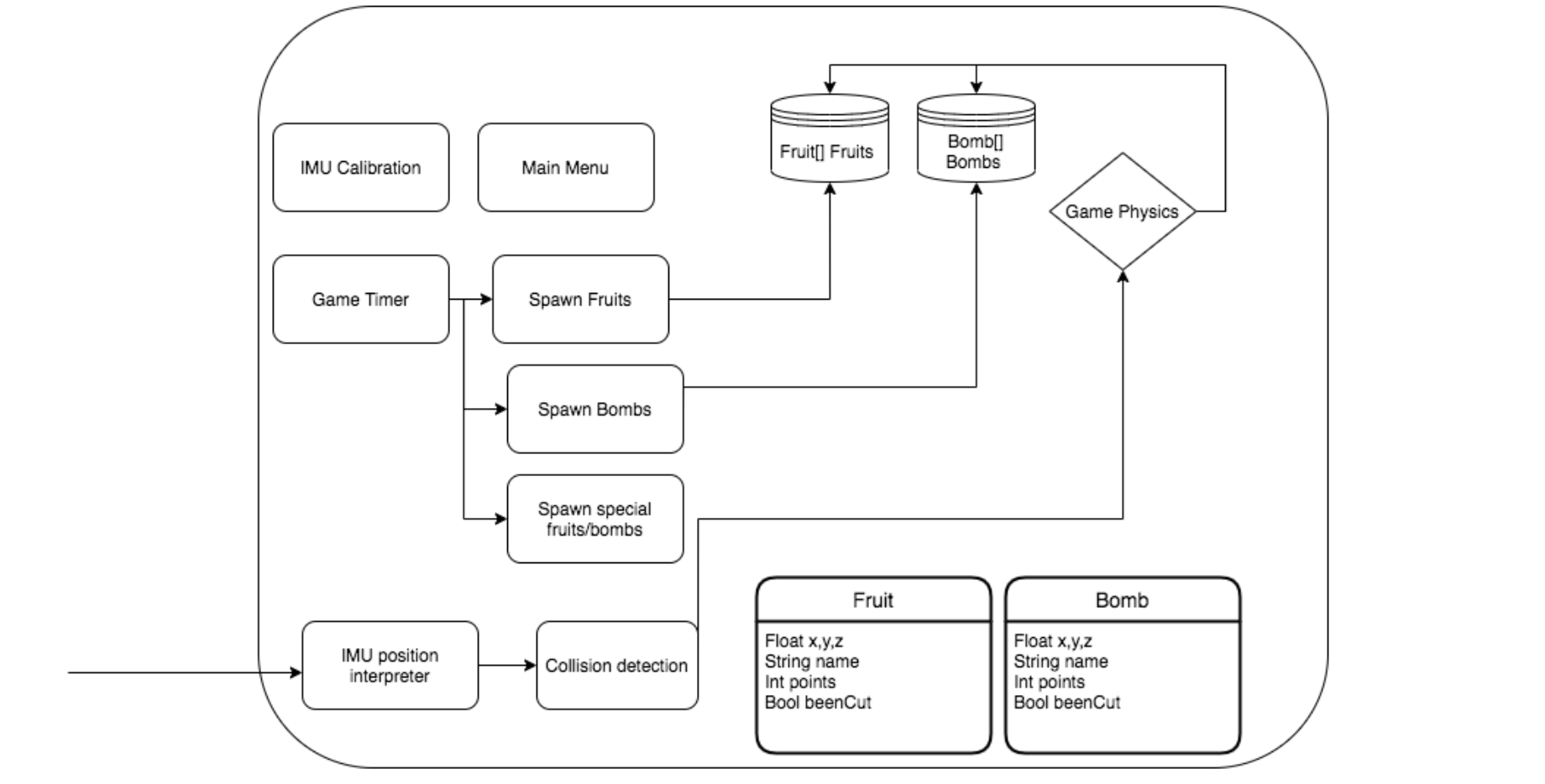

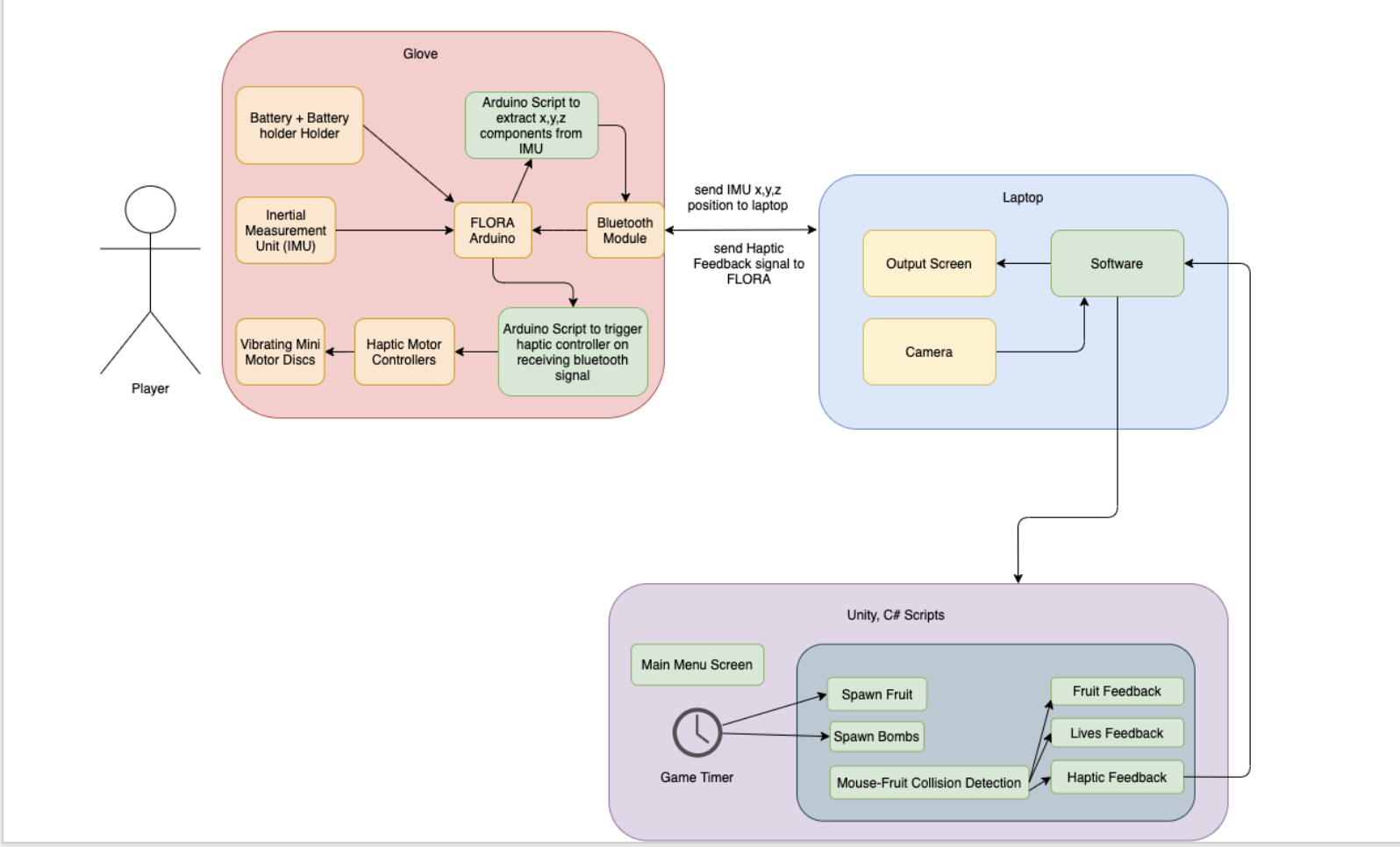

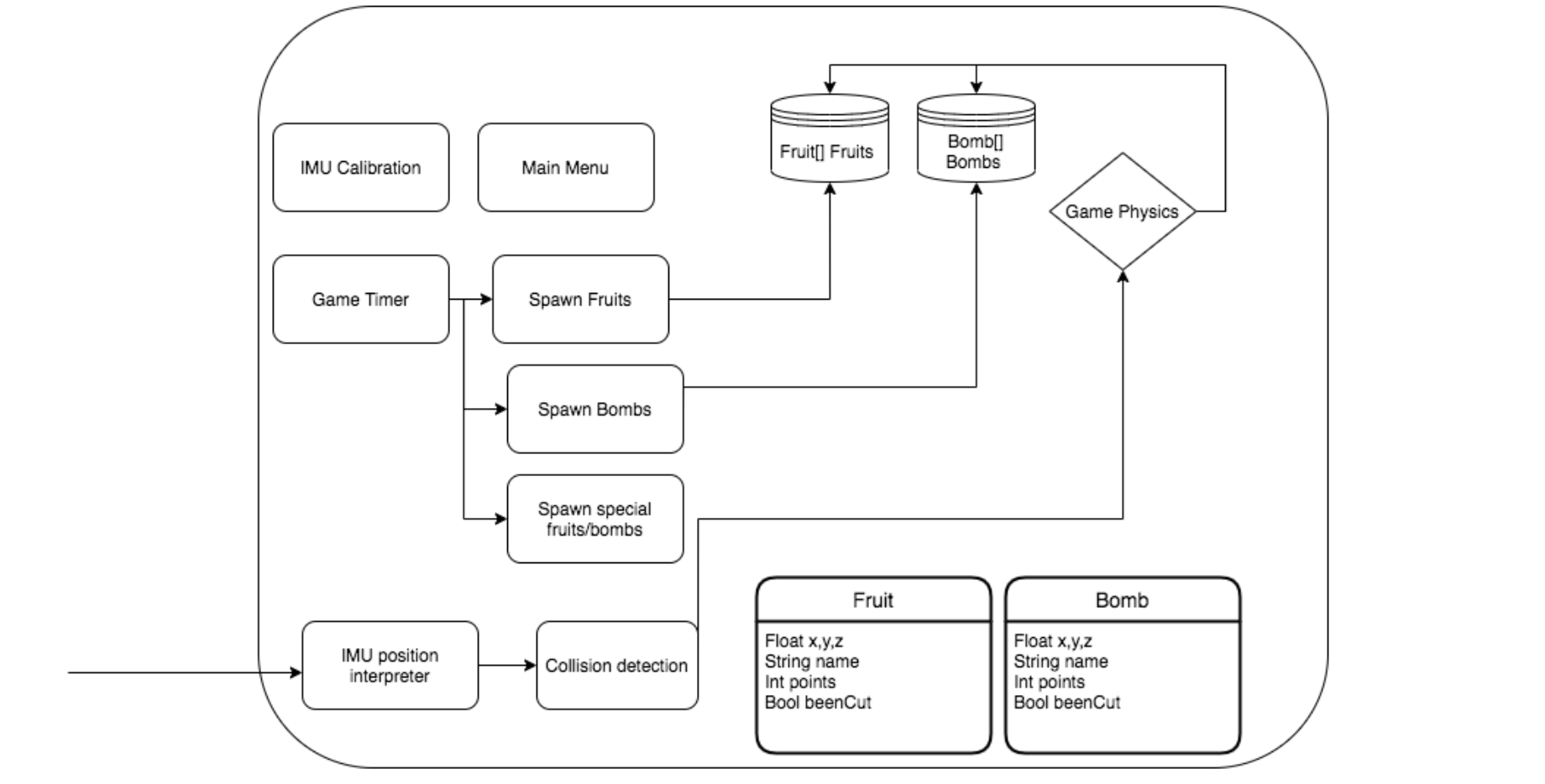

This week I built the software block diagram shown above.

Additionally, I designed our data structures, classes, functions, and the interactions within the game. Having this design in place should let us develop the software quickly once glove development is complete. As a group, we decided to put most of our effort into building a glove with high accuracy and low latency, and to focus on Unity development afterward.

I also researched how to use the IMU and found that its raw data will likely need preprocessing before we can use it, since accelerometer readings are noisy and angles integrated from the gyroscope drift over time. Either a Kalman filter or a complementary filter should work for this preprocessing step, so I started implementing both in Arduino.
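As an illustration of the complementary-filter option, here is a minimal single-axis sketch in C++ (the language Arduino sketches use). It is not the filter from our Arduino code: the class name `ComplementaryFilter`, the weight `alpha = 0.98`, and the tilt formula `atan2(accelX, accelZ)` are all assumptions, and the axis and sign convention will depend on how the IMU is mounted on the glove.

```cpp
#include <cmath>

// Hypothetical single-axis complementary filter (tilt angle in degrees).
// Assumptions: gyroRateDps is the angular rate about the tilt axis in deg/s,
// accelX/accelZ are accelerometer readings in any consistent unit (e.g. g),
// and dt is the time between samples in seconds.
class ComplementaryFilter {
public:
  explicit ComplementaryFilter(float alpha = 0.98f) : alpha_(alpha), angleDeg_(0.0f) {}

  // Seed the estimate from the accelerometer so it does not start at 0.
  void reset(float accelX, float accelZ) { angleDeg_ = accelAngle(accelX, accelZ); }

  // Blend the integrated gyro angle (good short-term, drifts long-term) with
  // the accelerometer angle (noisy short-term, stable long-term).
  float update(float gyroRateDps, float accelX, float accelZ, float dt) {
    float gyroAngle = angleDeg_ + gyroRateDps * dt;
    float accAngle = accelAngle(accelX, accelZ);
    angleDeg_ = alpha_ * gyroAngle + (1.0f - alpha_) * accAngle;
    return angleDeg_;
  }

  float angle() const { return angleDeg_; }

private:
  // Tilt implied by the direction of gravity; assumed sign convention.
  static float accelAngle(float accelX, float accelZ) {
    const float kRadToDeg = 180.0f / 3.14159265f;
    return std::atan2(accelX, accelZ) * kRadToDeg;
  }

  float alpha_;     // weight on the gyro path, between 0 and 1
  float angleDeg_;  // current angle estimate
};
```

On an Arduino, `update()` would be called once per `loop()`, with `dt` taken from the difference between successive `micros()` readings. An `alpha` close to 1 trusts the gyroscope over short time scales while letting the accelerometer slowly correct drift; a Kalman filter instead adjusts that weighting from noise estimates, at the cost of more tuning and computation.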

We are on schedule.

Next week I will work on the Design Report, specifically the sections covering the software aspects of our project. I will also continue implementing the IMU preprocessing step and design the calibration step for the IMU glove.