Very briefly, there are only three items left on the agenda: integrating the warping code with the app (which we will attempt in the next two days), writing up the final report, and creating the demo video. From our discussion with Professor Sullivan, we know that it is already an accomplishment to have the individual components working and the project integrated 2/3 of the way. In a perfect world, the app and the warping algorithm would share a language and be easy to combine. Our current ability to have the inputs/outputs of each piece flow into each other is enough to show that, in such a situation, we would have been able to add this final piece. The other two items are due in the next week. We are working on them now!

Mayur’s Status Report for 5/2

Honestly, there isn’t much to say now. Akash sent me the code which bounded the songs to -10/+15 BPM in the song selection algorithm, and I integrated the new version into the app. Integrating the time warping algorithm with the app code remains difficult. All that’s left otherwise is the final report and the demo video. Overall, capstone has been very design heavy. I gave my feedback to the course instructors via the FCEs, and I honestly had a positive experience with the course.
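As a rough illustration of the BPM bound mentioned above, here is a minimal sketch in Java. It is not Akash's actual code: the class name `BpmWindow`, the method signature, and the idea that the window is measured relative to the runner's pace are all assumptions made for this example. The bounds are passed in as parameters rather than hard-coded.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: keep only songs whose tempo falls in a window around a target pace. */
class BpmWindow {
    /**
     * @param songBpms  tempos of the candidate songs, in BPM
     * @param targetBpm the pace to match (assumed here to be the runner's steps per minute)
     * @param below     how far under the target a song may be, e.g. 10
     * @param above     how far over the target a song may be, e.g. 15
     * @return the tempos that fall inside [targetBpm - below, targetBpm + above]
     */
    static List<Integer> filter(List<Integer> songBpms, int targetBpm, int below, int above) {
        List<Integer> kept = new ArrayList<>();
        for (int bpm : songBpms) {
            if (bpm >= targetBpm - below && bpm <= targetBpm + above) {
                kept.add(bpm);
            }
        }
        return kept;
    }
}
```

With a target of 160 and a -10/+15 window, songs at 150, 160, and 175 BPM would be kept, while 140 and 176 would be dropped.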

Team Status Report for 4/25

We have two weeks left before the end of the semester. At this point, most of our project is wrapping up, although we still have things to aim for. Each component of our project works individually, and 2/3 of the pieces are integrated. We are able to demonstrate that the inputs/outputs of each component match our expectations and would flow as desired if integrated properly.

The final presentation is on Monday and will be given by Akash. Accordingly, our group spent time this week running tests for our components, working on the slide deck, and generating graphs to include in the PowerPoint. We also finalized our decision to move to the phase vocoder, since the wavelet transform experienced greater loss and took longer to process music.

Before “demo day”, we have the following goals:

- Finish testing, and possibly re-test components post-integration

- Attempt to integrate the final two portions

- Implement the -15/+10 BPM requirement for Song Selection

- Create the Video

- Final Report

Mayur’s Status Report for 4/25

Very short update for this week. We are paradoxically both wrapping up and trying to maximize the amount we finish. I continued attempting to integrate, without much success. In particular, my Android phone was not accepting USB connections, which made it difficult to physically try out the app. The issue was eventually solved by removing the battery from my phone and putting it back in. Otherwise, I worked with Akash to get the app working on his phone. There were a few problems that we solved, which he will explain in his report. Finally, the presentation is next week, so I am working on the slides. I am still hopeful about getting the integration done, but it is pretty difficult to do.

Mayur’s Status Report for 4/18

While I believed that the Song Selection Algorithm had been fully implemented in the app, that was not the case. My code was building, but I had not actually tested it on my phone (which was a mistake). As it turns out, I needed to adjust memory settings within the Gradle files of the app to increase the memory allotted for holding more songs at runtime. Afterwards, I started to work on integrating the time warping code. This is extremely complicated for several reasons. First, the code requires passing arguments via the JNI, so it needs to be adjusted so that data is properly serialized/typecast when it is sent between portions of the code. Second, the audioread function from MATLAB needs to be rewritten for C/C++. Finally, several more files need to be “included” with the project, which requires working with CMake and its associated files.

At the moment, I have about 20 tabs open on my computer trying to figure out how to make this work. For now, I believe that the best approach is to send a string with the file name to the C++ code, warp the audio within C++, write a new file from C++, and then return the location of the new file to the Java code to be played. According to the official docs, the number one tip for JNI speed is to minimize the amount of data sent across it. The JNI needs to marshal/unmarshal all data, which takes a long time. For this reason, doing it the way described would most likely be the fastest approach. Next week I am hoping that we will have finished completely integrating the app and possibly begun testing certain parts. The presentation is the week after!
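To make the "only a file name crosses the JNI" idea concrete, here is a minimal sketch of what the Java side might look like. Everything named here is hypothetical: the `Warper` interface, the `warpFile` native method, the `tempoRatio` parameter, and the `"warp"` library name are assumptions for illustration, not the project's actual code. `CopyingWarper` is a pure-Java stand-in that just copies the file, so the flow can be exercised before any native code exists.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

/** Only two strings and a number cross the boundary; audio samples never do. */
interface Warper {
    /** Takes the path of the input file and returns the path of the warped file. */
    String warp(String inputPath, double tempoRatio);
}

/** What a JNI-backed version could look like (method and library names are hypothetical). */
class NativeWarper implements Warper {
    // In the app, System.loadLibrary("warp") would need to run before the first call.
    private native String warpFile(String inputPath, double tempoRatio);

    @Override
    public String warp(String inputPath, double tempoRatio) {
        return warpFile(inputPath, tempoRatio);
    }
}

/** Stand-in with no native code: copies the input instead of warping it. */
class CopyingWarper implements Warper {
    @Override
    public String warp(String inputPath, double tempoRatio) {
        Path in = Paths.get(inputPath);
        Path out = in.resolveSibling("warped_" + in.getFileName());
        try {
            Files.copy(in, out, StandardCopyOption.REPLACE_EXISTING);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toString();
    }
}
```

The player code would only ever depend on `Warper`, so swapping the stand-in for the native version later should not require changes elsewhere in the app.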

Mayur’s Status Report for 4/11

This week, Akash rewrote his code in Java and sent it to me. On my end, I added the song selection algorithm he sent into the code. This week highlighted one future time sink: up until now, I have only been writing code with a “proof of concept” mindset. Unfortunately, this means that the other two parts of the project are much more difficult to integrate, as the code is not designed well for this to happen. In the coming week, I will be refactoring the code so that it will be [hopefully] as simple as dragging-and-dropping Arushi’s code into the app. It’s pretty obvious integrating will be more complicated than that, but I want to get the code as close to that state as possible so that it will be less of a hassle in the future.

Team Status Report for 4/4

Next week is the demo of our project. We will be showing each of our subsystems to Professor Sullivan and Jens.

- Mayur aims to have preliminary implementations of each part of his subsystem (the phone app)

- Akash aims to have a close-to-done implementation of the song selector algorithm

- Arushi wants to have progress on the Wavelet algorithm to show

We look forward to the feedback we will receive, and we think it will help us moving forward.

Mayur’s Status Report for 4/4

This week saw a bit of a pivot for me. Originally, my plan was to add music to the app and show that it was possible to run C code (call a function) from within the codebase.

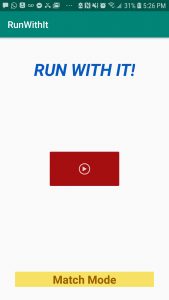

First, I did the former. Indeed, I can play “Africa” by Toto with a WAV file when a user clicks the Play button. And, I can stop it when the user presses the Pause button. At this point, adding more music is fairly straightforward.

The pivot comes from the second goal. Arushi was able to write a Vocoder in MATLAB, and she found a link about how to run MATLAB on Android. My network was really bad, and it took me 10 hours to download the MATLAB software to my computer. I am hoping to investigate the possibility of using MATLAB instead of C on Android using the suggestions at this link: https://www.mathworks.com/matlabcentral/answers/417602-can-matlab-code-is-used-in-android-studio-for-application. This way, I can explain the progress during the demo and maybe give Arushi an easier time developing the Wavelet code.

Next week is the demo, for which my subsystem should be close to done. The idea is to have each part done to some degree (C/MATLAB code, UI, music playability), so that all that is left is to polish the parts and integrate them with the other subsystems. Hence, my goal for the next week is to make sure the subparts have a preliminary implementation.

Mayur’s Status Report for 3/28

My goal for last week was to finish off the basic step counting functionality of the app so that Akash could run… and I finished! I downloaded the app to my phone before sending it to Akash. After pressing a “play” button, the app begins counting steps. Instead of using the step counter sensor, I am currently using the step detector. From our discussion with Professor Sullivan, we decided to gather data every second so that we could test the limits of the warping algorithm. As described in our research phase, the step counter takes multiple extra seconds to gather its measurements because it filters out false positives (which takes extra processing time). When I tried it out with the app, I wasn’t getting any measurements for the first 6-9 seconds after starting up the app. Since the step counter took a variable number of seconds to report data that we wanted at one-second granularity, it was not possible to use it in this case. If we return to a granularity of 60 or 90 seconds, we will switch back to the step counter.
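For per-second data, one way to think about it is that each step detector event gets dropped into a one-second bucket based on its timestamp. The sketch below shows that bookkeeping in plain Java, with no Android dependencies. The class `StepBuckets` and its methods are hypothetical names for this example; it assumes one call per detected step with a timestamp in nanoseconds, which is the unit Android sensor events report.

```java
import java.util.Arrays;

/** Hypothetical helper: turns step-event timestamps into per-second step counts. */
class StepBuckets {
    private static final long NANOS_PER_SECOND = 1_000_000_000L;

    private final long startNanos;
    private int[] counts = new int[60];
    private int seconds = 0; // number of one-second buckets in use so far

    StepBuckets(long startNanos) {
        this.startNanos = startNanos;
    }

    /** Call once per detected step with the event timestamp in nanoseconds. */
    void onStep(long timestampNanos) {
        long elapsed = timestampNanos - startNanos;
        if (elapsed < 0) {
            return; // ignore events from before recording started
        }
        int second = (int) (elapsed / NANOS_PER_SECOND);
        if (second >= counts.length) {
            // Grow the array so that long runs still fit.
            counts = Arrays.copyOf(counts, Math.max(second + 1, counts.length * 2));
        }
        counts[second]++;
        seconds = Math.max(seconds, second + 1);
    }

    /** Steps counted in each elapsed second, including seconds with zero steps. */
    int[] perSecond() {
        return Arrays.copyOf(counts, seconds);
    }
}
```

Seconds with no steps show up as zeros, so gaps in the data stay visible instead of being silently skipped.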

Initially, I displayed the step counts on the screen in the form of an alert dialog. This did not work out for two reasons. The first was that I was unsure if there was a limit to the character count of a dialog box. The second was that I could not find a way to copy the text of the dialog into an email program. As a solution, I implemented code that brought up a ready-filled email to send out the step counts.
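The pre-filled email can be pictured as a `mailto:` link with the subject and body already filled in. Below is a minimal plain-Java sketch of building such a link from the step counts. The class `StepEmail`, the comma-separated body format, and the example address are assumptions for illustration only; on Android, a string like this would typically be handed to an email intent, which is not shown here.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

/** Hypothetical helper: builds a mailto: link with the step counts as the body. */
class StepEmail {
    static String mailto(String to, String subject, int[] perSecondCounts) {
        StringBuilder body = new StringBuilder();
        for (int i = 0; i < perSecondCounts.length; i++) {
            if (i > 0) {
                body.append(',');
            }
            body.append(perSecondCounts[i]);
        }
        return "mailto:" + to + "?subject=" + encode(subject) + "&body=" + encode(body.toString());
    }

    private static String encode(String s) {
        try {
            // URLEncoder writes spaces as '+', but mailto links expect %20.
            return URLEncoder.encode(s, "UTF-8").replace("+", "%20");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always available
        }
    }
}
```

For instance, `StepEmail.mailto("runner@example.com", "Step counts", new int[] {2, 0, 1})` would produce a link whose body is the encoded form of `2,0,1`.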

My goal for next week is to play music off the phone. As a stretch goal, I would want to call some function in C/C++ just as a proof-of-concept. Admittedly, the UI looks hideous at the moment. This is something else I can fix.

Pictures:

<– The UI

<– Automatically pulls up email with pre-filled body after user presses pause button

Mayur’s Status Report for 3/21

As I described in last week’s report, the outbreak of COVID-19 has forced all classes online. Our group wrote a Statement of Work that describes how we plan on dividing the project (see the team status report for this week). Essentially, we will be continuing with our initial division of labor, with small modifications to compensate for the difficulty of integrating our individual portions. During this next week, I will be creating the framework that allows Android to record step counts. I will be writing an app that will allow Akash to record his pace at 90-second intervals during a 30-minute run. Currently, my plan is to emit the recorded data as a list and send it in an email with a hard-coded address. This is subject to change, however. Aarushi will be using the data we gather to test out her time warping implementation.

In regards to the implementation of the project this week, I spent time attempting to work with the Native C/C++ App template in Android Studio. Before, I was working with an Empty Activity instead. As it turns out, there were many more problems than I initially anticipated with setting up the new app. Right from the start, I received errors when Android Studio tried to build the project. Specifically, I received errors because I had not accepted certain licenses. I attempted to follow the solutions in this link https://stackoverflow.com/questions/54273412/failed-to-install-the-following-android-sdk-packages-as-some-licences-have-not-b to solve the problem. But, I got an error along the way that told me the JAVA_HOME environment variable had not been set. I was confused at first, since I assumed that Android Studio would just set it to the JDK (actually JRE) that came embedded with the program (apparently not). To solve the issue, I downloaded the latest version of Java (jdk-14) and set my environment variables manually. Surprisingly, Android Studio does not support versions of Java above 8, and so I had to uninstall jdk-14. Then, I found out that there are GUI issues with using the default shell on Windows when trying to accept the licenses. In response, I downloaded Git Bash. Eventually, this is how I fixed the issues I had:

- Download Git Bash

- Navigate to ~/AppData/Local/Android/Sdk/tools/bin

- Run $ export JAVA_HOME="C:\Program Files\Android\Android Studio\jre"

- Run $ ./sdkmanager.bat --licenses

- Reply yes to every prompt

- Reload Android Studio and attempt to re-build the project

- Accept every prompt to resolve issues related to getting/using a C/C++ compiler