Status Report

—

Risk Management Update (most remains the same)

We have built risk management into our project in a couple of ways. First, in terms of the schedule, as we mentioned before, we added slack time so that we can absorb any issues we run into during development without running out of time at the end of the semester.

On the design side, we have backups for problems that may come up while working on the project. We have four specific cases for our metrics that we laid out earlier. The main risk factor is the Dual-Tree Complex Wavelet Transform Phase Vocoder. If it does not work, we will fall back on the STFT-based Phase Vocoder, which we know works well for music. We may implement our own or use a library from GitHub. We have done our research and are putting most of our time into this aspect of the project, since it is the focal point that differentiates us from similar apps while also being the primary risk factor.

The second biggest risk factor is the accuracy of step detection on smartphones. Our testing showed that the phone meets our accuracy requirements, so we do not expect this to be an issue. If we find during implementation and testing that the accuracy is not as good as we thought, we will order a pedometer and collect the data from it instead.
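The phone-versus-pedometer decision above can be sketched as a simple accuracy check. This is only an illustrative sketch: the function names, the trial data, and the 0.95 threshold are hypothetical placeholders, not our actual requirement or test results.

```python
def step_count_accuracy(detected_steps, actual_steps):
    """Return accuracy as a fraction in [0, 1], defined as 1 - relative error."""
    if actual_steps <= 0:
        raise ValueError("actual_steps must be positive")
    relative_error = abs(detected_steps - actual_steps) / actual_steps
    return max(0.0, 1.0 - relative_error)


def choose_step_source(trials, required_accuracy):
    """Pick the step source from (detected, actual) trial pairs.

    Returns ("phone", mean_accuracy) if the mean accuracy meets the
    requirement, otherwise ("pedometer", mean_accuracy).
    """
    if not trials:
        raise ValueError("at least one trial is required")
    accuracies = [step_count_accuracy(d, a) for d, a in trials]
    mean_accuracy = sum(accuracies) / len(accuracies)
    source = "phone" if mean_accuracy >= required_accuracy else "pedometer"
    return source, mean_accuracy


# Hypothetical trials: (steps reported by the phone, steps counted by hand).
example_trials = [(98, 100), (101, 100)]
source, accuracy = choose_step_source(example_trials, required_accuracy=0.95)
print(source, round(accuracy, 3))
```

Averaging over several trials, rather than judging a single run, keeps one noisy measurement from triggering the fallback on its own.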

The only change in our risk management is to account for lost time and the loss of our ability to work together in person. This may hinder integration and full system testing, because we chose the Samsung S9 as our base test device since it had sufficient step count accuracy. In our new situation, Akash is the only member who has access to this device, and he was not one of the initial test subjects for our running data. Our discussion with our professor, Professor Sullivan, and our TA, Jens, helped us come up with a reasonable backup plan. We will each write our individual parts, test them independently, and create deliverables that demonstrate their functionality. These deliverables are a new addition to our project, meant to cover the case where we are not able to integrate the individual components. Thus, while we will still aim to integrate the components, integration will be a challenge and a stretch goal.

The smaller risk factors involve the timing of the application. To address them, we will widen the timing windows for our refresh rate and minimize the time the app takes to start when first opened.

In terms of budget, we have not run into any issues yet and do not expect to, since we already have almost everything we need.

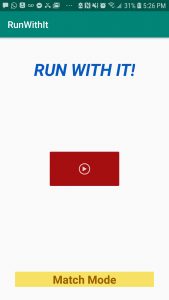

<– The UI

<– Automatically pulls up email with pre-filled body after user presses pause button