Jonathan’s Status Update for Saturday April 25

This week I mainly finished the hardware and continued refining the real-time imaging.

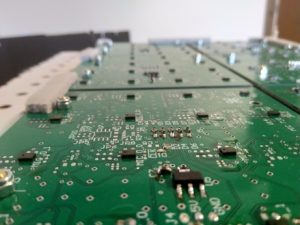

The hardware was half completed at the beginning of this week, with 48 microphones assembled on their boards and tested. During the week I assembled the remaining 48.

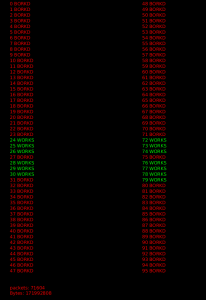

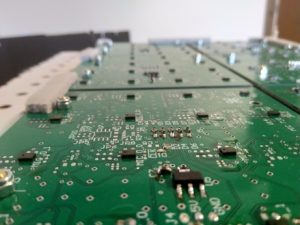

Since the package for these microphones, LGA_CAV, is very small, with a sub-mm pitch and no exposed pins, soldering was difficult and often required rework. Several iterations of the soldering process were used, beginning with a reflow oven, then moving to hot air and solder paste, and finally manual tinning followed by hot air reflow. The final process was slightly more time consuming than the first two, but significantly more reliable. In all, around 20% of the microphones had to be reworked. To aid in troubleshooting, a few tools were used. The first was a simple utility I wrote based on the real-time array processor, which examined each microphone for a few common failures (stuck 0, stuck 1, “following” its partner, and conflicting with its partner).

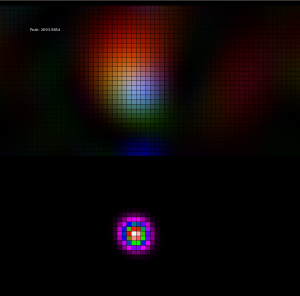

This image shows the output of this program (the “bork detector”) for a partially working board. Only microphones 24-31 and 72-79 are connected (a single, 16-microphone board), but 27, 75, and 31 are broken. This made it quick to determine where to look for further debugging.

The data interface of PDM microphones is designed for stereo use, so each pair takes a single clock line, and has a single digital output for both. Based on the state of another pin, each “partner” outputs data either on the rising or falling edge of the clock, and goes hi-z in the other clock state. This allows the FPGA to use just half as many pins as there are microphones (in this case, 48 pins to read 96 microphones). Often the errors in soldering could be figured out based on this.
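The kind of per-pair check the “bork detector” performs can be sketched roughly as follows. This is a minimal illustration, not the actual utility: the function name, the `follow_threshold` value, and the rule used to decide that one microphone is “following” its partner are all assumptions, and the “conflicting” case is left out because its exact definition isn’t given above.

```python
import numpy as np

def classify_pair(rising_bits, falling_bits, follow_threshold=0.98):
    """Classify the two microphones that share one PDM data line.

    rising_bits / falling_bits: 0/1 samples of the shared data line captured
    on the rising and falling clock edges. follow_threshold is a hypothetical
    tuning value, not taken from the original tool.
    """
    rising = np.asarray(rising_bits, dtype=np.uint8)
    falling = np.asarray(falling_bits, dtype=np.uint8)

    def stuck(bits):
        # A healthy PDM stream toggles constantly, so an all-0 or all-1
        # capture is treated as a stuck output.
        if not bits.any():
            return "stuck 0"
        if bits.all():
            return "stuck 1"
        return "ok"

    status = {"rising": stuck(rising), "falling": stuck(falling)}

    # Assumption: two healthy microphones produce streams that agree only
    # about half the time, so near-total agreement suggests that one output
    # is simply repeating the value left on the line by its partner.
    agreement = float(np.mean(rising == falling))
    both_toggle = status["rising"] == "ok" and status["falling"] == "ok"
    if both_toggle and agreement >= follow_threshold:
        status["pair"] = "one microphone is following its partner"
    else:
        status["pair"] = "ok"
    return status
```

Running this over all 48 data lines would give a quick table of which pairs deserve a closer look with the oscilloscope.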

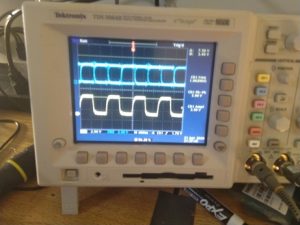

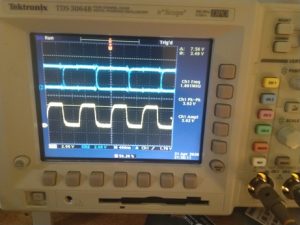

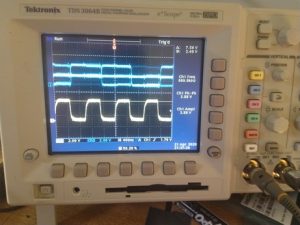

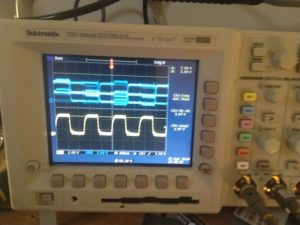

Using an oscilloscope, a few common errors could quickly be identified, and tracked to a specific microphone, by probing the clock line and data line of a pair (blue is data, yellow clock):

Both microphones are working

The falling-edge microphone is working, but the data line of the rising-edge microphone (micn_1) is disconnected.

The falling-edge microphone is working, but the rising-edge microphone’s select line is disconnected.

The falling-edge microphone is working, but the rising-edge microphone’s select line is connected to the wrong direction (low, where it should be high).

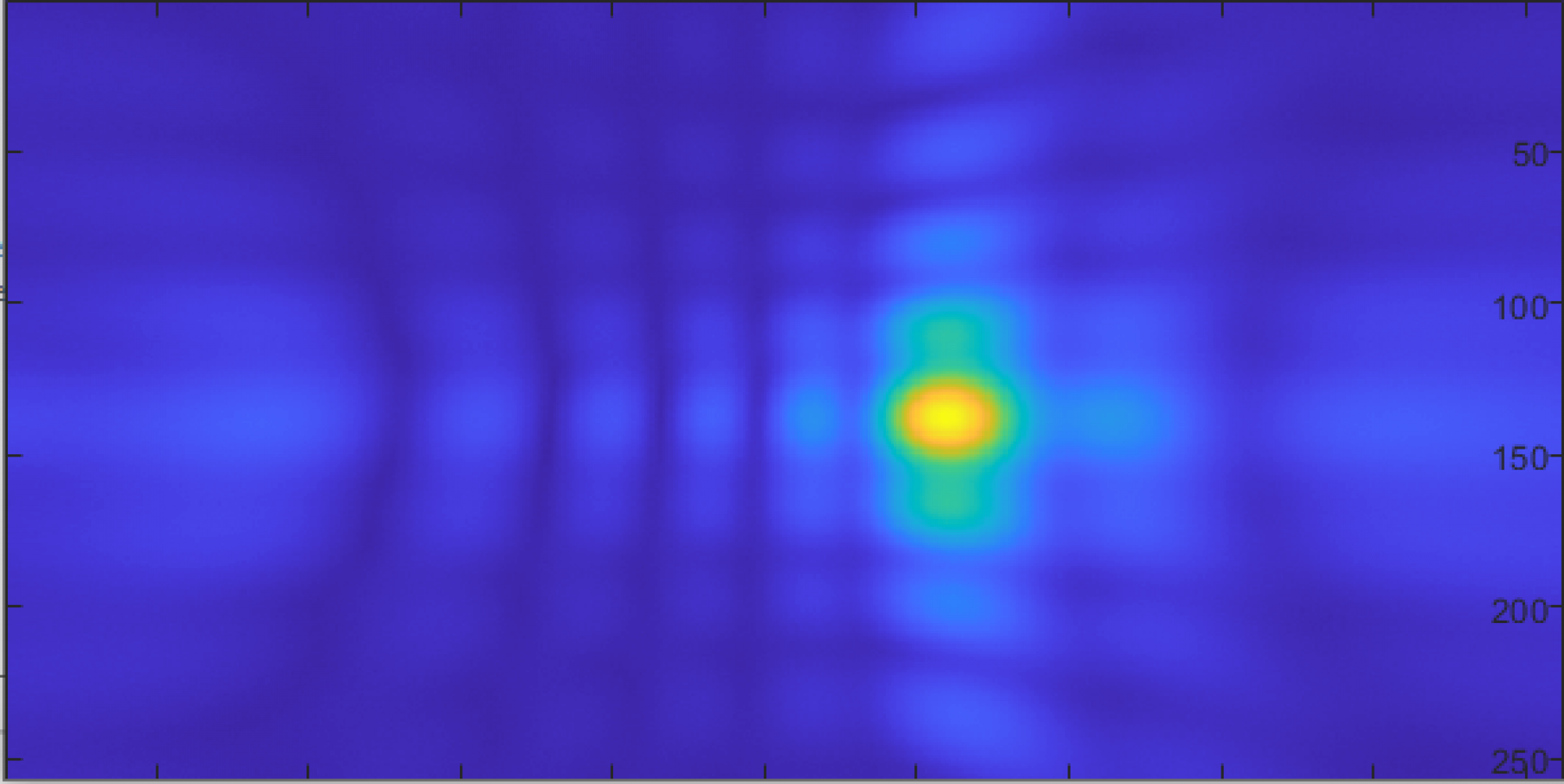

The other major thing that I worked on this week was refining the real-time processing software. The two main breakthroughs that allowed for working high-resolution, real-time imaging were frequency-domain processing and a process which I’ll refer to as product of images (there may be another name for this in the literature or industry, but I couldn’t find it).

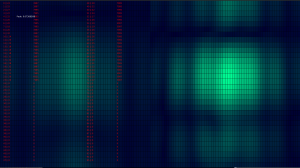

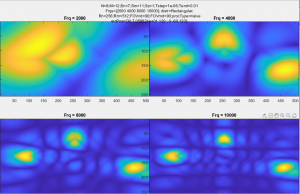

Before using product of images, the images generated by each frequency were separate, as in this image where two frequencies (4000 and 6000Hz) are shown in two images side by side:

Neither image is particularly good on its own (this particular image also used only half of the array, so the Y axis has particularly low gain). They can be improved significantly, though, by multiplying two or more of these images together. Much like how a Kalman filter multiplies distributions to get the best properties of all the sensors available to it, this multiplies the images from several (typically three or four) frequencies, to get the small spot size of the higher frequencies, as well as the stability and lower sidelobes of lower frequencies. This also allows a high degree of selectivity: a noise source that does not have all the characteristics of the source we’re looking for will be reduced dramatically.
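As a rough sketch of the idea, the product can be taken pixel by pixel over the per-frequency images. The function name and the choice to scale each image to a peak of 1 before multiplying are assumptions made for this illustration, not necessarily what the real-time program does.

```python
import numpy as np

def product_of_images(images, floor=1e-6):
    """Combine per-frequency beamformed images by pixel-wise multiplication.

    images: iterable of 2D arrays of non-negative magnitudes, one per
    frequency. Each image is first scaled to a peak of 1 (an assumption made
    here so that no single frequency dominates the product).
    """
    combined = None
    for img in images:
        img = np.asarray(img, dtype=float)
        scaled = img / max(img.max(), floor)  # floor avoids dividing by zero
        combined = scaled if combined is None else combined * scaled
    return combined

# Toy 1x5 "images": the low frequency has a wide main lobe, the high
# frequency has a narrow main lobe but strong sidelobes at the edges.
low = np.array([[0.2, 0.9, 1.0, 0.9, 0.2]])
high = np.array([[0.8, 0.1, 1.0, 0.1, 0.8]])
combined = product_of_images([low, high])
```

In the toy example the centre pixel stays at full scale, while the wide lobe of the low frequency and the sidelobes of the high frequency are both pushed down, because each is weak in at least one of the component images.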

For a simple example, suppose we have a fan that has relatively flat (“white”) noise from 100 Hz – 5 kHz, and 10 dB lower noise above 5 kHz (these numbers based roughly on the fan in my room). If the source we’re looking at has strong components at 2, 4, 6, and 8 kHz, and the two have roughly equal peak signal power, then “normal” time domain processing that adds power across the entire band would have the fan be vastly more powerful than the source we’re looking for, as the overall signal power would be greater because of the very wide bandwidth (4.9 kHz bandwidth as opposed to just a few tens of Hz, depending on the exact microphone bitrate). Doing product of images though would have the two equal at 2 and 4 kHz, but would add the 10 dB difference at both 6 and 8 kHz, in theory giving a 20 dB SNR over the fan. This, for example, is an image created from about 8 feet away, where the source was so quiet my phone microphone couldn’t pick it up more than 2-3 inches away:

In practice this worked exceptionally well, largely cancelling external noise, and even some reflections, for very quiet sources. Most of the real-time imaging used this technique. Some pictures and videos instead took the component images, mapped each to a color based on its frequency (red for low, green for medium, blue for high), and made a single image from these. In this case, artifacts at specific frequencies were much more visible, but it did give more information about the frequency content of sources, and allowed identifying sources that did not have all the selected frequencies. In the image above, the top part is an RGB image, and the lower part uses product of images.

Finally, frequency-domain processing was used to allow very fast operation, giving multiple frames per second. Essentially, each input channel is multiplied by a sine and a cosine wave, and the sum of each of those products over the entire input duration (typically 50 ms) is stored as a single complex number. So for a microphone, if f(t) is the reading (1 or -1) at sample t, and c is 2π times the frequency we’re analyzing divided by the sample rate, then this complex number is given by f(0)*cos(c*0) + f(1)*cos(c*1) + … + f(n)*cos(c*n) + f(0)*sin(c*0)*j + f(1)*sin(c*1)*j + … + f(n)*sin(c*n)*j. Once all of these are computed, they’re approximately normalized (any values too small are kept small, larger values are kept relatively small, but allowed to grow logarithmically). To generate images, a phase table, precomputed when the program first starts, gives a phase offset for each element, for each pixel. This phase offset is proportional to the frequency of interest and to what the time delay would have been if we were doing time-domain delay-and-sum. Each microphone’s complex output value is multiplied by a value with this phase and magnitude 1, then those numbers are summed and the amplitude taken to get a value for that pixel in the final image. While significantly more complicated than delay-and-sum, and much more limited as it can only look at a small number of specific frequencies, this can be done very quickly. The final real-time imaging program was able to achieve 2-3 frames per second, where post-processing in the time domain typically takes several seconds (or even minutes, depending on the exact processing method being used).
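The steps in this paragraph can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names, the 343 m/s speed of sound, and the geometry arguments are hypothetical; c is taken as 2π times frequency over sample rate so that cos(c*t) completes one cycle per period; and the logarithmic normalization step is skipped.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value

def channel_phasors(bits, freq_hz, sample_rate_hz):
    """One complex number per microphone: sum over t of f(t)*(cos(c*t) + j*sin(c*t)).

    bits: array of shape (n_mics, n_samples) holding +1 / -1 readings.
    """
    t = np.arange(bits.shape[1])
    c = 2.0 * np.pi * freq_hz / sample_rate_hz  # radians per sample
    return bits @ np.exp(1j * c * t)

def build_phase_table(mic_xyz, pixel_xyz, freq_hz):
    """Precompute unit-magnitude steering factors, shape (n_pixels, n_mics).

    The phase is the frequency times the delay that time-domain delay-and-sum
    would have used for that microphone and pixel. The minus sign matches the
    +j convention in channel_phasors, so a source at the pixel adds in phase.
    """
    dist = np.linalg.norm(pixel_xyz[:, None, :] - mic_xyz[None, :, :], axis=2)
    delay = dist / SPEED_OF_SOUND
    return np.exp(-1j * 2.0 * np.pi * freq_hz * delay)

def render(phasors, phase_table):
    """Multiply each microphone's phasor by its steering factor, sum, take amplitude."""
    return np.abs(phase_table @ phasors)
```

The phase table only depends on the geometry and the chosen frequency, so it is built once; each frame then costs one pass over the samples per frequency plus a small matrix-vector product per image.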

Team status update for Saturday April 25

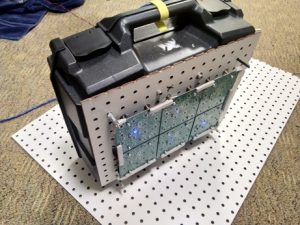

This week saw the completion of all major parts of the project. The hardware is finished:

And software is working:

John worked mainly on finishing the hardware, completing the remaining half of the array this week, while Sarah and Ryan worked on the software, generating images from array data.

Going forward, we mainly plan to make minor updates to the software, primarily to make it easier to use and configure. We may also make minor changes to improve image quality.

Sarah’s status report for Saturday, Apr. 25

This week, I worked with Ryan to build delay and sum beamforming without the use of external libraries provided from MATLAB.

This beamforming method amplifies signals from a specific direction while suppressing other signals. It is the conventional method of beamforming, is easy to implement, and has been widely used for array processing. One disadvantage is low resolution for closely spaced targets. However, as long as we are able to detect the source of the leak for our project, this is acceptable. Several steps were taken to achieve the output. First, the signals captured by the microphones have similar-looking waveforms but include delays. I calculated each delay from the known angle, the distance between the microphones, and the distance from the sound source. Using simple geometry and algebra with polar coordinates, I obtained the x, y, and z distances and divided by the speed of sound to get the delay. Two other delay calculation methods were also tried, but the current method gave better results. Then, the signal of each microphone was shifted by the appropriate delay. The shifted signals were then summed and normalized by the number of microphone channels.
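For readers who want to see the steps concretely, here is a small Python sketch of the same sequence (our implementation was in MATLAB, so this is an illustration rather than our code). The angle convention in `polar_to_xyz`, the 343 m/s speed of sound, and rounding delays to whole samples are all assumptions of this sketch.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed

def polar_to_xyz(r, azimuth, elevation):
    """Convert range and two angles (radians) to x, y, z.

    Assumed convention: z points straight out of the array, azimuth rotates
    toward +x, elevation rotates toward +y.
    """
    x = r * np.cos(elevation) * np.sin(azimuth)
    y = r * np.sin(elevation)
    z = r * np.cos(elevation) * np.cos(azimuth)
    return np.array([x, y, z])

def delay_and_sum(signals, mic_xyz, focus_xyz, fs):
    """Focus the array on one point.

    signals: (n_mics, n_samples); mic_xyz: (n_mics, 3); fs: sample rate in Hz.
    Returns the aligned sum, normalized by the number of microphones.
    """
    dist = np.linalg.norm(mic_xyz - focus_xyz, axis=1)
    delays = (dist - dist.min()) / SPEED_OF_SOUND   # extra travel time, seconds
    shifts = np.round(delays * fs).astype(int)      # whole-sample approximation
    n = signals.shape[1] - shifts.max()
    # Advance each channel by its extra delay so the wavefronts line up.
    aligned = np.stack([sig[s:s + n] for sig, s in zip(signals, shifts)])
    return aligned.sum(axis=0) / signals.shape[0]
```

Evaluating the mean square of `delay_and_sum(...)` for each candidate focus point gives one heatmap pixel per point.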

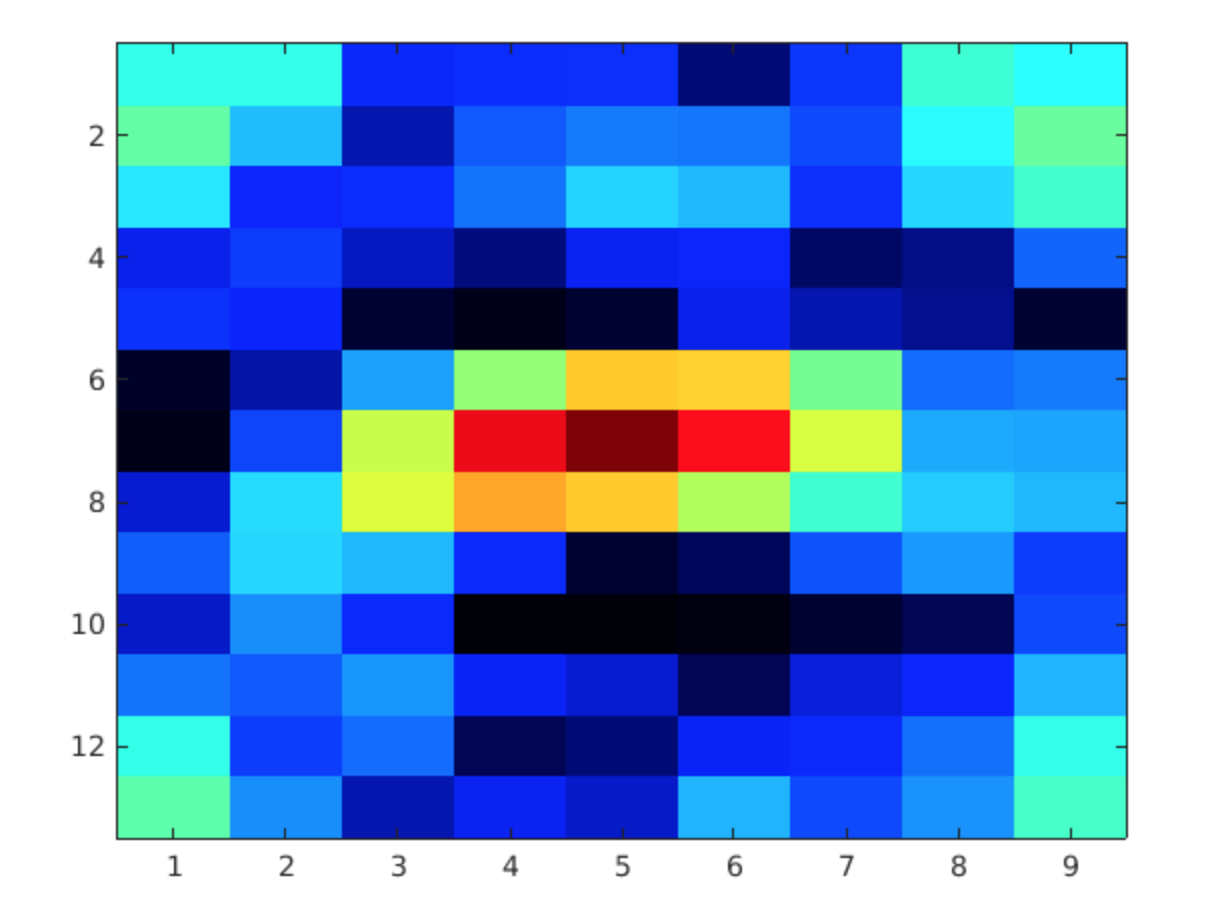

Visualization of the beamforming output was done simply with a heatmap. Sound source regions are shown in brighter colors. The resolution ended up being low, but an approximate location is still visible in the heatmap.

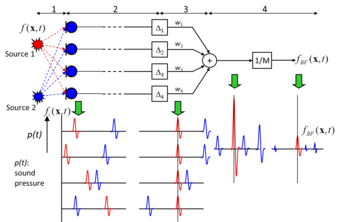

2k Gaussian (source from center)

Synthesized Leak (source slightly off center to the left)

Real Leak (source slightly off center to the left)

Next week, I will work on the final report with my teammates!

Ryan’s status report for Saturday, Apr. 25

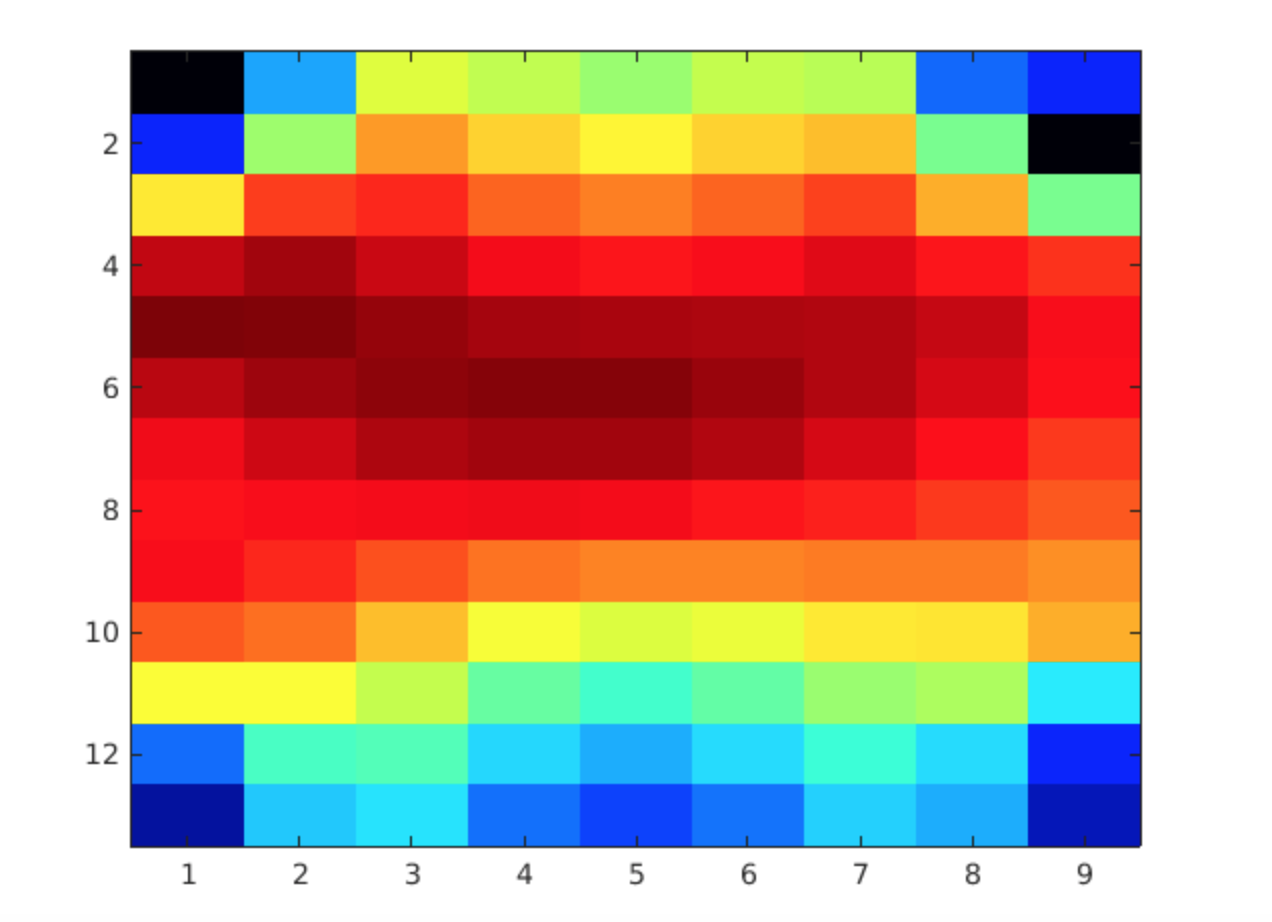

In MATLAB, Sarah and I wrote the algorithm for beamforming using the sum and delay method. A simplified block diagram is shown below:

When we have an array of microphones, because of the distance differences between each microphone and the source, we can “steer” the array and focus on a very specific spot. By sweeping the spot over the area of interest, we can identify where the sound is coming from.

The “delay” in sum and delay beamforming refers to delaying the output of each microphone depending on its distance from the sound source. The signals with appropriate delays applied are then summed up. If the sound source matches the focused beam, the signals constructively interfere and the resulting signal will have a large amplitude. On the other hand, if the sound source is not where the beam is focused, the signals will be out of phase with each other, and a relatively low amplitude signal will be created. Using this fact, the location of a sound source can be mapped without physically moving or rotating the microphone array. This technology is also used in 5G communications, where the cell tower uses beamforming to focus the signal in the direction of your phone for higher SNR and throughput.
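To make the sweep concrete, the sketch below steers a simple line array over a range of angles and keeps the angle with the most output power. It is a simplified far-field illustration in Python rather than our MATLAB code; the function name, the sign convention for the angle, and the example values in the comments are assumptions.

```python
import numpy as np

def steered_power(signals, mic_x, angle_rad, fs, c=343.0):
    """Far-field delay-and-sum power for a line array steered to angle_rad.

    signals: (n_mics, n_samples); mic_x: microphone positions along the array
    in metres; fs: sample rate in Hz. Assumed convention: for a positive
    angle the wavefront reaches the lower-x microphones first.
    """
    delays = mic_x * np.sin(angle_rad) / c
    shifts = np.round((delays - delays.min()) * fs).astype(int)
    n = signals.shape[1] - shifts.max()
    # Advance each channel by its delay, then average across microphones.
    summed = sum(sig[s:s + n] for sig, s in zip(signals, shifts))
    return float(np.mean((summed / len(signals)) ** 2))

def sweep(signals, mic_x, angles_rad, fs):
    """Return the steering angle with the highest output power."""
    powers = [steered_power(signals, mic_x, a, fs) for a in angles_rad]
    return angles_rad[int(np.argmax(powers))]
```

When the steering angle matches the source, every channel lines up and the averaged signal keeps its full amplitude; at other angles the channels partly cancel and the power drops, which is what produces the bright spot in the heatmap.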

Team status report for Saturday April 18

This week we made significant headway towards the finished project. The first three microphone boards are populated and tested, and most of the software for real-time visualization and beamforming has been written. At this point, we all have our heads down, finishing our portion of the project, so most of the progress this week has been detailed in individual reports. There were some hiccups as we’re expanding the number of operational microphones in the system, but that should be fixed next week.

This coming week, we’ll all continue working on our respective parts, planning to finish before the end of the week. John will mainly be working on finishing the hardware, and Ryan and Sarah, the software.

We are roughly on track with regard to our revised timeline: the hardware should be done within a few days, and all the elements of the software are in place and just need to be refined and debugged.

Jonathan’s status report for Saturday, Apr. 18

This week I mainly worked on improving the real-time visualizer, and building more of the hardware.

The real-time visualizer previously just did time-domain delay and sum, followed by a Fourier transform of the resulting data. This worked but is slow, particularly as more pixels are added to the output image. To improve the resolution and speed, I switched to taking the FFT of every channel immediately, then, only at the frequencies of interest, adding a phase delay to each one (which is computed ahead of time), then summing. This reduces the amount of information in the final image (to only the exact frequencies we’re interested in), but is extremely fast. Roughly, the work for delay-and-sum is 50K (readings/mic) * 96 (mics) per pixel, so ~50K*96*128 multiply-and-accumulate operations for a 128-pixel frame. With overhead, this is around a billion operations per frame, and at 20 frames per second, this is far too slow. The phase-delay processing needs only about 3 (bins/mic) * 8 (ops / complex multiply) * 96 (mics) * 128 (pixels), which is only about 300K operations, which any computer could easily run 20 times per second. This isn’t exact; it’s closer to a “big O” estimate of the work than an actual count of operations, and doesn’t account for cache effects, but it does give a basic idea of the speed-up this type of processing offers.
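The arithmetic behind those two estimates, written out as a quick check (the variable names are just labels for the numbers quoted above, and the per-channel FFT cost is left out of the phase-delay figure, as it is in the estimate):

```python
readings_per_mic = 50_000
mics = 96
pixels = 128

# Time-domain delay-and-sum: one multiply-accumulate per reading, per mic, per pixel.
delay_and_sum_ops = readings_per_mic * mics * pixels  # 614,400,000

# Phase-delay: a handful of frequency bins, one complex multiply each.
bins_per_mic = 3
ops_per_complex_multiply = 8
phase_delay_ops = bins_per_mic * ops_per_complex_multiply * mics * pixels  # 294,912

speedup = delay_and_sum_ops / phase_delay_ops  # roughly 2000x fewer operations
```

So before overhead the per-frame work drops from about 6×10^8 operations to about 3×10^5.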

I did also look into a few other things related to the real-time processing. One was that, since we know our source has a few strong components at definite frequencies, multiplying the angle-of-arrival information of all frequencies together gives a sharper and more stable peak in the direction of the source. This can also account, to some degree, for aliasing and other spatial problems: it’s almost impossible for all frequencies to have sidelobes in exactly the same spots, and as long as a single frequency has a very low value in that direction, the product of all the frequencies will also have a very low value there. With some basic 1D testing with a 4-element array, this worked relatively well. The other thing I experimented with was using a 3D FFT to process all of the data with a single (albeit complex) operation. To play with this, I used the MATLAB simulator that I used earlier to design the array. The results were pretty comparable to the images that came out of the delay-and-sum beamforming, but ran nearly 200 times faster.
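A minimal NumPy sketch of the 3D FFT idea is below (the experiment itself was done in the MATLAB simulator, so this is only an illustration). It assumes the samples are arranged as a cube of rows × columns × time for a regular rectangular array; the function name and the `temporal_bin` argument are hypothetical.

```python
import numpy as np

def fft3_image(cube, temporal_bin):
    """Image from a single 3D FFT over (row, column, time).

    cube: real samples with shape (rows, cols, n_samples) from a regular
    rectangular array. temporal_bin selects the frequency of interest.
    Returns an image with the same pixel count as the array, shifted so that
    zero spatial frequency (straight ahead) sits in the middle.
    """
    spectrum = np.fft.fftn(cube)
    plane = np.abs(spectrum[:, :, temporal_bin])
    return np.fft.fftshift(plane, axes=(0, 1))
```

Each output pixel corresponds to one spatial-frequency pair, which is why the image can never have more pixels than the array has microphones.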

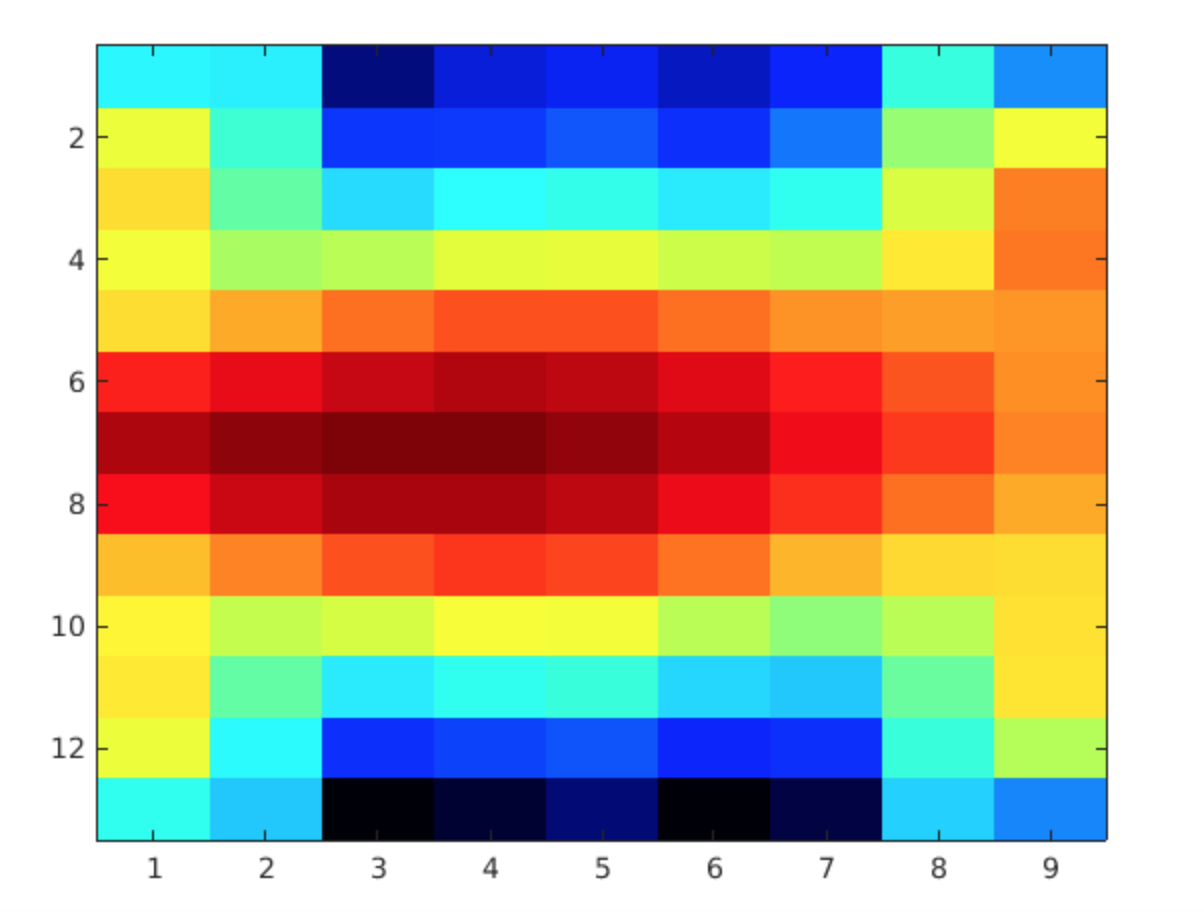

output from delay-and-sum.

output from 3D FFT

The two main disadvantages are that the 3D FFT has a fixed-resolution output, the same as the physical array (8×12). To increase the resolution slightly, I wrote a bit of code to do complex interpolation between pixels. This “recovers” some of the information held in the phase of the output array, which normally would not be used in an image (or at least, not in a comprehensible form), and makes new pixels using this. This is relatively computationally expensive though, and only slightly improves the resolution. Because of the relative complexity of implementing this, and the relatively small boost in performance compared with phase-delay, this will probably not be used in the final visualizer.

Finally, the hardware has made significant progress since last week: three of the six microphone boards have been assembled and tested in a limited capacity. No images have been created yet, though I’ve taken some logs for Sarah and Ryan to start running processing on some actual data. I did do some heuristic processing to make sure the output from every microphone “looks” right. The actual soldering of these boards ended up being a very significant challenge. After a few attempts to get the oven to work well, I decided to do all of them by hand with the hot air station. Of the 48 microphones soldered so far, 3 were completely destroyed (2 by getting solder/flux in the port, and 1 by overheating), and about 12 did not solder correctly on the first try and had to be reworked. I plan to stop here for a day or two, and get everything else working, before soldering the last 3 boards.

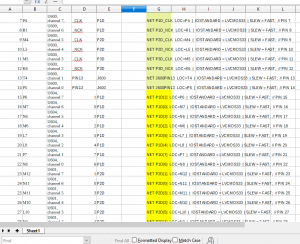

Finally, the FPGA firmware was modified slightly. Previously, timestamps for each microphone reading were included in the packets, to find “breaks” (dropped packets/readings) between readings in the packet handling, logfiles, or processing code. Since all of that is working reliably at this point, and the timestamps introduced significant (32Mbps) overhead, I’ve removed the individual timestamps and replaced them with packet indexing: each packet has a single number which identifies it. In this way missing packets can still be identified, but with very little overhead. The FPGA also now reads all 96 microphone channels simultaneously, where previously it only read a single board. Since this required many pins, and the exact pinout may change for wiring reasons, I made a spreadsheet to keep track of what was connected where, and used this spreadsheet to automatically generate the .ucf file for all the pins based on their assignments within the sheet.
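On the receiving side, spotting dropped packets from the index alone can be sketched like this. The function name and the 32-bit wrap-around are assumptions for illustration; the actual counter width in the firmware isn’t stated here.

```python
def find_missing_packets(indices, modulus=2**32):
    """Return (first_missing_index, count) for each gap in a stream of packet indices.

    indices: packet index values in arrival order.
    modulus: value at which the counter wraps (hypothetical 32-bit default).
    """
    gaps = []
    prev = None
    for idx in indices:
        if prev is not None:
            expected = (prev + 1) % modulus
            missing = (idx - expected) % modulus  # 0 when nothing was dropped
            if missing:
                gaps.append((expected, missing))
        prev = idx
    return gaps
```

Note that this simple version assumes packets arrive in order; a duplicated or reordered packet would show up as a very large gap rather than being handled specially.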

Ryan’s status report for Saturday, Apr. 18

This week, I was planning to analyze recordings from a 4×4 microphone array for sensitivity variances over frequency. Unfortunately we were having some trouble converting the captured PDM signals to a PCM wav file, and I did not have much time to look into this.

Next week, I’ll investigate this issue, and once we have the PCM wav recordings, I should be able to generate an EQ profile for each microphone for accurate level matching between them.

Sarah’s Status Report for Saturday, Apr. 18th

This week, as the network driver started to work, I received several logfiles from John. The logfiles were revised with headers that included information about the length of the data, logfile version, start time of the recording, and location of errors. The recording was from a 4×4 array, with the logfile including some real data and some chunks of non-real data. I worked on outputting wav files from the PDM data but faced an error in the hex-to-binary conversion. I believe the major problems may have been due to minor changes in the logfiles. As the length of the data is now given in the header, it may be helpful to use that number rather than hard-coding it as we did previously.
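For reference, the PDM-to-PCM step itself (once the bits have been unpacked from the logfile) can be sketched as below. This is a deliberately crude version in Python, assuming a simple block average as the low-pass filter and a hypothetical decimation factor of 64; it says nothing about the logfile format or the hex conversion, and a real decoder would normally use a better filter.

```python
import wave
import numpy as np

def pdm_to_pcm(bits, decimation=64):
    """Convert a 1-D array of 0/1 PDM samples to float PCM in [-1, 1].

    Each output sample is the average of `decimation` input bits, which acts
    as a basic low-pass filter and downsampler in one step.
    """
    x = 2.0 * np.asarray(bits, dtype=float) - 1.0   # map 0/1 to -1/+1
    usable = (len(x) // decimation) * decimation    # drop the ragged tail
    return x[:usable].reshape(-1, decimation).mean(axis=1)

def write_wav(target, pcm, sample_rate_hz):
    """Write mono 16-bit PCM; target is a file path or a binary file object."""
    samples = (np.clip(pcm, -1.0, 1.0) * 32767).astype("<i2")
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(int(sample_rate_hz))
        wav.writeframes(samples.tobytes())
```

The PCM sample rate passed to `write_wav` is the PDM bit rate divided by the decimation factor.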

Next week, I will make sure the error gets fixed and produce the output. Hopefully, the output matches the results that John received through real-time processing.

Team status report for Saturday April 11

This week started with the midpoint demo, which worked relatively well, showing mainly the real-time visualization shown in one of the previous updates. During the remainder of the week significant progress was made in several areas, particularly the network driver, which was previously impeding progress on the processing software.

Some progress on the hardware was also made (see John’s update), with the first board getting several microphones populated, tested, and hooked up to the FPGA. With this, we have finally moved from proof-of-concept tools, and started using the final hardware-firmware-software stack. Though these parts will of course be modified, at this point, there is no longer anything entirely new to be added.