This week I focused on some of the data pre-processing methods we will use for our algorithm. Note that the material in the first two paragraphs of this report may not apply to our current method, which involves projecting a laser strip. I first worked out the geometry for converting the data returned by a depth camera into Cartesian coordinates, which required some basic trigonometry. The depth camera should return the z-distance for each pixel, but I also accounted for the case where the Intel SR305 camera instead returns the distance from the center of the camera to the point seen by that pixel. We will be able to tell which one it is once we get the camera and test it on a flat surface, such as one face of a cube. The math computed is as follows:
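A minimal sketch of this conversion, assuming a pinhole camera model. The intrinsics `fx`, `fy` (focal lengths in pixels) and `cx`, `cy` (principal point) are hypothetical parameters that would come from the camera's calibration, and the `radial` flag is an illustrative name for the case where the camera reports distance from its center rather than z-distance:

```python
import math

def pixel_to_cartesian(u, v, depth, fx, fy, cx, cy, radial=False):
    """Convert one depth pixel (u, v) to camera-frame (X, Y, Z).

    Assumes a pinhole model; fx, fy, cx, cy are hypothetical intrinsics.
    radial=False: depth is the z-distance to the point.
    radial=True:  depth is the straight-line distance from the camera center.
    """
    # Ray through the pixel, scaled so that its z-component equals 1.
    rx = (u - cx) / fx
    ry = (v - cy) / fy
    if radial:
        # Project the straight-line distance onto the optical axis.
        z = depth / math.sqrt(rx * rx + ry * ry + 1.0)
    else:
        z = depth
    return (rx * z, ry * z, z)
```

On a flat surface facing the camera, z-distance readings should be the same at every pixel, whereas center-to-point distances should grow toward the image edges. That gives a simple way to decide which branch applies.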

As mentioned in the previous status update, we were considering using an ICP (Iterative Closest Point) algorithm to combine different scans for the depth camera method and to accurately determine the angle between scans. The ICP algorithm determines the transformation between two point clouds taken from different angles of the object by repeatedly matching points that appear in both scans and solving a least-squares problem over those matches. These point clouds would be constructed by mapping each scanned pixel and its depth to the corresponding 3D Cartesian coordinates, using the math above. Like gradient descent, ICP works best from a good initial estimate, which helps it avoid getting stuck in local minima and also saves computation time. One potential issue with ICP is that shapes like spheres or upright cylinders produce point clouds that look the same from any angle, so the alignment is ambiguous. A good way to reduce this risk is to initialize the ICP algorithm with the rotational data from the stepper motor, an area that Chakara researched this week. Essentially, we will take the rotational data from the stepper motor, use ICP to determine the rotational difference between the two scans more precisely, then find duplicate points and combine the two point clouds.
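To illustrate the idea of seeding ICP with the stepper motor angle, here is a deliberately simplified sketch. It assumes the only unknown is a rotation about a known turntable axis through the origin, so it works in the 2D plane perpendicular to that axis. A full ICP would estimate a 3D rotation and translation, and all function names here are hypothetical:

```python
import math

def rotate(points, theta):
    """Rotate 2D points about the origin by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y, s * x + c * y) for x, y in points]

def nearest(p, cloud):
    """Brute-force nearest neighbor of p in cloud."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)

def icp_rotation(source, target, theta_init, iterations=20):
    """Estimate the angle that rotates `source` onto `target`.

    theta_init is the starting guess, e.g. the stepper motor's reported angle.
    """
    theta = theta_init
    for _ in range(iterations):
        moved = rotate(source, theta)
        # Correspondence step: pair each moved point with its closest target point.
        matches = [nearest(p, target) for p in moved]
        # Alignment step: closed-form least-squares rotation for the matched pairs.
        cross = sum(px * qy - py * qx for (px, py), (qx, qy) in zip(moved, matches))
        dot = sum(px * qx + py * qy for (px, py), (qx, qy) in zip(moved, matches))
        delta = math.atan2(cross, dot)
        theta += delta
        if abs(delta) < 1e-9:
            break
    return theta
```

With a starting angle close to the true one, the nearest-neighbor matches are mostly correct from the first iteration, which is why a good initial estimate matters. For a rotationally symmetric shape many angles give equally good matches, so the result stays near the initial guess.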

I also looked into constructing a mesh from a point cloud. The point cloud would likely be stored in the PCD (Point Cloud Data) file format. PCD files offer more flexibility and speed than other formats like STL/OBJ/PLY, and we can use PCL (the Point Cloud Library) in C++ to process this format as fast as possible. PCL provides many useful functions, such as estimating normals and performing triangulation (constructing a triangle mesh from XYZ points and normals). Since our data will just be a list of XYZ coordinates, we can easily convert it to the PCD format for use with PCL. The triangulation algorithm works by maintaining a fringe list of points from which the mesh can be grown, and gradually extending the mesh until it covers all the points. There are many tunable parameters, such as the size of the neighborhood searched for points to connect, the maximum edge length of the triangles, and the maximum allowed difference between normals for a point to be connected, which helps deal with sharp edges and outlier points. The flow of the triangulation algorithm is: estimate the normals, combine them with the XYZ point data, initialize the search tree and other objects, then use PCL's reconstruction method to obtain the triangle mesh. The algorithm outputs a PolygonMesh object, which PCL can save as an OBJ file. OBJ is a common format for 3D printing (and tends to perform better than the STL format). There will probably be many optimization opportunities and bugs/issues to fix with this design, as it is just a basic design based on what is available in PCL.
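As a small illustration of the XYZ-to-PCD conversion step, the sketch below builds an ASCII PCD file from a list of `(x, y, z)` tuples. The function name is hypothetical, and it assumes an unorganized cloud (`HEIGHT 1`) with 4-byte float fields and the default viewpoint:

```python
def to_pcd_ascii(points):
    """Return the text of an ASCII PCD v0.7 file for a list of (x, y, z) tuples."""
    n = len(points)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",              # each field is stored as 4 bytes
        "TYPE F F F",              # each field is a float
        "COUNT 1 1 1",             # one value per field
        f"WIDTH {n}",
        "HEIGHT 1",                # unorganized point cloud
        "VIEWPOINT 0 0 0 1 0 0 0", # default sensor pose
        f"POINTS {n}",
        "DATA ascii",
    ]
    body = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + body) + "\n"
```

Writing the returned string to a `.pcd` file should produce something that PCL can read with `pcl::io::loadPCDFile`. A binary PCD would be faster to load for large scans, but the ASCII form is easier to inspect while debugging.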

I also looked a bit into noise reduction and outlier removal for filtering the raw point cloud data. There are many papers that discuss approaches, and some even use neural nets to estimate the probability that a point is an outlier and remove it on that basis. This will require further research and trying out different methods, as I don't completely understand what the different papers are describing just yet. There are also libraries with their own noise reduction functions, PCL among a few others, but it is probably better to do more research and write our own noise reduction/outlier removal algorithm for better efficiency, unless PCL's function is already optimized for the PCD data format.
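As a baseline to compare the papers against, here is a minimal sketch of one common, non-learned approach: statistical outlier removal, similar in spirit to PCL's `StatisticalOutlierRemoval` filter. It is not the method we have settled on. The function name and the defaults for `k` and `std_ratio` are assumptions, and the brute-force neighbor search is only suitable for small clouds:

```python
import math
import statistics

def remove_outliers(points, k=8, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbors is unusually large.

    A point is kept if its score is at most mean + std_ratio * stddev,
    where both statistics are taken over the scores of all points.
    """
    if len(points) < 2:
        return list(points)
    k = min(k, len(points) - 1)

    def mean_knn_distance(i):
        # Brute force: distances from point i to every other point, sorted ascending.
        dists = sorted(math.dist(points[i], q) for j, q in enumerate(points) if j != i)
        return sum(dists[:k]) / k

    scores = [mean_knn_distance(i) for i in range(len(points))]
    threshold = statistics.mean(scores) + std_ratio * statistics.pstdev(scores)
    return [p for p, s in zip(points, scores) if s <= threshold]
```

A lower `std_ratio` removes points more aggressively. For real scans, a spatial index such as a k-d tree would replace the quadratic neighbor search.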

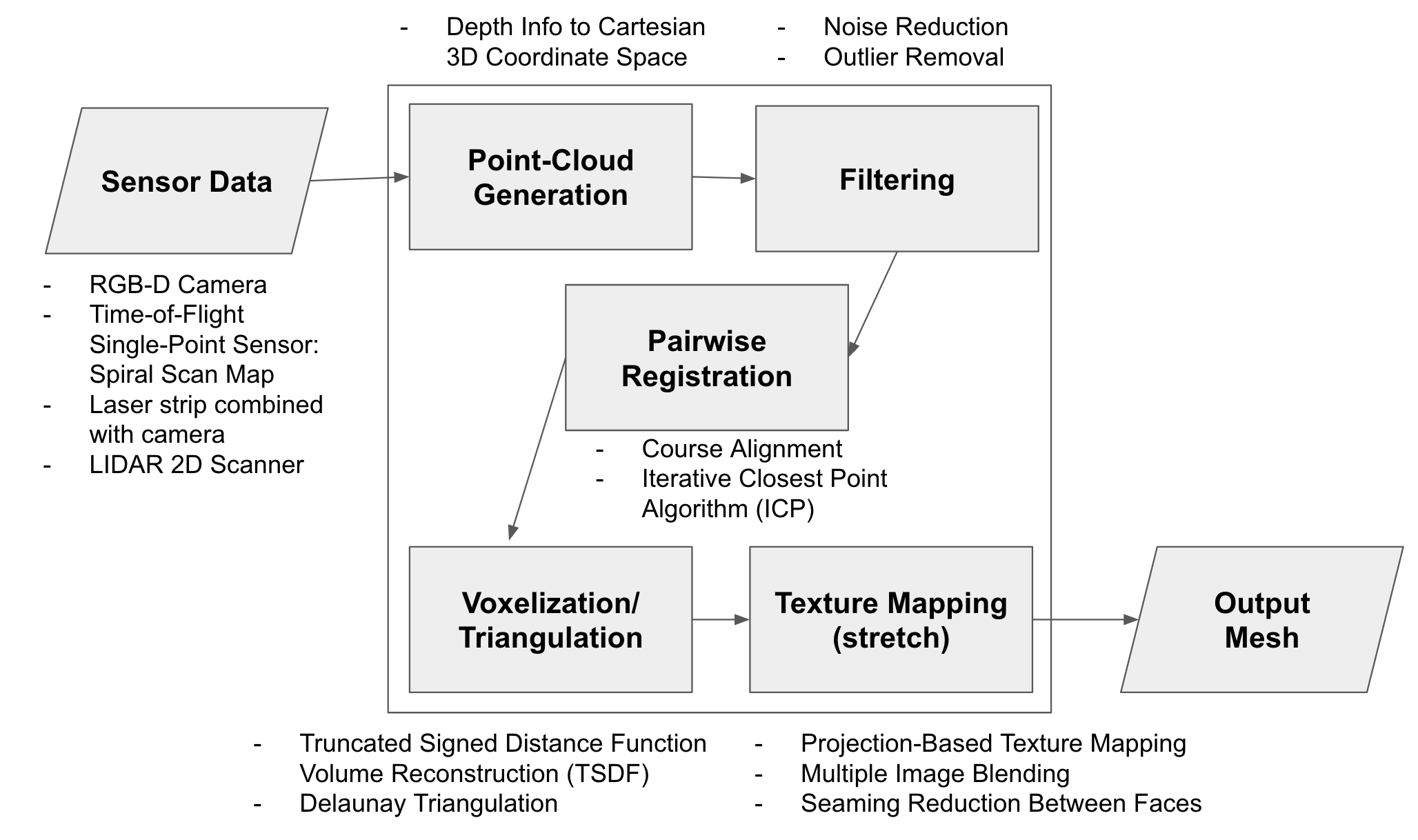

We discussed using a projected laser strip and a CCD camera to detect how this laser strip warps around the object to determine depth and generate a point cloud, so our updated pipeline is shown below.

By next week, I aim to fully understand the laser strip projection approach and to dive much deeper into noise reduction and outlier removal methods. I may also experiment with triangulation from point clouds and try out PCL. My main focus will be on noise reduction/outlier removal, since that is independent of our scanning sensors: it simply takes in a point cloud generated from sensor data and applies computations and transformations to it.