This week I have been implementing the point cloud generation algorithm for the simulated 3D scan. This has involved snooping around the Blender file and writing simple scripts to extract the data needed to transform between the different coordinate spaces of our algorithm. For example, although it is not displayed directly in the UI, Blender keeps track of the transformation from each object's local space to the space of its parent. We read this data with a short script like the following:

![]()

With it, we extracted a transformation matrix (a 4×4 homogeneous matrix acting on 3D points, encoding translation, rotation, and scale) that converts points in the laser line's coordinate space to world space. Using this matrix, we can obtain an equation for the laser plane simply by applying the transformation to a very simple vector, (1,0,0,1). Similar scripts were written to extract the camera's transform data, which was used to implement the mapping from image pixels to world space.
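One way to turn such a matrix into a usable plane is sketched below in plain Python, so it runs outside Blender. It assumes, hypothetically, that in the laser's local space the plane passes through the origin with its normal along +x; the function names and the list-of-rows matrix layout are illustrative, not the project's code. Inside Blender, the matrix could be taken from the laser object's `matrix_world`.

```python
# Hypothetical helpers (not the project's code). A 4x4 matrix is a list of four
# rows, e.g. [list(row) for row in laser_obj.matrix_world] inside Blender.


def transform_point(m, p):
    """Apply a 4x4 homogeneous matrix to a 3D point (implicit w = 1)."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def transform_normal(m, n):
    """Map a normal through the 3x3 block of m and normalize it.

    Normals transform by the inverse-transpose. With a, b, c the columns of the
    3x3 block, the inverse-transpose is [b x c, c x a, a x b] / det, so the
    cofactor columns give the right direction without an explicit inverse.
    (If det < 0, i.e. the matrix mirrors, the normal's sign flips.)
    """
    a, b, c = ((m[0][j], m[1][j], m[2][j]) for j in range(3))
    cols = (cross(b, c), cross(c, a), cross(a, b))
    v = tuple(sum(cols[j][i] * n[j] for j in range(3)) for i in range(3))
    length = sum(t * t for t in v) ** 0.5
    return tuple(t / length for t in v)


def laser_plane_world(m, local_point=(0.0, 0.0, 0.0), local_normal=(1.0, 0.0, 0.0)):
    """Return (point, unit normal) of the laser plane in world space.

    ASSUMPTION: in the laser's local space the plane contains local_point and
    has normal local_normal; the defaults are placeholders to adjust.
    """
    return transform_point(m, local_point), transform_normal(m, local_normal)


# Example: laser rotated 90 degrees about Z and raised 2 units.
m = [[0, -1, 0, 0],
     [1, 0, 0, 0],
     [0, 0, 1, 2],
     [0, 0, 0, 1]]
point, normal = laser_plane_world(m)
print(point, normal)  # point (0, 0, 2), normal (0, 1, 0)
```

Points go through the full matrix, while the normal uses the inverse-transpose of the 3×3 block: with non-uniform scale or shear, transforming the normal like an ordinary direction would tilt the plane.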

The two major components written so far are:

- The detection of laser pixels in an image

- The transformation of screen pixels to corresponding points in world space

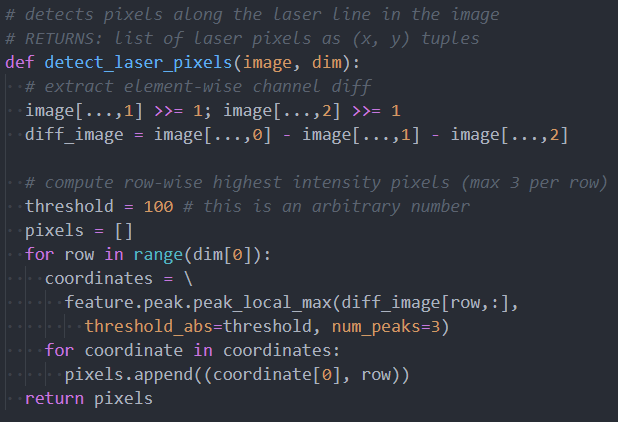

A rough version of laser pixel detection was implemented last week; this week I optimized it so that it runs in a fraction of its original time. I also capped the number of laser pixels detected per row at 3, so that the point cloud does not accumulate excess points. The current code is below:

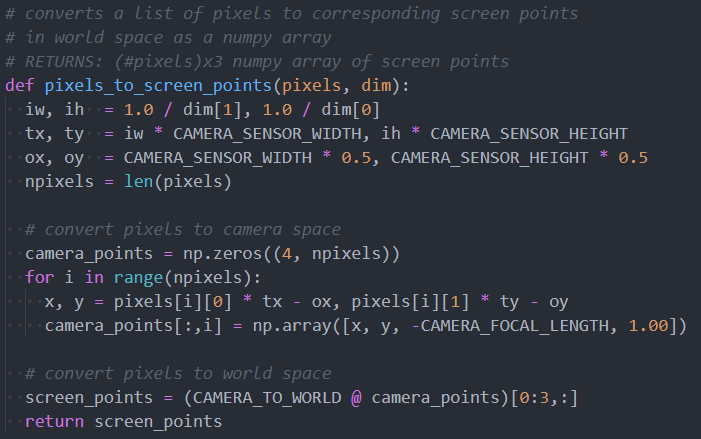
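The detection step can be sketched as follows. This is a hypothetical reconstruction, not the code in the screenshot: it assumes a red laser, scores each pixel by how far its red channel exceeds the mean of green and blue, and keeps at most three pixels per row above a made-up threshold of 100. It uses plain lists for clarity; a vectorized version (for example with NumPy) would be much faster on full-size frames.

```python
import heapq


def detect_laser_pixels(image, threshold=100, max_per_row=3):
    """Return (row, col) coordinates of likely laser pixels.

    image: list of rows, each a list of (r, g, b) tuples with values 0-255.
    ASSUMPTION: the laser is red, so a pixel's "laser score" is how much its
    red channel exceeds the mean of its green and blue channels.
    """
    hits = []
    for row_idx, row in enumerate(image):
        # Score every pixel in the row and keep those above the threshold.
        candidates = [
            (r - (g + b) / 2, col)
            for col, (r, g, b) in enumerate(row)
            if r - (g + b) / 2 > threshold
        ]
        # Keep only the strongest responses to limit excess points per row,
        # then report them left to right.
        best = heapq.nlargest(max_per_row, candidates)
        hits.extend((row_idx, col) for _, col in sorted(best, key=lambda t: t[1]))
    return hits


frame = [
    [(0, 0, 0), (250, 10, 10), (255, 255, 255)],             # one red pixel
    [(255, 0, 0), (255, 0, 0), (255, 0, 0), (255, 0, 0)],     # four red pixels
]
print(detect_laser_pixels(frame))
```

White pixels score zero because all three channels are equal, which is why the score compares red against the other channels rather than thresholding brightness alone.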

The transformation from pixels to world-space points is then implemented in two steps:

- Convert pixels to camera space

- Transform camera space positions to world space

Step 1 uses the perspective (pinhole) camera model, with the focal length and sensor dimensions taken from the Blender file used during the simulation. Step 2 applies the camera's camera-to-world transform (the inverse of its world-to-camera view transform) to the points on the sensor.
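The two steps can be sketched as below, assuming a Blender-style camera (looking down its local -Z axis with +Y up), horizontal sensor fit, square pixels, and no lens shift. The camera-to-world matrix is what Blender stores in the camera object's `matrix_world`; the function names are hypothetical. Dividing by the focal length places the pixel on the plane z = -1, which keeps millimetre sensor units from mixing with scene units.

```python
def pixel_to_camera(u, v, width, height, focal_mm, sensor_width_mm):
    """Step 1: map pixel (u, v) to camera space on the plane z = -1.

    ASSUMPTIONS: Blender-style camera looking down -Z with +Y up, horizontal
    sensor fit, square pixels, no lens shift. u is the column and v the row,
    counted from the top-left corner; the pixel centre is used.
    """
    mm_per_px = sensor_width_mm / width
    x_mm = (u + 0.5 - width / 2) * mm_per_px
    y_mm = (height / 2 - (v + 0.5)) * mm_per_px  # rows grow downward, +Y is up
    # Dividing by the focal length removes the millimetre units, so the point
    # can be combined with a camera matrix expressed in scene units.
    return (x_mm / focal_mm, y_mm / focal_mm, -1.0)


def transform_point(m, p):
    """Apply a 4x4 homogeneous matrix (list of rows) to a 3D point."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))


def pixel_ray(u, v, cam_to_world, width, height, focal_mm, sensor_width_mm):
    """Step 2: return (origin, direction) of the world-space ray through (u, v).

    cam_to_world is the camera's camera-to-world matrix, i.e. the inverse of
    its view (world-to-camera) matrix; in Blender, the camera's matrix_world.
    """
    origin = tuple(cam_to_world[i][3] for i in range(3))  # camera centre
    on_plane = pixel_to_camera(u, v, width, height, focal_mm, sensor_width_mm)
    target = transform_point(cam_to_world, on_plane)
    direction = tuple(t - o for t, o in zip(target, origin))
    return origin, direction


# Camera translated to (1, 2, 3) with no rotation; 3x3 image, 50 mm lens, 36 mm sensor.
cam = [[1, 0, 0, 1], [0, 1, 0, 2], [0, 0, 1, 3], [0, 0, 0, 1]]
print(pixel_ray(2, 1, cam, 3, 3, 50.0, 36.0))
```

Returning a ray rather than a single point leaves the depth undetermined on purpose: it is fixed later by intersecting the ray with the laser plane.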

The next steps are ray-plane intersection, to find where those pixels project onto the object in world space, and finally a reverse rotation to express the same points in object space. After this, the pipeline for a single scan will be complete. I plan to finish this by our team meeting on Monday, and hopefully we will also be able to complete a full example scan by then.
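The remaining steps could look something like the sketch below. The ray-plane intersection solves n · (o + t d - p0) = 0 for t. The reverse rotation assumes, hypothetically, a turntable that rotates the object by a known angle about the world Z axis through the origin for each capture; the function names are illustrative.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def intersect_ray_plane(origin, direction, plane_point, plane_normal, eps=1e-9):
    """Return the point where the ray hits the plane, or None if it misses.

    Solves n . (o + t d - p0) = 0 for t, giving t = n . (p0 - o) / (n . d).
    """
    denom = dot(plane_normal, direction)
    if abs(denom) < eps:  # ray (nearly) parallel to the plane
        return None
    diff = tuple(p - o for p, o in zip(plane_point, origin))
    t = dot(plane_normal, diff) / denom
    if t < 0:  # intersection lies behind the ray origin
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))


def undo_turntable(point, angle_rad):
    """Rotate a world-space point by -angle_rad about the world Z axis.

    HYPOTHETICAL setup: the object sits on a turntable centred at the world
    origin and was rotated by angle_rad (counter-clockwise seen from +Z) for
    this capture, so undoing that rotation gives object-space coordinates.
    """
    x, y, z = point
    c, s = math.cos(-angle_rad), math.sin(-angle_rad)
    return (c * x - s * y, s * x + c * y, z)


hit = intersect_ray_plane((0, 0, 5), (0, 0, -1), (0, 0, 0), (0, 0, 1))
print(hit, undo_turntable((1, 0, 0), math.pi / 2))
```

Chained together, each detected pixel gives one ray, the ray meets the laser plane at a world-space point, and undoing that frame's turntable angle places the point in the object's frame, ready to append to the point cloud.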

Right now, I believe the work is ahead of schedule, and a working demo should be available early next week. Because of the extra slack this gives us, we may add further features to the demo, such as a web application GUI.