This week, I focused on two aspects of the project. First, I finished the storytelling portion of the design report. I had already ironed out the details and researched the metrics and validation before the design presentation, but I edited the report in accordance with the feedback we received (i.e., being clearer about where design choices came from and detailing how we plan to keep our validation objective).

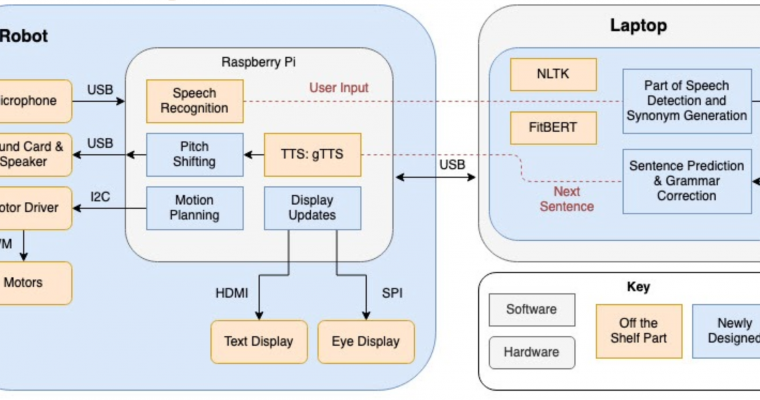

In addition, I created a program to generate synonyms or antonyms of a word given its part of speech. NLTK already has a tool that does this, but the tool does not always output the words you are looking for. For example, when looking for the antonyms of ‘sad’, the tool does not output ‘happy’. Since synonym generation is crucial to the performance of the story-generating module, I created an algorithm that does a breadth-first search (BFS) for any possible synonyms and antonyms. Since the output of this algorithm will be fed into FitBERT, which ranks the words by best fit, it is better to generate a longer list than to stop after just one possible word is found. I am not yet sure how many candidate words to generate, and I will experiment with the list size once I start working with FitBERT.
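As a rough illustration of the breadth-first idea, here is a minimal sketch. It is not the actual program: the `TOY_SYNONYMS` dictionary is made-up data standing in for the real NLTK lookup, and the names `bfs_related_words`, `toy_neighbors`, `max_depth`, and `max_words` are hypothetical. Unlike the outputs shown in this post, the sketch leaves the input word out of its results.

```python
from collections import deque

# Made-up toy data standing in for the real thesaurus lookup (assumption).
TOY_SYNONYMS = {
    "happy": ["glad", "cheerful"],
    "glad": ["happy", "pleased"],
    "pleased": ["glad", "content"],
    "content": ["pleased"],
    "cheerful": ["happy"],
}


def toy_neighbors(word):
    """Return the direct synonyms of `word` in the toy data."""
    return TOY_SYNONYMS.get(word, [])


def bfs_related_words(start, neighbors, max_depth=2, max_words=None):
    """Collect words reachable from `start` within `max_depth` lookups.

    `neighbors` is any function mapping a word to its directly related
    words. Results are returned in the order they are discovered, so
    closer words come first.
    """
    found = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        word, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand words at the depth limit
        for candidate in neighbors(word):
            if candidate in seen:
                continue
            seen.add(candidate)
            found.append(candidate)
            if max_words is not None and len(found) >= max_words:
                return found
            queue.append((candidate, depth + 1))
    return found
```

Calling `bfs_related_words("happy", toy_neighbors, max_depth=3)` walks outward one level at a time, and `max_words` caps the list size, which is the knob I would tune once FitBERT is in the loop.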

Even with a BFS algorithm, the tool has a hard time linking some obviously related words. For example, “enormous” and “big” are not linked as synonyms, even at a distance of 2 or 3. As I work on the FitBERT portion, I think I might need a different synonym generation tool to supplement this program. The best option might be to consult thesaurus.com directly, but that slows down the program and requires an internet connection. I might create multiple versions of this program and test them for speed and accuracy on the actual stories we will be using, just to ensure we are still meeting our requirements.

Here are some examples of the inputs and outputs this program generates:

input: angry, synonyms, adjective

output: {‘wild’, ‘furious’, ‘raging’, ‘tempestuous’, ‘angry’}

input: sad, antonyms, adjective

output: {‘glad’}

input: quickly, synonyms, adverb

output: {‘promptly’, ‘chop-chop’, ‘rapidly’, ‘quickly’, ‘speedily’, ‘apace’, ‘quick’, ‘cursorily’}
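For the speed and accuracy comparison mentioned earlier, something like the following harness could work. This is only a sketch under assumptions: the two generators and the expected-word sets are made-up placeholders, `evaluate` is a hypothetical helper, and "accuracy" is approximated here as the fraction of expected words that each version produces.

```python
import time


def evaluate(generator, cases):
    """Return (mean_recall, seconds_elapsed) for one generator.

    `cases` is a list of (word, expected_words) pairs, where
    `expected_words` is a non-empty set of words we hope to see.
    """
    start = time.perf_counter()
    recalls = []
    for word, expected in cases:
        produced = set(generator(word))
        recalls.append(len(produced & expected) / len(expected))
    elapsed = time.perf_counter() - start
    return sum(recalls) / len(recalls), elapsed


# Placeholder versions of the generator (made-up data, not real output).
def narrow_version(word):
    return {"big": ["large"]}.get(word, [])


def wide_version(word):
    return {"big": ["large", "huge", "enormous"]}.get(word, [])


CASES = [("big", {"large", "enormous"})]

narrow_recall, narrow_seconds = evaluate(narrow_version, CASES)
wide_recall, wide_seconds = evaluate(wide_version, CASES)
```

In practice the cases would come from the words in our actual story templates, and the timing would only be meaningful over many more lookups than this.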

Next week, I plan to start writing the algorithm that fills in the blank based on previous user input. I am a little ahead of schedule since I’ve already finished the groundwork for the other components, and the extra slack time will allow me to go back and fine-tune them. To test the fill-in-the-blank algorithm, I also need many more templates, so I will spend the first half of the week creating at least 5 more.