This week, I began adding various ML models to the benchmarking suite. The total # and variation of models is yet to be fully discussed, but some basic models have already been implemented. This took longer than expected since due to my unfamiliarity with ML and the PyTorch library, I ran into multiple issues with passing outputs from one function to inputs of another.

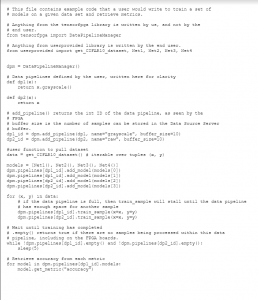

Additionally, TJ and I wrote some example code for what a user would write in order to train a set of models on a certain data set and to be able to read specific metrics. The sample code is attached below:

As specified in the code, the DataPipelineManager class and all associated functions will be implemented by us. The user is responsible for providing the specific data set, as well as which models will be used for training.

Overall, I would say progress is on schedule. This week I plan on adding in a wider range of models, making sure that this range encompasses and covers most if not all of our scope. Additionally, once a certain amount of models are setup, I plan on training the given models on both the CPU on the data source machine, as well as a GPU (NVIDIA 1080).