This week, I was able to provide preliminary numbers on the training result of our model test set on a GPU, as well as a different, higher end CPU as well. The full statistics can be seen at the bottom of the status report. The main takeaway is that although the GPU and CPU have almost the same average time, wider models trained much faster on the GPU than the CPU. Likewise, deeper models trained faster on the CPU. Additionally, I also realized that I was not fully utilizing the GPU when training each model sequentially. A possibility that I would like to explore in the future would be to possibly train multiple models simultaneously on a GPU to maybe get a better average throughput.

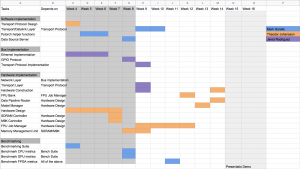

I also worked with TJ and Jared to write up the Statement of Work as well as figuring out what our plan for the project was in the future. During this stage, I more concretely defined what I would be doing in order to validate the Software portion of our project. These methods are more clearly defined in the Team Status Report.

I would say I am on schedule this week.

This coming week, I plan to start working on setting up a form of validation for the software portion of the Project.

Hardware: NVIDIA GeForce RTX 2060 SUPER

Trained models with an average throughput of 24.454581

Trained models at an average time of 40.866 seconds

Model 1 stats: Time taken: 28.239 seconds, Loss value: 966.509

Model 2 stats: Time taken: 24.933 seconds, Loss value: 732.629

Model 3 stats: Time taken: 25.145 seconds, Loss value: 804.955

Model 4 stats: Time taken: 30.565 seconds, Loss value: 727.075

Model 5 stats: Time taken: 38.075 seconds, Loss value: 749.385

Model 6 stats: Time taken: 48.607 seconds, Loss value: 852.541

Model 7 stats: Time taken: 50.306 seconds, Loss value: 852.214

Model 8 stats: Time taken: 55.584 seconds, Loss value: 902.778

Model 9 stats: Time taken: 60.663 seconds, Loss value: 1151.682

Model 10 stats: Time taken: 65.919 seconds, Loss value: 1150.963

Model 11 stats: Time taken: 24.625 seconds, Loss value: 674.633

Model 12 stats: Time taken: 24.847 seconds, Loss value: 617.159

Model 13 stats: Time taken: 25.726 seconds, Loss value: 606.477

Model 14 stats: Time taken: 32.640 seconds, Loss value: 566.784

Model 15 stats: Time taken: 37.984 seconds, Loss value: 590.729

Model 16 stats: Time taken: 29.991 seconds, Loss value: 1055.854

Model 17 stats: Time taken: 30.329 seconds, Loss value: 965.328

Model 18 stats: Time taken: 30.178 seconds, Loss value: 992.295

Model 19 stats: Time taken: 35.499 seconds, Loss value: 956.919

Model 20 stats: Time taken: 43.565 seconds, Loss value: 1139.789

Model 21 stats: Time taken: 54.319 seconds, Loss value: 1137.847

Model 22 stats: Time taken: 55.855 seconds, Loss value: 1151.514

Model 23 stats: Time taken: 61.021 seconds, Loss value: 1151.118

Model 24 stats: Time taken: 66.462 seconds, Loss value: 1151.826

Model 25 stats: Time taken: 71.953 seconds, Loss value: 1151.610

Model 26 stats: Time taken: 30.304 seconds, Loss value: 936.803

Model 27 stats: Time taken: 30.150 seconds, Loss value: 906.943

Model 28 stats: Time taken: 30.375 seconds, Loss value: 904.646

Model 29 stats: Time taken: 38.212 seconds, Loss value: 895.794

Model 30 stats: Time taken: 43.902 seconds, Loss value: 949.094

Hardware: Intel(R) Core(TM) i7-9700F CPU @ 3.00 GHz 3.00 GHz

Trained models with an average throughput of 24.921083

Trained models at an average time of 40.100 seconds

Model 1 stats: Time taken: 12.247 seconds, Loss value: 994.131

Model 2 stats: Time taken: 14.784 seconds, Loss value: 759.224

Model 3 stats: Time taken: 14.622 seconds, Loss value: 811.628

Model 4 stats: Time taken: 20.136 seconds, Loss value: 715.087

Model 5 stats: Time taken: 22.665 seconds, Loss value: 768.794

Model 6 stats: Time taken: 24.846 seconds, Loss value: 838.152

Model 7 stats: Time taken: 33.105 seconds, Loss value: 889.310

Model 8 stats: Time taken: 36.692 seconds, Loss value: 973.237

Model 9 stats: Time taken: 39.287 seconds, Loss value: 965.914

Model 10 stats: Time taken: 39.266 seconds, Loss value: 1150.552

Model 11 stats: Time taken: 19.270 seconds, Loss value: 697.697

Model 12 stats: Time taken: 24.998 seconds, Loss value: 623.859

Model 13 stats: Time taken: 27.572 seconds, Loss value: 618.720

Model 14 stats: Time taken: 151.185 seconds, Loss value: 606.125

Model 15 stats: Time taken: 83.843 seconds, Loss value: 576.875

Model 16 stats: Time taken: 18.548 seconds, Loss value: 1068.568

Model 17 stats: Time taken: 22.174 seconds, Loss value: 963.580

Model 18 stats: Time taken: 20.550 seconds, Loss value: 971.986

Model 19 stats: Time taken: 26.596 seconds, Loss value: 1005.134

Model 20 stats: Time taken: 28.523 seconds, Loss value: 1049.507

Model 21 stats: Time taken: 31.286 seconds, Loss value: 1139.938

Model 22 stats: Time taken: 36.644 seconds, Loss value: 1152.150

Model 23 stats: Time taken: 37.675 seconds, Loss value: 1150.964

Model 24 stats: Time taken: 38.490 seconds, Loss value: 1152.036

Model 25 stats: Time taken: 40.988 seconds, Loss value: 1151.915

Model 26 stats: Time taken: 26.474 seconds, Loss value: 923.730

Model 27 stats: Time taken: 32.037 seconds, Loss value: 904.503

Model 28 stats: Time taken: 33.611 seconds, Loss value: 905.850

Model 29 stats: Time taken: 156.003 seconds, Loss value: 902.330

Model 30 stats: Time taken: 88.890 seconds, Loss value: 954.624